Imagine if we had to explain all of the actions that take place on Earth to aliens. We could provide them with non-fiction books or BBC documentaries. We could try to explain verbally what twerking is. But, really, nothing conveys an action better than a three-second video clip.

Falling Asleep via GIPHY

Thanks to researchers at MIT and IBM, we now have a clearly labeled dataset of more than one million such clips. The dataset, called Moments in Time, captures hundreds of common actions that occur on Earth, from the beautiful moment of a flower opening to the embarrassing instant of a person tripping and eating dirt.

Tripping via GIPHY

(We’ve all been there.)

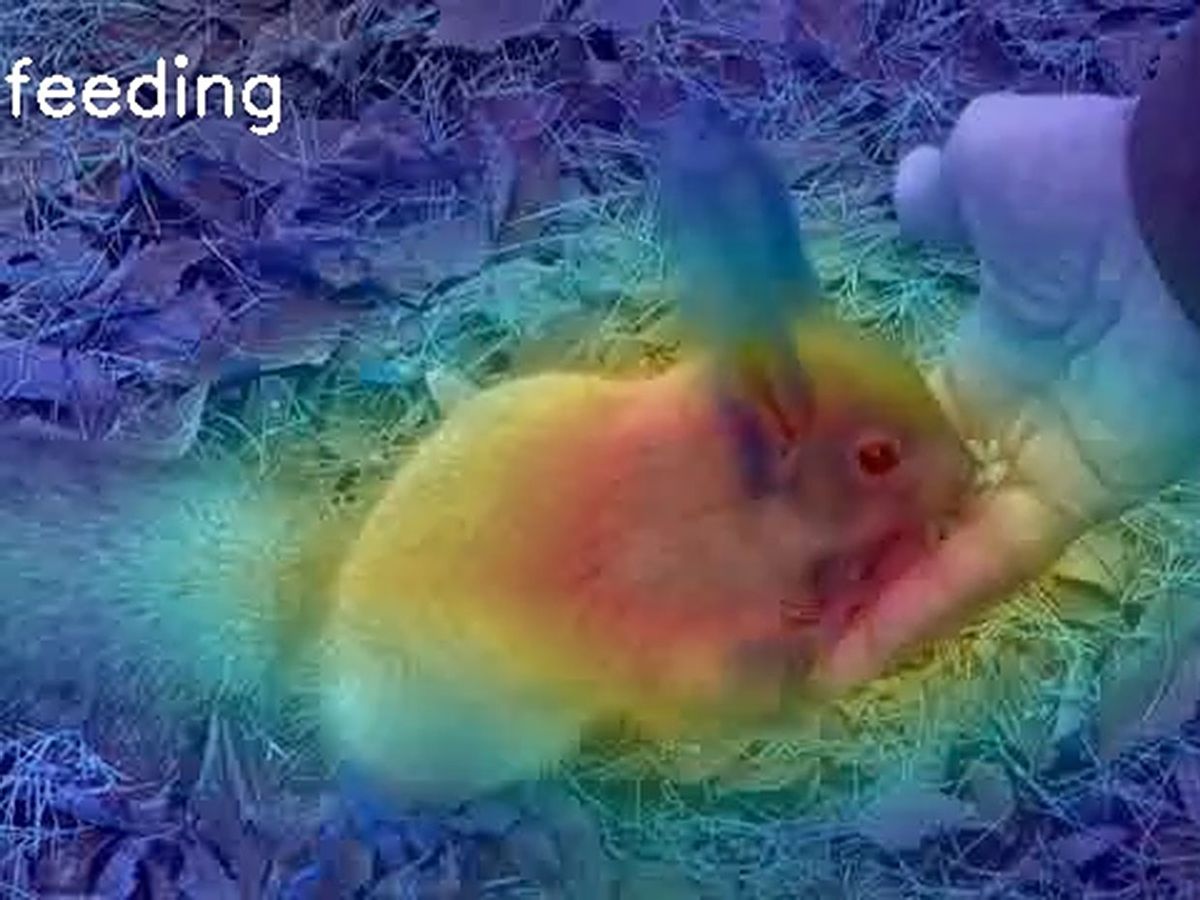

Moments in Time, however, wasn’t created to provide a bank of GIFs, but to lay the foundation for AI systems to recognize and understand actions and events in videos. To date, massive labeled image datasets such as ImageNet for object recognition and Places for scene recognition have played a major role in the development of more accurate models for image classification and understanding.

“The situation for video understanding, and in particular action recognition in videos, is different,” says Dan Gutfreund, principal investigator at the MIT-IBM Watson AI Lab and a lead researcher involved in the creation of Moments in Time. “While video datasets with labeled actions existed before Moments in Time, they were orders of magnitude smaller than the image datasets. As well, they were human-centric and sometimes domain-specific, like sports.”

Video: MIT CSAIL

Gutfreund and his colleagues therefore set out to develop a classification system that could encompass the most common actions, whether they are performed by humans, animals, or objects, and across different contexts. They started with a list of 4,500 of the most commonly used verbs from VerbNet, a compilation developed and used by linguists.

They grouped the verbs into semantically related clusters, then selected the most common verb from each cluster. The results show just how redundant the English language is: washing, showering, bathing, soaping, shampooing, manicuring, moisturizing, and flossing, for example, can all simply fall under the category of “grooming.” After carefully consolidating the verbs, the team settled on 339 key ones that form the foundation of Moments in Time.
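To make the "pick the most common verb per cluster" step concrete, here is a minimal sketch. It assumes the verbs have already been grouped into semantic clusters and that each verb carries a usage count; the cluster names, verbs, counts, and the `pick_representatives` helper are all hypothetical and are not VerbNet data or the researchers' code. The clustering itself is not shown.

```python
# Hypothetical semantic clusters with made-up usage counts (NOT VerbNet data).
clusters = {
    "cluster_1": {"walking": 950, "strolling": 40, "ambling": 5},
    "cluster_2": {"opening": 800, "unsealing": 12, "uncorking": 8},
    "cluster_3": {"falling": 700, "tumbling": 60, "toppling": 20},
}


def pick_representatives(clusters: dict[str, dict[str, int]]) -> list[str]:
    """Return the most frequent verb in each cluster: one class label per cluster."""
    labels = []
    for verbs in clusters.values():
        # max() iterates over the verb names and ranks them by their usage count
        labels.append(max(verbs, key=verbs.get))
    return labels


print(pick_representatives(clusters))  # ['walking', 'opening', 'falling']
```

Each cluster collapses to a single label, which is how a long verb list can shrink to a few hundred classes.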

Grooming via GIPHY

Categorizing the videos themselves, however, poses its own set of challenges. For instance, the label "opening" could apply to anything from a person opening a door to a flower opening its petals, or even a cartoon dog opening its mouth. What’s more, the same set of frames played in reverse can depict a different action ("closing"), so capturing the temporal order of a video is crucial to understanding and classifying it properly.

Opening via GIPHY

The researchers gathered videos from the Internet related to their 339 verbs, cutting each one down to three seconds. The clips were sent to the crowdsourcing platform Amazon Mechanical Turk, where participants helped categorize the more than one million videos. They simply clicked “yes” or “no” to confirm whether the designated action was occurring in each clip.

Each label was verified by several participants. Details of how the dataset was created were published in IEEE Transactions on Pattern Analysis and Machine Intelligence on 25 February.
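The exact rule for combining several participants' yes/no answers isn't spelled out here. As a minimal sketch, assuming a simple threshold on the share of "yes" votes (our assumption, not the authors' documented criterion), the verification step could be aggregated as below; the clip IDs, votes, `min_yes_fraction` parameter, and `verified_labels` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical yes/no answers from several annotators for each (clip, verb) pair.
votes = [
    ("clip_001", "opening", True),
    ("clip_001", "opening", True),
    ("clip_001", "opening", False),
    ("clip_002", "tripping", False),
    ("clip_002", "tripping", False),
    ("clip_002", "tripping", True),
]


def verified_labels(votes, min_yes_fraction=0.5):
    """Keep a (clip, verb) pair only if more than min_yes_fraction of its votes are 'yes'.

    min_yes_fraction is an ASSUMED threshold, not the rule used for Moments in Time.
    """
    tallies = defaultdict(lambda: [0, 0])  # (clip, verb) -> [yes_count, total_count]
    for clip, verb, said_yes in votes:
        tallies[(clip, verb)][0] += int(said_yes)
        tallies[(clip, verb)][1] += 1
    return {
        pair for pair, (yes, total) in tallies.items()
        if yes / total > min_yes_fraction
    }


print(verified_labels(votes))  # {('clip_001', 'opening')}
```

Raising the threshold trades dataset size for label reliability: with `min_yes_fraction=0.7`, even the two-out-of-three "opening" clip would be dropped.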

A lead researcher on the project, Mathew Monfort of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), notes that some classes, such as “walking” or “cooking,” are straightforward, while others are not. “Playing music” could involve a band playing on a stage or a person listening to music on a radio. “Classes with this level of visual and auditory diversity can be very challenging for current machine learning models to recognize,” Monfort says.

Playing music via GIPHY

In the next version of the dataset, the team plans to use the same collection of videos and label multiple actions that occur in each one. “When we consider the nature of videos, it’s clear that more information is needed to properly describe an event and it’s incomplete to train and evaluate models with a single action label,” says Monfort. “Incorporating multiple labels into the dataset should significantly improve model training, as well as open the door to questions about relations between different actions and how to reason about them.”

Soon, machine learning algorithms may be able to categorize even complicated videos effortlessly. Easy peasy.

Machine Learning via GIPHY

Michelle Hampson is a freelance writer based in Halifax. She frequently contributes to Spectrum's Journal Watch coverage, which highlights newsworthy studies published in IEEE journals.