Hoping to speed AI and neuromorphic computing and cut down on power consumption, startups, scientists, and established chip companies have all been looking to do more computing in memory rather than in a processor’s computing core. Memristors and other nonvolatile memory seem to lend themselves to the task particularly well. However, most demonstrations of in-memory computing have been in standalone accelerator chips that either are built for a particular type of AI problem or need the off-chip resources of a separate processor in order to operate. University of Michigan engineers are claiming the first memristor-based programmable computer for AI that can work entirely on its own.

“Memory is really the bottleneck,” says University of Michigan professor Wei Lu. “Machine learning models are getting larger and larger, and we don’t have enough on-chip memory to store the weights.” Going off-chip for data, to DRAM, say, can take 100 times as much computing time and energy. Even if you do have everything you need stored in on-chip memory, moving it back and forth to the computing core also takes too much time and energy, he says. “Instead, you do the computing in the memory.”

His lab has been working with memristors (also called resistive RAM, or RRAM), which store data as resistance, for more than a decade and has demonstrated their potential to efficiently perform AI computations such as the multiply-and-accumulate operations at the heart of deep learning. Arrays of memristors can do these tasks efficiently because the operations become analog computations rather than digital ones.
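To make the analog multiply-and-accumulate idea concrete, here is a minimal sketch of an idealized memristor crossbar. It assumes the textbook picture rather than the details of Lu's chip: each device contributes a current equal to its row voltage times its conductance (Ohm's law), and the currents along a column add up (Kirchhoff's current law). The function name `crossbar_mac` and all values are illustrative; real arrays also contend with wire resistance, noise, and device variation, which this ignores.

```python
def crossbar_mac(voltages, conductances):
    """Return the column currents of an idealized crossbar.

    voltages:      one input voltage per row, in volts
    conductances:  conductances[i][j] is the device at row i, column j,
                   in microsiemens (so currents come out in microamps)
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need exactly one voltage per row")

    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            # Ohm's law gives the per-device current; summing down the
            # column is the "accumulate" step, done by the wire itself.
            currents[j] += v * conductances[i][j]
    return currents


# Illustrative values only: 3 input rows, 2 output columns.
voltages = [1.0, 0.5, 0.0]
conductances = [
    [1.0, 2.0],
    [4.0, 0.0],
    [3.0, 1.0],
]
currents = crossbar_mac(voltages, conductances)
print(currents)
```

In software this is an ordinary vector-matrix product computed with nested loops. In the array, every product and sum happens at once as current flows, which is why the stored weights never have to move to a separate computing core.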

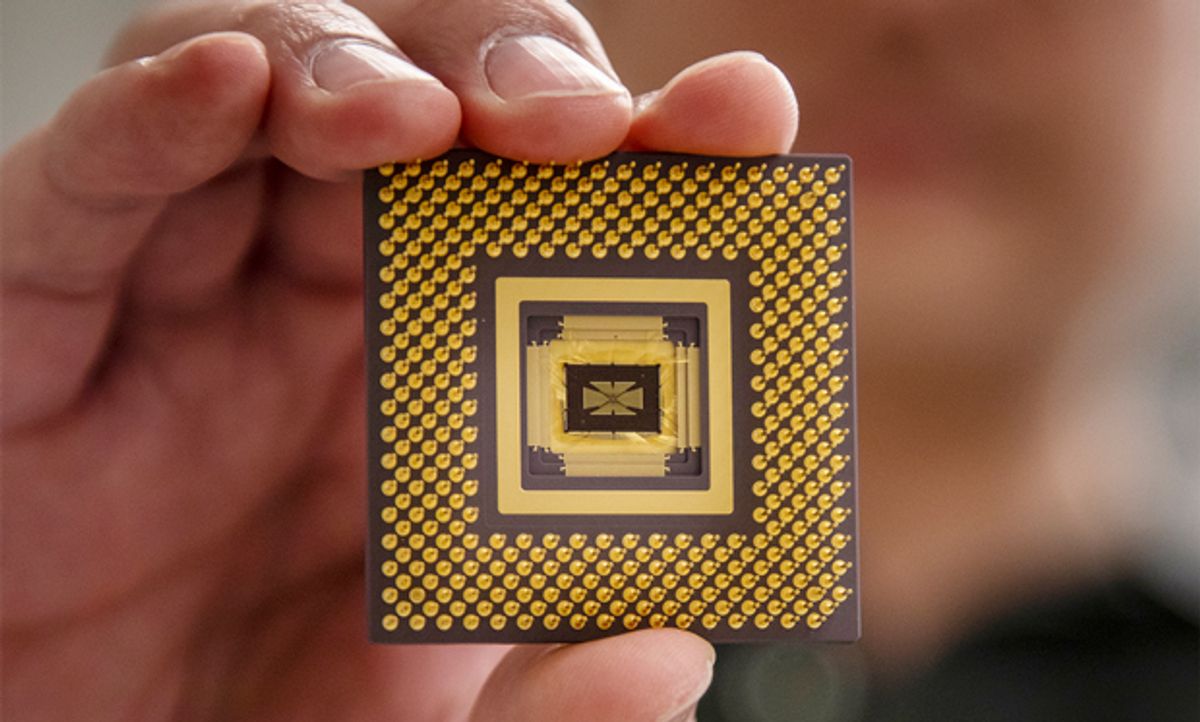

The new chip combines an array of 5,832 memristors with an OpenRISC processor. A set of 486 specially designed digital-to-analog converters, 162 analog-to-digital converters, and two mixed-signal interfaces act as translators between the memristors’ analog computations and the main processor.

“All the functions are implemented on chip,” says Lu, an IEEE Fellow. “To show the promise, you can’t just build the individual pieces.”

At its maximum frequency, the chip consumed just over 300 milliwatts while delivering 188 billion operations per second per watt (GOPS/W). That doesn’t compare well to, say, Nvidia’s latest research AI accelerator chip at 9.09 trillion operations per second per watt (TOPS/W), although that comparison leaves out the energy cost and latency of transferring data from DRAM. But Lu points out that the CMOS portion was built using the two-decade-old 180-nanometer semiconductor manufacturing process. Moving it to a newer process, such as 2008-era 40-nanometer technology, would drop power consumption to 42 mW and boost efficiency to 1.37 TOPS/W without needing to transfer data from DRAM. Nvidia’s chip was made using a 16-nanometer process that debuted in 2014.
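The efficiency figures are easier to compare once they share a unit. The short calculation below uses only the numbers quoted above, with two assumptions: "just over 300 milliwatts" is rounded to 0.3 W, and the power and efficiency figures are taken to describe the same operating point, so that multiplying them gives an implied throughput. The variable names are illustrative.

```python
# Figures quoted in the article.
MEASURED_GOPS_PER_W = 188.0    # 180-nm chip, as measured
MEASURED_POWER_W = 0.300       # assumption: "just over 300 mW" rounded to 0.3 W
PROJECTED_TOPS_PER_W = 1.37    # projection for a 40-nm process
PROJECTED_POWER_W = 0.042      # 42 mW
NVIDIA_TOPS_PER_W = 9.09       # Nvidia research accelerator

# Put everything in TOPS/W (1 TOPS = 1,000 GOPS).
measured_tops_per_w = MEASURED_GOPS_PER_W / 1000.0

# How far each memristor figure sits from the Nvidia number.
gap_measured = NVIDIA_TOPS_PER_W / measured_tops_per_w
gap_projected = NVIDIA_TOPS_PER_W / PROJECTED_TOPS_PER_W

# Efficiency gain expected from the process change alone.
efficiency_gain = PROJECTED_TOPS_PER_W / measured_tops_per_w

# Implied throughput = efficiency x power (assumes a shared operating point).
measured_throughput_gops = MEASURED_GOPS_PER_W * MEASURED_POWER_W
projected_throughput_gops = PROJECTED_TOPS_PER_W * 1000.0 * PROJECTED_POWER_W

print(f"gap to Nvidia, measured:  {gap_measured:.1f}x")
print(f"gap to Nvidia, projected: {gap_projected:.1f}x")
print(f"gain from 40-nm move:     {efficiency_gain:.1f}x")
print(f"implied throughput:       {measured_throughput_gops:.1f} -> "
      f"{projected_throughput_gops:.1f} GOPS")
```

Under these assumptions the implied throughput barely changes between the measured and projected chips, which suggests the projected efficiency gain comes almost entirely from lower power rather than from more operations per second.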

Lu’s team put the chip through three tests to prove its programmability and ability to handle a wide variety of machine learning tasks. The most straightforward one is called a perceptron, which is used to classify information. For that task, the memristor computer had to recognize Greek letters even when the image of them was noisy.
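For readers unfamiliar with perceptrons, the sketch below trains a small multiclass perceptron in plain Python and then checks it against noisy inputs. It is not the network, data, or training procedure used on the Michigan chip: the 5x5 glyphs (rough stand-ins for lambda, pi, and tau), the single-pixel-flip noise model, and every function name are assumptions made for illustration.

```python
# Hypothetical 5x5 glyphs, loosely shaped like lambda, pi, and tau.
GLYPHS = {
    "lambda": ["..#..", ".#.#.", ".#.#.", "#...#", "#...#"],
    "pi":     ["#####", "#...#", "#...#", "#...#", "#...#"],
    "tau":    ["#####", "..#..", "..#..", "..#..", "..#.."],
}
LABELS = list(GLYPHS)  # class index -> glyph name


def to_vector(rows):
    """Flatten a glyph into 0/1 pixels and append a constant bias input."""
    return [1.0 if ch == "#" else 0.0 for row in rows for ch in row] + [1.0]


def predict(weights, x):
    """Return the class whose weighted sum of inputs is largest."""
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return max(range(len(scores)), key=scores.__getitem__)


def train(samples, n_classes, max_epochs=100):
    """Multiclass perceptron rule: on a mistake, move the correct class's
    weights toward the input and the wrongly chosen class's weights away."""
    weights = [[0.0] * len(samples[0][0]) for _ in range(n_classes)]
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in samples:
            guess = predict(weights, x)
            if guess != target:
                mistakes += 1
                for i, xi in enumerate(x):
                    weights[target][i] += xi
                    weights[guess][i] -= xi
        if mistakes == 0:  # every training glyph classified correctly
            break
    return weights


def flip_pixel(x, index):
    """Return a copy of x with one pixel inverted (a simple noise model)."""
    noisy = list(x)
    noisy[index] = 1.0 - noisy[index]
    return noisy


samples = [(to_vector(GLYPHS[name]), k) for k, name in enumerate(LABELS)]
weights = train(samples, len(LABELS))

clean_predictions = [LABELS[predict(weights, x)] for x, _ in samples]

# Corrupt each glyph one pixel at a time (the bias input is left alone).
noisy_trials = [(flip_pixel(x, i), k)
                for x, k in samples for i in range(len(x) - 1)]
noisy_correct = sum(predict(weights, x) == k for x, k in noisy_trials)

print(clean_predictions)
print(noisy_correct, "of", len(noisy_trials), "noisy glyphs classified correctly")
```

Each class score is a weighted sum of pixel values, which is exactly the multiply-and-accumulate operation that a memristor array performs in analog form.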

The second, more difficult task was a problem of sparse coding. In sparse coding, you are trying to build the most efficient network of artificial neurons that will get the job done. That means that as the network learns its task, it has neurons compete with each other for a place in the network. The losers are excised, leaving a more brain-like and efficient neural network with only the connections absolutely needed. Lu demonstrated memristor-based sparse coding in 2017 on a smaller array.

The final task was a dual-layer neural network capable of what’s called unsupervised learning. Rather than being presented with a set of labelled images to learn from, the chip was given a set of mammography test scores. The neural network first worked out what the important features of the combination of scores were and then distinguished malignant from benign tumors with 94.6 percent accuracy.

The next version of the chip, which Lu says will be done next year, will have both faster, more-efficient CMOS and multiple memristor arrays. “We will use multiple arrays to show you can tie them together to form larger networks,” he says.

Lu has formed a startup called MemryX with the aim of commercializing the chip. His previous RRAM startup, Crossbar, is also chasing the AI space. Last year Crossbar inked a deal with aerospace chipmaker Microsemi and demoed a chip that did face recognition and read license plates.

Samuel K. Moore is the senior editor at IEEE Spectrum in charge of semiconductors coverage. An IEEE member, he has a bachelor's degree in biomedical engineering from Brown University and a master's degree in journalism from New York University.