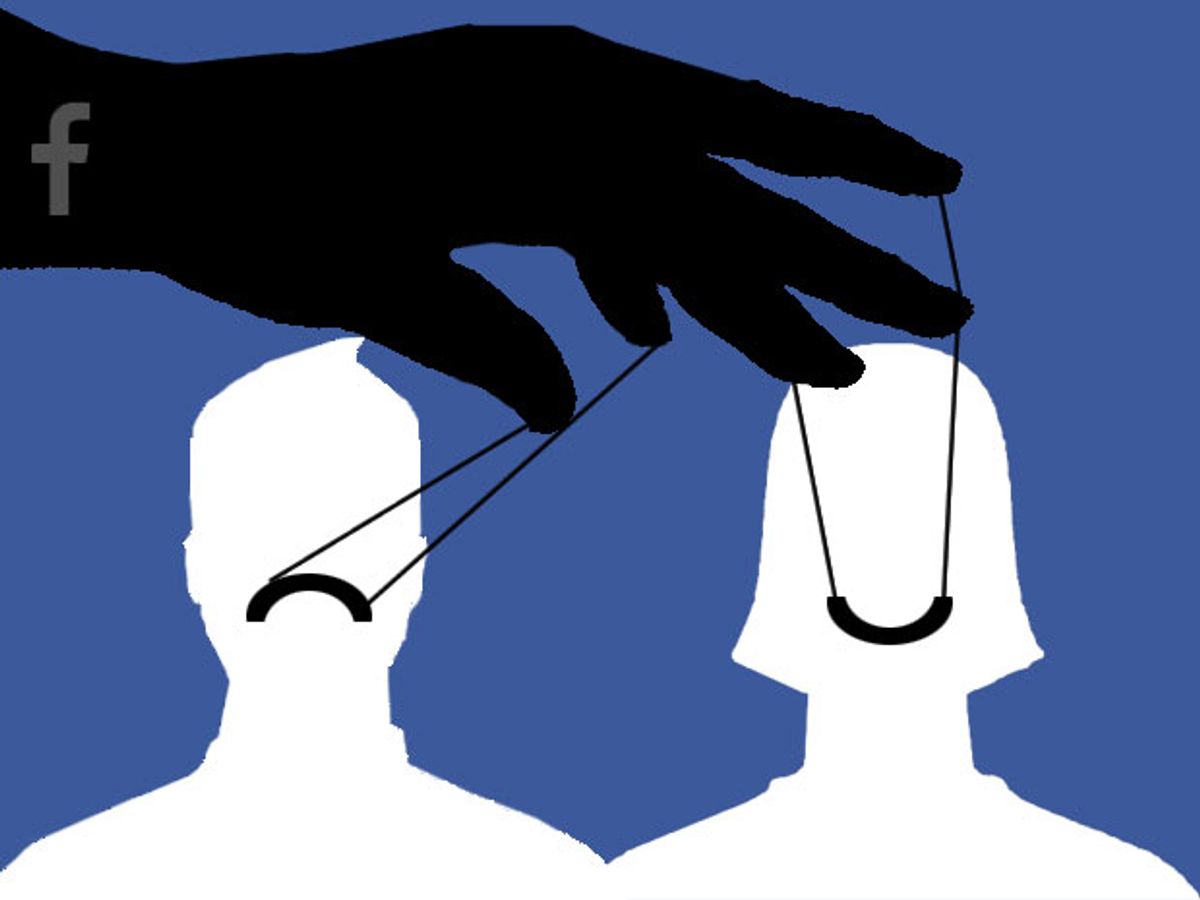

Last week, the news began spreading that Facebook, in the name of research, had manipulated users' news feeds for a week back in 2012, skewing the distribution of posts to determine the impact on the users' moods. Over the weekend, the rumble turned into an outcry, with privacy activist Lauren Weinstein tweeting "I wonder if Facebook killed anyone with their emotion manipulation stunt."

The revelation came as a result of the publication, earlier this month, of a 2012 study by researchers at Facebook, Cornell University, and the University of California, San Francisco. The study demonstrated that seeing a lot of positive news on Facebook is likely to lead you to produce more positive posts yourself. The reverse is also true: Seeing lots of negative news pushes people to post more negative material. That example of emotional contagion was fairly interesting: It countered earlier theories that seeing all your friends having fun on Facebook might make your own life seem bleak by comparison. I would much prefer to see happiness as contagious.

It turned out, however, that the researchers conducted the study by manipulating news feeds, not just by monitoring positive and negative posts.

Without explicit consent (barring a user agreement that says Facebook can pretty much do anything to your page that it wants), and certainly without the knowledge of its users, Facebook turned 700,000 users into research subjects, skewing their news feeds to be more positive, by removing negative posts; more negative, by removing positive posts; or neutral, by removing random posts. Or, in the shorthand of headlines, “Facebook made users depressed.”

I knew that Facebook had been manipulating my news feed in sometimes annoying ways, causing me to miss interesting news from people I care about and instead giving cat videos (actually, any video) precedence. As a word person, I find that particularly irritating. Still, though it may be annoying, I wouldn’t call it evil. This, however, goes beyond annoying.

One of the authors of the study has apologized, on his Facebook page, of course. And it is responsible of Facebook to try to understand the impact of the service the company provides. However, the days of experimenting on people without their knowledge or consent are supposed to be over.

Facebook will likely be more careful about informed consent when conducting psychological research in the future. At least for a while. The temptation to conduct other psychological studies has got to be huge. Do people get over broken hearts better when pictures of former flames are suppressed? Does seeing graduation photos motivate students to do better in school? It’s easy to come up with questions to ask.

And what about the companies that are responsible for the other data-gathering and information-providing technologies that I have let into my life? I wear a Fitbit, and I do find it motivates me to walk a little more and sit a little less. Fitbit could certainly benefit from user studies that prove it to be motivating, and could easily manipulate my setting of 10,000 steps a day to let me think I’ve walked more or less than I’ve actually walked. If Fitbit congratulated me for reaching my daily goal before dinner, would I skip an evening walk? Maybe. Fitbit would probably like to know. But I would not like to find out later I’d been manipulated.

And what about the Nest thermostat? I’m sure there’s valuable research to be done around energy conservation here: Would users really notice if their thermostat setting were turned up or down a degree, as long as the number on the dial read a comfortable 70 degrees? Would the research work as well if Nest told me it might be happening? I would certainly want to know if I’ve been manipulated. Or perhaps this research has already been done and has just not been published.

One thing is for sure: Going forward, when I’m thinking about bringing a new Internet-of-Things gizmo into my house or onto my body, I won’t only be thinking about how it might breach my privacy; I’ll also be thinking about how it could manipulate me.

Tekla S. Perry is a senior editor at IEEE Spectrum. Based in Palo Alto, Calif., she's been covering the people, companies, and technology that make Silicon Valley a special place for more than 40 years. An IEEE member, she holds a bachelor's degree in journalism from Michigan State University.