Data centers are hungry, hot, and thirsty. The approximately 3 million data centers in the United States consume billions of liters of water and about 70 billion kilowatt-hours of electricity per year, or nearly 2 percent of the nation’s total electricity use. About 40 percent of that energy runs air conditioners, chillers, server fans, and other equipment to keep computer chips cool.

Now, Forced Physics, a company based in Scottsdale, Ariz., has developed a low-power system that it says could slash a data center’s energy requirements for cooling by 90 percent. The company’s JouleForce conductor is a passive system that uses ambient, filtered, nonrefrigerated air to whisk heat away from computer chips. In February, Forced Physics plans to launch its first on-site pilot test at a commercial facility in Chandler, Ariz., owned by H5 Data Centers. There, a rack of 30 conductors will cool IT equipment consuming 36 kilowatts, as sensors track airflow, temperature, power usage, and air pressure. Information gleaned from the one-year test will be used to demonstrate performance to potential customers.

The computer equipment in a typical data center runs at about 15 megawatts, devoting 1 MW of that power to server fans. But such a data center would require an additional 7 MW (for a total load of 22 MW) to power other cooling equipment, and it would need 500 million liters of water per year. At a time when data-center traffic is expected to double every two years, the industry’s appetite for electricity and water could soon reach unsustainable levels.

According to Forced Physics’ chief technology officer, David Binger, the company’s conductor can help a typical data center eliminate its need for water or refrigerants and shrink its 22-MW load by 7.72 MW, which translates to an annual reduction of 67.6 million kWh. That data center could also save a total of US $45 million a year on infrastructure, operating, and energy costs with the new system, according to Binger. “We are solving the problem that electrons create,” he says.
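The annual figure can be reproduced from the power reduction if one assumes the load is cut around the clock for a full 365-day year; the article does not state the operating schedule, so that is an assumption. A minimal sketch of the arithmetic, with illustrative names:

```python
# Back-of-the-envelope check of the annual savings quoted above.
# Assumption (not stated in the article): the 7.72 MW reduction applies
# continuously, 24 hours a day, over a 365-day year.

HOURS_PER_YEAR = 24 * 365  # 8,760 hours


def annual_energy_kwh(power_mw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Convert a constant power draw in megawatts to kilowatt-hours over `hours`."""
    return power_mw * 1_000 * hours  # 1 MW = 1,000 kW


reduction_mw = 7.72  # load reduction cited by Forced Physics
saved_kwh = annual_energy_kwh(reduction_mw)

print(f"{saved_kwh / 1e6:.1f} million kWh per year")
```

Under that assumption the result rounds to the 67.6 million kWh cited by Binger.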

In today’s data centers, circuit boards and other electronic components are enclosed in metal containers about the size of pizza boxes. Forty boxes are stacked vertically into racks. Row after row of these racks, arranged side by side in narrow aisles, fill sprawling one-story buildings. An elaborate ventilation network blows chilled air onto the front of the racks. Small fans at the rear of each box draw the cool air over the electronics inside. Then, larger fans at the back of the rack suck out the heated air.

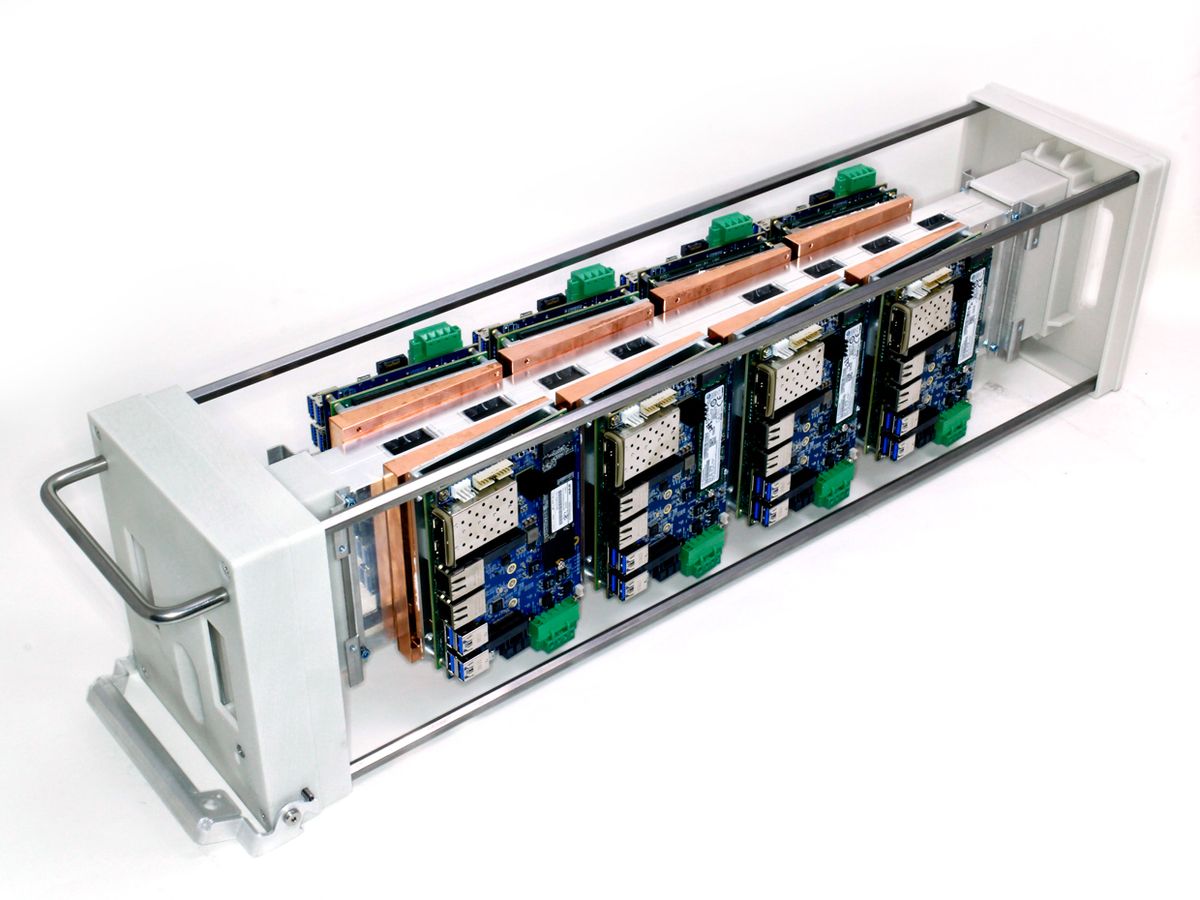

The JouleForce conductor is a narrow box that looks like it could hold a couple of long-stem roses. Circuit boards are not housed inside but rather are attached to the outside. It takes 40 conductors, made entirely of aluminum, to fill a standard rack. There are no small server fans—instead, four large fans at the rear of the rack suck ambient air through the conductors. The fans are the only moving parts in the system, and the air itself is never chilled. For the average data center, these large fans need just 0.28 MW—a fraction of the 1 MW that server fans generally use.
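The 7.72-MW reduction that Binger cites appears to follow from the article's own figures, if one assumes the rack fans are the only cooling load left once the server fans and the other cooling equipment are removed. That reading is an inference, not something the company spells out. A short sketch of the arithmetic, with illustrative variable names:

```python
# Power figures for the article's "typical" data center, in megawatts.
server_fans_mw = 1.0       # part of the 15 MW drawn by the computer equipment
other_cooling_mw = 7.0     # chillers, air conditioners, and similar equipment
jouleforce_fans_mw = 0.28  # large rack fans used with the JouleForce conductor

# Assumption: with the conductor installed, the rack fans are the only
# remaining cooling load, so everything else listed above is eliminated.
conventional_cooling_mw = server_fans_mw + other_cooling_mw
reduction_mw = conventional_cooling_mw - jouleforce_fans_mw
fraction_saved = reduction_mw / conventional_cooling_mw

print(f"Conventional cooling load: {conventional_cooling_mw:.2f} MW")
print(f"Reduction with rack fans only: {reduction_mw:.2f} MW")
print(f"Share of cooling power eliminated: {fraction_saved:.1%}")
```

On these numbers the cut in cooling power works out to roughly 96 percent, which is at least in line with the 90 percent figure the company cites.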

Inside the conductor, 3,000 very thin aluminum fins are lined up in two rows that form a V. The V shape moves air easily and quickly out the back. As a fan sucks air through a conductor, low air pressure draws it into the microchannels between the fins. The fins work like teeth in a comb, neatly orienting air molecules to point in the same direction and arranging them into columns. At the same time, heat from the attached electronics transfers into the aluminum fins. When air molecules contact the fins’ surfaces, they pick up heat from the metal, which prompts them to accelerate out the back.

The hotter the air is as it exits the conductor, the better. In dozens of lab tests with ambient air temperatures between 21 °C and 49 °C, the air exiting the JouleForce conductor measured around 65 °C—which is 27 °C hotter than with conventional cooling systems.

“It’s very efficient,” says Richard Madzar, head of critical systems at H.F. Lenz Co., a firm that designs data centers. Madzar, who is not affiliated with Forced Physics, has seen demonstrations of the conductor in laboratories under simulated conditions. “It eliminates the server fans and requires less power than they would have otherwise consumed,” he says. He also likes that the conductor is reusable, modular, and recyclable.

How data-center managers will respond to this new approach is unclear. Their business models are based on cooling technologies that are already widely available, says Madzar. They may have heard of other kinds of conductive cooling that rely on water or refrigerants, for instance, but these systems are primarily used in supercomputers and tend to be more expensive than conventional cooling technologies. If the JouleForce conductor is to move into everyday use, it will have to be more widely available and competitively priced. “I need to see it in a catalog,” says Madzar.

This article appears in the February 2019 print issue as “A Cooler Cloud.”