President Barack Obama’s victory on Tuesday in the U.S. Presidential election was not only predictable, it was predicted. The guy who got it totally right this time around was Nate Silver of The New York Times’ FiveThirtyEight blog (538 is the total number of votes in the Electoral College).

The guy who got it 100 percent right in 2004, and missed the final count in 2008 by a single vote, got one state wrong this time around: Florida. Sam Wang, of the Princeton Election Consortium, whom we profiled back in September, knew he was on shaky ground in that state, and in that state alone. Here’s what he wrote Tuesday afternoon:

> Florida is a hard case. Several new polls came out this morning, making the median basically zero. As a tie-breaker I resorted to mean-based statistics. I will be unsurprised for it to go either way. Nate Silver and Drew Linzer went the other way. We are all tossing coins. I am prepared to lose the coin toss.

David Rothschild, an economist now working at Microsoft Research, has been studying (and prognosticating) the election all year. He was on IEEE Spectrum’s weekly podcast, Techwise Conversations, twice: in March and in October. Both times, he predicted an Obama win. In fact, the March prediction followed a blog post back in February that called the election with the same near-perfect accuracy. He blogged today:

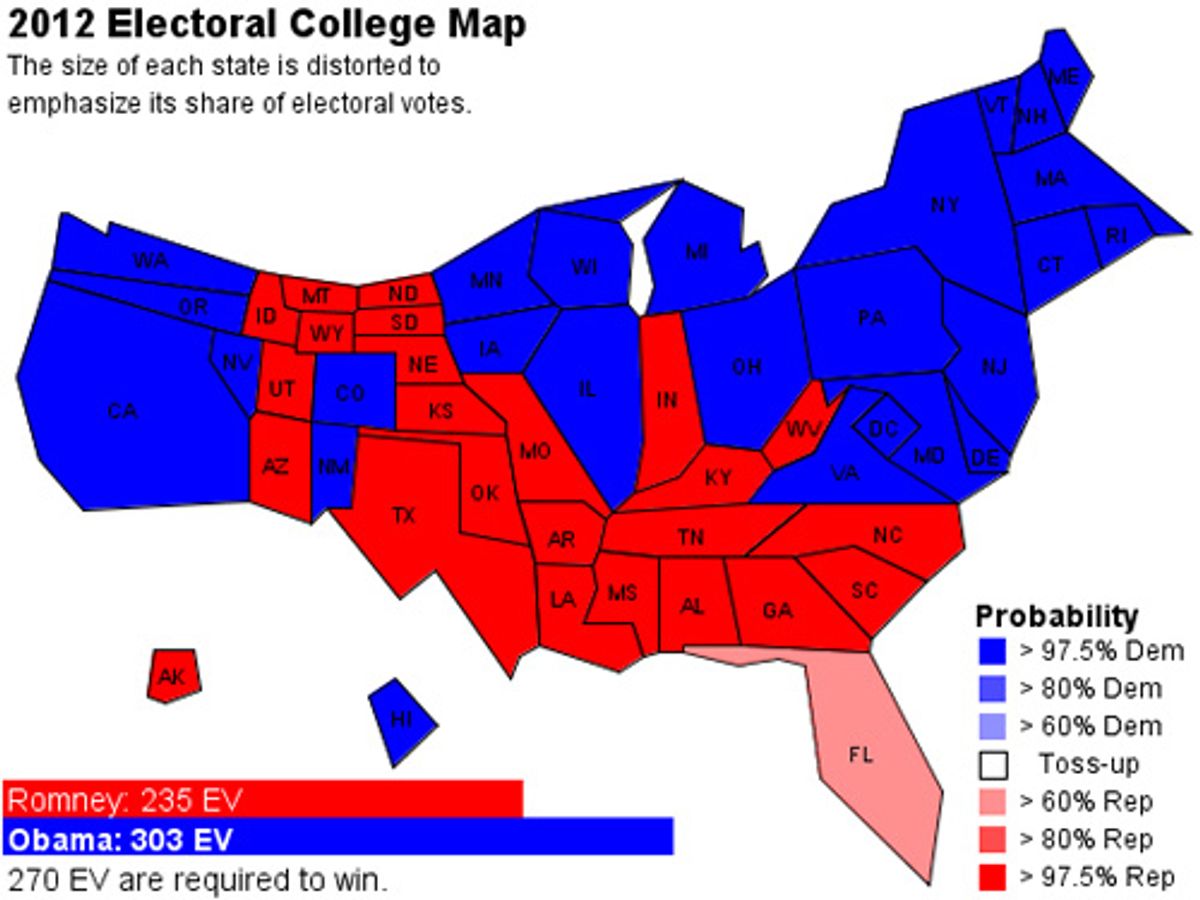

> Last February, the Signal predicted that President Barack Obama would win reelection with 303 electoral votes to his opponent’s 235, a prediction we made before the Republican party had chosen the identity of that challenger.

Needless to say, Rothschild’s predictions varied between February and November; indeed, at some points, Romney was ahead. I asked him today what, then, was the point of advance prediction. After all, if he’s going to brag about being nearly perfectly accurate back in February, he has to acknowledge that he was wildly wrong for much of the summer. Here’s what he said:

> Forecasts serve two purposes, which I believe we delivered on this cycle. First, they provide efficiency in a multi-billion-dollar industry. For forecasts to be useful, they need to be early, accurate, and consistent. Second, forecasts provide insight into how and why things happen. Granular forecasts, like mine, will help answer questions about the value of debates, big TV buys, etc. I look forward to poring over the data in the coming weeks and months, and hopefully to providing answers to some major political science questions regarding campaigns and elections.

That makes sense. It’s not that the summer forecasts were wrong: Romney really was ahead, and odds are that if the election had been held then, Romney would be president and the forecasts would have been right. Something else Rothschild told me today also makes a lot of sense:

> The election was another vindication of scientific and statistical forecasting versus punditry. Polling, prediction markets, and the statistical models that surrounded them were extremely accurate on the final outcome.

Increasingly, if you want to know what’s going to happen, don’t turn to MSNBC or Fox News. Go to sites like Rothschild’s The Signal, the Princeton Election Consortium, and FiveThirtyEight. Some things are predictable, provided you go to the people who rely on data rather than their gut.

Editor’s note: This post was updated to reflect that the Florida vote was still undecided as of this writing.

Image: Princeton Election Consortium