When in 1935 physicist Erwin Schrödinger proposed his thought experiment involving a cat that could be both dead and alive, he could have been talking about D-Wave Systems. The Canadian start-up is the maker of what it claims is the world’s first commercial-scale quantum computer. But exactly what its computer does and how well it does it remain as frustratingly unknown as the health of Schrödinger’s poor puss. D-Wave has succeeded in attracting big-name customers such as Google and Lockheed Martin Corp. But many scientists still doubt the long-term viability of D-Wave’s technology, which has defied scientific understanding of quantum computing from the start.

D-Wave has spent the last year trying to solidify its claims and convince the doubters. “We have the world’s first programmable quantum computer, and we have third-party results to prove it computes,” says Vern Brownell, CEO of D-Wave.

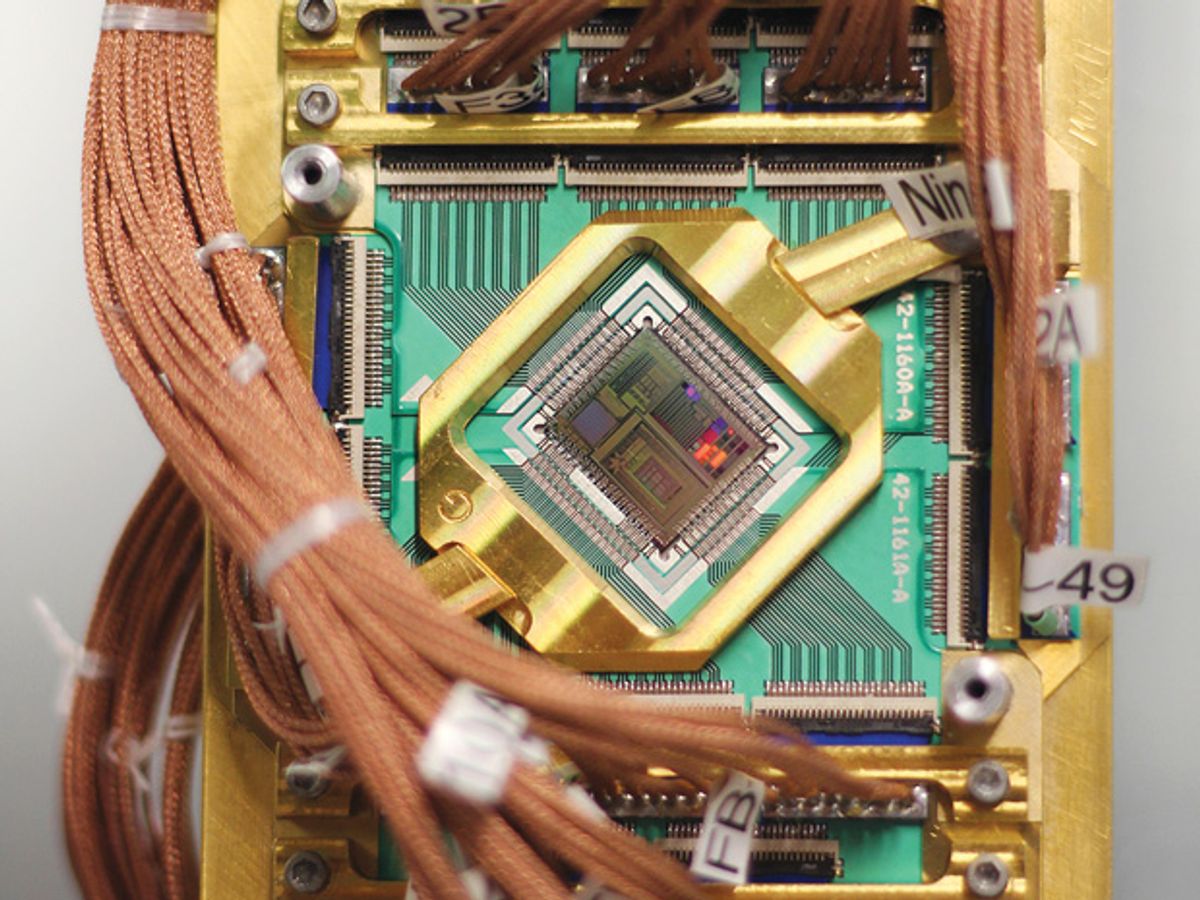

But some leading experts remain skeptical about whether the D-Wave computer architecture really does quantum computation and whether its particular method gives faster solutions to difficult problems than classical computing can. Ordinary computing bits exist as either a 1 or a 0, but the quantum physics rule known as superposition allows quantum bits (qubits) to exist as both 1 and 0 at the same time. That means quantum computing could effectively perform a huge number of calculations in parallel, allowing it to solve problems in machine learning or figure out financial trading strategies much faster than classical computing could. With that goal in mind, D-Wave has built specialized quantum-computing machines of up to 512 qubits, the latest being a D-Wave Two computer purchased by Google for installation at NASA’s Ames Research Center in Moffett Field, Calif.

D-Wave has gained some support from independent scientific studies that show its machines use both superposition and entanglement. The latter phenomenon allows several qubits to share the same quantum state, connecting them even across great distances.

But the company has remained mired in controversy by ignoring the problem of decoherence—the loss of a qubit’s quantum state, which causes errors in quantum computing. “They conjecture you don’t need much coherence to get good performance,” says John Martinis, a professor of physics at the University of California, Santa Barbara. “All the rest of the scientific community thinks you need to start with coherence in the qubits and then scale up.”

Most academic labs have painstakingly built quantum-computing systems, based on a traditional logic-gate model, with just a few qubits at a time in order to focus on improving coherence. But D-Wave ditched the logic-gate model in favor of a different method called quantum annealing, which is closely related to adiabatic quantum computing. Quantum annealing aims to solve optimization problems that resemble landscapes of peaks and valleys, with the lowest valley representing the optimum, or lowest-energy, answer.

Classical computing algorithms tackle optimization problems by acting like a bouncing ball that randomly jumps over nearby peaks to reach the lower valleys—a process that can end up with the ball getting trapped when the peaks are too high.
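The bouncing-ball picture corresponds roughly to simulated annealing, a widely used classical heuristic. The following is a minimal sketch of that idea, not any algorithm used in the studies discussed here; the one-dimensional `landscape` function, the cooling schedule, and every parameter value are hypothetical choices made purely for illustration.

```python
import math
import random


def landscape(x):
    """Hypothetical 1-D energy landscape with several valleys.

    The deepest valley lies near x = -0.5; shallower ones sit on either side.
    """
    return 0.1 * x * x + math.sin(3.0 * x)


def simulated_annealing(energy, x0, steps=20000, t_start=2.0, t_end=0.01,
                        step_size=0.5, seed=0):
    """Classical 'bouncing ball' search: random hops, accepted more easily when hot."""
    rng = random.Random(seed)
    x, e = x0, energy(x0)
    best_x, best_e = x, e
    for i in range(steps):
        # Geometric cooling from t_start down to t_end (an assumed schedule).
        t = t_start * (t_end / t_start) ** (i / max(steps - 1, 1))
        candidate = x + rng.uniform(-step_size, step_size)
        candidate_e = energy(candidate)
        delta = candidate_e - e
        # Always accept downhill moves; accept uphill moves with a probability
        # that shrinks as the temperature falls (the Metropolis rule).
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, e = candidate, candidate_e
            if e < best_e:
                best_x, best_e = x, e
    return best_x, best_e


if __name__ == "__main__":
    best_x, best_e = simulated_annealing(landscape, x0=4.0)
    print(f"best x = {best_x:.3f}, energy = {best_e:.3f}")
```

At low temperature the acceptance probability for an uphill hop becomes tiny, so a ball sitting in a shallow valley behind a tall peak tends to stay there. That is the trapping behavior described above.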

Quantum annealing takes a different and much stranger approach. The quantum property of superposition essentially lets the ball be everywhere at once at the start of the operation. The ball then concentrates in the lower valleys, and finally it can aim for the lowest valleys by tunneling through barriers to reach them.

That means D-Wave’s machines should perform best when their quantum-annealing system has to tunnel only through hilly landscapes with thin barriers, rather than those with thick barriers, Martinis says.
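One way to make the thin-versus-thick distinction concrete is the textbook WKB estimate for a single particle of mass $m$ tunneling through a rectangular barrier of width $w$ and height $V$ above its energy $E$, set beside the Arrhenius-style factor for thermally hopping over the same barrier at temperature $T$. Both formulas are standard physics simplifications brought in here as assumptions; they are not taken from D-Wave's hardware or from the studies discussed in this article.

$$
P_{\text{tunnel}} \approx \exp\!\left(-\frac{2w}{\hbar}\sqrt{2m\,(V-E)}\right),
\qquad
P_{\text{hop}} \propto \exp\!\left(-\frac{V-E}{k_B T}\right)
$$

The tunneling estimate falls off exponentially with the barrier width $w$, while the thermal factor depends only on the barrier height. Under these assumptions, tall but thin barriers are where tunneling could in principle help most.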

Independent studies have found suggestive, though not conclusive, evidence that D-Wave machines do perform quantum annealing. One such study, with Martinis among the coauthors, appeared on the arXiv e-print service this past April. Another study by a University of Southern California team appeared in June in Nature Communications.

But the research also shows that D-Wave’s machines have yet to outperform the best classical computing algorithms, even on problems ideally suited for quantum annealing.

“At this point we don’t yet have evidence of speedup compared to the best possible classical alternatives,” says Daniel Lidar, scientific director of the Lockheed Martin Quantum Computing Center at USC, in Los Angeles. (The USC center houses a D-Wave machine owned by Lockheed Martin.)

What’s more, D-Wave’s machines have not yet demonstrated that they can perform significantly better than classical computing algorithms as problems become bigger. Lidar says D-Wave’s machines might eventually reach that point—as long as D-Wave takes the problem of decoherence and error correction more seriously.

The growing number of independent researchers studying D-Wave’s machines marks a change from past years when most interactions consisted of verbal mudslinging between D-Wave and its critics. But there’s still some mud flying about, as seen in the debate over a May 2013 paper that detailed the performance tests used by Google in deciding to buy the latest D-Wave computer.

Catherine McGeoch, a computer scientist at Amherst College, in Massachusetts, was hired as a consultant by D-Wave to help set up performance tests on the 512-qubit machine for an unknown client in September 2012. That client later turned out to be a consortium of Google, NASA, and the Universities Space Research Association.

Media reports focused on the fact that D-Wave’s machine had performed 3600 times as fast as commercial software by IBM. But such reporting overlooked McGeoch’s own warnings that the tests had shown only how D-Wave’s special-purpose machine could beat general-purpose software. The tests had not pitted D-Wave’s machines against the best specialized classical computing algorithms.

“I tried to point out the impermanency of that [3600x] number in the paper, and I tried to mention it to every reporter that contacted me, but apparently not forcefully enough,” McGeoch says.

Indeed, new classical computing algorithms later beat the D-Wave machine’s performance on the same benchmark tests, bolstering critics’ arguments.

“We’re talking about solving the one problem that the D-Wave machine is optimized for solving, and even for that problem, a laptop can do it faster if you run the right algorithm on it,” says Scott Aaronson, a theoretical computer scientist at MIT.

Aaronson worries that overblown expectations surrounding D-Wave’s machines could fatally damage the reputation of quantum computing if the company fails. Still, he and other researchers say D-Wave deserves praise for the engineering it has done.

The debate continues to evolve as more independent researchers study D-Wave’s machines. Lockheed Martin has been particularly generous in making its machine available to researchers, says Matthias Troyer, a computational physicist at ETH Zurich. (Troyer presented preliminary results at the 2013 Microsoft Research Faculty Summit suggesting that D-Wave’s 512-qubit machine still falls short of the best classical computing algorithms.)

Google’s coalition also plans to let academic researchers use its D-Wave machine.

“The change we have seen in the past years is that by having access to the machines that Lockheed Martin leased from D-Wave, we can engage with the scientists and engineers at D-Wave on a scientific level,” Troyer says.

About the Author

Brooklyn, N.Y.–based reporter Jeremy Hsu knew the time was right for a story about the Canadian quantum-computer company D-Wave Systems and its controversial claims. “There are finally independent studies that go at these big questions that have been hanging over this company from the start,” he says. “It was time to check in with the quantum-computing community to see if their attitude had changed.” The answer? It’s complicated.

Jeremy Hsu has been working as a science and technology journalist in New York City since 2008. He has written on subjects as diverse as supercomputing and wearable electronics for IEEE Spectrum. When he’s not trying to wrap his head around the latest quantum computing news for Spectrum, he also contributes to a variety of publications, including Scientific American, Discover, and Popular Science. He is a graduate of New York University’s Science, Health & Environmental Reporting Program.