A little over a year ago, we wrote about some clumsy-looking but really clever research from Vijay Kumar’s lab at the University of Pennsylvania. That project showed how small drones equipped with little more than protective cages and simple sensors can handle obstacles by running into them, bouncing around a bit, and then carrying on. The idea is that you don’t have to bother with complex sensors when hitting obstacles just doesn’t matter, which bees figured out about a hundred million years ago.

Over the past year, Yash Mulgaonkar, Anurag Makineni, and Luis Guerrero-Bonilla (all in Kumar’s lab) have come up with a bunch of different ways in which smashing into obstacles can actually be a good and useful thing. From making maps to boosting agility to (mostly) on-purpose payload deployment, running into stuff and bouncing off again can somehow do it all.

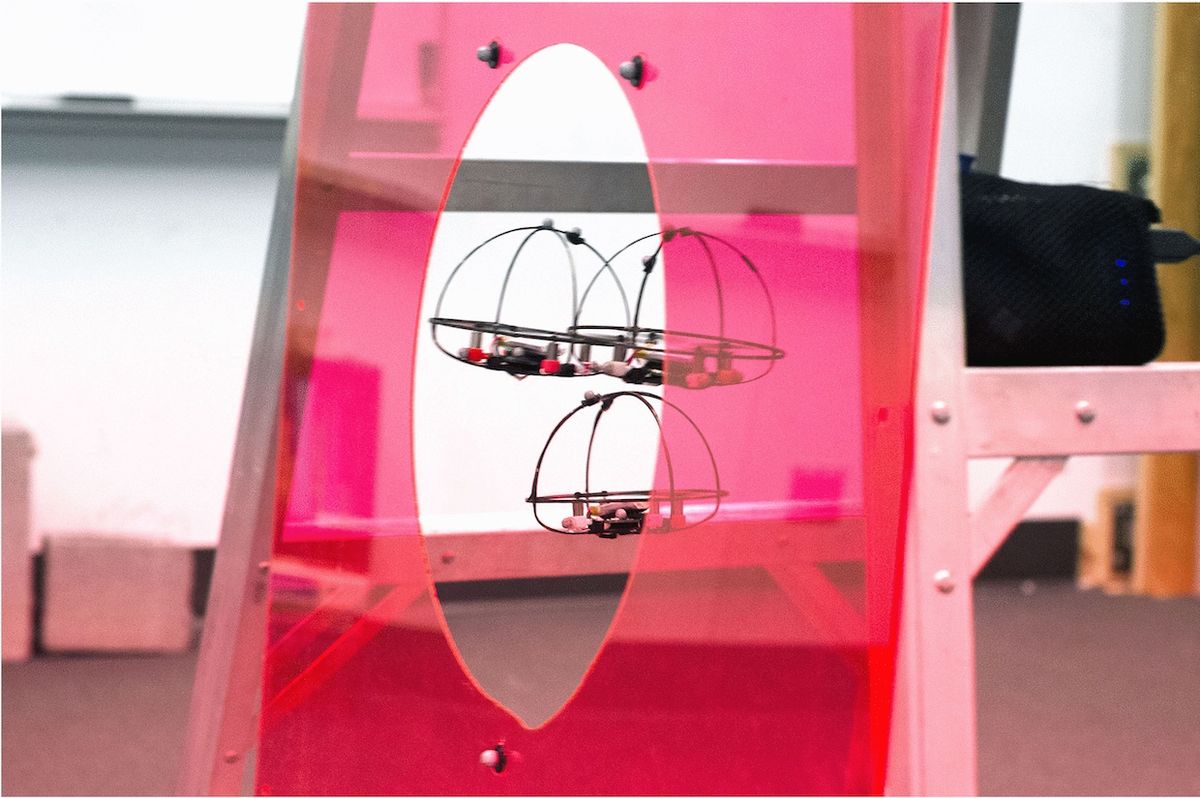

You can read more about the non-collision avoidance these drones rely on in our previous article, but it’s essentially as simple as ignoring collisions and relying on a roll cage (made of heat-cured carbon fiber yarn) modeled after the general shape of a gömböc (a convex shape that, set down on a flat surface in any orientation, rolls back to its one stable resting position), which is maybe my favorite shape ever.

Anyway, the video above highlights the three main improvements:

- Bumbling drones can map obstacles: Imagine (if you haven’t already) that every time one of these drones runs into something, it says “Ow.” A whole swarm of them wandering through an area full of obstacles will be going “ow ow ow ow ow” the whole time. If you keep track of exactly where you hear each “ow,” you can build up a picture of where the collisions take place, and eventually you’ll get a (sparse) map of where all the obstacles are. You can do this sort of thing with drones carrying stereo cameras, lidar, and whatnot, but that means a big, heavy, expensive, fragile drone. Using a large number of inexpensive robots with basic sensors is potentially more reliable and more cost-effective.

- Bumbling drones can change direction: Any drone can reverse the direction it’s flying in, but normally it has to decelerate to a complete stop and then accelerate again. If there’s an obstacle nearby and your drone can handle hitting it, it’s sometimes much faster to smash headlong into that obstacle and let the impact reverse your direction in a fraction of the time.

- Bumbling drones can deploy payloads: Yash Mulgaonkar, the paper’s first author, explains the last one: “Given that our robots can sustain collisions, we can fly in dark, unstructured environments and deploy small payloads by colliding. We demonstrate it here by flying in a dark basement and deploying small magnetic LED flares to illuminate the environment. This payload can be substituted with other small sensors as well, like those for measuring air quality, radiation, chemical contamination, etc.”
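The “ow”-counting approach to mapping can be sketched in a few lines of Python. This is a minimal illustration, not the method from the paper: it assumes the position of each collision is already known (in the current lab setup, that would come from the external motion-capture system), and the names `to_cell`, `build_collision_map`, `cell_size`, and `min_hits` are all hypothetical. Each reported collision gets dropped into a 3D grid of cubes, and a cube is marked as an obstacle once it has been hit at least `min_hits` times.

```python
from collections import Counter
from math import floor

# Hypothetical collision log entries: (x, y, z) positions in metres where a
# drone reported a collision ("ow"). Assumed to come from an external
# localization source such as motion capture.
Point = tuple[float, float, float]
Cell = tuple[int, int, int]


def to_cell(p: Point, cell_size: float) -> Cell:
    """Quantize a 3D position to the integer index of the cube containing it."""
    return (floor(p[0] / cell_size), floor(p[1] / cell_size), floor(p[2] / cell_size))


def build_collision_map(collisions: list[Point], cell_size: float = 0.25,
                        min_hits: int = 2) -> set[Cell]:
    """Return the set of grid cubes hit at least `min_hits` times.

    The map is sparse: only surfaces the swarm actually bumped into show up.
    """
    counts = Counter(to_cell(p, cell_size) for p in collisions)
    return {cell for cell, n in counts.items() if n >= min_hits}


if __name__ == "__main__":
    log = [
        (1.02, 0.10, 1.00),  # three hits near the same spot on a wall...
        (1.05, 0.20, 1.10),
        (1.10, 0.15, 1.05),
        (3.60, 2.40, 0.90),  # ...and a single hit somewhere else
    ]
    print(sorted(build_collision_map(log)))  # prints [(4, 0, 4)]
```

Requiring more than one hit per cube is a cheap way to ignore a stray false detection; the trade-off is that an obstacle touched only once stays off the map until another drone bumps into it.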
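Why bouncing can beat braking comes down to simple kinematics. As a back-of-the-envelope sketch (assuming the drone brakes and re-accelerates at a constant maximum acceleration $a_{\max}$, and that an impact sends it back at a fraction $e$ of its incoming speed $v$, where $e$ is the coefficient of restitution), reversing to speed $e\,v$ the conventional way takes $t_{\text{brake}}$, while bouncing takes only about as long as the impact itself, $\tau_{\text{impact}}$:

$$
t_{\text{brake}} = \underbrace{\frac{v}{a_{\max}}}_{\text{stop}} + \underbrace{\frac{e\,v}{a_{\max}}}_{\text{re-accelerate}} = \frac{(1+e)\,v}{a_{\max}},
\qquad
t_{\text{bounce}} \approx \tau_{\text{impact}}
$$

With illustrative numbers that are not from the paper ($v = 2$ m/s, $a_{\max} = 5$ m/s², $e = 0.5$), braking and re-accelerating takes $1.5 \times 2 / 5 = 0.6$ s, whereas an impact lasting an assumed 50 ms does the same job about 12 times faster.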

Right now, all of this requires an external motion-capture system, and the computation isn’t done on the robots either, which means it’ll work in a comfortably equipped robotics lab but not anywhere particularly useful. The good news is that the researchers are working on onboard localization and visual odometry, and we’re pretty sure they’ll make it happen. They’re pretty sure too: the paper promises that “the ideas described in this paper can be realized on independent robots with cameras and IMUs within the next year or two.”

“Robust Aerial Robot Swarms Without Collision Avoidance,” by Yash Mulgaonkar, Anurag Makineni, Luis Guerrero-Bonilla, and Vijay Kumar from the University of Pennsylvania, appears in the January 2018 issue of IEEE Robotics and Automation Letters.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.