It’s an unfortunate fact that the laws of physics make it difficult to design and program robots that can move both smoothly and dynamically. The problem is that when a robotic part moves dynamically, all of the stuff that it’s attached to ends up bending and flexing. The bending and flexing may be just a little bit, if your robot is really bulky and stiff, but if you have a lightweight, compliant robot that’s designed to work around humans, the bending and flexing can be so much that it ultimately disrupts whatever motion the robot was trying to make in the first place.

Disney Research, which has an understandable interest in developing lightweight and dynamic robot characters, has presented a paper at SIGGRAPH 2019 demonstrating an effective vibration damping method for robots that would otherwise be very, very wiggly.

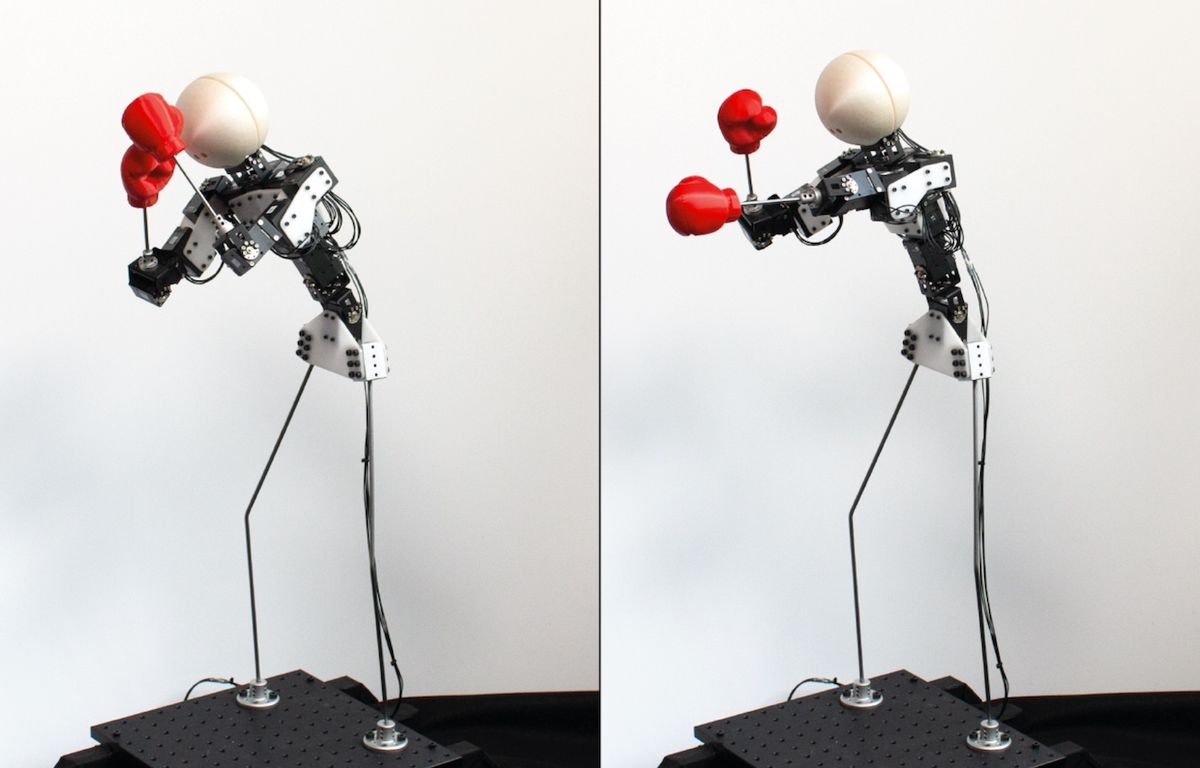

This fascinating video shows a sort of worst-case scenario for wiggly robots—very low stiffness structures making dynamic motions with high mass limbs.

What you’re seeing here is not some kind of dynamic vibration damping system. In other words, if you give any of these robots a shove, they’re going to bounce all over the place. Rather, the specific motions that the robots are making (which are designed by animators) have been optimized to suppress vibrations by a computational tool described in Disney’s paper. None of this happens in real time: ahead of the performance, the tool uses a model of the robot to predict how it will vibrate, and then instructs the motors to make the very slight (but very exact) additional motions necessary to cancel out those vibrations while still making the robot move the way the animator wants it to.
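To get a feel for the predict-then-cancel idea, here is a minimal sketch of a toy version. It is not Disney’s method: the paper optimizes motor trajectories against a simulation of the whole character, while this example assumes a single mass on a single undamped spring, where the correction can be written down directly. The function names, the mass, the stiffness values, and the minimum-jerk test motion are all made up for illustration.

```python
def min_jerk(t, duration):
    """Return (position, acceleration) of a smooth 0 -> 1 move (hypothetical test animation)."""
    if t <= 0.0:
        return 0.0, 0.0
    if t >= duration:
        return 1.0, 0.0
    s = t / duration
    pos = 10 * s**3 - 15 * s**4 + 6 * s**5
    acc = (60 * s - 180 * s**2 + 120 * s**3) / duration**2
    return pos, acc


def peak_deviation(k_true, k_model, mass=1.0, duration=0.5, t_end=1.5, dt=1e-4):
    """Simulate a tip mass pulled by a motor through a spring.

    k_true is the stiffness of the simulated "real" robot. k_model is the
    stiffness the planner believes in; pass None for naive retargeting, where
    the motor simply replays the animation. Returns the largest distance
    between the tip and the animation target over the whole run.
    """
    x, v = 0.0, 0.0  # tip position and velocity
    worst = 0.0
    for i in range(int(round(t_end / dt))):
        t = i * dt
        target, acc = min_jerk(t, duration)
        u = target  # motor command
        if k_model is not None:
            # Model-based correction: lead the target by the spring stretch
            # needed to accelerate the tip, (mass / k_model) * acceleration.
            u += (mass / k_model) * acc
        # Semi-implicit Euler step of: mass * x'' = k_true * (u - x)
        v += dt * k_true * (u - x) / mass
        x += dt * v
        next_target, _ = min_jerk(t + dt, duration)
        worst = max(worst, abs(x - next_target))
    return worst


if __name__ == "__main__":
    print("naive replay:     ", peak_deviation(100.0, None))
    print("perfect model:    ", peak_deviation(100.0, 100.0))
    print("20% stiffer model:", peak_deviation(100.0, 120.0))
```

The third case feeds the planner a deliberately wrong stiffness. In this linear toy, the leftover error scales with |k_true / k_model - 1|, so a model that overestimates stiffness by 20 percent still removes about five sixths of the deviation, while a perfect model removes essentially all of it.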

This technique does require simulation and computation in advance, and its effectiveness depends in large part on how good your model of the robot is. It gets harder and harder to do efficiently as your robot gets more complicated and as the number of parts that can flex increases. Essentially, each point of flexure introduces another degree of freedom into the mix, and since one part flexing can cause another part to flex, it quickly turns into a huge mess. Part of how the researchers tackle this is to prioritize damping out the large-amplitude vibrations that are the most visible. And it works very well, even on relatively complex robotic characters:

In a final demonstration, we retarget a boxing animation to the same 13-DOF full-body character, replacing the two hands with boxing gloves on both our simulation model and our physical system. Unlike the drumming sequence, the boxing motion contains faster motions with abrupt stops. The naïve retargeting causes excessive vibrations, especially when the character dodges and moves his upper body backwards and forwards. With the same objective and optimization parameters as for our Drummer, our optimized motor controls lead to deviations smaller than 1.5 cm (compared to 9 cm before optimization) while preserving the input animation without noticeable visual differences.

“Vibration-Minimizing Motion Retargeting for Robotic Characters,” by Shayan Hoshyari, Hongyi Xu, Espen Knoop, Stelian Coros, and Moritz Bächer from Disney Research, was presented at SIGGRAPH 2019 in Los Angeles.

[ Disney Research ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.