The folks at DeepMind are pushing their methods one step further toward the dream of a machine that learns on its own, the way a child does.

The London-based company, a subsidiary of Alphabet, is officially publishing the research today in Nature, although it tipped its hand back in November with a preprint on arXiv. Only now, though, are the implications becoming clear: DeepMind is already looking into real-world applications.

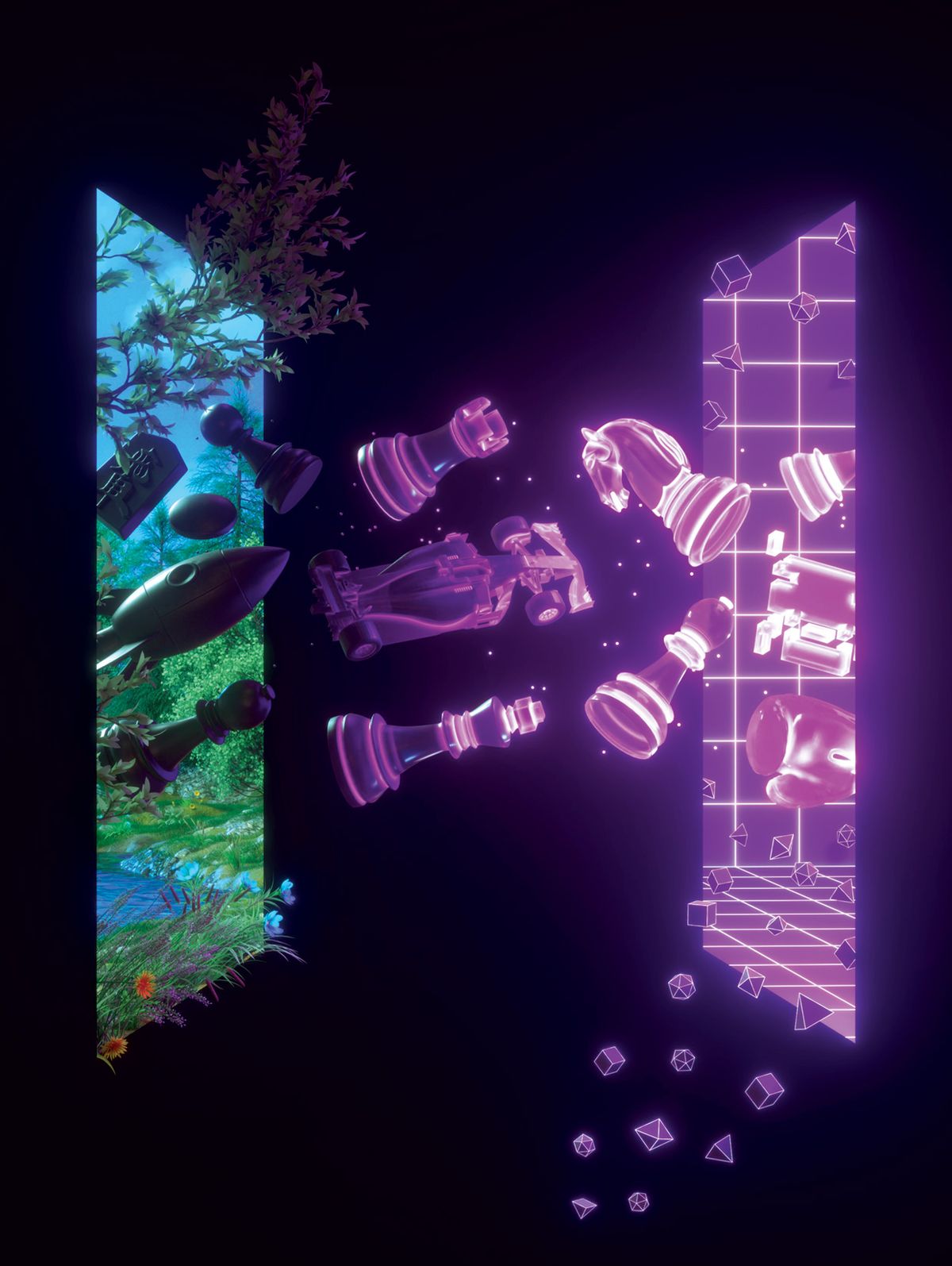

DeepMind won fame in 2016 for AlphaGo, a reinforcement-learning system that beat one of the world's top Go players after training on millions of positions from expert human games. In 2018 the company followed up with AlphaZero, which trained itself to master Go, chess and shogi, all without recourse to master games or advice. Now comes MuZero, which doesn't even need to be shown the rules of the game.

The new system tries first one action, then another, learning what the rules allow and at the same time noticing the rewards on offer: in chess, for delivering checkmate; in Pac-Man, for swallowing a yellow dot. It then alters its methods until it hits on a way to win such rewards more readily; that is, it improves its play. Such learning by trial and observation is ideal for any AI that faces problems that can't be specified easily. In the messy real world, far from the abstract purity of games, such problems abound.
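The try-an-action, notice-the-reward, adjust loop described above can be illustrated with a far simpler technique than MuZero's. What follows is a minimal sketch of tabular Q-learning, not DeepMind's method: the `Corridor` game, its five cells, and every parameter value are hypothetical choices made for illustration. The agent is never told which action moves it toward the goal; it finds out only from the rewards it receives.

```python
import random


class Corridor:
    """Hypothetical toy game: start in cell 0, earn a reward of 1 on reaching the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        # Action 1 moves right, action 0 moves left; the agent is never told this.
        move = 1 if action == 1 else -1
        self.pos = max(0, min(self.length - 1, self.pos + move))
        done = self.pos == self.length - 1
        reward = 1.0 if done else 0.0
        return self.pos, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by trial and error (tabular Q-learning)."""
    rng = random.Random(seed)
    env = Corridor()
    q = [[0.0, 0.0] for _ in range(env.length)]  # q[state][action]
    for _ in range(episodes):
        state = env.reset()
        done = False
        while not done:
            if rng.random() < epsilon:
                action = rng.randrange(2)  # explore: try something at random
            else:
                best = max(q[state])  # exploit: pick a best-known action, ties broken randomly
                action = rng.choice([a for a in range(2) if q[state][a] == best])
            next_state, reward, done = env.step(action)
            # Nudge the estimate toward the reward plus the discounted value of what follows.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Return the highest-valued action for each state."""
    return [max(range(2), key=lambda a: q[s][a]) for s in range(len(q))]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

After training, the policy picks action 1 (move right) in each of the four non-goal cells. The entry for the goal cell itself is never trained, because the episode ends there.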

“We’re exploring the application of MuZero to video compression, something that could not have been done with AlphaZero,” says Thomas Hubert, one of the dozen co-authors of the Nature article.

“It’s because it would be very expensive to do it with AlphaZero,” adds Julian Schrittwieser, another co-author.

Other applications under discussion are in self-driving cars (handled within Alphabet by its subsidiary Waymo) and in protein design, the next step beyond protein folding (which sister program AlphaFold recently mastered). Here the goal might be to design a protein-based pharmaceutical that must act on something that is itself an actor, say a virus or a receptor on a cell’s surface.

By simultaneously learning the rules and improving its play, MuZero outdoes its DeepMind predecessors in the economical use of data. In the Atari game of Ms. Pac-Man, when MuZero was limited to considering six or seven simulations per move (“a number too small to cover all the available actions,” as DeepMind notes in a statement), it still did quite well.
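How can a search with fewer simulations than available actions still choose sensibly? One common ingredient is a learned prior that tells the search where to look first. The sketch below is a heavily simplified, root-only variant of the prior-guided selection rule used in AlphaZero-style search, not MuZero's actual planner. The nine actions, their prior probabilities, the value estimates, and the constant `c_puct` are all hypothetical numbers chosen for illustration.

```python
import math


def prior_guided_search(priors, value_fn, num_simulations, c_puct=1.25):
    """Spend a small simulation budget on the actions that look most promising.

    priors: prior probability per action (in a real system, from a learned policy).
    value_fn: maps an action index to an estimated value (in a real system, from a learned model).
    Returns the visit count for each action.
    """
    n = len(priors)
    visits = [0] * n
    value_sum = [0.0] * n
    for _ in range(num_simulations):
        total = sum(visits)

        def score(a):
            # Mean value seen so far, plus an exploration bonus scaled by the prior.
            mean = value_sum[a] / visits[a] if visits[a] else 0.0
            bonus = c_puct * priors[a] * math.sqrt(total + 1) / (1 + visits[a])
            return mean + bonus

        action = max(range(n), key=score)
        visits[action] += 1
        value_sum[action] += value_fn(action)
    return visits


# Hypothetical example: nine actions, but a budget of only six simulations.
PRIORS = [0.40, 0.30, 0.10, 0.05, 0.05, 0.04, 0.03, 0.02, 0.01]
VALUES = [0.2, 0.8, 0.1, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

if __name__ == "__main__":
    counts = prior_guided_search(PRIORS, lambda a: VALUES[a], num_simulations=6)
    print(counts)
    print("chosen action:", counts.index(max(counts)))
```

With these made-up numbers, the six simulations are spent on just two of the nine actions, and the most-visited one is the action with the highest estimated value. The prior keeps the search from wasting its budget on moves that are unlikely to matter.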

The system takes a fair amount of computing muscle to train, but once trained, it needs so little processing to make its decisions that the entire operation might be managed on a smartphone. “And even the training isn’t so much,” says Schrittwieser. “An Atari game would take 2-3 weeks to train on a single GPU.”

One reason for the lean operation is that MuZero models only those aspects of its environment—in a game or in the world—that matter in the decision-making process. “After all, knowing an umbrella will keep you dry is more useful to know than modeling the pattern of raindrops in the air,” DeepMind notes, in a statement.

Knowing what’s important is important. Chess lore relates a story in which a famous grandmaster is asked how many moves ahead he looks. “Only one,” intones the champion, “but it is always the best.” That is, of course, an exaggeration, yet it holds a kernel of truth: Strong chess players generally examine lines of analysis that span only a few dozen positions, but they know at a glance which ones are worth looking at.

Children can learn a general pattern after exposure to a very few instances—inferring Niagara from a drop of water, as it were. This astounding power of generalization has intrigued psychologists for generations; the linguist Noam Chomsky once argued that children had to be hard-wired with the basics of grammar because otherwise the “poverty of the stimulus” would have made it impossible for them to learn how to talk. Now, though, this idea is coming into question; maybe children really do glean much from very little.

Perhaps machines, too, are in the early stages of learning how to learn in that fashion. Cue the shark music!

Philip E. Ross is a senior editor at IEEE Spectrum. His interests include transportation, energy storage, AI, and the economic aspects of technology. He has a master's degree in international affairs from Columbia University and another, in journalism, from the University of Michigan.