Driving your car until it breaks down on the road is never anyone’s favorite way to learn the need for routine maintenance. But preventive or scheduled maintenance checks often miss many of the problems that can arise. An Israeli startup has a better idea: use artificial intelligence to listen for early warning signs that a car might be nearing a breakdown.

3DSignals, a startup based in Kefar Sava, Israel, relies on the artificial intelligence technique known as deep learning to understand the noise patterns of troubled machines and predict problems in advance. The company has already begun talking with leading European automakers about using its deep learning service to detect trouble both in auto factory machinery and in the cars themselves. The startup has even chatted with companies about using its service to automatically detect problems in future taxi fleets of driverless cars.

“If you’re a passenger in a driverless taxi, you only care about getting to your destination and you’re not reporting maintenance problems,” says Yair Lavi, a co-founder and head of algorithms for 3DSignals. “So actually having the 3DSignals solution in autonomous taxis is very interesting to the owners of taxi fleets.”

Deep learning usually refers to software algorithms known as artificial neural networks. These neural networks can learn to become better at specific tasks by filtering relevant data through multiple (deep) layers of artificial neurons. Many companies such as Google and Facebook have used deep learning to develop AI systems that can swiftly find that one face in a million online images or do millions of Chinese to English translations per day.
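
To make the idea of "multiple layers" concrete, here is a minimal sketch of data passing through a small stack of artificial neurons. The layer sizes, weights, and input values are made up for illustration and have nothing to do with any company's actual models; in a real system, training would adjust the weights automatically rather than having them written by hand.

```python
import math


def dense(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Common activation: pass positive values through, clamp negatives to zero."""
    return [max(0.0, v) for v in values]


def sigmoid(value):
    """Squash a single number into the range (0, 1) so it reads like a score."""
    return 1.0 / (1.0 + math.exp(-value))


def forward(inputs, layers):
    """Feed the inputs through every hidden layer, then one output neuron."""
    activations = inputs
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    out_weights, out_biases = layers[-1]
    return sigmoid(dense(activations, out_weights, out_biases)[0])


# Hypothetical hand-picked weights: 3 inputs -> 2 neurons -> 2 neurons -> 1 output.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.1]),
    ([[0.7, -0.3]], [0.05]),
]

score = forward([0.2, 0.9, 0.4], layers)
print(f"output score: {score:.3f}")
```

Each layer transforms the output of the one before it, which is what the "deep" in deep learning refers to.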

Many tech giants have also applied deep learning to make their services better at automatically recognizing the spoken sounds of different human languages. But few companies have bothered with using deep learning to develop AI that’s good at listening to other acoustic signals such as the sounds of machines or music. That’s where 3DSignals hopes it can become a big player with its deep learning focus on more general sound patterns, Lavi explains.

“I think most of the world is occupied with deep learning on images. This is by far the most popular application and the most recent. But part of the industry is doing deep learning on acoustics focused on speech recognition and conversation. I think we are probably in the very small group of companies doing acoustics which is more general. This is my aim, to be the world leader in general acoustics deep learning.”

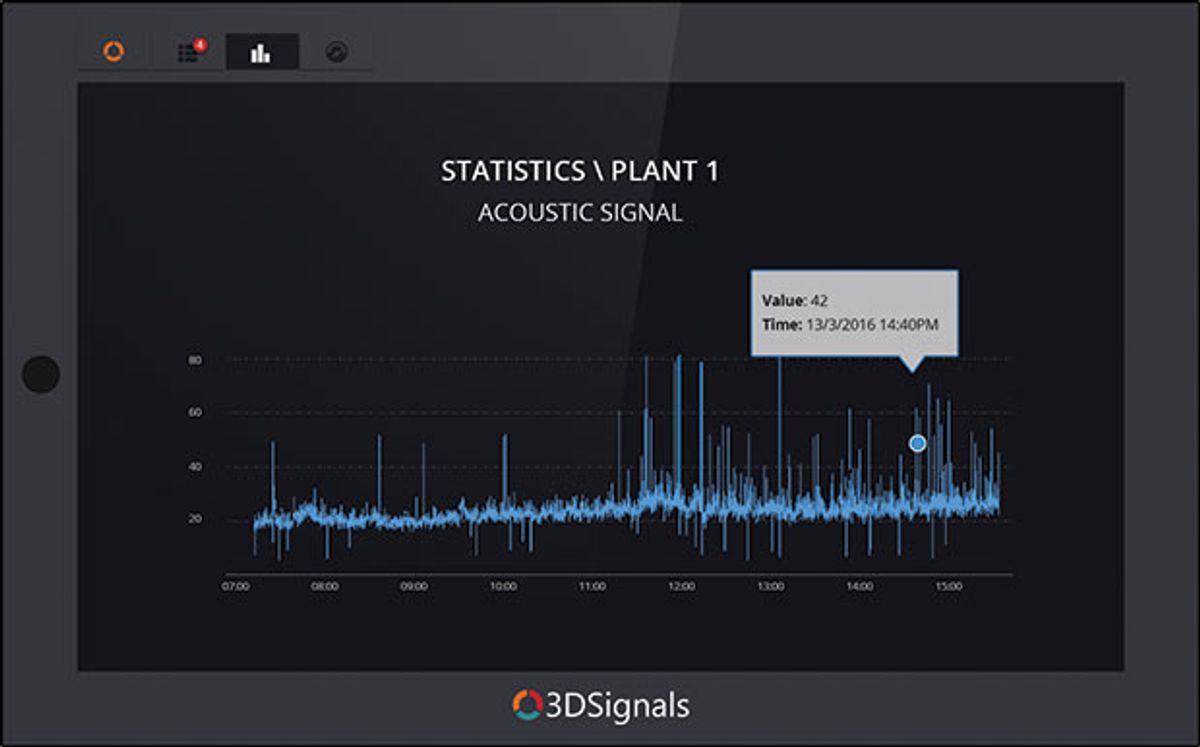

For each client, 3DSignals installs ultrasonic microphones that can detect sounds ranging up to 100 kilohertz (human hearing range is between 20 hertz and 20 kilohertz). The startup’s “Internet of Things” service connects the microphones to a computing device that can process some of the data and then upload the information to an online network where the deep learning algorithms do their work. Clients can always check the status of their machines by using any Web-connected device such as a smartphone or tablet.
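
A rough back-of-the-envelope calculation shows what capturing sound up to 100 kilohertz implies. By the Nyquist sampling criterion, a signal has to be sampled at roughly twice its highest frequency or more. The 16-bit sample depth and single channel below are assumptions made for illustration, not details reported by 3DSignals.

```python
def min_sample_rate_hz(max_freq_hz):
    """Nyquist criterion: sample at no less than about twice the highest frequency."""
    return 2.0 * max_freq_hz


def raw_data_rate_bytes_per_s(sample_rate_hz, bits_per_sample=16, channels=1):
    """Uncompressed audio data rate for the given sampling setup."""
    return sample_rate_hz * bits_per_sample / 8 * channels


mic_max_hz = 100_000      # upper limit quoted for the ultrasonic microphones
hearing_max_hz = 20_000   # upper limit of human hearing

ultrasonic_rate = min_sample_rate_hz(mic_max_hz)
audible_rate = min_sample_rate_hz(hearing_max_hz)

print(f"minimum sample rate for 100 kHz: {ultrasonic_rate:.0f} Hz")
print(f"minimum sample rate for 20 kHz:  {audible_rate:.0f} Hz")
print(f"raw data per microphone: {raw_data_rate_bytes_per_s(ultrasonic_rate):.0f} bytes/s")
```

Under these assumptions, each microphone would produce about 400 kilobytes of raw audio every second, five times what the audible range alone would need.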

The first clients for 3DSignals include heavy industry companies operating machinery such as circular cutting blades in mills or hydroelectric turbines in power plants. These companies started out by purchasing the first tier of the 3DSignals service that does not use deep learning. Instead, this first tier of service uses software that relies on basic physics modeling of certain machine parts—such as circular cutting saws—to predict when some parts may start to wear out. That allows the clients to begin getting value from day one.

The second tier of the service uses a deep learning algorithm and the sounds coming from the microphones to help detect strange or unusual noises from the machines. The deep learning algorithms train on sound patterns that can signal general problems with the machines. But only the third tier of the service, also using deep learning, can classify the sounds as indicating specific types of problems. Before this can happen, though, the clients need to help train the deep learning algorithm by first labeling certain sound patterns as belonging to specific types of problems.
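
The difference between the second and third tiers can be sketched in a few lines. This is not 3DSignals' method and it does not use deep learning: it swaps in simple statistics (a loudness measure, a z-score threshold, and a nearest-average lookup) purely to show the contrast between flagging "something sounds unusual" and naming a specific problem from labeled examples. The synthetic sine-wave frames, the threshold of 3.0, and the labels `problem_type_A` and `problem_type_B` are all hypothetical.

```python
import math
import statistics


def tone(amplitude, samples=64, period=32):
    """Synthetic stand-in for one frame of microphone audio (a pure sine wave)."""
    return [amplitude * math.sin(2 * math.pi * k / period) for k in range(samples)]


def rms(frame):
    """Root-mean-square level of a frame: a crude one-number summary of loudness."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def fit_baseline(healthy_frames):
    """Tier-two style: learn what 'normal' looks like from unlabeled healthy audio."""
    levels = [rms(f) for f in healthy_frames]
    return statistics.mean(levels), statistics.stdev(levels)


def is_anomalous(frame, baseline, z_threshold=3.0):
    """Flag a frame whose level is far from the healthy baseline."""
    mean, std = baseline
    if std == 0:
        return rms(frame) != mean
    return abs(rms(frame) - mean) / std > z_threshold


def fit_labeled(examples):
    """Tier-three style: average level per label, from human-labeled frames."""
    return {label: statistics.mean(rms(f) for f in frames)
            for label, frames in examples.items()}


def classify(frame, centroids):
    """Pick the label whose average level is closest to this frame's level."""
    level = rms(frame)
    return min(centroids, key=lambda label: abs(centroids[label] - level))


healthy = [tone(a) for a in (1.0, 1.1, 0.9, 1.05, 0.95)]
baseline = fit_baseline(healthy)
print("normal frame flagged:", is_anomalous(tone(1.02), baseline))
print("loud frame flagged:  ", is_anomalous(tone(2.0), baseline))

labeled = {
    "problem_type_A": [tone(2.0), tone(2.2)],
    "problem_type_B": [tone(4.0), tone(4.4)],
}
centroids = fit_labeled(labeled)
print("classified as:", classify(tone(2.1), centroids))
```

The first half needs only recordings of a healthy machine, while the second half cannot work until someone has labeled examples of each problem, which is the step clients must complete before the third tier becomes useful.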

“After a while, we can not only say when problem type A happens, but we can say before it happens, you’re going to have problem type A in five hours,” Lavi says. “Some problems don’t happen instantly; there’s a deterioration.”

When trained, the 3DSignals deep learning algorithms are able to predict specific problems in advance with 98 percent accuracy. But the current clients using the 3DSignals system have not yet begun taking advantage of this classification capability; they are still building their training datasets by having people manually label specific sound signatures as belonging to specific problems.

The one-year-old startup has just 15 employees, but it has grown fairly fast and raised $3.3 million so far from investors such as Dov Moran, the Israeli entrepreneur credited with being one of the first to invent USB flash drives. Lavi and his fellow co-founders are already eying several big markets that include automobiles and the energy sector beyond hydroelectric power plants. A series A funding round to attract venture capital is planned for sometime in 2017.

If all goes well, 3DSignals could expand its lead in the growing market for providing “predictive maintenance” to factories, power plants, and car owners. The impending arrival of driverless cars may put even more responsibility on the metaphorical shoulders of a deep learning AI that could listen for problems while the human passengers tune out from the driving experience. On top of all this, 3DSignals has the chance to pioneer the advancement of deep learning in listening to general sounds. Not bad for a small startup.

“It’s important for us to be specialists in general acoustic deep learning, because the research literature does not cover it,” Lavi says.

Jeremy Hsu has been working as a science and technology journalist in New York City since 2008. He has written on subjects as diverse as supercomputing and wearable electronics for IEEE Spectrum. When he’s not trying to wrap his head around the latest quantum computing news for Spectrum, he also contributes to a variety of publications such as Scientific American, Discover, Popular Science, and others. He is a graduate of New York University’s Science, Health & Environmental Reporting Program.