It's difficult to find an area of scientific research where deep learning isn't discussed as the next big thing. Claims abound: deep learning will spot cancers; it will unravel complex protein structures; it will reveal new exoplanets in previously-analyzed data; it will even discover a theory of everything. Knowing what's real and what's just hype isn't always easy.

One promising, perhaps even overlooked, area of research in which deep learning is likely to make its mark is microscopy. In spite of new discoveries, the underlying workflow of techniques such as scanning probe microscopy (SPM) and scanning transmission electron microscopy (STEM) has remained largely unchanged for decades. Skilled human operators must painstakingly set up, observe, and analyze samples. Deep learning has the potential not only to automate many of the tedious tasks, but also to dramatically speed up analysis by homing in on microscopic features of interest.

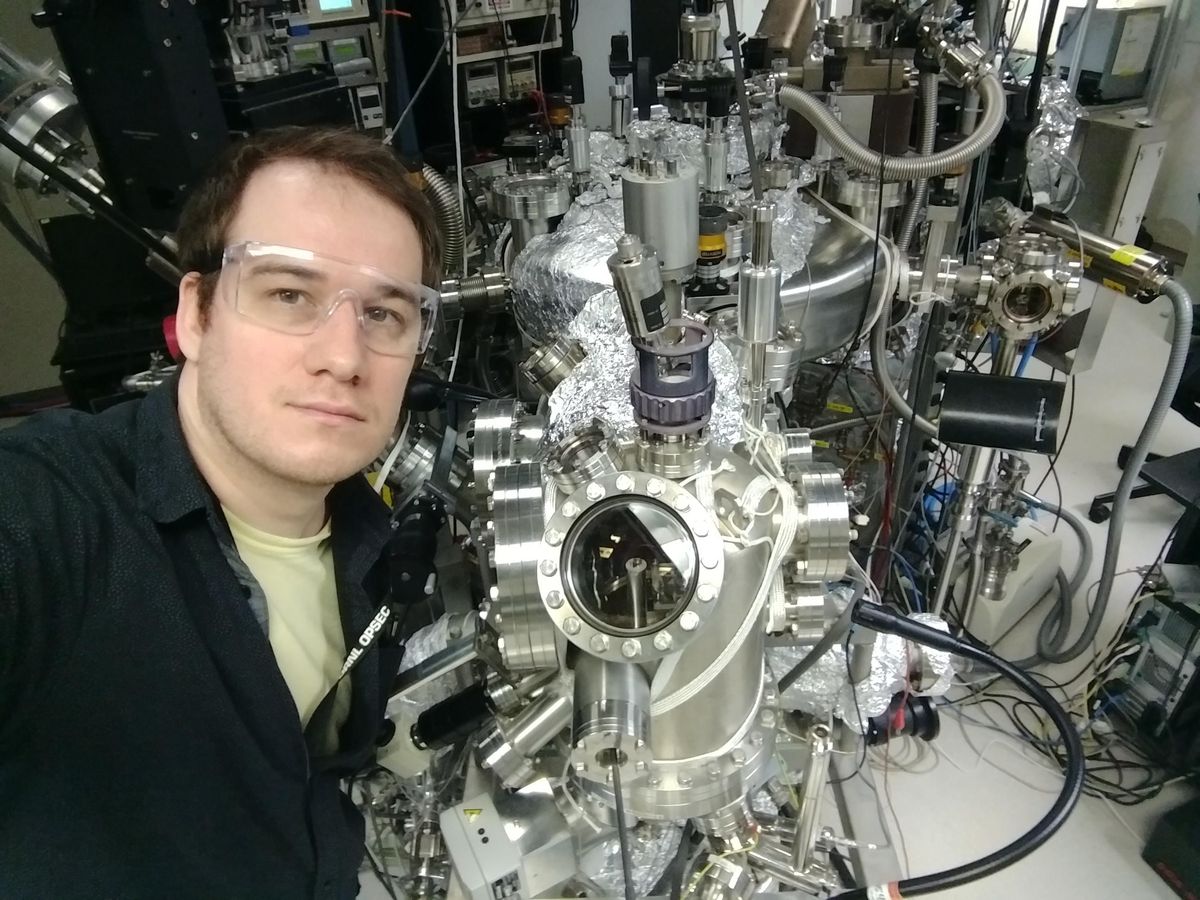

"People usually just look at the image and they identify a few properties of interest," says Maxim Ziatdinov, a researcher at Oak Ridge National Lab in Tennessee. "They basically discard most of the information, because there is just no way to actually extract all the features of interest from the data." With deep learning, Ziatdinov says that it's possible to extract information about the position and type of atomic structures (that would otherwise escape notice) in seconds, opening up a vista of possibilities.

It's a twist on the classical dream of doing more with smaller things (most famously expressed in Richard Feynman's "There's Plenty of Room at the Bottom"). Instead of using hardware to improve the resolution of microscopes, software could expand their role in the lab by making them autonomous. "Such a machine will 'understand' what it is looking at and automatically document features of interest," an article in the Materials Research Society Bulletin declares. "The microscope will know what various features look like by referencing databases, or can be shown examples on-the-fly."

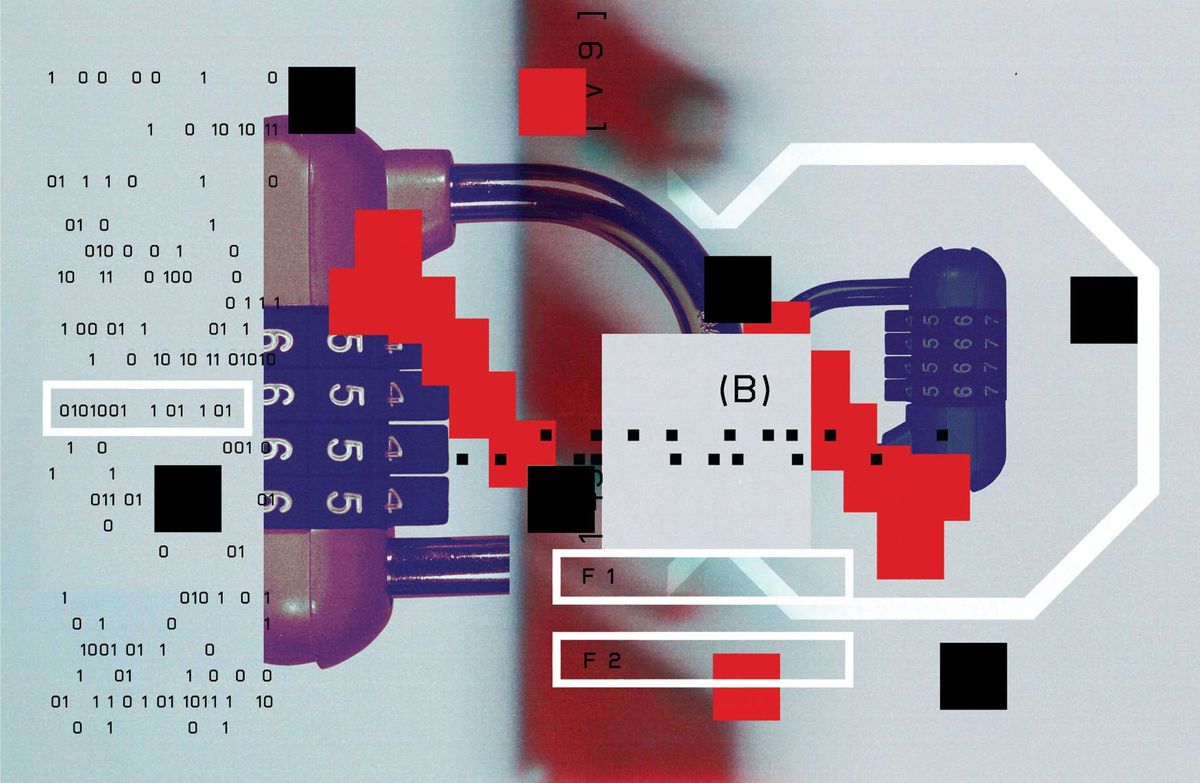

Despite the micro- prefix, microscopy techniques such as SPM and STEM actually deal with objects on the nanoscale, including individual atoms. In SPM, a nanoscale tip hovers over the sample's surface and, like a record player, traces its grooves. The result is a visual image instead of an audio signal. STEM, on the other hand, generates an image by showering a sample with electrons and collecting those that pass through, essentially creating a negative.

Both microscopy techniques allow researchers to quickly observe the broad structural features of a sample. Researchers like Ziatdinov are interested in the functional properties of certain features such as defects. By applying a stimulus like an electric field to a sample, they can measure how it responds. They can also use the sample's reactions to the applied stimuli to build a functional map of the sample.

But collecting functional data takes time. Zooming in on a structural image to collect functional data is prohibitively slow, and human operators have to make educated guesses about which features to analyze. There hasn't been a rigorous way to predict functionality from structure, so operators have simply had to develop a knack for picking good features. In other words, the cutting edge of microscopy is just as much art as it is science.

The hope is that this tedious feature-picking can be outsourced to a neural network that predicts features of interest and navigates to them, dramatically speeding up the process.
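To make the idea concrete, here is a minimal sketch of automated feature-picking. It is not the researchers' method: the `contrast_score` function is a hypothetical stand-in for a trained neural network, the image is synthetic, and the names `pick_targets`, `patch`, and `k` are invented for illustration. The sketch scores each tile of a structural image and returns the locations a microscope might visit first.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]


def contrast_score(patch: Image) -> float:
    """Hypothetical stand-in for a trained network: score a patch by its intensity range."""
    values = [v for row in patch for v in row]
    return max(values) - min(values)


def pick_targets(
    image: Image,
    patch: int,
    k: int,
    score: Callable[[Image], float] = contrast_score,
) -> List[Tuple[int, int]]:
    """Return the top-left (row, col) of the k highest-scoring non-overlapping patches."""
    scored = []
    for r in range(0, len(image) - patch + 1, patch):
        for c in range(0, len(image[0]) - patch + 1, patch):
            window = [row[c:c + patch] for row in image[r:r + patch]]
            scored.append((score(window), (r, c)))
    # Highest score first; these are the spots worth a slow functional measurement.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [pos for _, pos in scored[:k]]


# Synthetic 4x4 "structural image": flat background with one bright spot (a mock defect).
image = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.9],
    [0.1, 0.1, 0.1, 0.1],
]
targets = pick_targets(image, patch=2, k=1)
print(targets)
```

In a real system the scoring function would be a model trained on labeled microscopy data, and the returned coordinates would be handed to the instrument's stage or beam controller rather than printed.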

Automated microscopy is still at the proof-of-concept stage, with a few groups of researchers around the world hammering out the principles and doing preliminary tests. Unlike many areas of deep learning, success here would not be simply automating preexisting measurements; with automation, researchers could make measurements that have heretofore been impossible.

Ziatdinov and his colleagues have already made some progress toward such a future. For years, they sat on microscopy data that would reveal details about graphene—a few frames that showed a defect creating strain in the atomically thin material. "We couldn't analyze it, because there's just no way that you can extract positions of all the atoms," Ziatdinov says. But by training a neural net on the graphene, they were able to categorize newly recognized structures on the edges of defects.

Microscopy isn't limited to observing. By blasting samples with a high-energy electron beam, researchers can shift the position of atoms, effectively creating an "atomic forge." As with a conventional bellows-and-iron forge, automation could make things a lot easier. An atomic forge guided by deep learning could spot defects and fix them, or nudge atoms into place to form intricate structures, all around the clock and without human error, sweat, or tears.

"If you actually want to have a manufacturing capability, just like with any other kind of manufacturing, you need to be able to automate it," Ziatdinov says.

Ziatdinov is particularly interested in applying automated microscopy to quantum devices, like topological qubits. Efforts to reliably create these qubits have not proven successful, but Ziatdinov thinks he might have the answer. By training a neural network to understand the functions associated with specific features, deep learning could unlock which atomic tweaks are needed to create a topological qubit—something humans clearly haven't quite figured out.

Benchmarking exactly how far we are from a future where autonomous microscopy helps build quantum devices isn't easy. There are few human operators in the entire world, so it's difficult to compare deep learning results to a human average. It's also unclear which obstacles will pose the biggest problems moving forward in a domain where the difference of a few atoms can be decisive.

Researchers are also applying deep learning to microscopy on other scales. Confocal microscopy, which operates at a scale thousands of times larger than SPM and STEM, is an essential technique that gives biologists a window into cells. By integrating new hardware with deep learning software, a team at the Marine Biological Laboratory in Woods Hole, Mass., dramatically improved the resolution of images taken from a variety of samples such as cardiac tissue in mice and cells in fruit fly wings. Critically, deep learning allowed the researchers to use much less light for imaging, reducing damage to the samples.

A recent review of the prospects for autonomous microscopy concluded that it "will enable fundamentally new opportunities and paradigms for scientific discovery." But it came with the caveat that "this process is likely to be highly nontrivial." Whether deep learning lives up to its promise on the microscopic frontier remains, literally, to be seen.

This article appears in the January 2022 print issue as "Navigating the Nanoscale."

Dan Garisto is a freelance science journalist who covers physics and other physical sciences. His work has appeared in Scientific American, Physics, Symmetry, Undark, and other outlets.