To Crack the Toughest Optimization Problems, Just Add Lasers

An odd device known as an optical Ising machine could route airplanes and help the NFL schedule its games

Last December, a glitch in the crew-scheduling system for American Airlines threatened to disrupt thousands of flights over the holiday season. The error allowed pilots to drop flights without requiring another pilot to cover for them, imperiling as many as 15,000 trips. And while the airline managed to spot the problem and staff the flights, the snafu was a reminder of how much we depend on computers to schedule the vast array of services and functions on which our societies have become completely dependent.

All major airlines, for example, use sophisticated scheduling-optimization algorithms to assign crews to planes. And while the American Airlines incident was not caused directly by the failure of one of these algorithms, the end result was much the same. Such a failure would make it likely that hundreds of thousands of people would be stranded or seriously inconvenienced while the airline sought a solution.

It is a triumph of algorithmic science and of Moore’s Law that many complicated optimization problems can be tackled, including ones in transportation, logistics, and scheduling. Much of the modern world would be crippled without these algorithms: The 50,000 cargo ships delivering goods, the 25,000 terawatt-hours of electricity produced, and the 1 zettabyte of Internet traffic routed annually—to name just a few examples—would be used far less efficiently. However, organizations often work with less-than-optimal solutions due to tight deadlines and available computing resources. What’s more, there is still a lot of room for improvement in the methods we use to solve a large fraction of optimization problems.

Given the importance of optimization, and the fact that the era of steady, large improvements in computer-processor performance appears to be coming to a close, researchers have begun to explore how machines specially designed for optimization might deliver significant improvements in our ability to conquer complex problems.

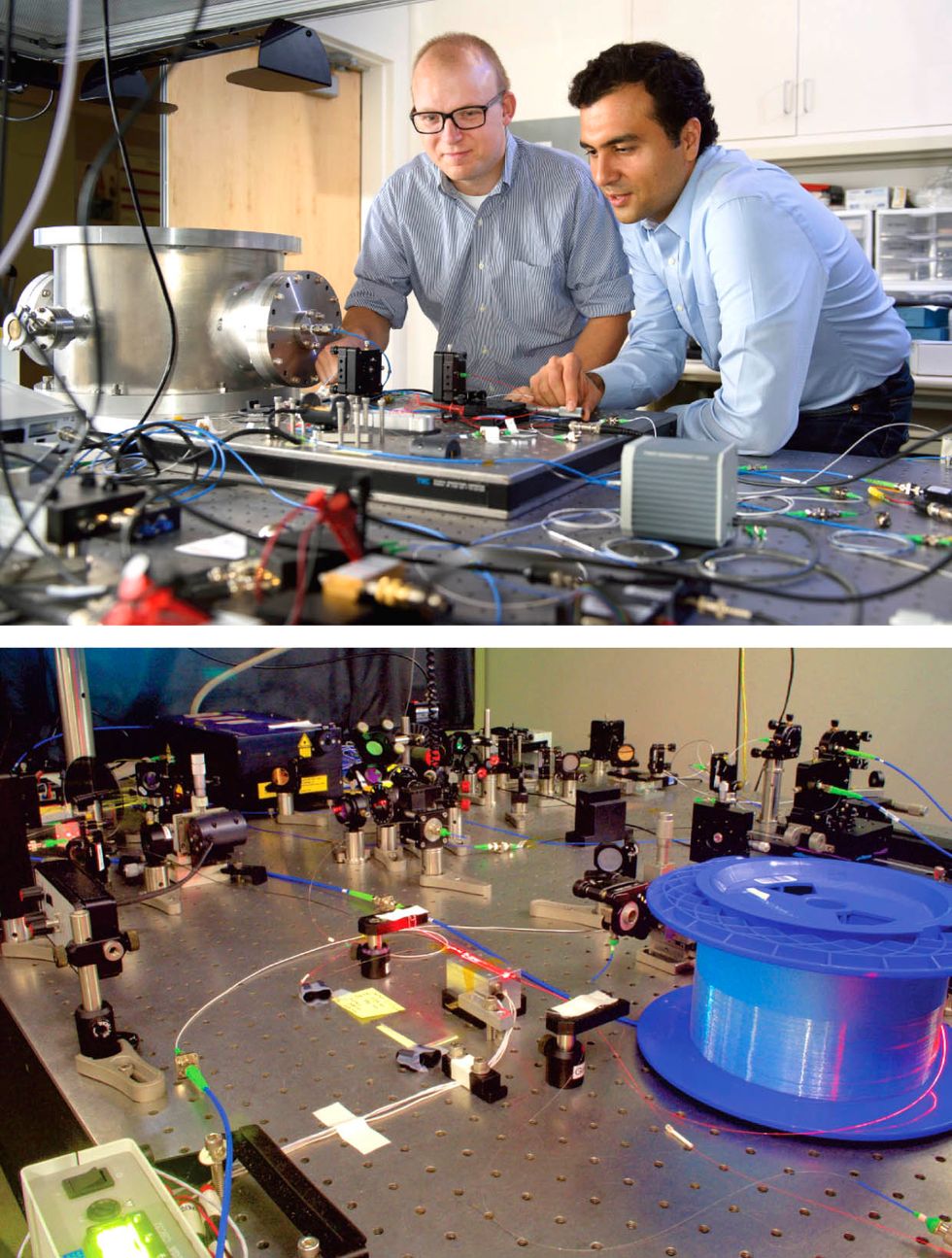

One promising approach is to develop optical machines for optimization. A group at Stanford University (of which I am a member) led by Yoshihisa Yamamoto launched this research seven years ago, and it is now being pursued by several academic groups, as well as researchers at Hewlett Packard Labs and NTT Basic Research Laboratories. After years of work, there’s growing confidence that at least one of these research groups will someday be able to build a machine that could help us tackle some of the most complex optimization problems demanded by modern industries.

Recall the classic traveling salesman problem, in which the salesman moves from city to city to sell his wares. He wants to avoid wasting time and spending extra money on gasoline. This is an optimization problem in which the goal is to find the shortest route for the salesman to take, given that he wants to visit each location only once, and at the end of his trip he wants to return to the city where he started his tour.

For five cities, it’s easy. You can solve the problem by considering all 12 relevant paths. But if our hardworking salesman plans to visit 50 cities, then the brute-force approach of considering all possible paths is overwhelming, because there are more than 1 novemdecillion paths—that’s a 1 with 60 zeros following it.

Algorithms that make use of a variety of shortcuts and take advantage of reasonable approximations can produce useful solutions to this problem. But even the best of those algorithms can bog down a powerful computer. In a recent example, an effort at the University of Waterloo, in Canada, to find the shortest path between almost 50,000 sites in the U.S. National Register of Historic Places—and to prove that its answer was correct—used 310 powerful processors running around the clock for nine months.

Optimization encompasses far more than the traveling salesman problem. Scheduling is another difficult optimization challenge. For example, the National Football League (NFL) in the United States has to schedule several hundred games each year while attempting to abide by thousands of rules, such as one that restricts teams from playing more than three consecutive away games. To solve the problem in 2017, the NFL relied on a cluster of nearly 400 computers.

Ising Optimization

Elsewhere, manufacturing facilities need to schedule maintenance work on machines. Universities need to schedule classes in classrooms. Postal services need to plan delivery routes. Large cities, such as Beijing and Tokyo, would love to be able to efficiently route the millions of cars that try to navigate their streets during rush hour. These problems can involve hundreds or thousands of events that need to be scheduled, and in many cases practical solutions are still elusive because they require too much time or too many computers.

Researchers have been trying to build special-purpose machines to solve optimization problems for years. In the mid-1980s, David Tank, then at AT&T Bell Laboratories, and John Hopfield, who then held dual appointments at AT&T Bell Labs and Caltech, proposed using analog electronic circuits representing neural networks to solve optimization problems like the traveling salesman problem. Their paper stimulated a decade of research in that direction. Then, in 1994, Leonard Adleman, at the University of Southern California, discovered that DNA could, in theory, be used to solve the same kinds of problems. His insight sparked a similar spate of research. However, none of these efforts to develop radically new and effective approaches to solving optimization problems resulted in a practical alternative to conventional computers and techniques, which remain the dominant methods today.

The efforts, at Stanford and elsewhere, to build special-purpose optical machines to solve optimization problems target one particular such problem, called Ising optimization. It is named for the late physicist Ernst Ising, who is known for his work on a model of magnetic moments and how it explains transitions between different magnetic states. It turns out that many common optimization problems, including scheduling and route-finding problems, can be easily converted into Ising optimization problems.

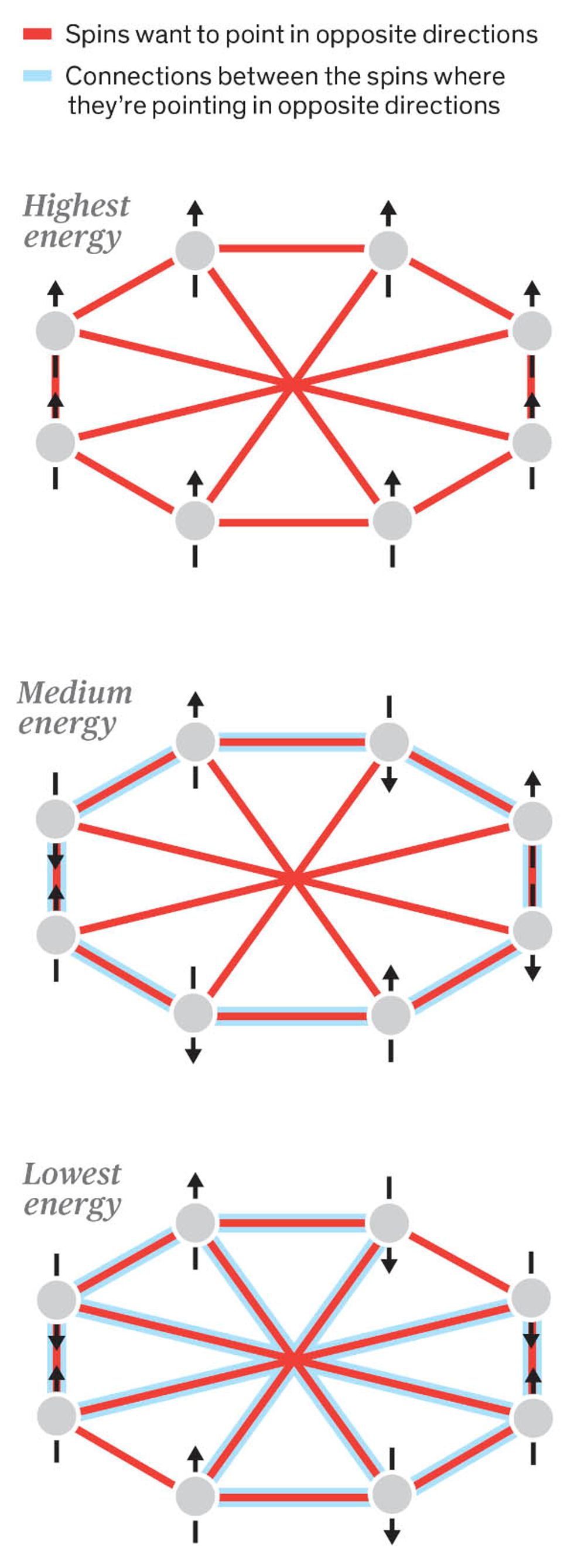

To understand how the Ising model is related to optimization, you have to start with its use by physicists to understand magnetism. Consider a regular bar magnet. Using the Ising model, you can think of the bar magnet as a grid of atoms in three dimensions, in which each atom itself acts as a tiny bar magnet. The electrons of each atom have a property called spin. The spins of the valence electrons—that is, the outermost electrons—point either up or down, by convention. The direction of these spins determines whether a material is magnetized or not. If all the spins point up, the material is magnetized. If they all point down, the material is also magnetized, but with the opposite polarity. If the spins are a mix of up and down, the material is not magnetized.

These spins also interact with one another. In a bar magnet, two neighboring electrons have a total “combined energy” that is lower if their spins are aligned—that is, if they both point either up or down. Conversely, their sum total energy is higher if their spins are pointing in opposite directions.

An Optical Ising Machine

In the Ising model, you add up the energy from the interactions between the spins of every pair of electrons in a collection of atoms. Because the amount of energy depends on whether spins are aligned or not, the total energy of the collection depends on the direction in which each spin in the system points. The general Ising optimization problem, then, is determining in which state the spins should be so that the total energy of the system is minimized.

In the simplest model, only neighboring spins are assumed to interact. But in the general Ising optimization problem, any spin could interact with any other spin, regardless of their distance from one another, and the sign and strength of that interaction could be unique to each pair of spins. When the problem is this general, it is very difficult to solve—as difficult as solving the routing problem of a salesman visiting hundreds of thousands of potential buyers. If we can find a way to solve Ising optimization problems quickly, and if we find a way to think about the traveling salesman and similar problems in the same way we think about Ising problems, we might be able to solve those problems faster too. The lowest energy state of the system in the Ising problem will represent the fastest route between cities, the most efficient solution to packing a cargo vessel, or whatever other optimization we might need.

So how do you actually converta traveling salesman’s route to spins? The main task is mapping: We need to convert our optimization problem into a form that can be solved by a machine designed to solve Ising optimization problems. The first thing you have to do is map the original optimization problem—finding a route for a vacuum salesman, for example—onto a representative set of spins and define how the spins influence each other. Thanks to research done over the past several decades by both computer scientists and operations researchers, the mappings of many different optimization problems to Ising forms are generally known.

However, individual atoms and their electron spins are difficult to work with, so our efforts have been focused on building a machine that implements the Ising model using pulses of light in place of electron spins. The Ising problem is mapped onto the pulses and the interactions between them. The result is assessed in terms of the problem’s total energy, with the lowest energy state as the optimal solution. Then this solution is translated into what it means for the original problem, such as the shortest route for our hardworking salesman.

Key to our prototype system’s ability to map a spin onto a pulse of light is an optical parametric oscillator (OPO), a device similar to a laser. An OPO, unlike a conventional laser, produces light that is either exactly in or exactly out of phase with respect to some reference light. That’s precisely what we need to represent the binary spin states, up and down. We can represent “spin up” as the condition in which the light from the OPO is in phase with the reference light and, conversely, “spin down” if it is out of phase.

There’s more than one way to construct an Ising machine using OPOs. Groups at NTT, Caltech, Cornell, and Columbia, among others, are exploring different approaches. But the prototype Ising machine that was first demonstrated at Stanford in an experiment led by Alireza Marandi (now at Caltech), used a technique that we continue to work with: time-division-multiplexed OPOs with optical coupling.

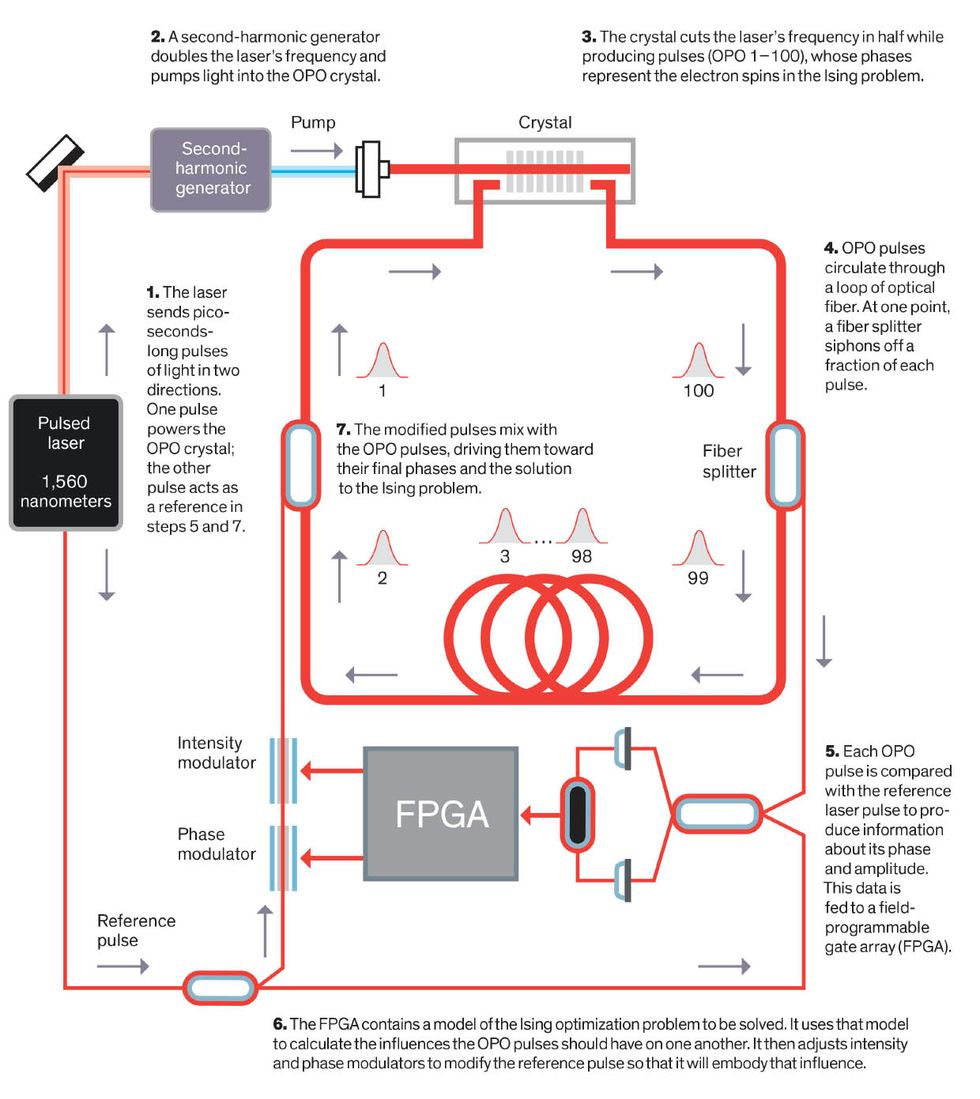

That’s quite a mouthful, so let’s unpack it. We start with a pulsed laser source. The source sends simultaneous, picoseconds-long pulses of light in two directions. The first will become a reference pulse, which itself splits to follow two separate branches and will be important to our explanation later.

The second is used as a source of energy for the OPO; it stimulates a crystal in the OPO to emit pulses of photons. Each OPO pulse is injected into a spooled loop of optical fiber that is typically at least a few hundred meters long, depending on how many pulses we want to work with. Hundreds or even thousands of OPO pulses can be loaded into this fiber ring to chase each other around and around, passing again and again through the crystal.

The phases of these OPO pulses will ultimately act as the spins in the Ising model. But when they are first created, before they’ve made multiple transits around the loop, they are of such low intensity that their phases are not well defined. It’s the way we force the pulses to interact that will ultimately give them their final phases and the solution to our Ising problem.

Remember the reference light from earlier? At one point in the loop, a fiber splitter siphons off a small fraction of each pulse, and it is compared with the reference pulse in what’s called a homodyne detector. That detector outputs a voltage that contains information about the pulse’s phase and amplitude. That signal is digitized and fed into a field-programmable gate array (FPGA). It’s here that the Ising problem itself is represented.

Recall that solving the Ising problem means finding the lowest energy state of a collection of spins, in which the spins have different interactions with one another, and these interactions contribute different energies to the total energy of the system. In the OPO, each pulse represents a spin. So for each pulse—and we’ve used 100 in our setup—the FPGA performs a calculation that involves the recorded measurements of all the other pulses that, according to the Ising problem, should influence the spin in question. The processor then applies that calculation to the settings of an intensity modulator and a phase modulator that sit in the path of one branch of the reference pulse. The newly modified reference pulse is then fed into the optical-fiber ring where the OPO pulses are zipping past.

The timing is crucial—we want to ensure that the modified reference pulse will combine with the correct OPO pulse. Get it right and the two pulses mix. Based on whether the two pulses are in phase or not, the fed-in pulse pushes that OPO pulse toward representing either a spin-up or a spin-down electron.

We repeat the whole process for each OPO pulse in the loop, and it can take tens to hundreds of trips around the loop for all the pulses to achieve their final phase states. Once that’s done, a separate computer reads off the set of phases, interprets them as either spin-up or spin-down electrons in the Ising problem, and then translates that into a meaningful solution to the original optimization problem you wanted to solve.

In our experiments, we first constructedfour-spin and then, later, 16-spin time-division-multiplexed OPO systems. These were essentially hardwired, encoding the problem as a set of optical-fiber branches of a particular length. We successfully found minimum-energy states in those experiments, and that motivated us to pursue the approach further. In 2016, we constructed a machine with FPGA-based feedback that can solve Ising problems with 100 spins. Further benchmarking against other specialized systems, including a “quantum annealer” at NASA, has given us confidence that OPO Ising machines can be effective optimizers.

The results have been encouraging, but we have much to learn before we can even say whether this optical approach will ever be able to beat a conventional processor in solving optimization problems of a practical nature. It’s even possible that the machine’s problem-solving capabilities could be improved by utilizing quantum states of light, which are difficult to simulate. We’re still only beginning to address many of these questions, and we expect to explore an exciting interplay between theory and experiment over the next several years as we develop this new type of computational machine.

This article appears in the December 2018 print issue as “To Solve Optimization Problems, Just Add Lasers.”

About the Author

Peter McMahon is a postdoctoral research fellow at Stanford University and will become an assistant professor of applied and engineering physics at Cornell University in July 2019.