Sultan of Sound

Voice mail, speech recognition, the artificial larynx, packet-switched voice—these commonplace applications build on the pioneering research of James L. Flanagan

The challenge was clear. Take the telegraph line that ran across the bottom of the Atlantic Ocean, carrying simple pulses of electricity over its narrow 300-hertz bandwidth, and use it to transmit voice conversations. The problem was, it was 1952, and the technology was rudimentary. Bandwidth compression of voice signals, called vocoding, had been done during World War II for radio transmission of speech—but the quality of the resulting speech was inadequate.

It was up to James L. Flanagan, then a graduate student at the Massachusetts Institute of Technology, in Cambridge, to come up with a better idea. He dismissed the then-current vocoding technology, which took 10 different frequencies of the voice signal and converted their varying amplitudes into analog signals for transmission. Instead, he went back to the fundamentals: how the elements of the voice are created and resonate within the human vocal tract. For different elements, resonances peak at different points in the spectrum; the frequencies of these points are called formant frequencies.

In human speech, the frequencies of formants depend on the shape and motion of the articulators—mouth, jaw, tongue, and lips. Different combinations of these shapes and motions create many different formants. Flanagan chose three of those. Then, by coding the variations in frequency for each formant, he supplied a more efficient means of representing speech than the technique used earlier, which coded the amplitudes of multiple signals at fixed positions of frequency. Although this formant coding was never implemented on those telegraph cables, it did set the stage for later advances in bandwidth conservation.

He also did experiments to determine how accurately the ear detects errors in such coding, establishing the engineering criteria for his own and future speech coding technology. In fact, a short letter to the editor of the Journal of the Acoustical Society of America (May 1955) describing these experiments is still his most frequently requested publication.

Flanagan’s work with speech coding heralded a series of advances over the years, including a currently favored technique, linear predictive coding. In this technique, the value of a speech signal at each sample point is predicted by a combination of past samples. This type of coding is used in low-bandwidth speech communications today—in cellphones, voice mail systems, and computer-generated speech. Later ideas championed by Flanagan contributed to the development of modern automatic speech-recognition systems, audio codecs like MP3, and today’s voice over IP technology.

“Wayne Gretzky once said he played hockey well because he doesn’t go to where the puck is; he skates to where it is going to be,” said Rich Cox, the vice president who directs the IP and Voice Services Research Lab for AT&T Labs Research in Florham Park, N.J. “That’s Jim. He could see where things were going to go,” even if it would take decades to get there.

For this—his “sustained leadership and outstanding contributions in speech technology”—Flanagan is being honored with the 2005 IEEE Medal of Honor.

Flanagan never expected to be an electrical engineer. He grew up on a cotton farm in Mississippi and figured he’d stay there. In fact, when offered a choice between a typing course and a physics course in high school, he selected physics only because the encyclopedia definition, “the study of natural phenomena and how to use them,” implied that physics could be useful in cotton farming.

This pioneer in voice communications didn’t even have a telephone—or electricity—during most of his childhood. The farm was too far from any town to be on the grid until the Communications Act of 1934 and the Rural Electrification Act of 1936 extended phone and electricity networks into rural America.

Getting electricity at about age 12 was, for Flanagan, like getting a new toy. He read about Guglielmo Marconi, got some instruction manuals, and built a spark-gap transmitter, using a spark coil from a Model T. He also made arc lamps, wired an induction coil to a doorknob to shock his brother, and built a telephone system, pirating the carbon button microphone from his home telephone, since he couldn’t afford to buy that expensive part.

In the summer of 1943, Flanagan took engineering classes at Mississippi State University, Starkville, biding his time until he turned 18 and could join the U.S. Army, where he hoped to become a pilot. A mild deficiency in color vision got him booted out of the pilot training program and reassigned to communications. As part of that group, he was trained to maintain and operate the first radar-based air traffic control systems, a job that gave him his first exposure to nonlinear circuits and precision engineering.

In 1946 he went back to Mississippi State, turning down the Army’s offer—an 18-month stint testing nuclear bombs on Bikini Atoll. This time he entered the university as an electrical engineering major, determined to learn how to design the type of systems he had been working with in the Army. After graduating from Mississippi State, he accepted a graduate assistantship in MIT’s acoustics lab, which led to his seminal research in voice coding.

And that led to a job, in 1957, at what was then called Bell Telephone Laboratories, in Murray Hill, N.J., where Flanagan would spend the next 33 years. “I hired him because of his interest in speech and hearing—and because he was a prize student,” recalled Edward E. David Jr., then executive director at Bell Laboratories and later science advisor to President Richard M. Nixon. “He brought a new look to the whole area of communications.”

At Bell Labs in the late 1950s, Flanagan continued to research formant-based vocoders. His first technical assistant was Bernie Watson—a name that couldn’t help appealing to Flanagan’s puckish sense of humor.

“He often found a reason to say, ‘Mr. Watson, come here, I want to see you,’” says David.

After just three years, Flanagan was made a department head (although throughout his career he has spent about 50 percent of his time doing his own research). He and his team worked on an artificial larynx, eventually getting more than 30 000 of the devices out to people who needed them.

In the 1960s, digital computers began changing how research was done, and speech research was no exception. Flanagan and his group started using an IBM 650 mainframe computer, intending to simulate a telephone transmission system that would enable them to test future advances without building hardware. That sounds commonplace today, but when Flanagan began this work, such simple components as analog-to-digital (A-to-D) converters did not exist. There was no way to get real-time analog signals into a digital computer.

Instead, Flanagan and his group would use a photograph of an oscillogram and measure the various amplitudes of the signal. Those numbers were then recorded on punch cards—with a huge stack representing a few seconds of speech—and fed into the computer. The output would be a plot of processed waveforms.

This direction of research, Flanagan says, excitement in his vibrant blue eyes, “opened up the field of signal processing, which had not even existed before 1965 or thereabouts—and now it’s an IEEE society,” the IEEE Signal Processing Society.

Making these physical measurements of waveforms to feed into the computer gave Flanagan other ideas. Instead of measuring the waveforms as generated by an oscilloscope, why not measure the motion of the vocal cords themselves? Working with high-speed motion pictures taken of the vocal cords via a dental mirror, he measured the vibration and area of those cords and used these data to compute spectral characteristics of the vocal source.

The analysis supported new computer simulations of the interaction between vocal cords and vocal tracts, using physiological factors to form natural speech synthesis, an early example of what we now call model-based coding. “People don’t use this [type of simulation] as a basis for a voice synthesizer yet,” he says, but he thinks they eventually will. “It’s a frontier challenge.”

By the 1970s, Flanagan was still working on efficient transmission of speech, but now it was in a digital world. Again, he turned conventional methodologies upside down—or at least sideways. Speech was being sent digitally through the telephone transmission system by a method called pulse-code modulation, or PCM. A PCM encoder samples signals at regular intervals and represents the various amplitudes with binary numbers. Flanagan developed a version of PCM that, instead of recording the amplitudes themselves, encodes differences between successive samples for transmission or storage, adapting the algorithm depending on characteristics of the input signal.

This adaptive differential PCM immediately doubled the efficiency of conventional digital telephone transmission. It enabled digital telephone channels that had required 64 kilobits per second of bandwidth to run at 32 kb/s. A further advance in this direction—coding in sub-bands—reduced the rate to 16 kb/s and led to the first Audix voice mail system, a product that eventually became a huge business for AT&T. Along the way to that success, Flanagan was awarded an early patent for packet transmission of speech, one form of which we now call voice over IP (though the patent expired long before the technology was commercialized).

In the 1980s, the research team was working on efficient speech coding for cellphones. Lawrence Rabiner, now a professor of electrical and computer engineering at Rutgers University, New Brunswick, N.J., and the University of California at Santa Barbara, was then a Bell Labs researcher under Flanagan. He recalls that Flanagan immediately began asking how music could be coded in a similarly efficient way. He assigned researchers to work on the challenge, and members of that team later developed the ubiquitous MPEG-1 Layer 3 audio coding format, known as MP3.

“Every time we solved one challenge, he was way ahead of us with the next challenge,” says Rabiner. “He felt that his job was to produce a steady stream of out-of-the-box thinking.”

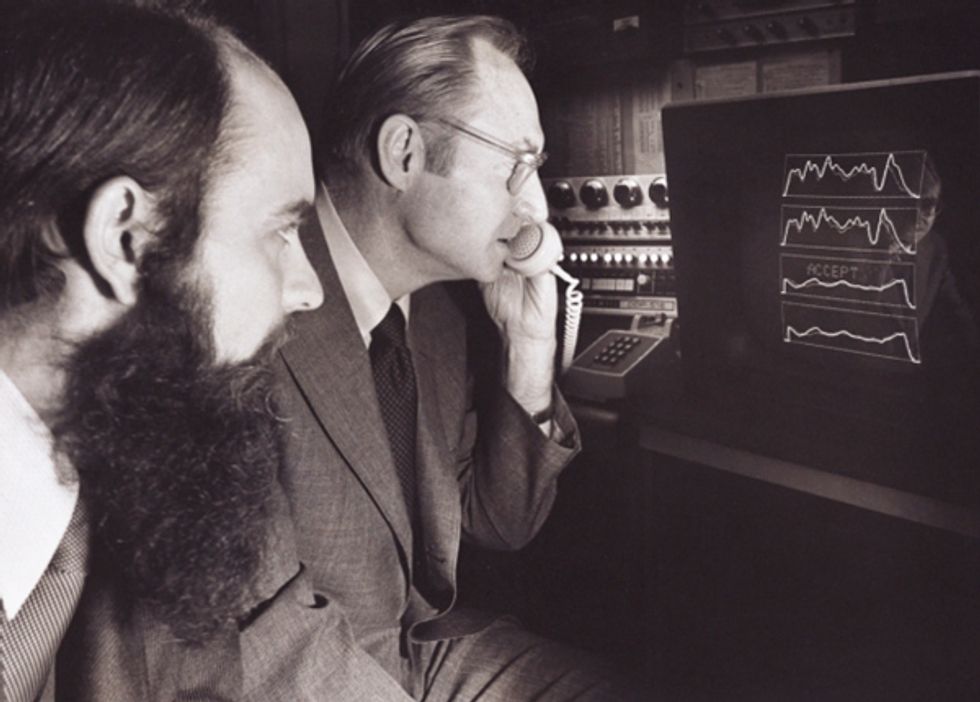

Flanagan climbed steadily up the ranks at Bell Labs, eventually becoming director of the Information Principles Research Laboratory. But while taking on management functions, he always continued his own work [see photo, "In the Lab"]. “I liked the fact that you could influence the directions of work you considered important,” he says with a hint of a Mississippi drawl, “but I’ve always tried to balance my own work interests with facilitating what other people are doing.”

Among his other projects, he pushed ahead with work in automatic speech recognition, even though it didn’t have much support from higher-ups. Eventually, he used a Data General Nova 16-bit minicomputer to build a telephone reservation system for air travel.

“The ultimate fruit of that didn’t come until 1992,” Cox told IEEE Spectrum, “when Voice Recognition Call Processing was put into the telephone network. Jim had promised that there would be tangible results and persisted in spite of the skepticism and resistance he met. And it paid off hugely for AT&T.”

In his spare time, Flanagan helped his brother, also an electrical engineer, run the cotton farm and a cattle ranch back in Mississippi. Balancing his two worlds, he had the ideal career, Flanagan says, except for six months in 1973.

That was the year of the Watergate hearings, Judge John J. Sirica, and an 18 1/2-minute gap in the White House tapes—and of what Flanagan today calls, anguish apparent in his voice, “the worst six months of my life.”

It started with a call from his vice president of research at AT&T, an executive several levels in rank above Flanagan. “He said, ‘Jim, you’re about to get a call from the White House asking for some help, and I hope you will be able to be responsive,’” Flanagan recalls. The call came almost immediately. Judge Sirica was setting up a panel, responsible to him, that would examine the audiotape that contained the 18 1/2-minute gap. Flanagan was one of six audio experts on that panel, charged with determining what had happened to that tape and whether or not the signal could be recovered.

The team flew around the country, working in their own labs, each other’s labs, and various other facilities. When the actual recording equipment and tapes impounded from President Nixon’s offices were being tested, armed federal marshals observed the group as they worked. “We had these guys with pistols standing over us, watching what we were doing,” he recalls, still marveling at the memory.

The worst, for Flanagan, was his time on the witness stand. “It was a three-ring circus. There was Mr. [Leon] Jaworski, the special prosecutor. There was Mr. [Charles S.] Rhyne for Rose Mary Woods. And there was Mr. [James D.] St. Clair for the White House. And almost every time something was said, one of them would bob up and challenge it. I understand it’s the business of lawyers to be aggressive. And their intent is to try to discredit unfavorable testimony,” Flanagan says tersely. “There was a lot of that.”

James L. Flanagan

Date of Birth: 26 August 1925

Birthplace: Greenwood, Miss.

Height: 178 centimeters

Family: wife, Mildred; three sons: Stephen, James, Aubrey

Education: BSEE 1948, Mississippi State University; S.M. 1950, Sc.D. 1955, Massachusetts Institute of Technology

First Job: assembling Christmas toys for J.C. Penney Co. in Greenwood

Patents: around 50

Board Memberships: The Institute for Infocom Research, Singapore; National Institute of Informatics, Tokyo; Fraser Research, Princeton, N.J.; IDIAP Research Institute, Martigny, Switzerland

Computers: Sony VAIO laptop, four Dell desktops

Most Recent Books Read: Dan Brown's The DaVinci Code; Henry Fielding's Tom Jones

Favorite Movie:She Wore a Yellow Ribbon (1949, with John Wayne)

Car: Chevrolet Blazer

Organizational Memberships: IEEE (Life Fellow), National Academy of Sciences, National Academy of Engineering, American Association for the Advancement of Science, Acoustical Society of America (Fellow)

Major Awards: IEEE Medal of Honor, L.M. Ericsson International Prize in Telecommunications, National Medal of Science, Marconi International Fellowship, Gold Medal of the Acoustical Society of America; the IEEE James L. Flanagan Speech and Audio Processing Award honors him.

The committee eventually wrote a report detailing its findings. Basically, Flanagan told Spectrum, the tape had been erased in at least five segments, erasures that had required hand operation of keyboard controls, definitely not by accidentally operating the foot pedal, as Nixon secretary Rose Mary Woods had theorized. And there was no way to recover the signal by known techniques. The tape has been reanalyzed regularly ever since, and the signal has not yet been recovered.

Flanagan left Bell Labs in 1990; he had turned 65, the age Bell Labs’ policy required directors and officers to step down from their posts. Instead of moving down in the organization, Flanagan entertained offers from academia. Ultimately, he accepted a position as director of the Center for Advanced Information Processing at Rutgers University, not far from where his three sons and their families live. The center acts as an interface between the university and industry: corporate members pay annual fees to have access to research, hire students, and guide (but not dictate) research directions.

Flanagan also took on graduate thesis students, graduating 33 to date. In 1992 he was made part of a search committee charged with hiring a vice president of research for the university, but a year into the search, he himself was offered the job. He accepted with the agreement that he could continue his technical work and performed those dual roles until his retirement at the end of last year. During his tenure, the university doubled its external contract research to US $250 million annually.

At Rutgers, Flanagan continued to pursue the last project he had started at Bell Labs, a system called HuMaNet, for Human Machine Network, a hands-free teleconferencing system that allows users to set up telephone calls and call up computer displays.

“My main interest then—and now—is multimodal communication,” Flanagan says, sitting in the Computing Research and Education Building on Rutgers’ Busch Campus, in Piscataway, N.J. “Most of my research here related to it.” Multimodal communication refers to systems with multiple input and output methods, such as speech, touch, hand gestures, eye tracking, facial expressions, and general body movements.

His research at Rutgers included developing computer-controlled microphone arrays that selectively zero in on a narrow area for sound pickup—locking onto an area as small as one person talking quietly in a crowded auditorium. “Scaled-down versions of this notion are now used in conference telephony by a number of companies,” Flanagan says. “The idea of signal processing for selective sound capture has caught on worldwide.” He is now in the process of organizing an international conference on the topic, sponsored by the National Science Foundation, in Arlington, Va.

A frontier in today’s communications research, Flanagan says, is network systems that faithfully reproduce a sense of face-to-face communication. This, he says, requires that people and objects be positioned realistically in space in three dimensions, that sound be selectively captured from each speaker without the need to pass around a microphone, that sound be perceived as coming from a realistic position in space, and that people be able to use their hands in meaningful ways—for example, to “grasp” virtual objects and cause things to happen.

What’s missing today, he believes, is a formal language framework for such multimodal communication. “We know what the distinctive sounds of speech are,” Flanagan says. “We have lexicons that describe our vocabulary, we have grammar that says how the words can be joined, we even have semantic relationships that we can program for meaning. We have nothing comparable for multimodal exchange. If I’m looking at a display and gesturing, speaking, and pointing to indicate that I want to move this to there, what is the vocabulary for representing all that? How do you get meaning from this combination of things? How do you do the equivalent of speech recognition? How do you synthesize that?”

The challenge, once again, is clear, and though Flanagan is officially retired, he won’t be walking away from it.