Cognitive Radios Will Go Where No Deep-Space Mission Has Gone Before

How radios can use neural networks to adapt to alien environments

Space seems empty and therefore the perfect environment for radio communications. Don’t let that fool you: There’s still plenty that can disrupt radio communications. Earth’s fluctuating ionosphere can impair a link between a satellite and a ground station. The materials of the antenna can be distorted as it heats and cools. And the near-vacuum of space is filled with low-level ambient radio emanations, known as cosmic noise, which come from distant quasars, the sun, and the center of our Milky Way galaxy. This noise also includes the cosmic microwave background radiation, a ghost of the big bang. Although faint, these cosmic sources can overwhelm a wireless signal over interplanetary distances.

Depending on a spacecraft’s mission, or even the particular phase of the mission, different link qualities may be desirable, such as maximizing data throughput, minimizing power usage, or ensuring that certain critical data gets through. To maintain connectivity, the communications system constantly needs to tailor its operations to the surrounding environment.

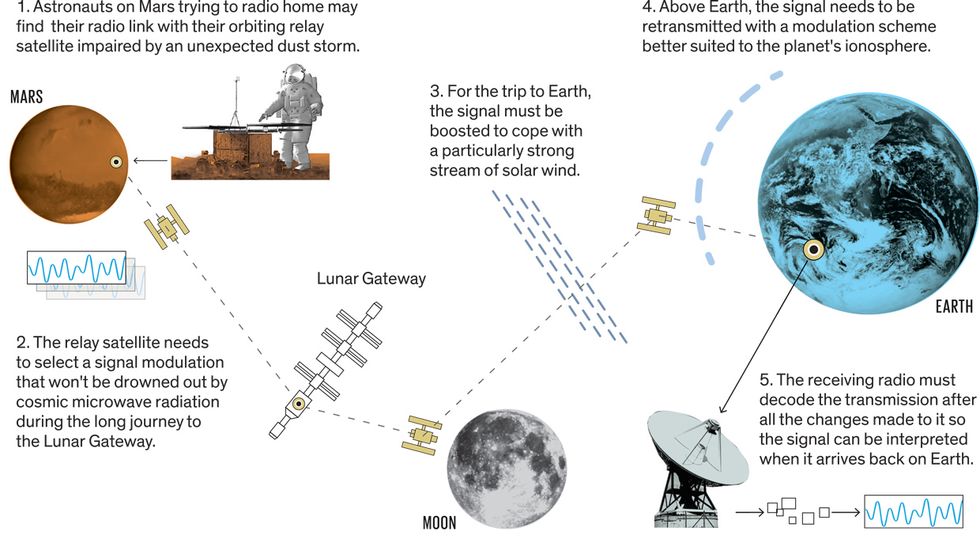

Imagine a group of astronauts on Mars. To connect to a ground station on Earth, they’ll rely on a relay satellite orbiting Mars. As the space environment changes and the planets move relative to one another, the radio settings on the ground station, the satellite orbiting Mars, and the Martian lander will need continual adjustments. The astronauts could wait 8 to 40 minutes (the duration of a round trip) for instructions from mission control on how to adjust the settings. A better alternative is to have the radios use neural networks to adjust their settings in real time. Neural networks maintain and optimize a radio’s ability to keep in contact, even under extreme conditions such as Martian orbit. Rather than waiting for a human on Earth to tell the radio how to adapt its systems, by which time the commands may already be outdated, a radio with a neural network can do it on the fly.

Such a device is called a cognitive radio. Its neural network autonomously senses the changes in its environment, adjusts its settings accordingly—and then, most important of all, learns from the experience. That means a cognitive radio can try out new configurations in new situations, which makes it more robust in unknown environments than a traditional radio would be. Cognitive radios are thus ideal for space communications, especially far beyond Earth orbit, where the environments are relatively unknown, human intervention is impossible, and maintaining connectivity is vital.

Worcester Polytechnic Institute and Penn State University, in cooperation with NASA, recently tested the first cognitive radios designed to operate in space and keep missions in contact with Earth. In our tests, even the most basic cognitive radios maintained a clear signal between the International Space Station (ISS) and the ground. We believe that with further research, more advanced, more capable cognitive radios can play an integral part in successful deep-space missions in the future, where there will be no margin for error.

Long-Distance Calls

When astronauts eventually travel to Mars, powerful engines and plenty of fuel will get them there and back again, but it will be their radios that keep them connected to Earth. The seemingly empty space between planets is actually filled with obstacles to be overcome by any radio transmission.

Future crews to the moon and Mars will have more than enough to do collecting field samples, performing scientific experiments, conducting land surveys, and keeping their equipment in working order. Cognitive radios will free those crews from the onus of maintaining the communications link. Even more important is that cognitive radios will help ensure that an unexpected occurrence in deep space doesn’t sever the link, cutting the crew’s last tether to Earth, millions of kilometers away.

Cognitive radio as an idea was first proposed by Joseph Mitola III at the KTH Royal Institute of Technology, in Stockholm, in 1998. Since then, many cognitive radio projects have been undertaken, but most were limited in scope or tested just a part of a system. The most robust cognitive radios tested to date have been built by the U.S. Department of Defense.

When designing a traditional wireless communications system, engineers generally use mathematical models to represent the radio and the environment in which it will operate. The models try to describe how signals might reflect off buildings or propagate in humid air. But not even the best models can capture the complexity of a real environment.

A cognitive radio—and the neural network that makes it work—learns from the environment itself, rather than from a mathematical model. A neural network takes in data about the environment, such as what signal modulations are working best or what frequencies are propagating farthest, and processes that data to determine what the radio’s settings should be for an optimal link. The key feature of a neural network is that it can, over time, optimize the relationships between the inputs and the result. This process is known as training.

Scanning for Signals

NASA’s SCaN test bed was the ideal place to test cognitive radios in space without risking an experiment on a deep-space mission. The test bed [top] sits finished before being launched into space and later perches on board the International Space Station [bottom].

For cognitive radios, here’s what training looks like. In a noisy environment where a signal isn’t getting through, the radio might first try boosting its transmission power. It will then determine whether the received signal is clearer; if it is, the radio will raise the transmission power more, to see if that further improves reception. But if the signal doesn’t improve, the radio may try another approach, such as switching frequencies. In either case, the radio has learned a bit about how it can get a signal through its current environment. Training a cognitive radio means constantly adjusting its transmission power, data rate, signal modulation, or any other settings it has in order to learn how to do its job better.
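The trial-and-feedback loop described above can be sketched in a few lines of Python. This is only an illustration, not the software we flew: it uses a simple greedy search rather than a neural network, and the `Settings` fields, the `toy_link` environment, and all of the numbers are assumptions made up for the example.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Settings:
    """Hypothetical radio settings; a real radio has many more knobs."""
    power_dbm: float
    channel: int


def adapt(settings, measure_snr, channels, power_step=2.0, max_power=14.0, rounds=10):
    """Greedy trial-and-feedback loop: keep a change only if the link improves."""
    best_snr = measure_snr(settings)
    for _ in range(rounds):
        # First try: boost transmission power, if the amplifier allows it.
        boosted = settings.power_dbm + power_step
        if boosted <= max_power:
            trial = replace(settings, power_dbm=boosted)
            snr = measure_snr(trial)
            if snr > best_snr:
                settings, best_snr = trial, snr
                continue  # it helped, so try raising power again
        # Otherwise: try switching to another frequency channel.
        for ch in channels:
            if ch == settings.channel:
                continue
            trial = replace(settings, channel=ch)
            snr = measure_snr(trial)
            if snr > best_snr:
                settings, best_snr = trial, snr
                break
        else:
            break  # nothing improved the link; stop adjusting
    return settings, best_snr


# Toy environment (assumed): each channel has a fixed noise level in dB.
NOISE_DB = {0: 25.0, 1: 10.0, 2: 18.0}


def toy_link(s):
    """Pretend signal-to-noise measurement: transmit power minus channel noise."""
    return s.power_dbm - NOISE_DB[s.channel]


final, snr = adapt(Settings(power_dbm=10.0, channel=0), toy_link, channels=(0, 1, 2))
print(final, snr)
```

With these made-up numbers, the loop raises power until it reaches the cap and only then moves to the quieter channel 1. A real cognitive radio would also remember what it tried, so that the next noisy pass starts from a better guess.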

Any cognitive radio will require initial training before being launched. This training serves as a guide for the radio to improve upon later. Once the neural network has undergone some training and it’s up in space, it can autonomously adjust the radio’s settings as necessary to maintain a strong link regardless of its location in the solar system.

To control its basic settings, a cognitive radio uses a wireless system called a software-defined radio. Major functions that are implemented with hardware in a conventional radio are accomplished with software in a software-defined radio, including filtering, amplifying, and detecting signals. That kind of flexibility is essential for a cognitive radio.

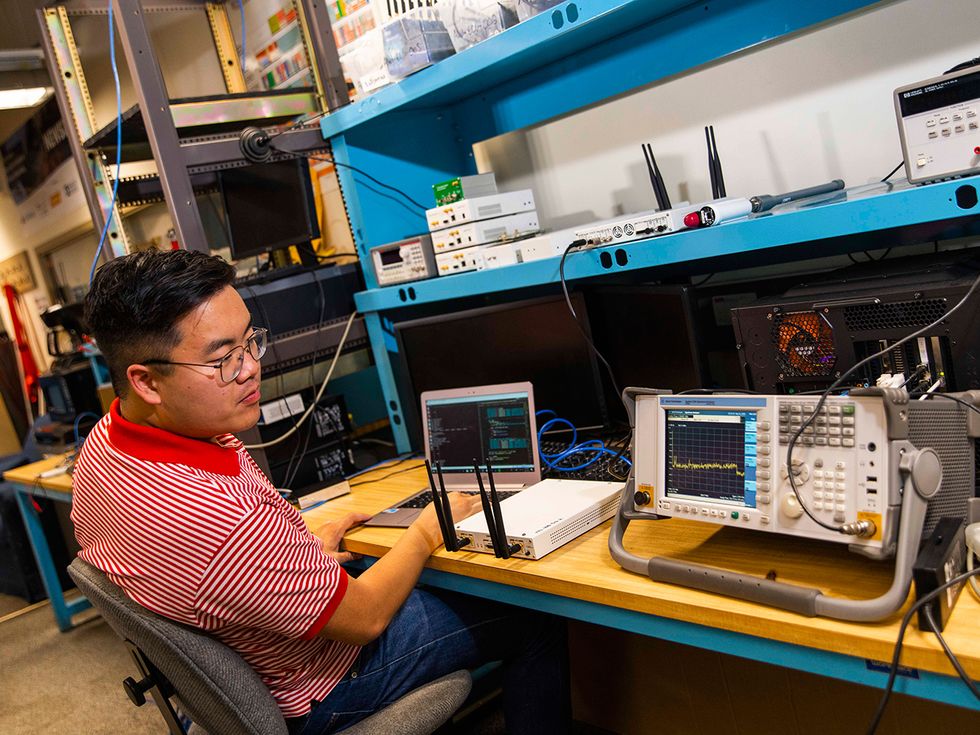

There are several basic reasons why cognitive radio experiments are mostly still limited in scope. At their core, the neural networks are complex algorithms that need enormous quantities of data to work properly. They also require a lot of computational horsepower to arrive at conclusions quickly. The radio hardware must be designed with enough flexibility to adapt to those conclusions. And any successful cognitive radio needs to make these components work together. Our own effort to create a proof-of-concept cognitive radio for space communications was possible only because of the state-of-the-art Space Communications and Navigation (SCaN) test bed on the ISS.

NASA’s Glenn Research Center created the SCaN test bed specifically to study the use of software-defined radios in space. The test bed was launched by the Japan Aerospace Exploration Agency and installed on the main lattice frame of the space station in July 2012. Until its decommissioning in June 2019, the SCaN test bed allowed researchers to test how well software-defined radios could meet the demands expected of radios in space—such as real-time reconfiguration for orbital operations, the development and verification of new software for custom space networks, and, most relevant for our group, cognitive communications.

The test bed consisted of three software-defined radios broadcasting in the S-band (2 to 4 gigahertz) and Ka-band (26.5 to 40 GHz), and receiving in the L-band (1 to 2 GHz). From its perch in low Earth orbit, the SCaN test bed could communicate with NASA’s Tracking and Data Relay Satellite System, whose satellites sit in geosynchronous orbit, and with a ground station at NASA’s Glenn Research Center, in Cleveland.

Nobody has ever used a cognitive radio system on a deep-space mission before—nor will they, until the technology has been thoroughly vetted. The SCaN test bed offered the ideal platform for testing the tech in a less hostile environment close to Earth. In 2017, we built a cognitive radio system to communicate between ground-based modems and the test bed. Ours would be the first-ever cognitive radio experiments conducted in space.

Teaching a Neural Network New Tricks

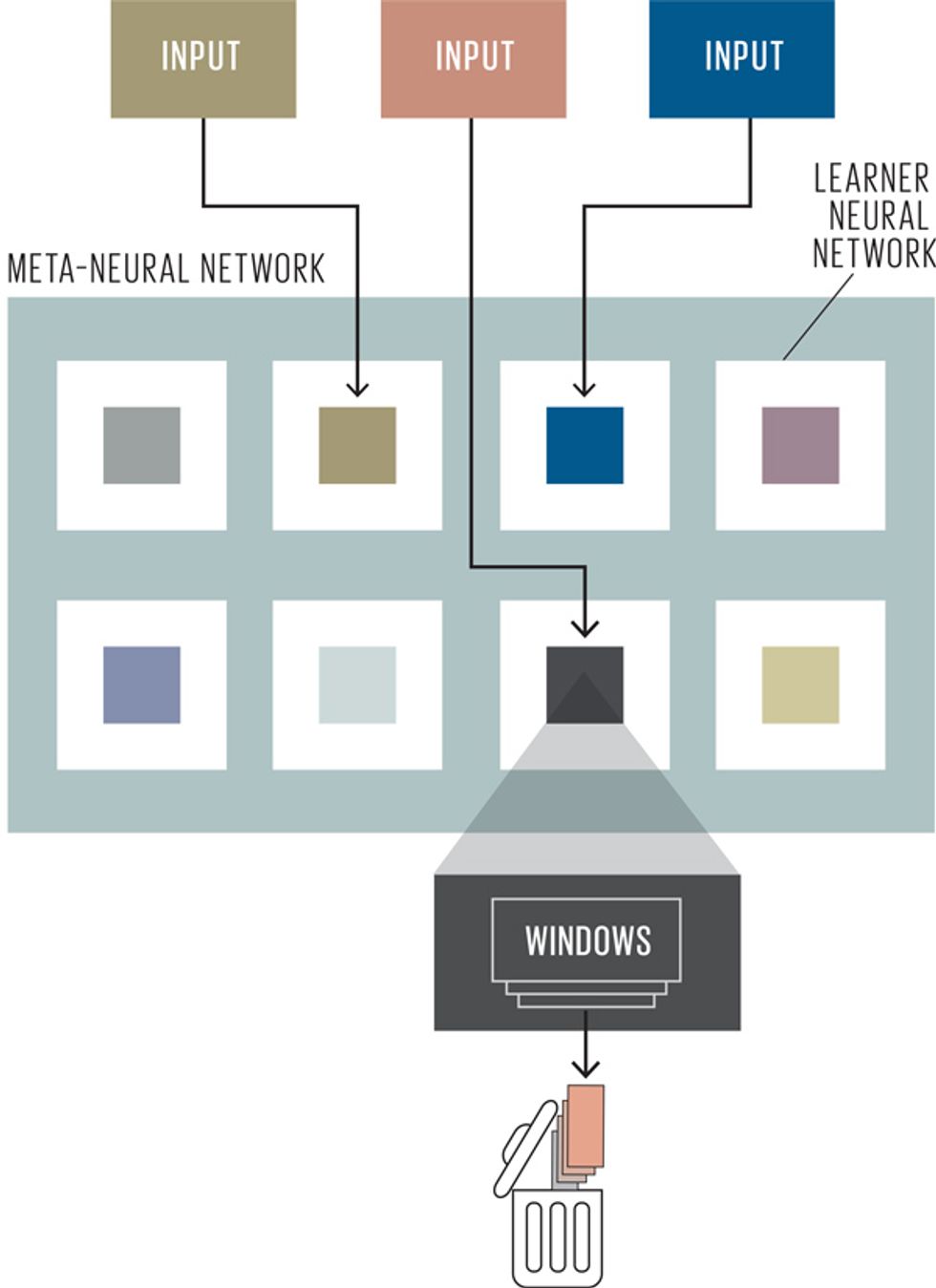

Ensemble learning breaks up the work of a neural network into specialized subnetworks called learner networks. Upon receiving input data, a meta–neural network is responsible for matching inputs to the learner networks most capable of processing them. Here, the inputs that best match the learner networks are indicated by similar colors.

In our experiments, the SCaN test bed was a stand-in for the radio on a deep-space probe. It’s essential for a deep-space probe to maintain contact with Earth. Otherwise, the entire mission could be doomed. That’s why our primary goal was to prove that the radio could maintain a communications link by adjusting its settings autonomously. Maintaining a high data rate or a robust signal was a lower priority.

A cognitive radio at the ground station would decide on an “action,” or set of operating parameters for the radio, which it would send to the test-bed transmitter and to two modems at the ground station. The action dictated a specific data rate, modulation scheme, and power level for the test-bed transmitter and the ground station modems that would most likely be effective in maintaining the wireless link.
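One way to picture an “action” is as a small, immutable record of radio parameters, with the full set of possible actions formed by combining the allowed values. The sketch below is an assumption for illustration only: the class names, the three modulation schemes, and the specific rates and power levels are hypothetical and are not the values used in our experiments.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product


class Modulation(Enum):
    """Illustrative modulation schemes; the value is bits carried per symbol."""
    BPSK = 1
    QPSK = 2
    PSK8 = 3


@dataclass(frozen=True)
class Action:
    """One candidate configuration for the transmitter and the ground modems."""
    data_rate_kbps: int
    modulation: Modulation
    power_dbm: float


def action_space(rates, modulations, powers):
    """Enumerate every combination of the allowed parameter values."""
    return [Action(r, m, p) for r, m, p in product(rates, modulations, powers)]


# Hypothetical parameter choices: 3 rates x 3 modulations x 3 power levels.
ACTIONS = action_space(
    rates=(100, 500, 1000),
    modulations=tuple(Modulation),
    powers=(0.0, 5.0, 10.0),
)
print(len(ACTIONS), ACTIONS[0])
```

Even this tiny example yields 27 candidate actions, which hints at why a radio needs some learned guidance rather than trying every combination during a pass that lasts only a few minutes.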

We completed our first tests during a two-week window in May 2017. That wasn’t much time, and we typically ended up with only two usable passes per day, each lasting just 8 or 9 minutes. The ISS doesn’t pass over the same points on Earth during each orbit, so there’s a limited number of opportunities to get a line-of-sight connection to a particular location. Despite the small number of passes, though, our radio system experienced plenty of dynamic and challenging link conditions, including fluctuations in the atmosphere and weather. Often, the solar panels and other protrusions on the ISS created large numbers of echoes and reflections that our system had to take into account.

During each pass, the neural network would compare the quality of the communications link with data from previous passes. The network would then select the previous pass with the conditions that were most similar to those of the current pass as a jumping-off point for setting the radio. Then, as if fiddling with the knob on an FM radio, the neural network would adjust the radio’s settings to best fit the conditions of the current pass. These settings covered all of the elements of the wireless signal, including the data rate and modulation.

The neural network wasn’t limited to drawing on just one previous pass. If the best option seemed to be taking bits and pieces of multiple passes to create a bespoke solution, the network would do just that.
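The idea of starting from the most similar earlier pass, or from a blend of several, can be sketched with a nearest-neighbor lookup. This is a simplified stand-in for what the neural network does, not our implementation: the measured quantities (`snr_db`, `doppler_khz`), the stored settings, and every number are assumptions chosen for the example.

```python
import math

# Hypothetical log of earlier passes: (observed link conditions, settings that worked).
history = [
    ({"snr_db": 12.0, "doppler_khz": 40.0}, {"power_dbm": 5.0, "rate_kbps": 1000.0}),
    ({"snr_db": 4.0, "doppler_khz": 45.0}, {"power_dbm": 10.0, "rate_kbps": 250.0}),
    ({"snr_db": 8.0, "doppler_khz": 10.0}, {"power_dbm": 7.0, "rate_kbps": 500.0}),
]


def distance(a, b):
    """Euclidean distance between two condition records.

    A real system would normalize the features first, since dB and kHz
    are not directly comparable.
    """
    return math.sqrt(sum((a[k] - b[k]) ** 2 for k in a))


def closest_pass(current, history):
    """Return the stored pass whose conditions best match the current ones."""
    return min(history, key=lambda rec: distance(current, rec[0]))


def blended_settings(current, history, k=2):
    """Mix the settings of the k nearest passes, weighted by inverse distance."""
    nearest = sorted(history, key=lambda rec: distance(current, rec[0]))[:k]
    weights = [1.0 / (distance(current, cond) + 1e-9) for cond, _ in nearest]
    total = sum(weights)
    keys = nearest[0][1].keys()
    return {
        key: sum(w * s[key] for w, (_, s) in zip(weights, nearest)) / total
        for key in keys
    }


current = {"snr_db": 5.0, "doppler_khz": 44.0}
start = closest_pass(current, history)[1]   # jumping-off point from one pass
blend = blended_settings(current, history)  # bespoke mix of the two nearest passes
print(start, blend)
```

Here the second stored pass is the closest match, so its settings become the starting point, while the blended result lands between the two nearest passes and leans toward the closer one.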

During the tests, the cognitive radio clearly showed that it could learn how to maintain a communications link. The radio autonomously selected settings to avoid losing contact, and the link remained stable even as the radio adjusted itself. It also managed a signal power strong enough to send data, even though that wasn’t a primary goal for us.

Overall, the success of our tests on the SCaN test bed demonstrated that cognitive radios could be used for deep-space missions. However, our experiments also uncovered several problems that will have to be solved before such radios blast off for another planet.

The biggest problem we encountered was something called “catastrophic forgetting.” This happens when a neural network receives too much new information too quickly and so forgets a lot of the information it already possessed. Imagine you’re learning algebra from a textbook and by the time you reach the end, you’ve already forgotten the first half of the material, so you start over. When this happened in our experiments, the cognitive radio’s abilities degraded significantly because it was basically retraining itself over and over in response to environmental conditions that kept getting overwritten.

Our solution was to implement a tactic called ensemble learning in our cognitive radio. Ensemble learning is a technique, still largely experimental, that uses a collection of “learner” neural networks, each of which is responsible for training under a limited set of conditions—in our case, on a specific type of communications link. One learner may be best suited for ISS passes with heavy interference from solar particles, while another may be best suited for passes with atmospheric distortions caused by thunderstorms. An overarching meta–neural network decides which learner networks to use for the current situation. In this arrangement, even if one learner suffers from catastrophic forgetting, the cognitive radio can still function.

To understand why, let’s say you’re learning how to drive a car. One way to practice is to spend 100 hours behind the wheel, assuming you’ll learn everything you need to know in the process. That is currently how cognitive radios are trained; the hope is that what they learn during their training will be applicable to any situation they encounter. But what happens when the environments the cognitive radio encounters in the real world differ significantly from the training environments? It’s like practicing to drive on highways for 100 hours but then getting a job as a delivery truck driver in a city. You may excel at driving on highways, but you might forget the basics of driving in a stressful urban environment.

Now let’s say you’re practicing for a driving test. You might identify what scenarios you’ll likely be tested on and then make sure you excel at them. If you know you’re bad at parallel parking, you may prioritize that over practicing right-of-way rules at a four-way stop. The key is to identify what you need to learn rather than assume you will practice enough to eventually learn everything. In ensemble learning, this is the job of the meta–neural network.

The meta–neural network may recognize that the radio is in an environment with a high amount of ionizing radiation, for example, and so it will select the learner networks for that environment. The neural network thus starts from a baseline that’s much closer to reality. It doesn’t have to replace information as quickly, making catastrophic forgetting much less likely.
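A toy example makes the contrast concrete. In the sketch below, each “learner” is just a one-parameter model trained by gradient descent, and a dictionary lookup stands in for the meta–neural network; both are deliberate simplifications and assumptions, as are the two made-up link conditions, `solar` and `storm`. The point is only to show a single learner overwriting what it knew, while per-condition learners keep it.

```python
class Learner:
    """One-parameter model y ~ w * x, trained by stochastic gradient descent."""

    def __init__(self):
        self.w = 0.0

    def predict(self, x):
        return self.w * x

    def train(self, samples, lr=0.1, epochs=50):
        for _ in range(epochs):
            for x, y in samples:
                # Gradient step on the squared error for this sample.
                self.w -= lr * (self.predict(x) - y) * x


def mse(model, samples):
    """Mean squared error of a model on a set of (x, y) samples."""
    return sum((model.predict(x) - y) ** 2 for x, y in samples) / len(samples)


# Two made-up link conditions that call for opposite responses.
xs = [0.5, 1.0, 1.5]
solar = [(x, 2.0 * x) for x in xs]
storm = [(x, -1.0 * x) for x in xs]

# A single learner trained on one condition, then the other.
single = Learner()
single.train(solar)
err_before = mse(single, solar)  # small: it has learned the solar condition
single.train(storm)
err_after = mse(single, solar)   # large: storm training overwrote that knowledge

# An ensemble: one learner per condition, chosen by a selector.
ensemble = {"solar": Learner(), "storm": Learner()}


def select(condition):
    """Stand-in for the meta-network: here the condition label is simply given."""
    return ensemble[condition]


select("solar").train(solar)
select("storm").train(storm)
ensemble_err = mse(select("solar"), solar)  # stays small after storm training

print(err_before, err_after, ensemble_err)
```

In a real system the hard part is the selector: the meta–neural network has to infer the condition from what the radio observes, rather than being handed a label as it is here.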

We implemented a very basic version of ensemble learning in our cognitive radio for a second round of experiments in August 2018. We found that the technique resulted in fewer instances of catastrophic forgetting. Nevertheless, there are still plenty of questions about ensemble learning. For one, how do you train the meta–neural network to select the best learners for a scenario? And how do you ensure that a learner, if chosen, actually masters the scenario it’s being selected for? There aren’t solid answers yet for these questions.

In May 2019, the SCaN test bed was decommissioned to make way for an X-ray communications experiment, and we lost the opportunity for future cognitive-radio tests on the ISS. Fortunately, NASA is planning a three-CubeSat constellation to further demonstrate cognitive space communications. If the mission is approved, the constellation could launch in the next several years. The goal is to use the constellation as a relay system to find out how multiple cognitive radios can work together.

Those planning future missions to the moon and Mars know they’ll need a more intelligent approach to communications and navigation than we have now. Astronauts won’t always have direct-to-Earth communications links. For example, signals sent from a radio telescope on the far side of the moon will require relay satellites to reach Earth. NASA’s planned orbiting Lunar Gateway, aside from serving as a staging area for surface missions, will be a major communications relay.

The Lunar Gateway is exactly the kind of space communications system that will benefit from cognitive radio. The round-trip delay for a signal between Earth and the moon is about 2.5 seconds. Handing off radio operations to a cognitive radio aboard the Lunar Gateway will save precious seconds in situations when those seconds really matter, such as maintaining contact with a robotic lander during its descent to the surface.
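The delay figure follows directly from the speed of light. The short calculation below is a back-of-the-envelope check rather than a mission-planning tool; it assumes the mean Earth-moon distance and rough closest and farthest Earth-Mars distances, and it ignores processing and relay delays.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum


def round_trip_seconds(distance_km):
    """Time for a radio signal to travel out and back over the given distance."""
    return 2 * distance_km / C_KM_PER_S


MOON_KM = 384_400            # mean Earth-moon distance
MARS_NEAR_KM = 55_000_000    # rough closest approach (assumed round figure)
MARS_FAR_KM = 400_000_000    # rough greatest separation (assumed round figure)

moon_s = round_trip_seconds(MOON_KM)
mars_near_min = round_trip_seconds(MARS_NEAR_KM) / 60
mars_far_min = round_trip_seconds(MARS_FAR_KM) / 60

print(f"Moon: {moon_s:.2f} s")
print(f"Mars: {mars_near_min:.1f} to {mars_far_min:.1f} min")
```

The lunar result comes out at roughly 2.5 seconds, in line with the figure above. The Mars values, computed from the rough extremes, bracket the 8-to-40-minute range quoted for typical conditions.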

Our experiments with the SCaN test bed showed that cognitive radios have a place in future deep-space communications. As humanity looks again at exploring the moon, Mars, and beyond, guaranteeing reliable connectivity between planets will be crucial. There may be plenty of space in the solar system, but there’s no room for dropped calls.

This article appears in the August 2020 print issue as “Where No Radio Has Gone Before.”

About the Authors

Alexander Wyglinski is a professor of electrical engineering and robotics engineering at Worcester Polytechnic Institute. Sven Bilén is a professor of engineering design, electrical engineering, and aerospace engineering at the Pennsylvania State University. Dale Mortensen is an electronics engineer and Richard Reinhart is a senior communications engineer at NASA’s Glenn Research Center, in Cleveland.