AI was a hot trend at CES 2023, though not due to new AI models or research breakthroughs. The Consumer Electronics Show is focused on, well, consumers, so the most alluring AI demos at the show targeted specific uses. Yet this isn't a detriment to AI's potential: on the contrary, I'd argue it's precisely what makes AI so compelling this year. The field is mature enough to move beyond demos and offer tangible improvements to countless consumer devices, apps, and services. Here are a few that stood out:

AI hardware comes to affordable Windows laptops

AMD came to CES 2023 with a full salvo of mobile processors. This includes the Ryzen 7040 series, which packs Ryzen AI, the first dedicated AI engine in an x86 processor. It finally provides a Windows alternative to the AI hardware found in Apple Silicon. AMD claims Ryzen AI is up to 20 percent quicker, and 50 percent more efficient, than the AI hardware in Apple's M2 chip.

The Ryzen AI processor won't beat big GPUs like Nvidia's RTX, AMD's Radeon, or Intel's Xe. However, even the least powerful of these discrete GPUs require 50 to 75 watts at full tilt, and top-tier cards can exceed 400 watts. The Ryzen 7040 processors that include Ryzen AI have a total thermal design power of 35 to 45 watts, with the AI processor accounting for just a fraction of that.

AMD also says it's committed to keeping Ryzen AI on its roadmap for future products. That should mean a steady cadence of improvements, like the one AMD has delivered with its Ryzen CPUs and Radeon GPUs. It's still early days for Ryzen AI, but it's a big step in the right direction.

AI-driven speech makes its voice heard

Speech morphing and translation often don't demo well (unless you're Google), but a pair of AI speech startups made a strong showing at CES.

OneMeta AI brought Verbum, a real-time speech translation web app that is available now. CEO Saúl Leal sat me down for a video chat with Dayanna Rojas, the company's head of product, who called in from Chile. She spoke Spanish while I spoke English, with Verbum translating: first through text, then through AI-generated speech. It was a convincing demo, made more impressive by the show floor's hectic audio environment. Leal says the service can also work over the phone, even without an Internet connection.

I also spoke with Martin Ahlers, VP of Sales at Speechmorphing. In addition to translation, Speechmorphing can either modulate a person's voice to sound like another or create speech from text input. The service can train on existing voice recordings to reproduce any voice for which there's sufficient data. Yes, that means you can sound like Morgan Freeman. But the app can also give a voice back to people who have lost some or all of their ability to speak.

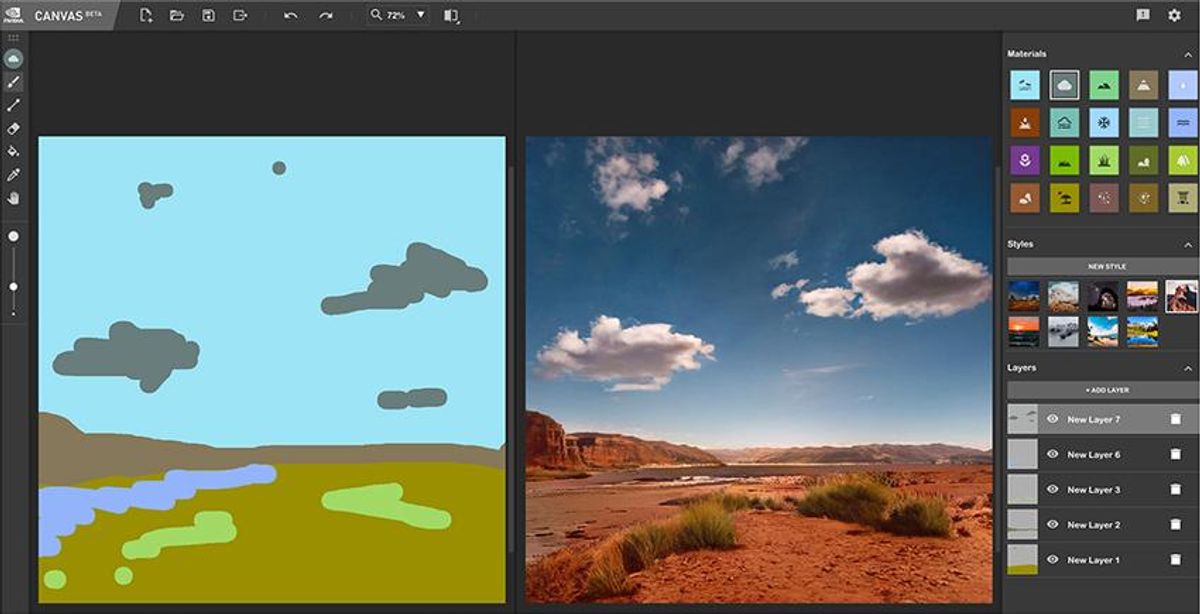

Nvidia Canvas gets a 360-degree upgrade

[Video: NVIDIA Canvas Update: 360 Degree Environment Maps for Your 3D Scenes. Credit: Nvidia]

I first tried Nvidia Canvas, which generates realistic 2D landscapes from just a few brushstrokes, at CES 2020. It was impressive even then, offering the artistically disinclined (like me) a chance to create attractive scenery. At CES 2023, Nvidia introduced Canvas 360, which adds support for creating 360-degree panoramic images.

This might seem like a gimmick: the 360-degree camera fad didn't take off, after all. But Canvas 360's purpose goes well beyond panoramic pictures. Its real value lies with 3D artists who need to quickly create an appropriate background for a 3D scene, since a 360-degree panorama can serve as an environment map surrounding that scene. An architect might use it to create scenery similar to the environment where a client wants to build, while a game designer could rapidly iterate on background scenery to change the vibe of a level.

3D scanning gets NeRF’ed

KIRI Engine, an Android, iOS, and web app that lets creators use photogrammetry for professional-grade scans of 3D objects, is about to get an upgrade: support for neural radiance fields, better known as NeRFs.

[Video: KIRI Engine will use NeRFs to create 3D scenes from photos and videos.]

This technique can create 3D objects and scenes from photos or video. Unlike photogrammetry, which requires many photos taken at carefully varied angles, NeRFs use AI to estimate the portions of an object the camera didn't fully capture. The resulting 3D model can be moved, resized, rotated, and viewed from any angle.

NeRFs already made waves in 2022, but KIRI Engine will bring them to Android and iPhone devices. KIRI representatives told me the feature will launch within the first few months of 2023.

Eye contact is tough on video calls, so why not fake it?

[Video: Maintaining Eye Contact in a Video Conference with NVIDIA Maxine. Credit: Nvidia]

In 2013, Microsoft Research showed me a secretive demo of its attempt to build a webcam hidden directly behind an LCD screen. The goal? Make direct eye contact possible in video conferences. It worked, mostly, and a few smartphone makers tried similar tactics, but the approach proved difficult to execute well.

Nvidia has an alternative solution: just use AI to fake it. This feature, known as eye correction, will become part of Nvidia Broadcast, the company's suite of software utilities for video and livestreaming creators. It automatically adjusts the apparent position of your eyes so that you appear to be looking at the camera.

A similar feature will also be available on AMD laptops with the new Ryzen AI processor. Microsoft's Windows Studio Effects (including eye correction), introduced on select Windows devices in 2022, are compatible with Ryzen AI.

Matthew S. Smith is a freelance consumer technology journalist with 17 years of experience and the former Lead Reviews Editor at Digital Trends. An IEEE Spectrum Contributing Editor, he covers consumer tech with a focus on display innovations, artificial intelligence, and augmented reality. A vintage computing enthusiast, Matthew covers retro computers and computer games on his YouTube channel, Computer Gaming Yesterday.