An integral part of the autopilot system in Tesla's cars is a deep neural network that identifies lane markings in camera images. Neural networks "see" things very differently than we do, and it's not always obvious why, even to the people who create and train them. Usually, researchers train neural networks by showing them an enormous number of pictures of something (like a street) with things like lane markings explicitly labeled, often by humans. The network will gradually learn to identify lane markings based on similarities that it detects across the labeled dataset, but exactly what those similarities are can be very abstract.

Because of this disconnect between what lane markings actually are and what a neural network thinks they are, even highly accurate neural networks can be tricked through "adversarial" images, which are carefully constructed to exploit this kind of pattern recognition. Last week, researchers from Tencent's Keen Security Lab showed [PDF] how to trick the lane detection system in a Tesla Model S to both hide lane markings that would be visible to a human, and create markings that a human would ignore, which (under some specific circumstances) can cause the Tesla's autopilot to swerve into the wrong lane without warning.

Usually, adversarial image attacks are carried out digitally, by feeding a neural network altered images directly. It's much more difficult to carry out a real-world attack on a neural network, because it's harder to control what the network sees. But physical adversarial attacks may also be a serious concern, because they don't require direct access to the system being exploited—the system just has to be able to see the adversarial pattern, and it's compromised.

The initial step in Tencent's testing involved direct access to Tesla’s software. Researchers showed the lane detection system a variety of digital images of lane markings to establish its detection parameters. As output, the system specified the coordinates of any lanes that it detected in the input image. By using "a variety of optimization algorithms to mutate the lane and the area around it," Tencent found several different types of "adversarial example[s] that [are similar to] the original image but can disable the lane recognition function." Essentially, Tencent managed to find the point at which the confidence threshold of Tesla's lane-detecting neural network goes from "not a lane" to "lane" or vice-versa, and used that as a reference point to generate the adversarial lane markings. Here are a few of them:

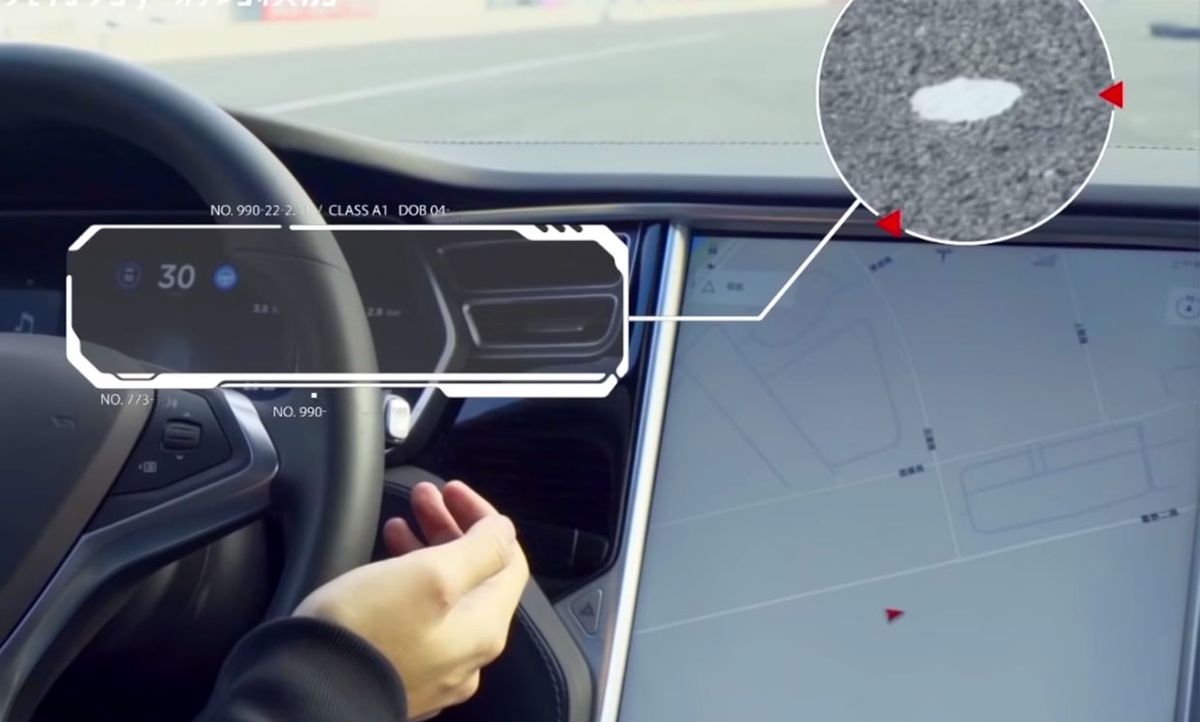
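To make the digital search a little more concrete, here is a minimal sketch in Python of the general idea: mutate an input a bit at a time, keep the mutations that lower the detector's confidence, and stop once the score crosses the threshold. Everything in it is an assumption made for illustration. The `lane_confidence` function is a toy stand-in that scores a single row of pixel brightness values, not Tesla's network, and the `THRESHOLD` and `max_change` values are invented. Tencent describes using "a variety of optimization algorithms"; plain random search is just the simplest one to show.

```python
import random

THRESHOLD = 0.5  # hypothetical cutoff between "not a lane" and "lane"


def lane_confidence(row):
    """Toy detector: how much the brightest 3-pixel window stands out
    from the row's average brightness, clamped to [0, 1]."""
    mean = sum(row) / len(row)
    best = max(sum(row[i:i + 3]) / 3 for i in range(len(row) - 2))
    return max(0.0, min(1.0, best - mean))


def mutate_until_hidden(row, max_change=0.3, steps=5000, seed=0):
    """Random search that keeps any bounded mutation lowering the score."""
    rng = random.Random(seed)
    current = list(row)
    score = lane_confidence(current)
    for _ in range(steps):
        if score < THRESHOLD:
            break  # the toy detector no longer reports a lane
        i = rng.randrange(len(current))
        # Keep every pixel within max_change of its original value so the
        # result stays similar to the original image.
        low = max(0.0, row[i] - max_change)
        high = min(1.0, row[i] + max_change)
        candidate = list(current)
        candidate[i] = max(low, min(high, current[i] + rng.uniform(-0.1, 0.1)))
        candidate_score = lane_confidence(candidate)
        if candidate_score < score:
            current, score = candidate, candidate_score
    return current, score


road = [0.1] * 20
road[9:12] = [0.9, 0.9, 0.9]  # a bright stripe on dark asphalt
adversarial, final_score = mutate_until_hidden(road)
print(f"before: {lane_confidence(road):.2f}, after: {final_score:.2f}")
```

The search only ever queries the detector for a score, which mirrors the setup described above: the researchers fed images in and looked at what came out. A real attack works on full camera frames and a far more complicated model, so this shows the shape of the loop and nothing more.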

Based on the second example in the image above, Tencent recreated the effect in the real world using some paint, and the result was the same, as you can see on the Tesla's display in the image on the right.

The extent to which the lane markings have to be messed up for the autopilot to disregard them is significant, which is a good thing, Tencent explains:

"We conclude that the lane recognition function of the [autopilot] module has good robustness, and it is difficult for an attacker to deploy some unobtrusive markings in the physical world to disable the lane recognition function of a moving Tesla vehicle. We suspect this is because Tesla has added many abnormal lanes (broken, occluded) in their training set for holding the complexity of physical world, [which results in] good performance in a normal external environment (no strong light, rain, snow, sand and dust interference)."

It's this very robustness, however, that makes the autopilot more vulnerable to attacks in the opposite direction: creating adversarial markings that trigger the autopilot's lane recognition system where a human driver would see little or no evidence of a lane. Tencent's experiments showed that it takes just three small patches on the ground to fake a lane.
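The trade-off between tolerating damaged lanes and accepting fake ones can be shown with a deliberately crude Python sketch. It assumes a hypothetical detector that reports a lane whenever a large enough fraction of image rows contains a marking. The function name, the row counts, and both coverage settings are invented for illustration, and a real neural network does not work this way internally.

```python
def detects_lane(marked_rows, total_rows, min_coverage):
    """Toy detector: report a lane when enough image rows contain a marking."""
    return len(set(marked_rows)) / total_rows >= min_coverage


TOTAL_ROWS = 20
scenes = {
    "solid lane": list(range(20)),        # every row is marked
    "worn lane": [0, 1, 5, 6, 12, 13],    # broken, partly occluded real lane
    "three patches": [3, 10, 17],         # sparse markings placed by an attacker
}

results = {}
for label, min_coverage in (("strict", 0.5), ("lenient", 0.1)):
    results[label] = {
        name: detects_lane(rows, TOTAL_ROWS, min_coverage)
        for name, rows in scenes.items()
    }
    print(label, results[label])
```

With the strict setting, the toy detector rejects the three patches but also loses the worn lane. With the lenient setting, it recovers the worn lane and accepts the three patches along with it. That is the same tension described above, reduced to a single number.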

The security researchers identified a few other things with the Tesla that aren't really vulnerabilities, but are kind of interesting, like the ability to trigger the rain sensor with an image. They were also able to control the Tesla with a gamepad, using a vulnerability that Tesla says has already been patched. The lane recognition demonstration starts at 1:08 in the video below.

It's important to keep this example in context, since it appears to only work under very specific circumstances—the demonstration occurs in what looks a bit like an intersection (perhaps equivalent to an uncontrolled 4-way junction), where there are no other lane lines for the system to follow. It seems unlikely that the stickers would cause the car to cross over a well-established center line, but the demo does show that stickers placed where other lane markings don’t exist can cause a Tesla on autopilot to switch into an oncoming lane, without providing any visual cue for the driver that it’s about to occur.

Of course, we'd expect that the car would be clever enough not to actually veer into oncoming traffic if it spotted any. It's also worth pointing out that for the line detection system to be useful, Tesla has to allow for a substantial amount of variation, because there's a lot of real-world variation in the lines that are painted on roads. I'm sure you've had that experience on a highway where several sets of lines painted at different times (but all somehow faded the exact same way) diverge from each other, and even the neural networks that are our brains have trouble deciding which to follow.

In situations like that, we do what humans are very good at—we assimilate a bunch of information very quickly, using contextual cues and our lifelong knowledge of roads to make the best decision possible. Autonomous systems are notoriously bad at doing this, but there are still plenty of ways in which Tesla's autopilot could leverage other data to make better decisions than it could by looking at lane markings alone. The easiest one is probably what the vehicles in front of you are doing, since following them is likely the safest course of action, even if they're not choosing the same path that you would.
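As a rough sketch of what using that extra data might look like, the Python snippet below cross-checks a lane-derived steering target against the position of the vehicle ahead. It is purely hypothetical: the function, its parameters, and the one-metre tolerance are assumptions made for illustration, and nothing here describes how Tesla's software actually combines its inputs.

```python
from typing import Optional, Tuple


def choose_lateral_target(
    lane_center_m: float,
    lead_vehicle_offset_m: Optional[float],
    max_disagreement_m: float = 1.0,
) -> Tuple[float, bool]:
    """Return (lateral target in metres, whether to alert the driver).

    Offsets are measured sideways from the car's current position.
    """
    if lead_vehicle_offset_m is None:
        # No vehicle ahead, so the lane markings are all there is to go on.
        return lane_center_m, False
    if abs(lane_center_m - lead_vehicle_offset_m) > max_disagreement_m:
        # The markings and the traffic ahead disagree: follow the traffic
        # and flag the situation instead of silently trusting the markings.
        return lead_vehicle_offset_m, True
    return lane_center_m, False


print(choose_lateral_target(0.1, 0.0))   # markings and lead vehicle agree
print(choose_lateral_target(3.5, 0.0))   # markings point a full lane away
print(choose_lateral_target(3.5, None))  # nothing ahead to compare against
```

The third call shows the limit of this idea: with no vehicle ahead, as in Tencent's demonstration, there is nothing to cross-check the markings against.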

According to a post on the Keen Security Lab blog (and we should note that Tencent has been developing its own self-driving cars), Tesla responded to Tencent's lane recognition research with the following statement:

“In this demonstration the researchers adjusted the physical environment (e.g. placing tape on the road or altering lane lines) around the vehicle to make the car behave differently when Autopilot is in use. This is not a real-world concern given that a driver can easily override Autopilot at any time by using the steering wheel or brakes and should be prepared to do so at all times.”

Tesla appears to be saying that this is not a real-world concern only because the driver can take over at any time. In other words, it's absolutely a real-world concern, and drivers should be prepared to take over at any time because of it. Tesla consistently takes a tricky position with its autopilot, since the company seems to simultaneously tell consumers "of course you can trust it" and "of course you can't trust it." The latter statement is almost certainly more correct, and the lane recognition attack is especially concerning in that the driver may not realize there's anything wrong until the car has already begun to veer, because the car itself doesn't recognize the issue.

While the potential for this to become an actual problem for Tesla's autopilot is likely small, the demonstration does leave us with a few questions, like what happens if there are some random white spots in the road that fall into a similar pattern? And what other kinds of brittleness have yet to be identified? Tesla has lots of miles of real-world testing, but the real world is very big, and the long tail of very unlikely situations is always out there, waiting to be stepped on.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.