About two years ago, we covered a research project from Duke University that sped up motion planning for a tabletop robot arm by several orders of magnitude. The robot relied on a custom processor to do in milliseconds what normally takes seconds. The Duke researchers built a company, Realtime Robotics, around this technology, and recently they’ve been focused on applying it to autonomous vehicles.

The reason you should care about fast motion planning for autonomous vehicles is that motion planning is the process by which the vehicle decides what it’s going to do next. Making this process faster doesn’t just mean that the vehicle can make decisions more quickly; it means the vehicle can make much better decisions as well, keeping you, and everyone around you, as safe as possible.

Here’s the problem: Navigating through an inherently unpredictable world involves computers constantly trying to guess what humans are going to do next. Computers are pretty good at this, especially in semi-structured environments like roads. Most autonomous driving systems use what are called probabilistic models to predict what nondeterministic objects (things that have some independent agency) will do. For example, most models would probably agree that a car in front of you on the highway has a high probability of continuing at about the same speed while staying in its lane. It has a somewhat lower probability of changing lanes without signaling, and an even lower probability of suddenly braking.
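The highway example above can be written down as a tiny probabilistic model. This Python sketch is purely illustrative: the maneuver names and probability values are hypothetical, chosen only to respect the ordering described in the text, whereas a real prediction model would estimate them from sensor data. It contrasts picking the single most probable maneuver with drawing a spread of plausible maneuvers to plan against.

```python
import random

# Hypothetical maneuver probabilities for the car ahead of us on the highway.
# Illustrative values only; they just follow the ordering in the text.
MANEUVER_PROBS = {
    "keep_lane_same_speed": 0.90,
    "change_lane_no_signal": 0.08,
    "brake_suddenly": 0.02,
}

def most_probable(probs):
    """Return the single most likely maneuver (a one-scenario planner's input)."""
    return max(probs, key=probs.get)

def sample_maneuvers(probs, n, seed=0):
    """Draw n maneuvers in proportion to their probabilities, so that less
    likely (but still possible) behaviors also show up as scenarios."""
    rng = random.Random(seed)
    names = list(probs)
    return rng.choices(names, weights=[probs[k] for k in names], k=n)

print(most_probable(MANEUVER_PROBS))  # keep_lane_same_speed
print(sample_maneuvers(MANEUVER_PROBS, 10))
```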

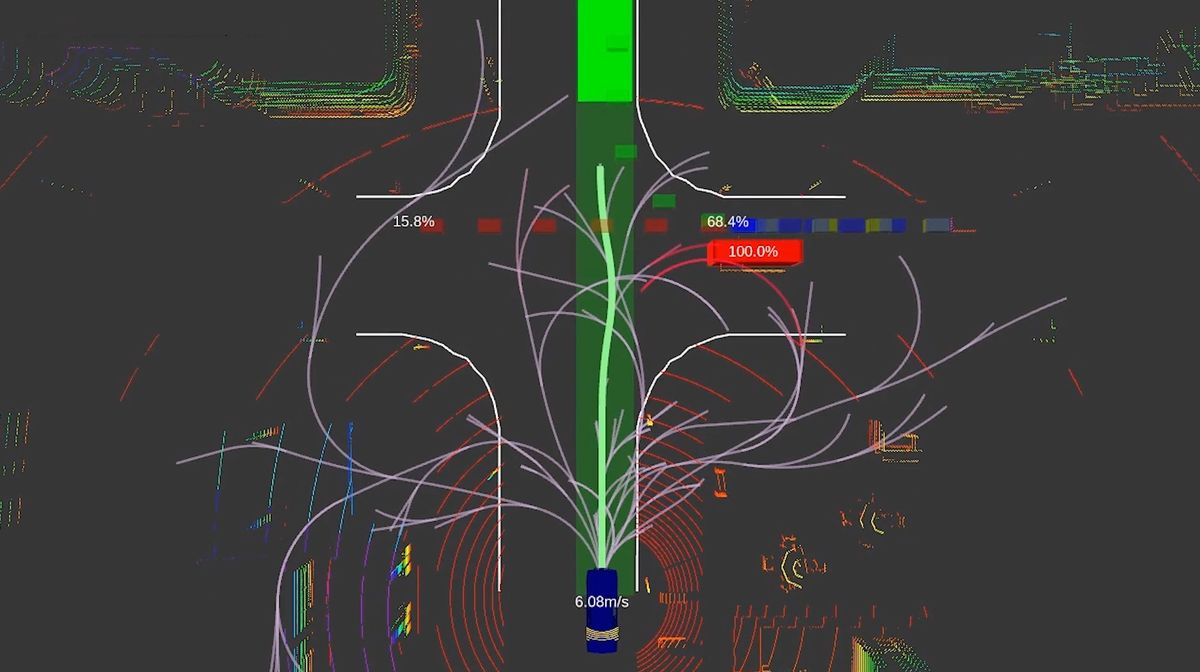

Most autonomous driving systems plan motions based on their model’s most probable scenario for what the objects around them will do. These models can provide a confidence level, and the system can slow the vehicle down if it’s not sure what’s going to happen, but it’s still planning for just one scenario at a time. The alternative is to consider all of the actions that surrounding vehicles could take (even the improbable ones) and to make a motion plan that keeps the vehicle in the optimal position to handle even the things that only might happen. Most autonomous driving systems can manage only a single motion plan at a time, at a rate of between 3 Hz and 10 Hz. Realtime Robotics’ system, by contrast, can run through tens or even hundreds of motion plans while spending less than 1 millisecond computing each, a rate of at least 1,000 Hz. This allows the system to consider far more potential outcomes in far less time and arrive at the best decision possible.

Realtime Robotics’ motion-planning system for vehicles begins with a lattice: an enormous precomputed graph consisting of all the different trajectories that the vehicle could take in an obstacle-free environment over a time horizon of between 5 and 10 seconds. The lattice consists of nodes and edges. Nodes are specific vehicle configurations (position, velocity, heading), while edges connect nodes and represent the trajectory between those configurations over about 1 second of travel time. There are tens of millions of edges in the lattice, reflecting all the different possible transitions between configuration states over the entire time horizon. Each edge comes with a cost that represents things like fuel usage or passenger comfort; lower-cost edges correspond to more efficient, gentler motion.
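To make the lattice structure concrete, here is a toy version in Python. It is a sketch under simplifying assumptions, not Realtime Robotics’ data format: the node fields, the three-lane road, the allowed speeds, and the cost weights are all hypothetical, and a lane index stands in for the position and heading a real lattice would store. Each edge spans one time step (about 1 second of travel) and carries a cost that penalizes speed and lane changes, so gentler motion is cheaper.

```python
from dataclasses import dataclass
from itertools import product

@dataclass(frozen=True)
class Node:
    """One vehicle configuration in the lattice (hypothetical, simplified)."""
    t: int      # time step; one edge covers about 1 s of travel
    lane: int   # lane index, standing in for position and heading
    speed: int  # index into the list of allowed speeds

def edge_cost(a: Node, b: Node, speeds: list[float]) -> float:
    """Illustrative cost: base travel cost plus penalties for changing speed
    (fuel/comfort) and changing lanes. Lower cost means gentler motion."""
    accel = abs(speeds[b.speed] - speeds[a.speed])
    lane_change = abs(b.lane - a.lane)
    return 1.0 + 0.5 * accel + 2.0 * lane_change

def build_lattice(horizon_s: int, n_lanes: int, speeds: list[float]):
    """Precompute every edge of an obstacle-free lattice. Returns a dict that
    maps each node to a list of (successor node, edge cost) pairs."""
    lattice = {}
    for t, lane, s in product(range(horizon_s), range(n_lanes), range(len(speeds))):
        a = Node(t, lane, s)
        successors = []
        # Allow moving at most one lane and one speed step per edge.
        for dl, ds in product((-1, 0, 1), repeat=2):
            nl, ns = lane + dl, s + ds
            if 0 <= nl < n_lanes and 0 <= ns < len(speeds):
                b = Node(t + 1, nl, ns)
                successors.append((b, edge_cost(a, b, speeds)))
        lattice[a] = successors
    return lattice

lattice = build_lattice(horizon_s=5, n_lanes=3, speeds=[10.0, 15.0, 20.0])
n_edges = sum(len(succ) for succ in lattice.values())
print(len(lattice), n_edges)  # 45 245
```

Even this toy lattice has 245 edges over a 5-second horizon; finer resolution in position, speed, and heading helps explain how a real lattice reaches tens of millions of edges.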

Here’s the sequence of steps that Realtime’s system runs through at each planning interval (about every 10 milliseconds):

- Perception data from cameras, radar, lidar, and other sensors is fed into the system, which identifies static obstacles (like buildings and trees), deterministic moving obstacles (like soccer balls), and the more challenging nondeterministic moving obstacles, including other cars, pedestrians, and bicycles. The locations of the static and deterministic obstacles (deterministic obstacles get modeled as larger static objects) are then dropped into the lattice. Edges that intersect with obstacles are given very high costs, because you definitely don’t want the vehicle to follow them.

- For each of the nondeterministic obstacles, the system has to take an educated guess about what trajectory they’re likely to follow across the planning interval. Fortunately, lots of people have spent lots of time thinking about this, and there are plenty of models that do their best to predict what cars, pedestrians, and bicycles are likely to do. The system makes its guess, uses it to model a nondeterministic obstacle as a static object, and updates the lattice edges with those new costs.

- With all of the obstacle data in the lattice, it’s time to do the actual motion planning that tells the vehicle where it should go next. This is where Realtime Robotics’ custom hardware comes in. You can read about exactly how it works in one of our earlier articles, but the secret sauce is an FPGA reconfigurable chip that can encode the lattice data in hardware, and then run through the edges of the lattice (mostly in parallel) to find the path with the lowest cost. This hardware parallelism makes the motion planning step extremely fast, taking less than a millisecond to deliver a plan, and it’s easy to add more hardware if you need to scale up.

  Realtime Robotics’ system uses an FPGA reconfigurable chip that can encode the lattice data in hardware, and then run through it in parallel. Photo: Realtime Robotics

- At this point, the motion plan that you get out of Realtime’s system is just about as good as the one you’d get out of any other system. The difference is that Realtime’s system has delivered the plan one to two orders of magnitude faster, which means it can circle back to the prediction step and run the whole thing again, using a slightly different guess about what the nondeterministic moving obstacles might do. Since the models are only making probabilistic guesses about what other cars, pedestrians, or anything else will do next, the most likely guess is exactly that: most likely, but not necessarily what actually happens. And the more complex the situation, the harder it is to guess correctly. By repeating the prediction and planning steps anywhere from 10 to 100 times, you can consider the most likely scenario as well as many other scenarios, and then choose the motion plan that is safest across all of the possible outcomes that you’ve modeled.

- The final step is to execute the motion plan or, more specifically, to tell the vehicle which edge of the lattice to take next. Since each edge of the lattice represents about 1 second of vehicle time, and a new motion plan is calculated every 10 milliseconds, it might be better to think of this final step as executing just the very first action of a several-second-long motion plan that refreshes itself 100 times every second. You might end up following that plan, or something might change and a new (and totally different) plan might be better. It’s an unpredictable world out there, and planning quickly means that your vehicle can make the best decisions as fast as it needs to.
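The planning loop described in the steps above can be sketched in a few dozen lines of Python. Everything here is an assumption made for illustration: the (time step, lane) grid, the cost values, the occupancy sets standing in for perception output and predicted trajectories, and especially the rule for choosing among candidates, which keeps the plan whose worst-case cost across all modeled scenarios is lowest (a minimax rule; the article does not say how Realtime scores plans). A plain dynamic-programming search over the time-ordered lattice stands in for the FPGA, which the article describes as searching the edges largely in parallel.

```python
BLOCKED = 1e6  # assumed "very high cost" for edges that run into an obstacle

def make_lattice(horizon, n_lanes):
    """Toy precomputed lattice: node = (time step, lane); each edge is ~1 s.
    Edge cost is 1 plus a comfort penalty of 2 per lane changed."""
    return {
        (t, lane): [((t + 1, nl), 1.0 + 2.0 * abs(nl - lane))
                    for nl in (lane - 1, lane, lane + 1) if 0 <= nl < n_lanes]
        for t in range(horizon) for lane in range(n_lanes)
    }

def apply_obstacles(lattice, occupied):
    """Copy the lattice, giving BLOCKED cost to edges that end in an occupied
    (time, lane) cell (a simplification of edge/obstacle intersection)."""
    return {a: [(b, BLOCKED if b in occupied else c) for b, c in succ]
            for a, succ in lattice.items()}

def lowest_cost_path(edges, start, horizon):
    """Dynamic programming over the time-ordered lattice (a DAG); a software
    stand-in for the hardware search, not Realtime's actual design."""
    best = {start: (0.0, [start])}
    for t in range(horizon):
        for node in [n for n in best if n[0] == t]:
            cost, path = best[node]
            for nxt, c in edges[node]:
                if nxt not in best or cost + c < best[nxt][0]:
                    best[nxt] = (cost + c, path + [nxt])
    return min(v for n, v in best.items() if n[0] == horizon)[1]

def path_cost(edges, path):
    """Total cost of following a fixed path under one scenario's edge costs."""
    return sum(dict(edges[a])[b] for a, b in zip(path, path[1:]))

def plan(lattice, start, horizon, scenarios):
    """Plan once per predicted scenario, then keep the candidate whose
    worst-case cost across all scenarios is lowest (assumed minimax rule)."""
    costed = [apply_obstacles(lattice, occ) for occ in scenarios]
    candidates = [lowest_cost_path(e, start, horizon) for e in costed]
    return min(candidates, key=lambda p: max(path_cost(e, p) for e in costed))

# Hypothetical scenarios for a car in lane 0; our vehicle starts in lane 1.
stays_left = {(1, 0), (2, 0), (3, 0)}      # most likely: it keeps its lane
merges = {(1, 0), (2, 0), (2, 1), (3, 1)}  # less likely: it cuts into lane 1
chosen = plan(make_lattice(3, 3), (0, 1), 3, [stays_left, merges])
print(chosen)      # [(0, 1), (1, 1), (2, 2), (3, 2)]
print(chosen[:2])  # [(0, 1), (1, 1)]: the only edge executed before replanning
```

Here the plan that is best for the most likely scenario (stay in lane 1) would collide if the other car merged, so the minimax rule picks a plan that moves over to lane 2 instead. Only the first edge goes to the vehicle; about 10 ms later the loop runs again with fresh data.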

And it’s not just about being able to model a whole bunch of motion plans at once (although that’s pretty great). It’s also about sheer speed, because when you’re motion planning for a fast-moving vehicle, time spent planning equates to distance traveled. At 60 km/h (about 37 mph), the difference between 10-ms planning and 100-ms planning is about a meter and a half, which could easily be the difference between avoiding a wayward pedestrian and not. At higher speeds and in more constrained environments, like highways, you might want to consider fewer plans in exchange for faster planning, giving the vehicle more room to react. And at lower speeds in more complex environments, you can afford to spend longer planning, which might be beneficial in city centers where probabilistic models have to keep up with all kinds of more or less unpredictable things.
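The meter-and-a-half figure is straightforward arithmetic: distance equals speed multiplied by time. This small Python helper (its name is hypothetical, for illustration) reproduces it, assuming the vehicle holds a constant speed while the planner computes.

```python
def distance_during_planning(speed_kmh: float, planning_ms: float) -> float:
    """Meters traveled at constant speed while the planner is computing."""
    speed_m_per_s = speed_kmh * 1000 / 3600    # km/h -> m/s
    return speed_m_per_s * planning_ms / 1000  # ms -> s, then m/s * s = m

# 60 km/h is about 16.7 m/s, so 90 ms of extra planning covers about 1.5 m.
gap = distance_during_planning(60, 100) - distance_during_planning(60, 10)
print(f"{gap:.1f} m")  # 1.5 m
```

At 120 km/h the same 90-ms difference doubles to about 3 m.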

While Realtime, which is based in Boston, has done extensive testing in simulation, they haven’t yet had the chance to try this in a real car, although they’re developing prototypes for multiple companies in the autonomous vehicle space. It’s good to see this level of innovation and competition on something besides sensors, and we’re looking forward to seeing how the industry responds.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.
