Communication in a doctor's office is like a marriage gone bad: As you describe to your doctor what pain or symptoms you have, you realize that while the doctor may hear you, he or she isn't really listening. And in the other direction, you hear the doctor's words, but do you walk away with a full understanding of the diagnosis, exactly what you're being prescribed and why, and what the risks are?

According to a recent article in the London Telegraph, fully 25 percent of National Health Service patients complained that their doctors discussed their conditions as if they weren’t there; 20 percent reported that “they were not given enough information about their condition and treatment”; and 25 percent confessed that “there was no one they could talk to about their worries and fears.”

Another recent story in the Wall Street Journal, about doctors’ people skills (or lack thereof), sums up the issue nicely: “Doctors are rude. Doctors don't listen. Doctors have no time. Doctors don't explain things in terms patients can understand.” Ironically, the introduction of electronic health records (EHRs) has often made things worse. A recent study noted that even though EHR systems have “allowed [doctors] to spend more face-to-face time with patients,” they often prove to be a “distraction,” as doctors’ attention shifts to their keyboards rather than to their patients.

The WSJ article describes how medical schools, malpractice insurers, and major hospitals are trying to improve patient-doctor communication, and for good reason: A breakdown in patient-doctor communication is cited in at least 40 percent of malpractice claims. Further, research confirms that poor communication often leads patients not to follow their prescribed treatment regimens, while good communication seems to improve adherence. Patient-doctor communication can be improved by coaching doctors in practices such as the Four Habits, which, the WSJ says, “teaches doctors how to create rapport with patients, elicit their views, demonstrate empathy and assess their ability to follow a treatment regimen.”

If new technology is partly to blame, it can also help. An article in Health Management Technology, for example, describes the use of speech-based “virtual assistants” that capture patient data and automatically enter it into the patient’s EHR, allowing the doctor to talk with the patient without the distraction of typing what is being said.

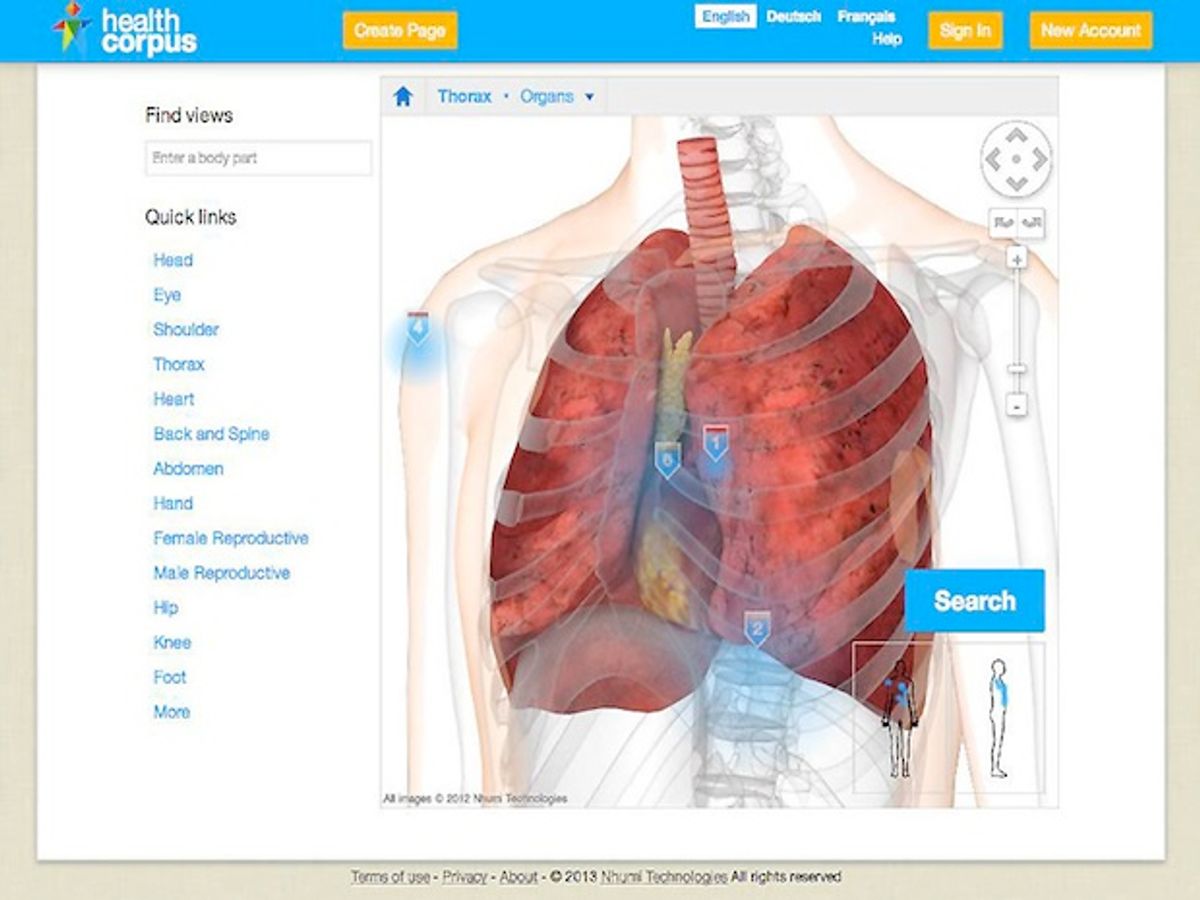

Recently, I spoke to André Elisseeff, one of the founders of Nhumi (“new-me”) Technologies, about the use of avatars to improve patient-doctor communication. You may recall that Elisseeff and his team, while working at IBM’s Zurich Research Lab, created a “Google Earth for the Body” to visualize a person’s EHR on a 3-D image of the human body. At Nhumi, he developed the idea further into an avatar system that could be used to depict adverse drug reactions. Late last year, Elisseeff’s team created a new visual search engine that lets users explore human anatomy through a detailed, interactive 3-D model. Called HealthCorpus, the site brings medical data, FDA data, and user-generated content together in one place and lets a person search this integrated content by clicking on a virtual body.

Elisseeff told me that the new site is meant “for the patient to help indicate to the doctor where it hurts” and, in turn, for the doctor “to explain to the patient why it hurts.”

HealthCorpus is also designed to give patients something tangible to refer back to after they leave the doctor’s office.

“We asked some GPs about how they would use the system; the first thing they told us is that they would use it to educate the patients, and second, give patients [a visual record] so they don’t go home with empty hands,” Elisseeff said.

The latter use might be the most important. The research reported in the WSJ, for instance, found that 80 percent of patients forget what the doctor told them as soon as they leave the office, and 50 percent of what patients do remember is actually incorrect, especially regarding the risks of the prescribed treatment. Other studies indicate that 50 percent of patients don’t take their prescribed medications, and that some 70 percent of this non-adherence is intentional. The reasons include patients not believing their doctor’s diagnosis, not sharing their doctor’s view of how severe the condition is, or believing that the side effects of the prescribed medications outweigh their benefits. Using HealthCorpus can help a doctor address each of these issues.

Elisseeff told me, for example, how one doctor used the avatar on HealthCorpus to show a patient what was going on with the patient’s ankle and foot, and which muscles needed strengthening to relieve his pain. The patient could “see” on the 3-D model exactly which muscles the doctor was referring to, grasp how they were causing the problem, and understand that until those muscles were strengthened, whatever pain he felt was “normal.” With greater understanding, a patient is more motivated to follow a doctor's treatment plan.

Elisseeff also believes that an avatar-based approach could help overcome another common patient complaint: scolding doctors. No one likes being nagged by their doctor about something they are doing (or not doing), even (or especially) when they know the doctor is right. In the face of what we perceive as a verbal “attack,” we stop listening or even rebel against the advice being given. Using the avatar as a personal “proxy” might defuse these natural defense mechanisms. If the doctor talks dispassionately about the state of the avatar instead, and about what happens to it when a treatment isn’t followed, the patient might be more willing to listen.

The hope is that by using HealthCorpus, patient and doctor can have a richer conversation and diminish the fears and misunderstandings that seem inevitable when visiting the doctor. Maybe then, Elisseeff says, patient and doctor can focus on what needs to be done to get better instead of talking past one another.

Photo: Nhumi Technologies

Robert N. Charette is a Contributing Editor to IEEE Spectrum and an acknowledged international authority on information technology and systems risk management. A self-described “risk ecologist,” he is interested in the intersections of business, political, technological, and societal risks. Charette is an award-winning author of multiple books and numerous articles on the subjects of risk management, project and program management, innovation, and entrepreneurship. A Life Senior Member of the IEEE, Charette was a recipient of the IEEE Computer Society’s Golden Core Award in 2008.