Artificial intelligence seems to be the go-to solution to every problem there is (technological in nature or otherwise), and it’s only getting worse. A staggering number of both startups and established companies are loudly proclaiming how AI, or machine learning, or deep learning, or whatever is absolutely going to make everything faster, better, cheaper, fairer, and so on. And there’s a lot of so on.

This sort of breathless and inevitably shallow media-driven enthusiasm for artificial intelligence is effective because there’s just enough of a general understanding of AI for people to know that it can do some cool things, but not enough for people to question what it’s actually capable of, or whether applying it to a specific problem is a good idea. This is not to say that a lack of understanding is anyone’s fault, really: it’s hard to define what AI even is, much less communicate how it works. And without the proper context, there’s no way to make an informed judgement about the future potential of artificial intelligence.

In his new book, Architects of Intelligence: The Truth About AI from the People Building It, Martin Ford somehow managed to interview 23 of the most experienced AI and robotics researchers in the world, asking them about the current state of AI, how AI can be applied to solve useful problems, and what that means for the future of robotics and computing.

The book itself consists almost entirely of transcripts of these interviews, so you’re hearing from these experts directly, and Martin’s role is simply to ask the right questions, which he excels at. He keeps things technical enough to make sure that we get the whole story from each interviewee, but the conversations still flow naturally in a way that’s pleasant to read, and just about everything is easy to understand without a technical background.

In addition to helping us understand the context of artificial intelligence, the interviews tackle a wide variety of topics related to how AI is changing our world now, and how it will likely change things even more in the near future. Martin asks the interviewees for their thoughts on things like economics and whether AI will necessitate a universal basic income, and how much regulation they think might be necessary to make sure that AI is a positive influence on society. There’s also discussion about AGI, artificial general intelligence: when will there be systems that are able to think for themselves and to understand the world like we do?

What’s most interesting to me is how little consensus there is among these experts about what AI means and where it’s going. While it’s probably safe to say that most are generally optimistic about AI (frequently with some caveats), there’s strong disagreement about what the pace of AI development is, what it should be, and what needs to happen to take us to the next stage of AI. But that’s precisely why this book is so good: it’s these areas of disagreement that best define what the current state of AI really is, in a way that you’d never be able to get from some sort of generalized distillation or summary. If you’re interested in an accurate, honest view of the current state of artificial intelligence, Martin’s book is a must-read.

I read through the whole book, and chose a few excerpts that we’ve been allowed to republish. This is just a little taste of what Architects of Intelligence has to offer, and it’s biased a bit towards robotics rather than generalized AI, but it should give you a sense of the kind of depth that each interview gets to.

Daniela Rus

Professor of Electrical Engineering and Computer Science and Director of the Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT

“...I want to be a technology optimist. I want to say that I see technology as something that has the huge potential to unite people rather than divide people, and to empower people rather than estrange people. In order to get there, though, we have to advance science and engineering to make technology more capable and more deployable.

We also have to embrace programs that enable broad education and allow people to become familiar with technology to the point where they can take advantage of it and where anyone could dream about how their lives could be better by the use of technology. That’s something that’s not possible with AI and robotics today because the solutions require expertise that most people don’t have. We need to revisit how we educate people to ensure that everyone has the tools and the skills to take advantage of technology. The other thing that we can do is to continue to develop the technology side so that machines begin to adapt to people, rather than the other way around.”

Gary Marcus

Professor of Psychology and Neural Science at NYU; CEO and Founder of machine learning startup Geometric Intelligence, acquired by Uber

Martin Ford: “What do you see as the main hurdles to getting to AGI, and when can we get there with current tools?”

Gary Marcus: “I see deep learning as a useful tool for doing pattern classification, which is one problem that any intelligent agent needs to do. We should either keep it around for that, or replace it with something that does similar work more efficiently, which I do think is possible.

At the same time, there are other kinds of things that intelligent agents need to do that deep learning is not currently very good at. It’s not very good at abstract inference, and it’s not a very good tool for language, except things like translation where you don’t need real comprehension, or at least not to do approximate translation. It’s also not very good at handling situations that it hasn’t seen before and where it has relatively incomplete information. We therefore need to supplement deep learning with other tools.

More generally, there’s a lot of knowledge that humans have about the world that can be codified symbolically, either through math or sentences in a language. We really want to bring that symbolic information together with the other information that’s more perceptual.

Psychologists talk about the relationship between top-down information and bottom-up information. If you look at an image, light falls on your retina and that’s bottom-up information, but you also use your knowledge of the world and your experience of how things behave to add top-down information to your interpretation of the image.

Deep learning systems currently focus on bottom-up information. They can interpret the pixels of an image, but don’t then have any knowledge of the objects the image contains. To get to AGI, we need to be able to capture both sides of that equation. Another way to put it is that humans have all kinds of common-sense reasoning, and that has to be part of the solution. It’s not well captured by deep learning. In my view, we need to bring together symbol manipulation, which has a strong history in AI, with deep learning. They have been treated separately for too long, and it’s time to bring them together.”

Rodney Brooks

Professor of Robotics (emeritus) at MIT; Founder, Chairman, and CTO of Rethink Robotics; Founder, former Board Member, and former CTO of iRobot

“...Our robots [at iRobot] had been in war zones for nine years being used in the thousands every day. They weren’t glamorous, and the AI capability would be dismissed as being almost nothing, but that’s the reality of what’s real and what is applicable today. I spend a large part of my life telling people that they are being delusional when they see videos and think that great things are around the corner, or that there will be mass unemployment tomorrow due to robots taking over all of our jobs.

At Rethink Robotics, I say, if there was no lab demo 30 years ago, then it’s too early to think that we could make it into a practical product now. That’s how long it takes from a lab demo to a practical product. It’s certainly true of autonomous driving; everyone’s really excited about autonomous driving right now. People forget that the first automobile that drove autonomously on a freeway at over 55 miles an hour for 10 miles was in 1987 near Munich. The first time a car drove across the US, hands off the wheel, feet off the pedals coast to coast, was No Hands Across America in 1995. Are we going to see mass-produced self-driving cars tomorrow? No. It takes a long, long, long time to develop something like this, and I think people are still overestimating how quickly this technology will be deployed.”

Cynthia Breazeal

Professor of Media Arts and Sciences at MIT and director of the Personal Robots Group at the Media Lab; founder and Chief Scientist of Jibo

“...I think there is the scientific question and challenge of wanting to understand human intelligence, and one way of trying to understand human intelligence is to model it and to put it in technologies that can be manifested in the world, and trying to understand how well the behavior and capabilities of these systems mirror what people do.

Then, there’s the real-world application question of what value are these systems supposed to be bringing to people? For me, the question has always been about how you design these intelligent machines that dovetail with people— with the way we behave, the way we make decisions, and the way we experience the world— so that by working together with these machines we can build a better life and a better world. Do these robots have to be exactly human to do that? I don’t think so. We already have a lot of people. The question is what’s the synergy, what’s the complementarity, what’s the augmentation that allows us to extend our human capabilities in terms of what we do that allows us to really have greater impact in the world.

That’s my own personal interest and passion; understanding how you design for the complementary partnership. It doesn’t mean I have to build robots that are exactly human. In fact, I feel I have already got the human part of the team, and now I’m trying to figure out how to build the robot part of the team that can actually enhance the human part of the team. As we do these things, we have to think about what people need in order to be able to live fulfilling lives and feel that there’s upward mobility and that they and their families can flourish and live with dignity. So, however we design and apply these machines needs to be done in a way that supports both our ethical and human values. People need to feel that they can contribute to their community. You don’t want machines that do everything because that’s not going to allow for human flourishing. If the goal is human flourishing, that gives some pretty important constraints in terms of what is the nature of that relationship and that collaboration to make that happen.”

Josh Tenenbaum

Professor of Computational Cognitive Science at MIT

Martin Ford: “Do you think that we’ll succeed in making sure that the benefits of artificial intelligence outweigh the downsides?”

Josh Tenenbaum: “I’m an optimist by nature, so my first response is to say yes, but we can’t take it for granted. It’s not just AI, but technology, whether it’s smartphones or social media, is transforming our lives and changing how we interact with each other. It really is changing the nature of human experience. I’m not sure it’s always for the better. It’s hard to be optimistic when you see a family where everybody’s just on their phones, or when you see some of the negative things that social media has led to.

I think it’s important for us to realize, and to study, all the ways these technologies are doing crazy things to us! They are hacking our brains, our value systems, our reward systems, and our social interaction systems in a way that is pretty clearly not just positive. I think we need more active immediate research to try to understand this and to try to think about this. This is a place where I feel that we can’t be guaranteed that the technology is leading us to a good outcome, and AI right now, with machine learning algorithms, are not necessarily on the side of good.

I’d like the community to think about that in a very active way. In the long term, yes, I’m optimistic that we will build the kinds of AI that are on balance forces for good, but I think this is really a key moment for all of us who work in this field to really be serious about this.”

Excerpted with permission from Architects of Intelligence: The Truth about AI from the People Building It by Martin Ford. Published by Packt Publishing Limited. Copyright (c) 2018. All rights reserved. This book is available at Amazon.com.

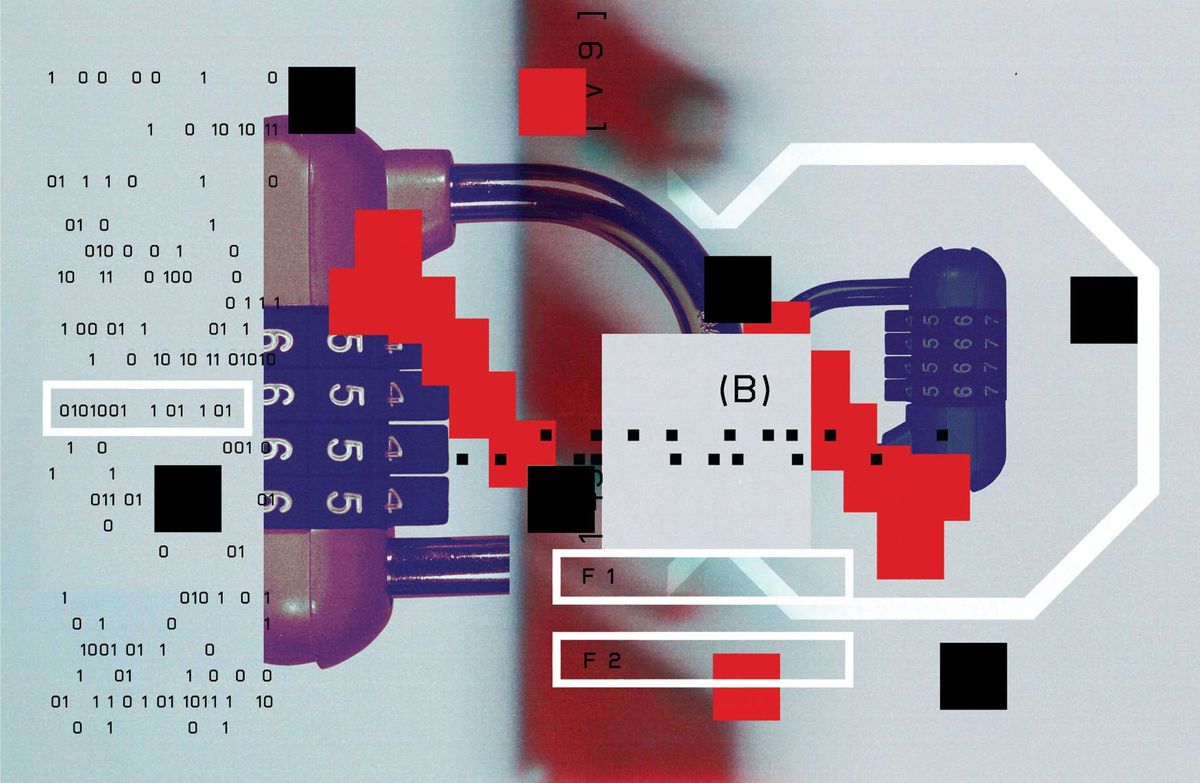

One more thing: Martin also sent us a very informal survey, where he asked each person he interviewed “to give me his or her best guess for a date when there would be at least a 50 percent probability that artificial general intelligence (or human-level AI) will have been achieved.” Sixteen people agreed to take an anonymous guess, and two people took a guess with their name attached. Who those two are likely won’t surprise you.

2029 ..........11 years from 2018 (Ray Kurzweil)

2036 ..........18 years

2038 ..........20 years

2040 ..........22 years

2068 (3) .....50 years

2080 ..........62 years

2088 ..........70 years

2098 (2) .....80 years

2118 (3) .....100 years

2168 (2) .....150 years

2188 ..........170 years

2200 ..........182 years (Rod Brooks)

Mean: 2099, 81 years from 2018
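
The mean is easy to check from the list itself. Here is a minimal sketch in Python; the variable names are illustrative, and the (year, count) pairs are simply transcribed from the survey results above, with the number in parentheses taken as the number of people who gave that year.

```python
# (year, number of respondents) pairs transcribed from the survey list.
# Variable names are illustrative, not from the book.
guesses = [
    (2029, 1), (2036, 1), (2038, 1), (2040, 1), (2068, 3), (2080, 1),
    (2088, 1), (2098, 2), (2118, 3), (2168, 2), (2188, 1), (2200, 1),
]

# Expand the pairs into one entry per respondent.
years = [year for year, count in guesses for _ in range(count)]

respondents = len(years)                    # 16 anonymous + 2 named guesses
mean_year = round(sum(years) / respondents) # arithmetic mean, rounded to a year
years_from_2018 = mean_year - 2018

print(respondents, mean_year, years_from_2018)
```

Run as written, this counts 18 guesses and reproduces the reported mean of 2099, or 81 years from 2018.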

As Martin points out, “the average date of 2099 is quite pessimistic compared with other surveys that have been done … most other surveys have generated results that cluster in the 2040 to 2050 range for human-level AI with a 50 percent probability. It’s important to note that most of these surveys included many more participants and may, in some cases, have included people outside the field of AI research.”

And again, that’s why this book is worth reading. You’re hearing about artificial intelligence from the people who know the most about artificial intelligence, the people who developed it, who are continuing to advance it, and who are applying it to real problems.

Architects of Intelligence: The Truth about AI from the People Building It is available now.

[Amazon] via [Martin Ford]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.