IBM’s Dr. Watson Will See You... Someday

The game-show-winning AI struggles to find the answers in health care

Four years ago, Neil Mehta was among the 15 million people who watched Ken Jennings and Brad Rutter—the world’s greatest “Jeopardy!” players—lose to an IBM-designed collection of circuits and algorithms called Watson.

“Jeopardy!” is a television game show in which the host challenges contestants with answers for which they must then supply the questions—a task that involves some seriously complicated cognition. Artificial-intelligence experts described Watson’s triumph as even more extraordinary than IBM supercomputer Deep Blue’s history-making 1997 defeat of chess grandmaster Garry Kasparov.

To an AI aficionado, Watson was a tour de force of language analysis and machine reasoning. To Mehta, a physician and professor at the world-renowned Cleveland Clinic, Watson was a question unto itself: What might be possible were Watson’s powers turned to medicine? “I love technology, and I was rooting for Watson,” says Mehta. “I knew that the world was changing. And if not Watson, then something like it, with artificial intelligence, was needed to help us.”

Mehta wasn’t the first doctor to dream of a computer coming to his rescue. There’s a rich history of medical AIs, from Internist-1—a 1970s-era program that encoded the expertise of internal-medicine guru Jack Myers and gave rise to the popular Quick Medical Reference program—to contemporary software like Isabel and DXplain, which can outperform human doctors in making diagnoses. Even taken-for-granted ubiquities like PubMed literature searches and automated patient-alert systems demonstrate forms of intelligence.

Powerful as those programs may be, though, they’re not always considered smart. Watson, with its ability to process natural language, make inferences, and learn from mistakes, embodied something much more sophisticated. It also arrived at an opportune time: Health care, particularly in the United States, was finally experiencing a digital overhaul. These days, clinical findings, research databases, and journal articles are all available in machine-readable form, making them that much easier to feed to a computer. And federal mandates have made electronic medical records nearly universal. As a result, software is more tightly integrated than ever into medicine, and there’s a sense that making health care more effective and less expensive requires improved programming.

So it’s no wonder that shortly after Watson’s “Jeopardy!” triumph, IBM announced that it would make Watson available for medical applications. The tech press buzzed in anticipation of “Dr. Watson.” What was medicine, after all, but a series of logical inferences based on data? Four years later, however, the predicted revolution has yet to occur. “They are making some headway,” says Robert Wachter, a specialist in hospital medicine at the University of California, San Francisco, and author of The Digital Doctor: Hope, Hype, and Harm at the Dawn of Medicine’s Computer Age (McGraw-Hill, 2015). “But in terms of a transformative technology that is changing the world, I don’t think anyone would say Watson is doing that today.”

Where’s the delay? It’s in our own minds, mostly. IBM’s extraordinary AI has matured in powerful ways, and the impression that things are going slowly says more about our unrealistic expectations of instant disruption in a world of Uber and Airbnb. Improving health care represents a profound challenge, and “going to medical school,” as so many headlines have quipped of Watson, takes time.

Impressive as that original “Jeopardy!”-blitzing Watson was, in medical contexts such an automaton is not really useful. After all, that version of Watson was fine-tuned specifically for one trivia game. It couldn’t play The Settlers of Catan, much less make useful recommendations about a 68-year-old man with diabetes and heart palpitations. “ ‘Watson, given my medical record, which is hundreds of pages long, what is wrong with me?’ That’s a question,” says Watson software engineer Mike Barborak. “But it wasn’t a good question for Watson to answer.”

Watson’s engine was powerful, but it needed to be adapted for medicine and, within that broad field, to specific disciplines and tasks. Watson is not a singular program; rather, in the words of Watson research director Eric Brown, it’s a “collection of cognitive-computing technologies that are combined and instantiated in different ways for each of the solutions.”

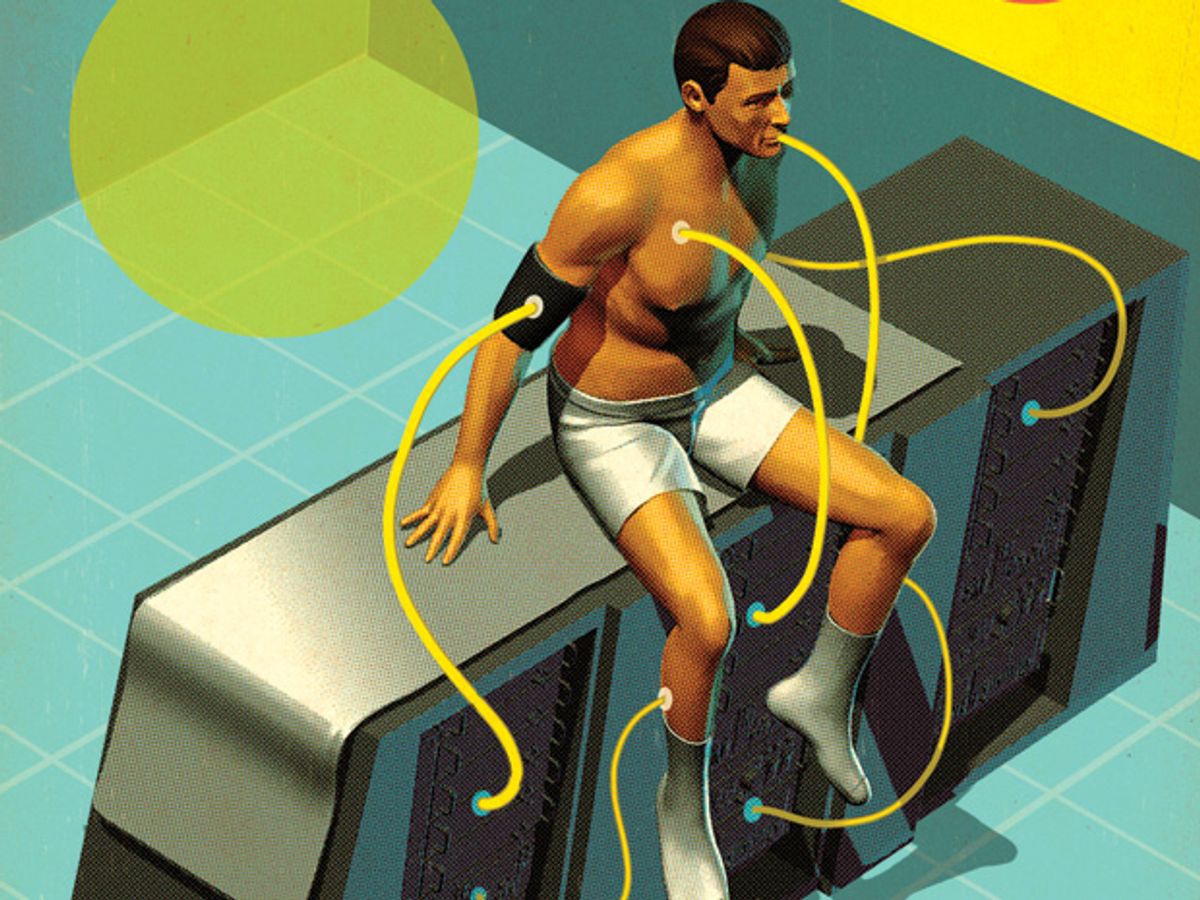

So there are many different Watsons now being applied to medicine. Some of the first could be found at the Cleveland Clinic, Memorial Sloan Kettering Cancer Center, MD Anderson Cancer Center, and insurance company WellPoint (now called Anthem), each of which started working with IBM to develop its own health-care-adapted version of Watson about three years ago. Two years later, as the hardware shrank from room-size to small enough for a server rack, another round of companies signed on to collaborate with IBM. Among these are Welltok, makers of personal health advisory software; @Point of Care, which is trying to customize treatments for multiple sclerosis; and Modernizing Medicine, which uses Watson to analyze hundreds of thousands of patient records and build treatment models so doctors can see how similar cases have been handled.

Watson’s training is an arduous process, bringing together computer scientists and clinicians to assemble a reference database, enter case studies, and ask thousands of questions. When the program makes mistakes, it self-adjusts. This is what’s known as machine learning, although Watson doesn’t learn alone. Researchers also evaluate the answers and manually tweak Watson’s underlying algorithms to generate better output.

Here there’s a gulf between medicine as something that can be extrapolated in a straightforward manner from textbooks, journal articles, and clinical guidelines, and the much more complicated challenge of also codifying how a good doctor thinks. To some extent those thought processes—weighing evidence, sifting through thousands of potentially important pieces of data and alighting on a few, handling uncertainty, employing the elusive but essential quality of insight—are amenable to machine learning, but much handcrafting is also involved.

It’s slow going, especially as each iteration of Watson needs to be tested with new questions. There’s a certain irony: While modern AI researchers tend to look down on earlier medical AIs like Internist-1 as primitive rules-based attempts to codify expertise, today’s medical Watsons are trying to do something similar, albeit in a far more sophisticated way.

Expectations have also been altered in another respect. Watson’s text-processing powers (its “Jeopardy!” database contained some 200 million pages of text) seemed to make it an ideal tool for handling the rapid growth of medical literature, which doubles in size every five years or so. But a big pool of information isn’t always better. Sometimes, as when modeling the decisions of top lung-cancer specialists at Memorial Sloan Kettering, there aren’t yet journal articles or clinical guidelines with the right answers. The way to arrive at them is by watching doctors practice. And even when relevant data do exist, they are often most useful when presented in smaller expert-curated sets.

Another issue is data quality. WatsonPaths, which Mehta has been developing at the Cleveland Clinic, is the closest thing yet to that archetypal Dr. Watson, but it can work only if the AI can make sense of a patient’s records. As of now, electronic medical records are often an arcane collection of error-riddled data originally structured with hospital administration in mind rather than patient care.

Mehta’s other project, then, is the Watson Electronic Medical Records Assistant, in which the computer is trained to distill those records into something doctors and the program itself might actually use. “That has been a challenge,” says Mehta. “We are not there yet.”

The issues with electronic records underscore the fact that each Watson, whatever its theoretical potential, is deployed in the all-too-human—and often all-too-inhuman—reality of modern health care. Watson can’t make up for the shortage in primary-care physicians or restore the crucial doctor-patient bond lost in an era of 5-minute office visits.

Most fundamentally, Watson alone can’t change the fee-for-service reimbursement structure, common in the United States, which makes the quantity of care—the number of tests, treatments, and specialist visits—more profitable than bottom-line quality. “It’s not just a technology problem,” says Wachter. “It’s a social, clinical, policy, ethical, and institutional problem.”

Watson can’t address all those issues, but it might, perhaps, ameliorate some of them. Better medical-record processing could make for extra minutes with patients instead of extra screens. And helping doctors to analyze hospital and research data could make it easier for them to practice effective evidence-based medicine.

While its “Jeopardy!” triumph was “a great shot in the arm” for the field, says Mark Musen, a professor of medical informatics at Stanford, IBM is just one of many companies and institutions in the medical-AI space. Indeed, mention of Watson sometimes raises hackles within that community. It’s a response rooted partly in competitiveness, but also in a sense that attention to Watson has obscured the accomplishments of others.

Take the AI that Massachusetts General Hospital developed called QPID (Queriable Patient Inference Dossier), which analyzes medical records and was used in more than 3.5 million patient encounters last year. Diagnostic programs like DXplain and Isabel are already endorsed by the American Medical Association, and startup company Enlitic is working on its own diagnostics. The American Society of Clinical Oncology built its big-data-informed CancerLinQ program as a demonstration of what the Institute of Medicine, part of the U.S. National Academies, called a “learning health system.” Former Watson developer Marty Kohn is now at Sentrian, designing programs to analyze data generated from home-based health-monitoring apps.

Meanwhile, IBM is making its own improvements. In addition to refinements in learning techniques, Watson’s programmers have recently added speech recognition and visual-pattern analysis to their toolbox. Future versions might, like the fictional HAL 9000 of sci-fi fame, see and hear. They might also collaborate: Innovations in individual deployments could eventually be shared across the platform, turning the multiplicity of Watsons into a giant laboratory for developing new tools.

How will all this shake out? When will AI transform medicine, or at least help improve it in significant ways? It’s too soon to say. Medical AI is about where personal computers were in the 1970s, when IBM was just beginning to work on desktop computers, Bill Gates was writing Altair BASIC, and a couple of guys named Steve were messing around in a California garage. The application of artificial intelligence to health care will, similarly, take years to mature. But it could blossom into something big.

This article originally appeared in print as “Dr. Watson Will See You... Someday.”

An abridged version of this article appeared in the June 2015 issue of IEEE Spectrum.