The U.S. Defense Advanced Research Projects Agency, or DARPA, last month unveiled an ambitious program to significantly advance robotic manipulation.

The four-year Autonomous Robotic Manipulation, or ARM, program aims to develop both software and hardware that would enable robots to perform complex tasks autonomously, with humans providing only high-level direction.

This being DARPA, the tasks include things like disarming bombs and finding guns in gym bags. But as has happened with other DARPA initiatives, the program could have a broader impact on non-military robotics as well. To find out more about the agency’s plans, I spoke to Dr. Robert Mandelbaum, the program manager who conceived the ARM program in the hope of moving robotic manipulation “from an art to a science.”

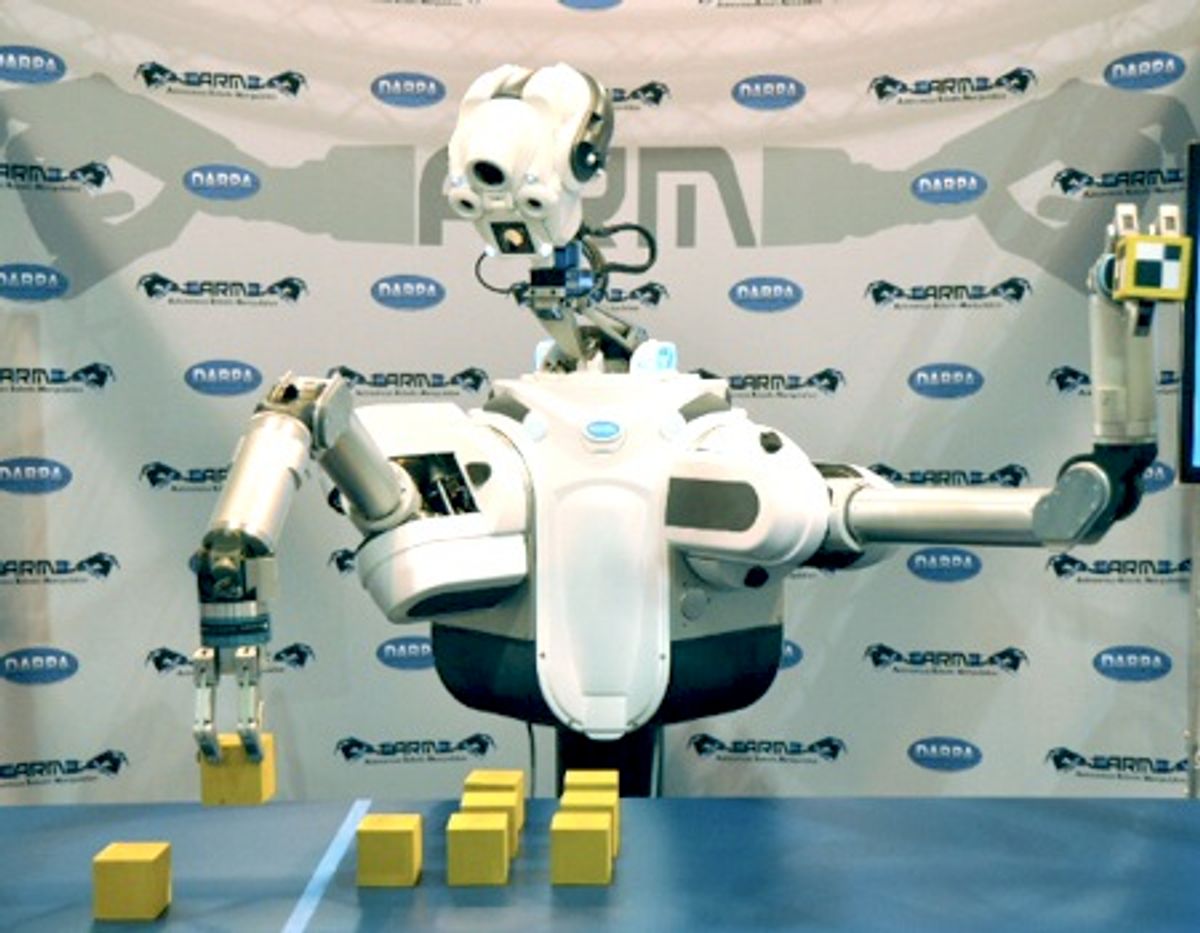

The program has three components. In the software track, six teams will each receive a half-million-dollar two-armed robot [photo above, still nameless] to use as a standard platform that they will have to program. In the hardware track, three teams will design and build new multi-fingered hands, which will have to be both robust and low cost.

The last track is the one I find most interesting. It is an outreach component, in which DARPA is essentially inviting anyone to participate by developing software and testing it on one of its half-million-dollar robots. The ARM program is still in its early stages, but Dr. Mandelbaum, who has just left DARPA after concluding his four-year stint as program manager, expects a big impact.

“I believe that once you figure out how to do manipulation right, it will fundamentally change the way we think about robotics,” he says. “The commercial applications will be all over the place. Robots will be everywhere.”

Here’s my full interview with him.

Erico Guizzo: Why does DARPA think it can make a significant advance in robotic manipulation when so many people have been working on this problem for so long?

Robert Mandelbaum: In preparing for this program, I did my due diligence. I ran around the country for about a year visiting every lab that had, or planned to have, a manipulation project. I was there to see what the state of the art is and decide whether there was something DARPA should be investing in. What I found is that there are pockets of very good research across the country, but there’s no galvanizing theme. At this point robotic manipulation is an art rather than a science. One reason for that is that it’s very expensive to build manipulation systems. There are research groups that build these robot arms and hands, but they build one every six months or so. Only those that manage to win an NSF grant or some chunk of money from somewhere are able to build these systems and maybe support a grad student for a couple of years. But then they run out of funding and that’s that. It’s almost a self-perpetuating cycle. What is needed, I believe, and DARPA is exactly the right agency to do this, is a major attack on manipulation to galvanize the field and move it forward.

EG: Is the goal to develop highly specialized robots skilled at specific manipulation tasks or more adaptable robots that can tackle a broad range of tasks?

RM: The goal is to enable robots to autonomously manipulate their environment. And by autonomously what I mean is that instead of having to control every joint of a robot, which is the current approach, you would be able to communicate with the robot by saying things like, “Pick up the IED [improvised explosive device], cut the blue wire, bring the rest back here for forensic testing.” And it would be able to do the hand-eye coordination and other motions itself to get the task done. The reality is that, as we build robots to assist humans, we’re not going to redesign human spaces and we’re not going to create a whole new set of tools that robots can use. Robots will have to navigate around human spaces and deal with human tools, and that’s what this program is about. That’s why we want to develop better [robotic] hands and arms and software to control them.

EG: How will each component of the program work, and who are the selected participants?

EG: What sort of advances do you expect to see?

RM: Some of the things we want them to do have never been done before. First, we want the robots to do bimanual manipulation. They have to use two arms to do tasks like opening up a gym bag. Second, they have to deal with deformable objects. I have no interest in robots that can pick up a soda can. That’s boring. Anything that has a rigid structure that’s easily modeled as a cylinder: c’mon, anyone can do that! What I’m interested in are things that don’t have a definite shape and are hard to model, like a T-shirt or another piece of clothing, or the gym bag I mentioned. Third, I want the teams to focus on complete, meaningful manipulation tasks. Again, I’m not interested in a robot that picks up a soda can. I don’t even care if it can pick up a screwdriver. What I care about is whether it can pick up a screwdriver and then use it to put in a screw. Or use a hammer to hit a nail. Another example is manipulation without vision: reach inside the gym bag that you just opened and find the gun that I hid inside. Humans do this type of task all the time; you find the keys in your pocket without looking. To do that, our teams will have to work on haptic feedback and other novel concepts.

EG: What kind of robot is the standard platform?

RM: It’s a bimanual platform. It has two arms, two hands, a head, and a neck. It has stereo cameras, laser range finders, two microphones, and a whole bunch of computing. One novelty is that the head and neck move. We wanted to have an active perception system that plans where it wants to look. Contrast that with a surveillance camera that can’t change its viewpoint; you get only what the camera sees. Our robot’s head and neck can move under software control. Let’s say, for example, the robot has to grab a coffee mug by the handle, but it can’t see the handle because the handle is behind the mug. The robot can move its head from side to side to get a better view and do the task. At this point the robot is a fixed platform, but in the last phase of the program we’ll look into making it mobile, which would extend the robot’s workspace. We also want to make it go outdoors and deal with uncontrolled lighting and the other conditions humans deal with. We want this robot to be able to go where humans go and do what humans do.
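The coffee-mug example can be made concrete with a toy sketch of viewpoint selection. It is not the ARM platform's software; the scene (a circular mug seen from above, a handle on its side angled slightly away from the robot, a head that slides sideways along a line) and every number in it are assumptions. It relies on one geometric fact: on a convex object, a surface point is visible, ignoring other occluders, exactly when the camera sits on the outward side of the tangent line through that point.

```python
import math

# Hypothetical top-down (2D) scene; every number here is an assumption.
# The mug is a circle centred at the origin, the robot's head sits on the
# -y side, and the handle is on the mug's right side, angled slightly away
# from the robot.
MUG_RADIUS = 0.04         # metres
CAMERA_Y = -0.6           # how far in front of the mug the head is
HANDLE_ANGLE_DEG = 80.0   # handle direction, measured from +y toward +x


def handle_visible(camera_x: float) -> bool:
    """On a convex object, a surface point is visible (ignoring other
    occluders) exactly when the camera lies on the outward side of the
    tangent line through that point."""
    a = math.radians(HANDLE_ANGLE_DEG)
    nx, ny = math.sin(a), math.cos(a)           # outward surface normal at the handle
    hx, hy = MUG_RADIUS * nx, MUG_RADIUS * ny   # where the handle meets the mug wall
    return (camera_x - hx) * nx + (CAMERA_Y - hy) * ny > 0.0


def choose_head_position(candidates):
    """Among viewpoints that see the handle, pick the smallest sideways move."""
    visible = [x for x in candidates if handle_visible(x)]
    return min(visible, key=abs) if visible else None


# Assume the head can slide sideways from -0.3 m to +0.3 m in 0.1 m steps.
candidates = [round(0.1 * i, 1) for i in range(-3, 4)]
print(handle_visible(0.0))               # prints: False (hidden straight ahead)
print(choose_head_position(candidates))  # prints: 0.2
```

Straight ahead, the handle is hidden by the mug itself; the planner picks the smallest sideways head shift, 0.2 m under these assumed numbers, that brings it into view. A real active-perception system would also have to weigh other occluders, sensor noise, and the cost of moving the head.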

EG: What about the hardware track? Why try to build new manipulators? Aren’t the ones out there good enough?

RM: The hardware track consists of three performers: SRI, iRobot, and Sandia National Labs. Their job is to design and build an affordable robotic hand. The affordable aspect is key here. Yes, there are lots of robot arms and hands out there. For example, we have robots that build cars in car plants. The problem is they are very expensive. One of these manipulators may cost in the region of 50,000 dollars, and that’s just for the hardware. It costs 10 times that to install and program the robot on a production line. So if you want to build a car and you need 50 of these robots, you can start figuring out how much money that would cost you. This explains in part why car companies don’t change their models very often: they’d have to reprogram all their robots! These robots are designed to be highly reliable, very repetitive, and very accurate. They’re totally scripted and have little knowledge of their environments or ability to monitor them. From a market perspective, this approach supports itself. The car companies are churning out hundreds of cars a day, and the cars are high-value assets. The companies can amortize the cost of the robots easily and can afford to support expensive robots and calibration systems. Now, if you look at it from a [Department of Defense] point of view, which is what DARPA is about, that doesn’t work for us. We need robots to do jobs that we don’t want to send humans to do because they’re too dangerous, too dirty, or too dull.
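Mandelbaum's cost argument is easy to run as arithmetic. The sketch below uses only his round numbers and reads "10 times that" as integration cost on top of the hardware price, which is an assumption; real figures vary by plant and robot.

```python
# Back-of-the-envelope arithmetic with the interview's round numbers.
# Assumption: the "10 times" installation/programming cost is paid in
# addition to the hardware price, not instead of it.
HARDWARE_COST = 50_000       # dollars per manipulator (interview figure)
INTEGRATION_FACTOR = 10      # install/program cost as a multiple of hardware
ROBOTS_PER_LINE = 50         # Mandelbaum's hypothetical production line

integration_cost = HARDWARE_COST * INTEGRATION_FACTOR   # 500,000
cost_per_robot = HARDWARE_COST + integration_cost       # 550,000
line_cost = cost_per_robot * ROBOTS_PER_LINE            # 27,500,000

print(f"Per robot: ${cost_per_robot:,}")
print(f"Line of {ROBOTS_PER_LINE}: ${line_cost:,}")
```

Under that reading, a 50-robot line comes to roughly $27.5 million before a single car is built, which is why such robots pay off only when amortized over hundreds of high-value cars a day.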

EG: What’s an example of a task you expect to accomplish with the software and hardware under development?

RM: One example would be, say, we found an IED on the side of the road and we must defuse it. I don’t want to send a human in and risk a life. I want to send a robot in. There’s only one problem: If I have to send a robot that costs me half a million dollars, and it costs the enemy something like 10 dollars to put together a fertilizer bomb, well, they’ve won already. They put in 10 dollars and we have to counter with half a million dollars’ worth of robot? That doesn’t work. My approach is that we can counter it without a super expensive hand and arm. We should be able to do almost the same job with one of those 5-dollar plastic hands that you can buy in toy shops. I’m exaggerating a little bit here, but I’m not exaggerating the basic concept: I’d like to have a hand that costs in the tens or maybe hundreds of dollars, not thousands, and that can go and defuse that IED.

EG: How can you make robots cheaper without mass producing them?

RM: Here’s my reasoning. You can bring the cost down if you don’t have to be as precise and accurate as an industrial robot has to be. You can give up precision in the hardware if you have very good control and feedback software. So you take the cost of producing hardware, which is hard to bring down, sometimes even in quantity, and replace it with clever software. Imagine you have a very sloppy hand that isn’t repeatable: If you tell it to go to the same place 10 times in a row, it doesn’t go to exactly the same point each time. But because you’re watching with your cameras, when it errs, you get the system to correct itself. Then you don’t need a better, very precise hand. And I’d argue that the human body is almost like that. If you close your eyes and reach out with your hand, it doesn’t go exactly where you told it to. But if your eyes are open, you hardly ever make a mistake.
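The idea of trading hardware precision for feedback can be sketched as a simple look-and-correct loop. This is a minimal illustration under stated assumptions, not DARPA's or any team's controller: the hand is modeled as hypothetically sloppy, missing each commanded move by up to 20 percent per axis, and the camera is assumed to report the hand's true position exactly.

```python
import math
import random


def sloppy_move(pos, target, rng, rel_error=0.2):
    """Hypothetical imprecise hand: on each axis it covers the commanded
    displacement but overshoots or undershoots by up to +/-20 percent."""
    return tuple(p + (t - p) * (1.0 + rng.uniform(-rel_error, rel_error))
                 for p, t in zip(pos, target))


def reach_open_loop(start, target, rng):
    """One blind move: whatever error the hardware makes is kept."""
    return sloppy_move(start, target, rng)


def reach_closed_loop(start, target, rng, tol=1e-3, max_steps=20):
    """Move, look, correct. The camera (assumed perfect here) reports where
    the hand actually landed, and the next move starts from that observation."""
    pos = start
    for step in range(max_steps):
        if math.dist(pos, target) < tol:
            return pos, step
        pos = sloppy_move(pos, target, rng)
    return pos, max_steps


rng = random.Random(0)
start, target = (0.0, 0.0), (0.5, 0.3)   # metres, assumed
blind = reach_open_loop(start, target, rng)
guided, steps = reach_closed_loop(start, target, rng)
print(f"open-loop error:   {math.dist(blind, target):.4f} m")
print(f"closed-loop error: {math.dist(guided, target):.6f} m after {steps} moves")
```

The open-loop reach keeps whatever error the hardware makes, while each look-and-correct cycle shrinks the remaining error at least fivefold, so the closed-loop reach lands within a millimeter after at most four moves here. The sketch assumes the error scales with the size of the move; if the hand instead added a fixed random offset on every move, feedback could not push the error below that noise floor.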

EG: So the hardware teams will design new robotic hands. Where do you envision these designs going? Do they have to make a humanlike hand, or is there freedom to create new kinds of hands?

RM: The teams have complete freedom to do whatever they want. The human hand can serve as a guide for them, but they are not limited to it. The only limitation is that they need to show that the hand can perform the tasks we provide. That’s their success criterion. In the hardware track, the hands the teams design can be controlled by a human operator. They can pick their best operators and attempt to do the tasks we give them. And an interesting thing is that these are the same tasks we expect the software guys to do autonomously, with their software running on the standard robotic platform.

EG: And then what? Are you going to hold a competition to decide which is the best hand?

RM: Exactly. We kicked off Phase 1 in July. The teams have until around November 2011 for the first phase. After that we’re going into Phase 2. For the hardware guys, Phase 2 consists of building five or six of the hands they designed, and we’ll use them to replace the hands we currently have on our standard platform. The software teams will have to perform the same tasks they did with the standard hand, now using the new, unseen hand. So the two tracks are going to merge. This forces the teams to make sure their software and hardware are portable and adaptable. And this entire program is about adaptability and resilience, about being able to put a system in an unknown environment and have it adapt.

EG: Why do you think it might be possible to “solve” the manipulation problem today and not before?

RM: People have been wanting to do manipulation for decades, but there have been some technical advances in the last five years or so that have finally made it possible. People had great dreams [for manipulation] in the ’80s, but we just did not have the computing power and the understanding of control that we have now. Just to give one example of a major advance: DARPA’s BigDog program. [Mandelbaum was the program manager for that program.] BigDog is not about manipulation, but if you think about what we have to control in BigDog, the complexity is amazing. Compare BigDog to an autonomous car, which had a big push in the ’90s. To control an autonomous car you deal with basically two degrees of freedom: you control the steering and you control the gas or the brakes; that is, you have a linear and an angular acceleration. When you want to control BigDog and make sure it doesn’t fall over, even when it slips on black ice and things like that, you’re controlling four legs, each with four actuators, at the same time. So you’re in 16-dimensional space. Control in 16-dimensional space, as any engineer would know, is not just a little bit harder than in two-dimensional space; it’s exponentially harder. But now we know how to do that. And this kind of knowledge, I believe, is the key enabling factor that’s changed in the last five years. Insight into how to do dynamic control of very complex systems is the key piece to solving manipulation today.
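One way to see why 16 dimensions are "exponentially harder" than two is the so-called curse of dimensionality. The sketch below is an illustration only, not how BigDog's controller works: it counts the cells a naive grid would need if each control dimension were discretized into an assumed 10 levels.

```python
def grid_states(dimensions: int, levels_per_dimension: int = 10) -> int:
    """Cells needed if every dimension is split into the same number of
    levels, as a naive grid-based planner or lookup table would do.
    The default of 10 levels is an arbitrary, assumed resolution."""
    return levels_per_dimension ** dimensions


car_dims = 2          # steering plus gas/brake, per the interview
bigdog_dims = 4 * 4   # four legs with four actuators each, per the interview

print(f"car:    {grid_states(car_dims):,} cells")
print(f"BigDog: {grid_states(bigdog_dims):,} cells")
```

Two dimensions give 100 cells; sixteen give 10^16, far too many to search exhaustively, which is one reason controllers for such systems cannot simply tabulate every combination of actuator commands.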

EG: Okay—manipulation adds more degrees of freedom. But still, why is it so hard?

RM: When you are doing manipulation, the number of degrees of freedom grows very fast. First you have the degrees of freedom in your arm and hand, somewhere in the region of 23 for just one arm and hand. If you’re doing a bimanual task, you have 46. Worse still is when you’re dealing with a deformable object like clothing or a piece of paper. The piece of paper itself has hundreds of degrees of freedom, and in many cases they are not predictable, because you don’t know ahead of time what the mass is, or the friction, or how bendable the object is, or how it’s going to react to the forces that you apply to it. When you put the whole system together, meaning the hand and the object that it’s manipulating, you’re in the hundreds of degrees of freedom. Another reason this is so different from, say, autonomous driving is that when you do navigation you’re trying not to interact with the world; you’re trying to avoid a crash. When you’re doing manipulation, if you don’t interact with the world, then you have failed. So my observation was that, in order to move beyond the current state of the art, which is a small number of researchers and a small number of very expensive systems, we have to get rid of that barrier to entry. I want to get lots of groups involved. To do that, you need an agency like DARPA that has the muscle to provide expensive systems to various groups and also to support their research.
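The degree-of-freedom count Mandelbaum describes can be tallied in a few lines. The 23-per-arm-and-hand figure comes from the interview; the cloth model is a hypothetical stand-in, a coarse 10-by-10 particle grid in which each node moves freely in three dimensions, chosen only to show how a deformable object reaches the hundreds.

```python
# Rough degree-of-freedom tally. The arm-and-hand figure is from the
# interview; the cloth model below is a hypothetical illustration.
ARM_AND_HAND_DOF = 23
bimanual_dof = 2 * ARM_AND_HAND_DOF   # 46, as stated in the interview


def cloth_dof(nodes_per_side: int, dof_per_node: int = 3) -> int:
    """Assumed particle-grid (mass-spring style) model of a sheet of paper
    or cloth: every grid node can translate freely in x, y, and z."""
    return nodes_per_side ** 2 * dof_per_node


sheet = cloth_dof(10)                 # 10 x 10 grid -> 300
total = bimanual_dof + sheet
print(f"robot: {bimanual_dof}, sheet: {sheet}, combined system: {total}")
```

Even this coarse grid puts the combined system at 346 degrees of freedom, and a finer mesh adds more. The count also leaves out the unknowns he mentions, such as mass, friction, and stiffness, which a model would have to estimate rather than simply track.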

EG: So what’s the ultimate goal of the program?

RM: The goal is to move us from an art to a science. What differentiates an art from a science? Repeatability. If you do an experiment, write a paper, and publish the results, I can read your paper and verify your results by doing the same experiment myself. That’s the definition of science: when you can verify by repeating an experiment. That does not exist in the manipulation world because no two people have the same arm. So in our program we got rid of that problem by giving everybody the same platform. The second element in moving from art to science is having metrics to measure performance. So we’ll have a set of realistic tasks that everybody has to perform. It’s similar to what happened in the computer vision field in the 1990s. The community went through a transformation from art into science, and what changed was this: in the early 1990s, people would self-evaluate their results, and there was no way to verify whether they were really good or not. Then the community started using standard images, such as the teapot and the woman with the hat, to compare their algorithms. If you wanted to prove you had a great new stereo processing algorithm, you had to test it against the same set of images that previous people had used. So our program is trying to introduce a standard platform and a set of tests. We’ll have numbers and metrics, and the teams will get a score. The teams can compare their own scores over time and also compare against each other.

EG: This effort seems limited to the program’s participants. How will it have a broader impact, beyond the few teams lucky enough to get DARPA funding?

RM: First, the teams we’re funding have subteams, so we’re involving a lot of people from this community. But you’re right. How do we make this broader? We really wanted anyone out there to be able to participate. When DARPA sponsored the Grand Challenge and the Urban Challenge [autonomous driving competitions], one thing we noticed was the huge number of people who came out of the woodwork to participate. We want to see the same thing with manipulation. So our program has an outreach component. We’re making a standard platform, the exact same robot we’re giving to our teams, available on the Web for people to test their manipulation software. Anyone can log in over the Web, download the interface and simulation software, and program the robot to perform a task. And when they are ready to try their software, they can send it over the Web, and our actual robot [located in a DARPA facility somewhere] will run it. For example, one of the tasks is to use a hammer to drive in a nail. You will be able to challenge, say, iRobot, because you think you can do better than them. The robot will do the hammer-and-nail task with your software and you’ll get a score. Maybe you’ll prove that you’re just as good as, if not better than, the DARPA teams.

EG: Robotics open source software is gaining a lot of momentum. Will the software developed as part of the ARM program be open source?

RM: We don’t require our teams to make their software open source. Some of them will release it as open source by their own choice. A lot of the support software will be open source. For various legal and other practical reasons, I did not want to make that a criterion in the program. But I have told the teams that I strongly encourage them to collaborate with each other and with the outside world. What I told them is that they should be competitive but not destroy each other in the beginning. Collaborate as everyone gets started, then compete.

EG: This is a DARPA program, so it has certain types of applications in sight, such as disarming IEDs. But what about home and industrial applications? How do you see the advances from this program trickling down to commercial applications that not only soldiers but regular people will benefit from?

RM: I think it won’t just trickle down; it will be a flood. I believe that once you figure out how to do manipulation right, it will fundamentally change the way we think about robotics. The commercial applications will be all over the place. Robots will be everywhere. I don’t know exactly what those applications will be. I’m eager to wait and see. This is the end of my four years at DARPA, and at that point program managers have to leave. [Dr. Mandelbaum has taken a job with Lockheed Martin.] It’s one of the things that keeps DARPA rejuvenated: You come in and you give your best ideas and then you leave. When I came to DARPA, I inherited the BigDog program, and I almost thought of it as my duty to leave behind a program as exciting as BigDog. I think I managed to do that with the ARM program. So I’m planting this cherry tree today, and I hope it will give some nice cherries one day.

This interview has been condensed and edited.


Erico Guizzo is the Director of Digital Innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.