Video Friday is your weekly selection of awesome robotics videos, collected by your Automaton bloggers. We’ll also be posting a weekly calendar of upcoming robotics events for the next few months; here’s what we have so far (send us your events!):

MARSS 2018 – July 4-8, 2018 – Nagoya, Japan

AIM 2018 – July 9-12, 2018 – Auckland, New Zealand

ICARM 2018 – July 18-20, 2018 – Singapore

ICMA 2018 – August 5-8, 2018 – Changchun, China

SSRR 2018 – August 6-8, 2018 – Philadelphia, Pa., USA

ISR 2018 – August 24-27, 2018 – Shenyang, China

BioRob 2018 – August 26-29, 2018 – University of Twente, Netherlands

RO-MAN 2018 – August 27-30, 2018 – Nanjing, China

ELROB 2018 – September 24-28, 2018 – Mons, Belgium

ARSO 2018 – September 27-29, 2018 – Genoa, Italy

Let us know if you have suggestions for next week, and enjoy today’s videos.

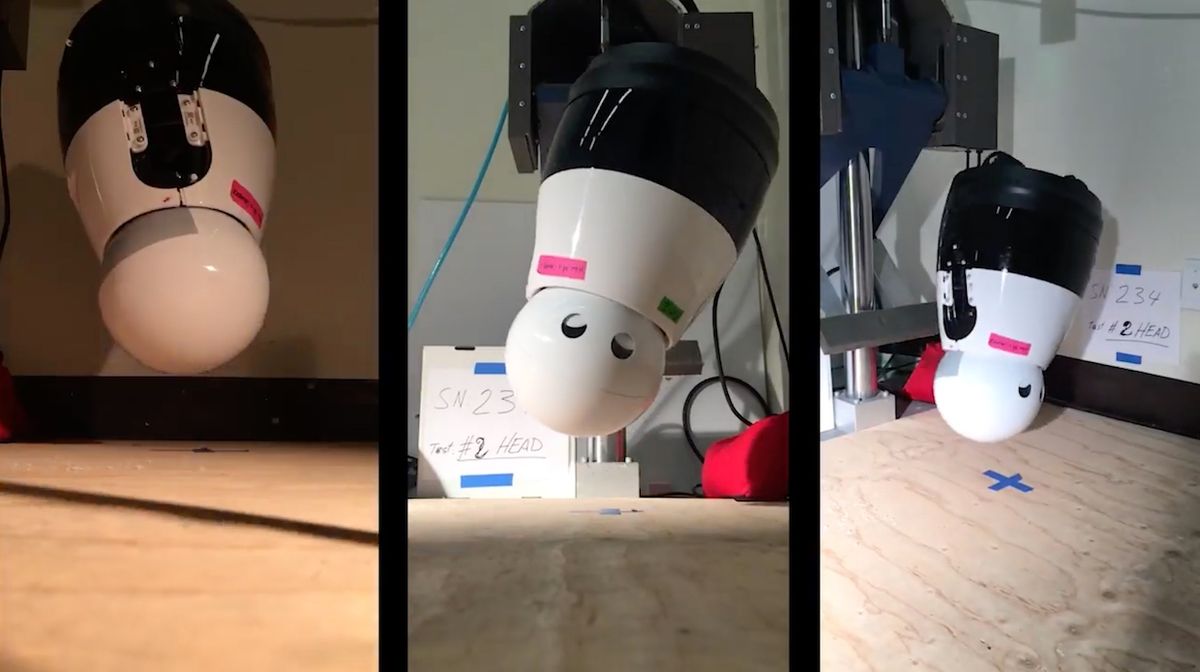

Ouch!!

[ Kuri ]

Living organisms intertwine soft (e.g., muscle) and hard (e.g., bones) materials, giving them an intrinsic flexibility and resiliency often lacking in conventional rigid robots. The emerging field of soft robotics seeks to harness these same properties in order to create resilient machines. The nature of soft materials, however, presents considerable challenges to aspects of design, construction, and control -- and up until now, the vast majority of gaits for soft robots have been hand-designed through empirical trial-and-error. This manuscript describes an easy-to-assemble tensegrity-based soft robot capable of highly dynamic locomotive gaits and demonstrating structural and behavioral resilience in the face of physical damage. Enabling this is the use of a machine learning algorithm able to discover novel gaits with a minimal number of physical trials. These results lend further credence to soft-robotic approaches that seek to harness the interaction of complex material dynamics in order to generate a wealth of dynamical behaviors.

[ Paper ]

One of my favorite RoboCup events is when a team of mediocre humans goes up against the mid-size robots:

Okay, the humans were a bit better perhaps. But they weren’t THAT much better.

[ Tech United ]

RoboCup Standard Platform League is 5v5 Nao on Nao. Here’s this year’s final.

[ Team HTWK ]

We’d rather see real fields full of Naos chasing soccer balls, but simulation is the next best thing. These are the finals of the RoboCup 2018 Simulation League.

[ UT Austin Villa ]

Soccer with adult size robots is, well, it’s not the most exciting, but it’s impressive nonetheless. This is the adult size RoboCup 2018 final.

[ NimbRo ]

By having and expressing a personality, a robot interacts with us in ways we already understand. Instead of using canned animations and expressions for personality, each Misty generates new, nuanced behaviors on the fly.

[ Misty Robotics ]

From the Robotics and Perception Group at UZH:

Autonomous agile flight brings up fundamental challenges in robotics, such as coping with unreliable state estimation, reacting optimally to dynamically changing environments, and coupling perception and action in real time under severe resource constraints. In this work, we consider these challenges in the context of autonomous, vision-based drone racing in dynamic environments. Our approach combines a convolutional neural network (CNN) with a state-of-the-art path-planning and control system. The CNN directly maps raw images into a robust representation in the form of a waypoint and desired speed. This information is then used by the planner to generate a short, minimum-jerk trajectory segment and corresponding motor commands to reach the desired goal. We demonstrate our method in autonomous agile flight scenarios, in which a vision-based quadrotor traverses drone-racing tracks with possibly moving gates. Our method does not require any explicit map of the environment and runs fully onboard. We extensively test the precision and robustness of the approach in simulation and in the physical world. We also evaluate our method against state-of-the-art navigation approaches and professional human drone pilots.

[ RPG ]
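
For readers curious what a "minimum-jerk trajectory segment" looks like in practice, here is a minimal sketch of the simplest textbook case: a one-dimensional, rest-to-rest segment described by a quintic polynomial. This is an illustration only and not the RPG planner, which handles full 3D states and nonzero initial velocity; the function names and parameters below are hypothetical.

```python
def _normalized_time(duration, t):
    """Clamp t / duration into [0, 1]; duration must be positive."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    return min(max(t / duration, 0.0), 1.0)


def min_jerk_position(x0, xf, duration, t):
    """Position at time t on a rest-to-rest minimum-jerk segment.

    Assumes zero velocity and acceleration at both endpoints, which
    is a simplification made for this sketch.
    """
    s = _normalized_time(duration, t)
    # Quintic blend that goes smoothly from 0 to 1.
    blend = 10 * s**3 - 15 * s**4 + 6 * s**5
    return x0 + (xf - x0) * blend


def min_jerk_velocity(x0, xf, duration, t):
    """Velocity at time t (time derivative of the position above)."""
    s = _normalized_time(duration, t)
    dblend = 30 * s**2 - 60 * s**3 + 30 * s**4
    return (xf - x0) * dblend / duration


if __name__ == "__main__":
    # Sample a 4-second segment from 0 m to 2 m.
    for step in range(5):
        t = float(step)
        print(t, min_jerk_position(0.0, 2.0, 4.0, t),
              min_jerk_velocity(0.0, 2.0, 4.0, t))
```

The segment starts and ends at rest and reaches peak speed at its midpoint, which is what makes this family of curves attractive for smooth, short-horizon replanning.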

From Disney Research: Automated Deep Reinforcement Learning Environment for Hardware of a Modular Legged Robot

In this paper, we present an automated learning environment for developing control policies directly on the hardware of a modular legged robot. This environment facilitates the reinforcement learning process by computing the rewards using a vision-based tracking system and relocating the robot to the initial position using a resetting mechanism. We employ two state-of-the-art deep reinforcement learning (DRL) algorithms, Trust Region Policy Optimization (TRPO) and Deep Deterministic Policy Gradient (DDPG), to train neural network policies for simple rowing and crawling motions. Using the developed environment, we demonstrate both learning algorithms can effectively learn policies for simple locomotion skills on highly stochastic hardware and environments. We further expedite learning by transferring policies learned on a single legged configuration to multi-legged ones.

[ Disney Research ]

Hey, it’s a drone being real-world commercially useful! Amazing!

KOTUG has chosen Delft Dynamics to develop a pioneering invention - using a drone to connect the towline to an assisted vessel. This patent pending drone technology will drastically improve the safety margin of tug operations as this will avoid the need for manoeuvring in the so-called danger zone.

[ Delft Dynamics ]

From Disney Research: Computational Co-Optimization of Design Parameters and Motion Trajectories for Robotic Systems

We present a novel computational approach to optimizing the morphological design of robots. Our framework takes as input a parameterized robot design as well as a motion plan consisting of trajectories for end-effectors and, optionally, for its body. The algorithm optimizes the design parameters including link lengths and actuator placements while concurrently adjusting motion parameters such as joint trajectories, actuator inputs, and contact forces. Our key insight is that the complex relationship between design and motion parameters can be established via sensitivity analysis if the robot’s movements are modeled as spatiotemporal solutions to an optimal control problem. This relationship between form and function allows us to automatically optimize the robot design based on specifications expressed as a function of actuator forces or trajectories. We evaluate our model by computationally optimizing four simulated robots that employ linear actuators, four-bar linkages, or rotary servos. We further validate our framework by optimizing the design of two small quadruped robots and testing their performances using hardware implementations.

[ Disney Research ]

With the initial purpose of increasing the productivity of our own automobile component manufacturing facilities, we have been developing DENSO robots for 50 years. Today, we introduce the new collaborative robot “COBOTTA.” The human-friendly, compact, and portable design allows you to take COBOTTA anywhere, and automate tasks right away. No expert knowledge is required, making operation amazingly easy. Do you need that extra hand? Do you want to leave simple tasks to robots, and make more time for creative work? COBOTTA will open infinite possibilities to address your needs, and realize creative, new ideas.

Here’s another Denso robot removing the eyes from peeled potatoes, because potatoes watching themselves being boiled, mashed, and eaten is kind of creepy.

[ Denso ]

Created in 2010, this is the interactive demo that fleshed out the basics of the train-by-demonstration paradigm which was ultimately implemented on Baxter and Sawyer. In this case, the user is demonstrating a pick and place. Note how the robot solicits information from the user through gaze. For example, when the video first starts, the robot alternates looking at the user and its arm, in effect soliciting the user to show it what the arm should do. The user mouses over the arm, and moves it over to the location where a pick will occur. When disks appear, the robot picks up a red disk, but doesn’t know where to put it, and solicits the user to show it where to put red disks. Similarly, when a blue disk appears, the robot solicits the user to show it where to put blue disks. The end of the video demonstrates how the robot signals that it sees something enter its workspace, by slowing down and alternating its gaze.

[ Rethink Robotics ]

Who needs a mobile manipulator when your manipulator IS mobile?

[ YouTube ]

Physical therapy is time consuming and expensive, but robots are here to help!

[ LCRA ]

Cynthia Breazeal speaks at Microsoft Research on Living, Learning, and Creating With Social Robots.

Social robots are designed to interact with people in an interpersonal way, engaging and supporting collaborative social and emotive behavior for beneficial outcomes. At a time when citizens are beginning to live with intelligent machines on a daily basis, we have the opportunity to explore, develop and assess humanistic design principles to support and promote human flourishing at all ages and stages. In this talk, I highlight a number of research projects where we are developing, fielding, and assessing social robots over repeated encounters with people in real-world environments such as the home, schools, or hospitals. We develop adaptive algorithmic capabilities for robots to support sustained interpersonal engagement and personalization to support specific interventions. We then examine the impact of the robot’s social embodiment, non-verbal and emotive expression, and personalization capabilities on sustaining people’s engagement, improving learning, impacting behavior, and shaping attitudes, in comparison to other personal technologies. I will highlight some provocative findings for a 1-month, cross-generational, in-home study comparing a smart speaker to a social robot covering engagement, usage and desired roles. Finally, it is imperative that the general public understand AI and are empowered to create with AI. I will describe ongoing work with preK-middle school AI education with toolkits that enable kids to code, train, and interact with AI on projects of personal interest. Only when AI is democratized so that everyday people are empowered to design solutions with AI, will AI truly benefit all.

In this week’s episode of Robots in Depth, Per interviews Stefano Stramigioli from the Robotics and Mechatronics lab at University of Twente.

Stefano Stramigioli talks about how he leads the Robotics and Mechatronics lab at University of Twente. The lab focuses on inspection and maintenance robotics, as well as medical applications. Stefano got into robotics when he saw the robots in Star Wars, and started out building a robotic arm from scratch, including making his own PCBs. He also tells us about the robotic peregrine falcon that has been spun out into what is now a successful company of its own. Stefano and Per agree that the simple reason for being in robotics is that it’s just so cool!

[ Robots in Depth ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.

Erico Guizzo is the Director of Digital Innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.