This is a guest post. The views expressed here are solely those of the author and do not represent positions of IEEE Spectrum or the IEEE.

Killer drones in the hands of terrorists massacring innocents. Robotic weapons of mass destruction breeding chaos and fear. A video created by advocates of a ban on autonomous weapons would have you believe this dystopian future is right around the corner if we don't act now. The short video, called “Slaughterbots,” was released last month, coinciding with United Nations meetings on autonomous weapons. The UN meetings ended inconclusively, but the video is getting traction. It's gotten over 2 million views and has sparked dozens of news stories. As a piece of propaganda, it works great. As a substantive argument for a ban on autonomous weapons, the video fails miserably.

Obviously, a world in which terrorists can unleash swarms of killer drones on innocent civilians would be terrible, but is the future the video depicts realistic? The movie's slick production quality helps to gloss over its leaps of logic. It immerses the viewer in a dystopian nightmare, but let's be clear: It's very much science fiction.

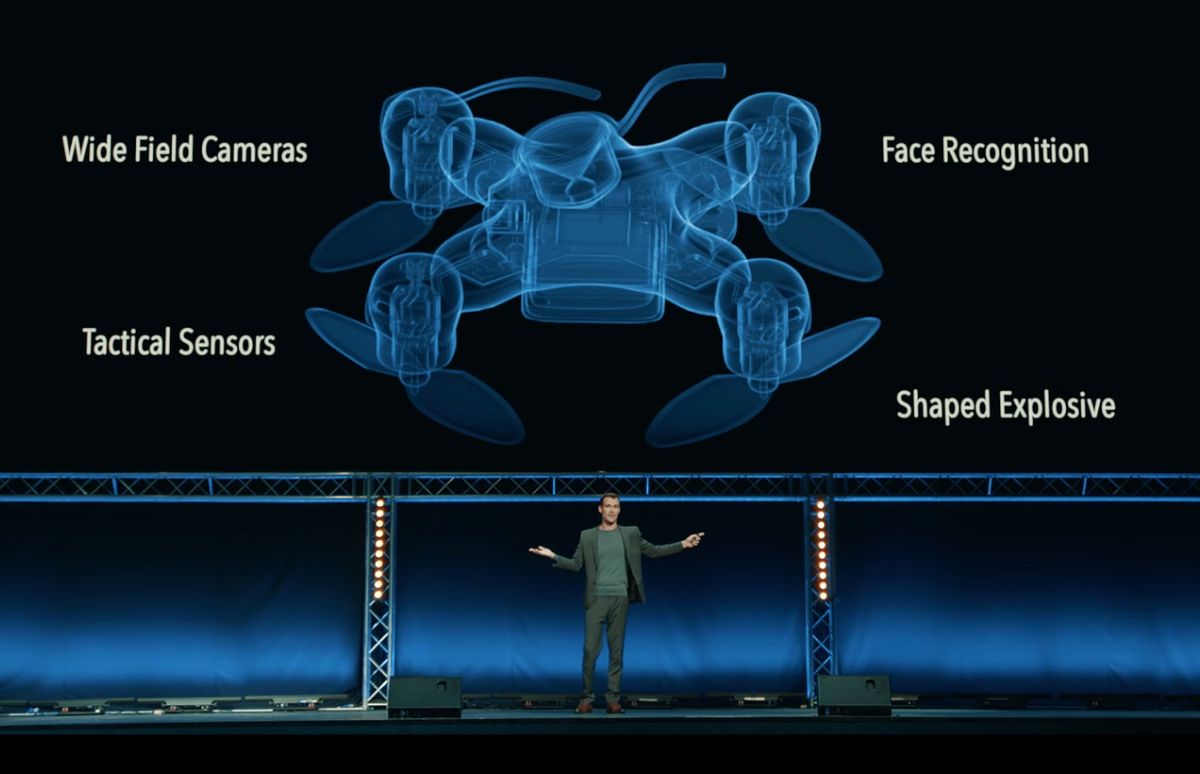

The central premise of “Slaughterbots” is that in the future militaries will build autonomous microdrones with shaped charges that can fly up to someone's head and detonate an explosive, killing the person. In the film, these “slaughterbots” quickly fall into the hands of terrorists, resulting in mass killings worldwide.

The basic concept is grounded in technical reality. In the real world, the Islamic State has used off-the-shelf quadcopters equipped with small explosives to attack Iraqi troops, killing or wounding dozens of Iraqi soldiers. Today's terrorist drones are largely remotely controlled, but hobbyist drones are becoming increasingly autonomous. The latest models can navigate to a fixed target on their own, avoid obstacles, and autonomously track and follow moving objects. A small drone equipped with facial recognition technology could potentially be used to autonomously search for and kill specific individuals, as “Slaughterbots” envisions. It took me just a few minutes of searching online to find the resources necessary to download and train a free neural network to do facial recognition. So while no one has yet cobbled the technology together in the way the video depicts, all of the components are real.

I want to make something very clear: There is nothing we can do to keep that underlying technology out of the hands of would-be terrorists. This is upsetting, but it's very important to understand. Just as terrorists can and do use cars to ram crowds of civilians, the underlying technology to turn hobbyist drones into crude autonomous weapons is already too ubiquitous to stop. This is a genuine problem, and the best response is to focus on defensive measures to counter drones along with surveillance to catch would-be terrorists ahead of time.

The “Slaughterbots” video takes this problem and blows it out of proportion, however, suggesting that drones would be used by terrorists as robotic weapons of mass destruction, killing thousands of people at a time. Fortunately, this nightmare scenario is about as likely to happen as HAL 9000 locking you out of the pod bay doors. The technology shown in the video is plausible, but basically everything else is a bunch of malarkey. The video assumes the following:

- Governments will mass-produce lethal microdrones to use them as weapons of mass destruction;

- There are no effective defenses against lethal microdrones;

- Governments are incapable of keeping military-grade weapons out of the hands of terrorists;

- Terrorists are capable of launching large-scale coordinated attacks.

These assumptions range from questionable, at best, to completely fanciful.

Of course, the video is fictional, and defense planners do often use fictionalized scenarios to help policymakers think through plausible events that may occur. As a defense analyst at a think tank and in my prior job as a strategic planner at the Pentagon, I used fictional scenarios to help inform choices about what technologies the United States military should invest in. To be useful, however, these scenarios need to at least be plausible. They need to be something that could happen. The scenario depicted in the “Slaughterbots” video fails to account for political and strategic realities about how governments use military technology.

First, there is no evidence that governments are planning to mass-produce small drones to kill civilians in large numbers. In my forthcoming book, Army of None: Autonomous Weapons and the Future of War, I examine next-generation weapons being built in defense labs around the world. Russia, China, and the United States are all racing ahead on autonomy and artificial intelligence. But the types of weapons they are building are generally aimed at fighting other militaries. They are “counter-force” weapons, not “counter-value” weapons that would target civilians. Counter-force autonomous weapons raise their own sets of concerns, but they aren't designed for mass targeting of civilians, nor could they be easily repurposed to do so.

Second, in the video, we're told the drones can defeat “any countermeasure.” TV pundits scream, “We can't defend ourselves.” This isn't fiction; it's farce. Every military technology has a countermeasure, and countermeasures against small drones aren't even hypothetical. The U.S. government is actively working on ways to shoot down, jam, fry, hack, ensnare, or otherwise defeat small drones. The microdrones in the video could be defeated by something as simple as chicken wire. The video shows heavier-payload drones blasting holes through walls so that other drones can get inside, but the solution is simply layered defenses. Military analysts look at the cost-exchange ratio between offense and defense, and in this case, the costs heavily favor static defenders.

In a world where terrorists launch occasional small-scale attacks using DIY drones, people are unlikely to absorb the inconveniences of building robust defenses, just like people don't wear body armor to protect against the unlikely event of being caught in a mass shooting. But if an enemy country built hundreds of thousands of drones to wipe out a city, you bet there'd be a run on chicken wire. The video takes a plausible problem—terrorist attacks with drones—and scales it up without factoring in how others would respond. If lethal microdrones were built en masse, defenses and countermeasures would be a national priority, and in this case the countermeasures are simple. Any weapon that can be defeated by a net isn't a weapon of mass destruction.

Third, the video assumes that militaries are incapable of preventing terrorists from getting access to military-grade weapons. But we don't give terrorists hand grenades, rocket launchers, or machine guns today. Terrorist attacks with drones are a concern precisely because they involve DIY explosives strapped to readily available technology. This is a genuine problem, but again the video scales this threat up in ways that are unrealistic. Even if militaries were to build lethal microdrones, terrorists are no more likely to get their hands on large numbers of them than other military technologies. Weapons do proliferate over time to nonstate actors in war zones, but just because antitank guided missiles are prevalent in Syria doesn't mean they're commonplace in New York. Terrorists use airplanes and trucks for attacks precisely because successfully smuggling military-grade weapons into a Western country isn't that easy.

Fourth, the video assumes terrorists can carry out coordinated attacks at a scale that is not plausible. In one scene, two men release a swarm of about 50 drones from the back of a van. This specific scene is fairly realistic; one of the challenges of autonomy is that a small group of people could launch a larger attack than might otherwise be possible. Something like a truck full of 50 drones is a reasonable possibility. Again, though, the video takes this scenario to the absurd. The video claims that 8,300 people are killed in simultaneous attacks. If the men in the van depict a typical attack, then this level of casualties would equate to over 160 coordinated attacks worldwide. Terrorist groups often launch coordinated attacks, but usually on the scale of single-digit numbers of attacks. The video assumes not just superweapons but ones that are in the hands of supervillains.
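The arithmetic behind that figure can be checked with a short sketch. It assumes, as the van scene suggests but the video never states, that every drone kills exactly one person; the function name and the `kills_per_drone` parameter are hypothetical, introduced only for illustration.

```python
import math


def attacks_needed(total_casualties: int,
                   drones_per_attack: int,
                   kills_per_drone: float = 1.0) -> int:
    """Return how many van-sized attacks are needed to reach a casualty total.

    Assumption (not from the video): each drone kills `kills_per_drone`
    people on average, with 1.0 meaning a perfect hit rate.
    """
    casualties_per_attack = drones_per_attack * kills_per_drone
    # Round up: a partial attack still has to be launched as a whole attack.
    return math.ceil(total_casualties / casualties_per_attack)


# Figures from the video: 8,300 deaths, about 50 drones per van.
print(attacks_needed(8300, 50))  # 166
```

A perfect hit rate is the most generous case for the attackers. If only half the drones found a target, the same death toll would take twice as many simultaneous attacks.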

The movie uses hype and fear to skip past these crucial assumptions, and in doing so it undermines any rational debate about the risk of terrorists acquiring autonomous weapons. The video makes clear we're supposed to be afraid. But what are we supposed to be afraid of? A weapon that chooses its own targets (which the video is actually ambiguous about)? A weapon with no countermeasure? The fact that terrorists can get ahold of the weapon? The ability of autonomy to scale up attacks? If you want to drum up fears of “killer robots,” the video is great. But as a substantive analysis of the issue, it falls apart under even the most casual scrutiny. The video doesn't put forward an argument. It's sensationalist fear-mongering.

Of course, the whole purpose of the video is to scare the viewer into action. The video concludes with UC Berkeley professor Stuart Russell warning of the dangers of autonomous weapons and imploring the viewer to act now to stop this nightmare from becoming a reality. I have tremendous respect for Stuart Russell, both as an artificial intelligence researcher and as a contributor to the debate on autonomous weapons. I've hosted Russell at events at the Center for a New American Security, where I run a research program on artificial intelligence and global security. I have no doubt Russell's views are sincere. But in attempting to persuade the viewer, the video makes assumptions that are not supportable.

Even worse, the proposed solution—a legally binding treaty banning autonomous weapons—won't solve the real problems humanity faces as autonomy advances in weapons. A ban won't stop terrorists from fashioning crude DIY robotic weapons. Nor would a ban on the kinds of weapons the video imagines do anything to address the risks that arise from counter-force autonomous weapons. (In fact, it's not even clear whether a ban would prohibit the weapons shown in the video, which are actually fairly discriminate.)

By focusing on extreme and implausible scenarios, the video actually undermines progress on real concerns about autonomous weapons. Nations that are leading developers of robotic weapons are likely to dismiss the fears raised in “Slaughterbots” out of hand. The video plays into the hands of those who argue that these fears of autonomous weapons are overhyped and irrational.

Autonomous weapons raise important questions about compliance with the laws of war, risk and controllability, and the role of humans as moral agents in warfare. These are important issues that merit serious discussion. When Russell and others engage in spirited debate on these topics, I welcome the conversation. But that's not what “Slaughterbots” is. The video has succeeded in grabbing media attention, but its sensationalism undercuts the kind of serious intellectual discourse that is actually needed on autonomous weapons.

Paul Scharre (@paul_scharre) is the vice president and director of studies at the Center for a New American Security. From 2009 to 2012, Scharre led the Defense Department's working group that resulted in the DoD policy directive on autonomy in weapons.