Nurses, doctors, and other healthcare workers form the front line in the fight against pandemics. Not only are they needed to treat sick patients, but they also put themselves at high risk of contracting the disease. In the COVID-19 outbreak, thousands of doctors and nurses have fallen ill, and hundreds have died. These risks grow even greater when shortages of personal protective equipment (PPE) leave healthcare workers no alternative but to reuse or improvise PPE.

The interest in using robotics to help combat the COVID-19 outbreak has been huge, and for good reason: Having more robots means less person-to-person contact, which means fewer healthcare workers get sick. It also reduces community transmission and conserves scarce PPE. At the same time, the use of telemedicine to let doctors and nurses communicate with patients without risk of infection is rising sharply. And although robots have so far not interacted physically with patients, it’s not far-fetched to imagine a future in which they could.

In this article, we ask the question: How can robots minimize exposure of healthcare workers to patients throughout the entire treatment process? Over the last several years, our research in the Intelligent Motion Laboratory at the University of Illinois at Urbana-Champaign has built and tested prototype systems to explore the technical feasibility, human factors, and economic viability of robot use in patient care, and here we’ll share some of our insights and lessons learned.

Robots and the future of healthcare

We envision that the future of healthcare will involve an integration of robotics and telemedicine that we call tele-nursing: a human nurse remotely controls a robot to perform many, or even most, of the tasks involved in patient care. In other words, the robot becomes the nurse’s eyes, ears, and body. The components needed to make tele-nursing possible (robotic manipulation, teleconferencing, augmented reality, health sensors, and low-latency communication networks) are becoming increasingly mature. As tele-nursing becomes more capable, nurses will be able to perform a large portion of patient care through robots, reducing PPE consumption and improving social distancing.

The COVID-19 pandemic is accelerating the trend toward increased use of robotics and telemedicine in healthcare. Both technologies can aid social distancing, which reduces the rate of healthcare-acquired infections among patients and personnel.

Hospitals have already been using autonomous robots to disinfect hospital rooms with ultraviolet light, transport specimens, deliver food, medicine, and supplies, and greet patients and provide information. And although telemedicine is most heavily applied to in-home remote consultation, hospitals and nursing schools are increasingly using telepresence robots such as those from VGo, Beam, and Double Robotics. These “Skype-on-wheels” platforms allow for communication with patients, visual inspection, and driving around a room to view equipment and monitors. In the COVID-19 response, mobile telepresence robots with video screens and touchscreen interfaces have been adopted in Italy to let healthcare workers check on patients without physically entering quarantine rooms.

Is it realistic for healthcare robots to transition from the specialized tasks they’re performing now to the level of reliable and safe general-purpose autonomy required for them to be responsible for patient treatment? One could imagine a robot like Baymax in the animated movie “Big Hero 6” that diagnoses, treats, and even comforts patients. However, human healthcare professionals are well trained, highly adaptable, and possess specialized knowledge; together, these qualities set an extremely high bar that no robotic system is likely to clear any time soon. Instead, tele-nursing aims to combine the strengths of telemedicine (leveraging the expert knowledge of healthcare workers and face-to-face contact) with the strengths of robotics (social distancing and capabilities in hazardous environments) to give the best outcome to patients and healthcare workers alike.

Tele-nursing robot components and capabilities

Through years of research into telemedicine and telehealth in partnership with the Health Innovation Lab at Duke University’s School of Nursing, we’ve identified key capabilities that will enable the next generation of tele-nursing. A tele-nursing system will require a robot that is physically present with the patient, as well as a user interface that a nurse or other healthcare provider operates from a remote location. The robot should provide manipulation capabilities in addition to the navigation and telemedicine capabilities already available on traditional telepresence platforms.

Overall, a tele-nursing robot serves five primary functions:

- Communication (bidirectional audio and video link between staff and patient)

- Mobility (within a room or between rooms)

- Measurement (clinical data collection and assessments)

- General manipulation (from centimeter to sub-millimeter accuracy)

- Tool use (human or robot-specific tools)

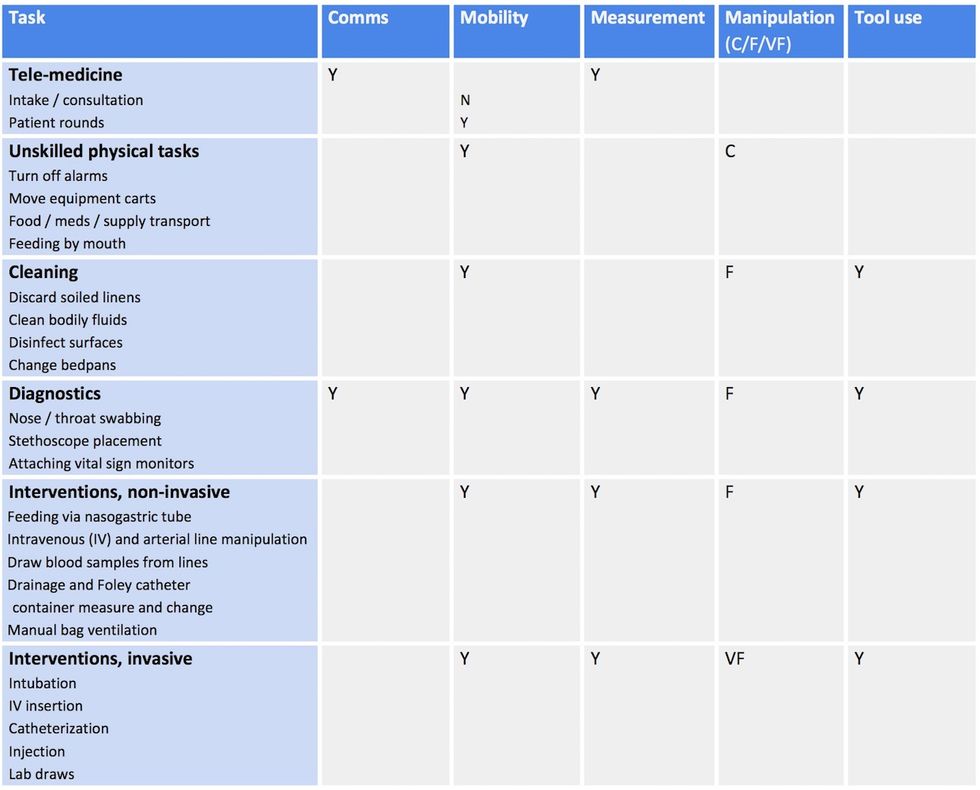

In consultation with practicing nurses on the front lines of COVID-19 care delivery, we identified a set of clinical tasks related to the treatment of COVID-19 patients. In Table 1 we list these tasks and indicate which of the functions listed above each task requires.

Across tasks, mobility and measurement are important, but these are relatively easy to provide. The bottlenecks for robotics R&D are manipulation and tool use, since the required strength and dexterity range widely, from the brute strength needed to lift a patient to the delicate precision needed for IV insertion. Current hardware is unlikely to perform well at both coarse and fine scales, so covering the entire treatment regime will require either multiple specialized robots or a combination of human and robot patient care.
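To make the coverage argument concrete, here is a minimal Python sketch that encodes the five functions as bit flags and checks which tasks a given platform can cover. The platform definitions, task names, and each task’s required functions are hypothetical illustrations chosen for this sketch, not the contents of Table 1.

```python
from __future__ import annotations

from enum import Flag, auto


class Function(Flag):
    """The five primary tele-nursing robot functions listed in the article."""
    COMMUNICATION = auto()
    MOBILITY = auto()
    MEASUREMENT = auto()
    MANIPULATION = auto()
    TOOL_USE = auto()


# Hypothetical platforms: a conventional telepresence robot provides
# communication and mobility; bolting on a simple arm adds manipulation.
TELEPRESENCE = Function.COMMUNICATION | Function.MOBILITY
TELEPRESENCE_WITH_ARM = TELEPRESENCE | Function.MANIPULATION

# Hypothetical task requirements for illustration only (NOT Table 1).
TASKS = {
    "video consultation": Function.COMMUNICATION,
    "silence false equipment alarm": (
        Function.COMMUNICATION | Function.MOBILITY | Function.MANIPULATION
    ),
    "IV insertion": Function.MANIPULATION | Function.TOOL_USE,
}


def missing_functions(platform: Function, required: Function) -> Function:
    """Return the required functions the platform lacks (an empty flag if none)."""
    return required & ~platform


def covered_tasks(platform: Function) -> list[str]:
    """List the tasks whose required functions are all provided by the platform."""
    return [name for name, req in TASKS.items() if not missing_functions(platform, req)]
```

With this encoding, OR-ing several platforms’ flags together models a team of specialized robots, and any function still reported by `missing_functions` for that combined set marks a task that would still need a human.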

Lessons learned from the TRINA Project

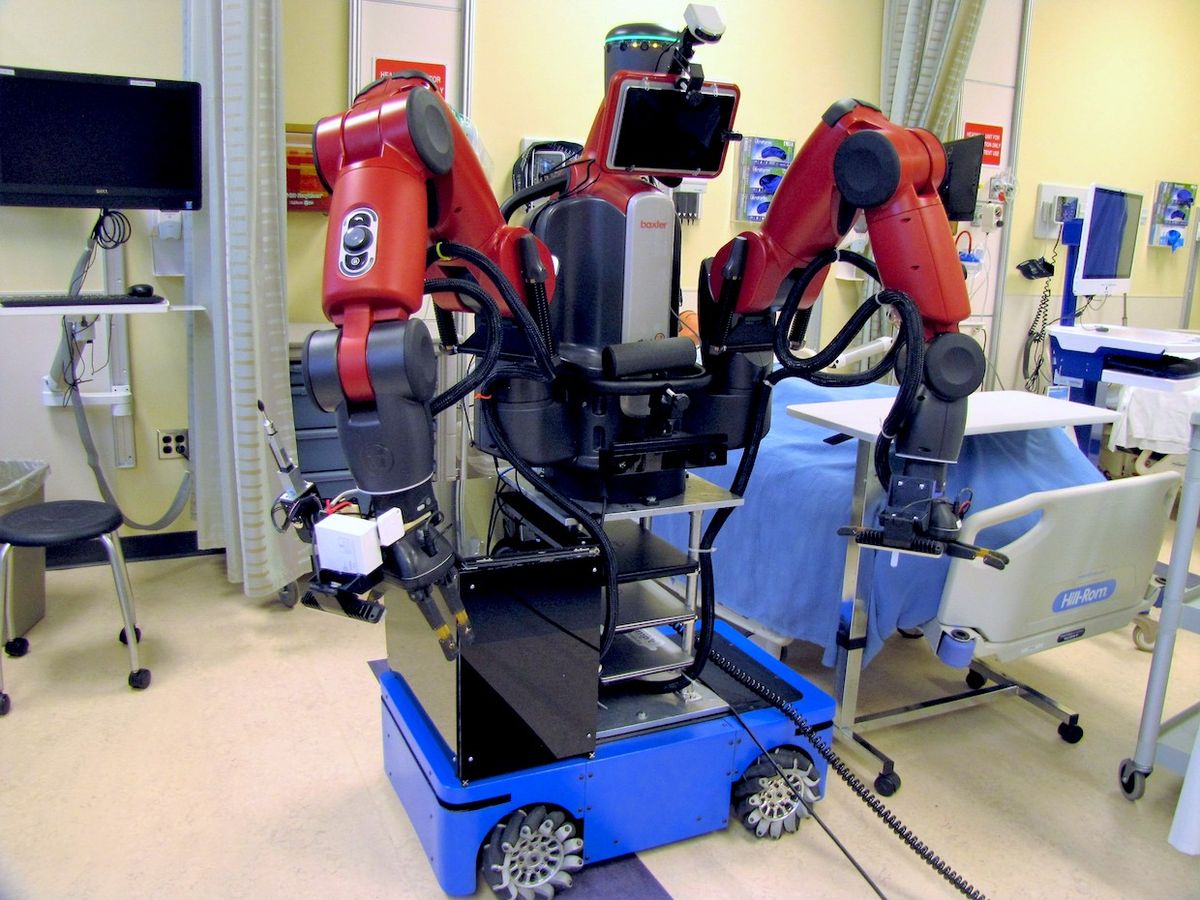

Over the last five years, we have been deeply immersed in these challenges through the development of a prototype tele-nursing robot called the Tele-Robotic Intelligent Nursing Assistant, or TRINA. Originally sponsored by the National Science Foundation in response to the 2014 Ebola outbreak, TRINA is a mobile manipulation robot with telepresence capabilities that is designed to let medical staff perform a variety of routine tasks, such as bringing food and medicine, moving equipment, cleaning, and monitoring vital signs, while communicating with the patient.

We’ve tested the TRINA platform over hundreds of hours in labs and simulated clinical tests (mock hospital rooms and professional actors used in nursing training), using both trained expert “pilots” and lightly trained nurses as operators. There are now three versions of TRINA: the original at Duke University, a second at Worcester Polytechnic Institute, and TRINA 2.0, currently in development at the University of Illinois at Urbana-Champaign.

TRINA 2.0 features a slimmer profile, which helps it travel through tighter spaces, and more precise manipulation capabilities that let it handle small objects such as IV connectors. In terms of the task categories in Table 1, TRINA 1.0 was able to perform telemedicine and unskilled physical tasks. When completed, TRINA 2.0 should also be able to perform cleaning tasks, diagnostics, and non-invasive interventions.

Direct teleoperation vs. supervisory control

One of the unique aspects of tele-nursing is that it asks non-technology experts to operate extremely complex robots in stressful, time-sensitive situations for extended periods of time. Hence, the convenience, understandability, and ergonomics of the user interface are a primary concern. Interestingly, there are two philosophically opposed roads toward usability, which the telerobotics literature frames as the debate between direct teleoperation and supervisory control.

In the direct teleoperation paradigm, the goal is to provide the operator with an experience that is immersive, transparent, and naturalistic. In other words, the operator “feels” like the robot is part of their body. Our first implementations of TRINA experimented with this approach, but we found that controlling a robot can be a foreign experience. The input/output mapping between the human body and the robot’s body will never be exactly one-to-one, and operators without training may be unfamiliar with the robot’s capabilities. In particular, precise control of gripper orientation is challenging, and reflexes are sluggish due to sensorimotor control delays, so grasping objects can feel a bit like a carnival claw game. In our tests, a trained operator using the robot was 50 to 150 times slower than a human nurse wearing PPE at performing routine manipulation tasks. Matching human performance is a high bar to clear because people have mastered manipulation with their own bodies!

By contrast, the supervisory control paradigm puts the operator in the role of a supervisor over a semi-autonomous robot. The operator issues commands, monitors progress, and occasionally intervenes. For example, the nurse could use a “point and click” interface to send the robot to a target, grab items, and use them. So even if the robot is slow, the operator devotes only a fraction of their attention to it and is free most of the time to perform other tasks (including controlling multiple robots at once).
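As a rough illustration of why supervisory control frees the operator’s attention, the sketch below models a nurse issuing high-level commands that a robot executes on its own, with the operator called in only when execution fails. It also computes the “fan-out” estimate used in the human-robot interaction literature (Olsen and Goodrich): if a robot can be neglected for NT seconds after each IT seconds of operator interaction, one operator can supervise roughly (NT + IT) / IT robots. The `Command` fields, the action names, and the `execute`/`intervene` callbacks are hypothetical placeholders, not TRINA’s interface.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Command:
    """A high-level command issued by the nurse, e.g. from a point-and-click UI."""
    action: str  # hypothetical action names such as "navigate" or "grasp"
    target: str


@dataclass
class SupervisionLog:
    issued: int = 0
    interventions: int = 0


def supervise(commands: list[Command],
              execute: Callable[[Command], bool],
              intervene: Callable[[Command], None],
              log: SupervisionLog) -> None:
    """Issue each command, let the robot execute it autonomously, and
    involve the operator only when execution reports failure."""
    for cmd in commands:
        log.issued += 1
        if not execute(cmd):  # autonomous execution; True means success
            log.interventions += 1
            intervene(cmd)    # operator steps in only on failure


def fan_out(neglect_time_s: float, interaction_time_s: float) -> float:
    """Fan-out estimate (NT + IT) / IT: roughly how many robots one operator
    can keep busy if each needs IT seconds of attention per NT seconds alone."""
    if interaction_time_s <= 0:
        raise ValueError("interaction time must be positive")
    return (neglect_time_s + interaction_time_s) / interaction_time_s
```

For example, a robot that runs unattended for 50 seconds after every 10 seconds of operator input gives a fan-out of 6, whereas direct teleoperation, where the operator is engaged continuously and neglect time is near zero, gives a fan-out near 1.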

We are also investigating operator assistance approaches that augment direct control with a bit of autonomy, reminiscent of advanced vehicle safety features—e.g., backup cams, collision warnings, and collision avoidance—as well as manipulation assistance like “grasp autocompletion.” We hypothesize that a small amount of assistance, appropriately tailored to the task context, can make tele-manipulation both casual and natural.
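Here is a minimal sketch of two such assists applied to an operator’s commanded gripper velocity, assuming a 3-D Cartesian velocity interface. The first scales the command down near obstacles, in the spirit of a collision-avoidance feature. The second illustrates grasp autocompletion using linear blending, a common shared-control technique that mixes the operator’s command with an autonomous command pulling toward a predicted grasp pose. The thresholds, the gain, and the assumption that a grasp goal has already been predicted are illustrative choices, not the method used on TRINA.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]  # Cartesian velocity or position (x, y, z)


def scale_for_obstacle(v: Vec, obstacle_dist_m: float,
                       stop_dist_m: float = 0.05, slow_dist_m: float = 0.30) -> Vec:
    """Collision-avoidance assist: pass the command through when far from
    obstacles, ramp it down linearly inside slow_dist_m, stop at stop_dist_m.
    Simplification: scales the whole command, even motion away from the obstacle."""
    if obstacle_dist_m <= stop_dist_m:
        k = 0.0
    elif obstacle_dist_m >= slow_dist_m:
        k = 1.0
    else:
        k = (obstacle_dist_m - stop_dist_m) / (slow_dist_m - stop_dist_m)
    return (v[0] * k, v[1] * k, v[2] * k)


def blend_toward_grasp(v_operator: Vec, gripper_pos: Vec, grasp_goal: Vec,
                       gain: float = 1.0, assist_radius_m: float = 0.15) -> Vec:
    """Grasp-autocompletion-style assist by linear blending:
    u = (1 - a) * u_operator + a * u_auto, where the weight a rises from 0
    (outside assist_radius_m) to 1 (at the predicted grasp goal)."""
    d = math.dist(gripper_pos, grasp_goal)
    a = max(0.0, 1.0 - d / assist_radius_m)
    # Autonomous command: proportional pull toward the predicted grasp goal.
    u_auto = [gain * (g - p) for g, p in zip(grasp_goal, gripper_pos)]
    u = [(1.0 - a) * uo + a * ua for uo, ua in zip(v_operator, u_auto)]
    return (u[0], u[1], u[2])
```

Because the blending weight is zero outside `assist_radius_m`, the operator keeps full control until the gripper is close to a plausible grasp, so the assistance stays small and tied to the task context.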

Designing PPE for robots

We learned early in TRINA’s development how important it is to listen to end users. We were surprised that one of the most common requests from nurses was for the robot to simply turn off equipment alarms! Apparently, false alarms are very common and frustrating on a normal day, but utterly infuriating when you have to don and doff PPE just to press a single button. Adding simple, inexpensive arms to existing telepresence robots could accomplish this task: the nurse would visually inspect ventilator connections and medication IV lines through the robot and, if the alarm proved false, have the robot press the button. That capability alone would be a very useful step toward clinical adoption.

Another serious issue is that robots should be disinfected between patients and sanitized between shifts, but the electronics, joint seals, fan vents, and wheels used in most robots make thorough cleaning difficult. An alternative is disposable robot PPE. We designed PPE that is compatible with a robot’s sensors and joints, allows sufficient heat dissipation, and can be safely donned and doffed. This took an iterative design and fitting process reminiscent of textile design. Moreover, doffing without contamination is an excellent candidate for automation, since robots can perform doffing procedures more consistently than humans.

A powerful new tool in the fight against pandemics

The COVID-19 pandemic has ushered in renewed interest in telerobotic systems that could allow clinicians to perform care delivery tasks at a distance. Beyond keeping healthcare workers safe and reducing use of PPE, tele-nursing robots also have applications in treating immunocompromised patients, improving access to healthcare in rural areas, and helping seniors age in place. Immunocompromised patients could be given a dedicated robot in a quarantine room that performs all physical interaction, never interacts with the outside world, and never gets tired.

Rural areas are also facing shortages of local clinicians, particularly those with specialized expertise. With tele-nursing robots stationed at rural hospitals, knowledgeable remote clinicians could be called on demand when a patient requires specialized procedures beyond the expertise of local providers. Tele-nursing robots could also help seniors age in place, with nurses or family members logging in to perform care tasks as needed. This would be particularly useful in remote areas with poor access to emergency and urgent care.

Although the remaining challenges of usability, robustness, clinical integration, and scaling up will take a concerted effort of scientists, engineers, and medical professionals to address, the rewards are enormous: Once tele-nursing becomes commonplace, the world will have a powerful new tool for keeping healthcare workers safe in the fight against pandemics.

Kris Hauser is an associate professor in the Department of Computer Science and the Department of Electrical and Computer Engineering at the University of Illinois at Urbana-Champaign. His research interests include robot motion planning and control, semiautonomous robots, and integrating perception and planning, as well as applications to intelligent vehicles, robotic manipulation, robot-assisted medicine, and legged locomotion.

Ryan J. Shaw is an associate professor at the Duke University Schools of Nursing and Medicine. He is the director of the Health Innovation Lab and faculty lead of the Duke Mobile App Gateway. He is a digital health scientist who engineers new models of care delivery based on the emerging digital health infrastructure of health systems and society.