Video Friday is your weekly selection of awesome robotics videos, collected by your soft-bodied Automaton bloggers. We’ll also be posting a weekly calendar of upcoming robotics events for the next two months; here’s what we have so far (send us your events!):

RO-MAN 2016 – August 26-31, 2016 – New York, N.Y., USA

ECAI 2016 – August 29-September 2, 2016 – The Hague, Netherlands

NASA SRRC Level 2 – September 2-5, 2016 – Worcester, Mass., USA

ISyCoR 2016 – September 7-9, 2016 – Ostrava, Czech Republic

Let us know if you have suggestions for next week, and enjoy today’s videos.

Francesco Corucci is a researcher at The BioRobotics Institute of Scuola Superiore Sant'Anna (Pisa, Italy) under the supervision of Prof. Cecilia Laschi. He is currently a visiting researcher in the Morphology, Evolution & Cognition Lab (Vermont Complex Systems Center, University of Vermont, USA) under the supervision of Prof. Josh Bongard. He wrote in to share some of his latest publications and videos, and it’s fascinating. I’m just going to let Francesco explain it all:

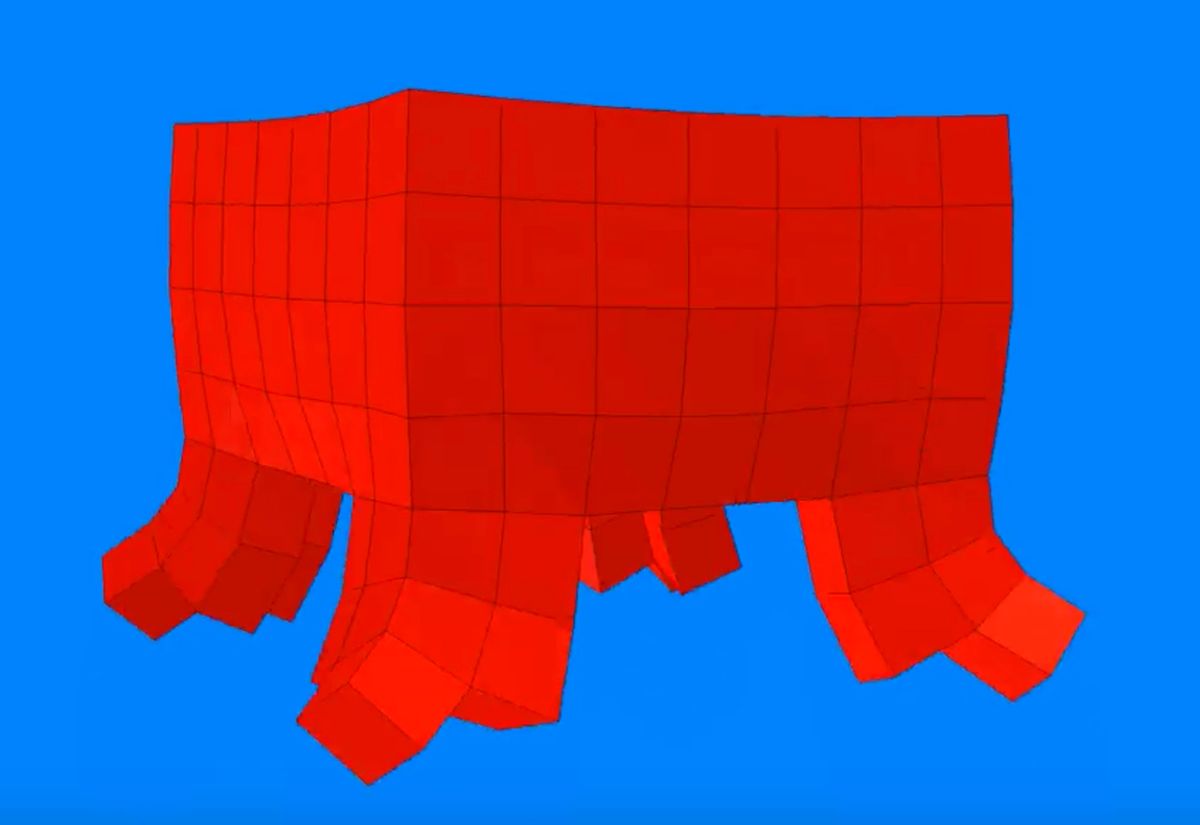

We’re applying algorithms inspired by natural evolution to automatically “breed” soft robots for specific tasks and environments. This process, performed within a physically realistic virtual environment, usually requires several days (more often, weeks) of computation, even on a big supercomputer. At the moment, we are using these tools to study general properties of soft-bodied creatures in simulation, trying to provide new insights into fields such as (soft) robotics, artificial intelligence, and, when possible, biology.

The first project concerns the evolution of growing soft robots, i.e., robots that can adapt during their lifetime based on a developmental program. In particular, we are studying soft robots that adapt their bodies in response to environmental stimuli (morphological plasticity, or environment-mediated growth).

A first result that we achieved with this setup, which once again highlights the importance of the body and its material properties in the evolution of adaptive and intelligent behavior, relates to the concept of morphological computation, i.e., the possibility of outsourcing part of the computation needed to solve a task to the physical body of a robot. We showed that the material properties of a robot can either prevent or facilitate the spontaneous emergence of morphological computation (which is associated with robust, adaptive, and, ultimately, intelligent behavior). If evolution is provided with suitable materials with which to devise the robot’s morphology, morphological computation will emerge, characterized by effective and natural-looking behaviors that require very little active control. If not, evolution will have a harder time devising effective robot bodies and brains, and will automatically try to “complexify” the control in order to maximize performance.

At the same time, our results suggest that automated optimization tools such as evolutionary algorithms should be used to design soft robots, whose effective behavior usually arises from a complex interplay of different factors that can easily become intractable for a human designer.
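For readers who haven’t seen evolutionary design before, the basic loop (generate candidate robots, score them in simulation, keep the best, mutate them, repeat) fits in a few lines. This is a minimal sketch under loud assumptions, not the lab’s actual code: the genome is a hypothetical list of per-voxel stiffness values, and `toy_fitness` is a made-up stand-in for the days-long physics simulation that simply rewards an arbitrary target stiffness pattern.

```python
import random

GENOME_LENGTH = 8    # hypothetical: one stiffness value in [0, 1] per voxel
POP_SIZE = 20
GENERATIONS = 50
MUTATION_STD = 0.1


def toy_fitness(genome):
    """Hypothetical stand-in for a physics simulation.

    Higher is better; rewards genomes close to an arbitrary
    alternating soft/stiff target pattern.
    """
    target = [0.2, 0.8] * (GENOME_LENGTH // 2)
    return -sum((g - t) ** 2 for g, t in zip(genome, target))


def mutate(genome, rng):
    # Gaussian perturbation of every gene, clipped to the valid range [0, 1]
    return [min(1.0, max(0.0, g + rng.gauss(0.0, MUTATION_STD))) for g in genome]


def evolve(seed=0):
    rng = random.Random(seed)
    # Random initial population of candidate "robots"
    population = [[rng.random() for _ in range(GENOME_LENGTH)] for _ in range(POP_SIZE)]
    for _ in range(GENERATIONS):
        # Each parent produces one mutated offspring
        offspring = [mutate(p, rng) for p in population]
        # (mu + lambda) selection: the best POP_SIZE of parents and offspring survive
        population = sorted(population + offspring, key=toy_fitness, reverse=True)[:POP_SIZE]
    return population[0]


best = evolve()
print("best fitness:", round(toy_fitness(best), 3))
```

Because the best parents always survive into the next generation, the best fitness can never get worse from one generation to the next. Plugging in a real soft-body simulator would only change `toy_fitness`, which is also where nearly all of the computation time would go.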

The second project concerns the evolution of soft swimming robots. The goal of this project is to study, from an evolutionary perspective, the potential benefits of being soft or stiff in different environments. We are also interested in studying how environmental transitions during evolution affect morphology, with particular focus on water-to-land and land-to-water transitions. The video summarizes very preliminary results in this direction.

Thanks Francesco!

The Institute for Robotics and Intelligent Machines (IRIM) at Georgia Tech has launched a new series of robotics research videos on YouTube. Donated by Dr. Clinton W. Kelly III, a member of the College of Computing’s advisory board and a longtime benefactor of Georgia Tech, the diverse collection of videos covers an extended period of time and offers a behind-the-scenes look at different aspects of the earlier days of unmanned vehicle and other robotics research conducted across multiple institutions, companies, and funding agencies.

The first video in the series features the Leg Lab, established at CMU by Marc Raibert and later moved to MIT. The Leg Lab developed robots that ran and maneuvered like animals and formed the basis for the company Boston Dynamics, which was founded by Raibert in 1992. The video shows some of the early examples of legged robots, from monopods to quadrupeds.

[ Georgia Tech ]

Here’s a fantastic idea for a DIY autonomous driving competition:

Formula Pi is an exciting new race series and club designed to get people started with self-driving robotics. The aim is to give people with little hardware or software experience a platform to get started and learn how autonomous vehicles work. We provide the hardware and basic software to join the race series. Competitors can modify the software however they like, and come race day, a prepared SD card with your software on it will be placed into our club robots and the race will begin! You don’t need to be in the UK; you can enter the series from anywhere in the world. Just join and send code to compete!

The Kickstarter is already way past its goal, but if you’re willing to provide your own Raspberry Pi hardware, you can still get the chassis and motors and enter the competition.

[ Kickstarter ] via [ DIY Drones ]

Starship is using robots to deliver FREE GROCERIES to Germans in Palo Alto:

Sort of like the aerial variety of delivery drone, it looks like Starship’s robots are designed to be mostly autonomous, handing things off to a human teleoperator when situations get tricky or confusing.

[ Starship ]

This is a robot arm that you can preorder on Kickstarter for $50:

The kit includes all 3D printed components, an articulated gripper, four microservos with fasteners, one mini breadboard, an Arduino Uno, and a 6V power supply. If you already have the Arduino, the cost drops to $42.

[ Kickstarter ] via [ Slant Robotics ]

Thanks Gabe!

The Flying Ring is a new flying vehicle being developed at the Institute for Dynamic Systems and Control, ETH Zurich:

Aaand I’m dizzy now.

[ ETH Zurich ]

Thanks Markus!

“The MDARS, the Mobile Detection Assessment Response System, are security patrol vehicles,” said Joshua Kordani, Land Sea Air Autonomy software engineer [at the Combined Joint Task Force-Horn of Africa (CJTF-HOA), a command led by the U.S. military and international partners in East Africa to counter violent extremist organizations]. “They provide the operator with a mobile platform they can use to assess any sort of condition remotely around the base.” Resembling a high-tech golf cart, the MDARS is an automated vehicle whose primary duty is to travel around the perimeter of the airfield to detect and deter any possible threats, all of its own accord.

While working at the airfield, the robot has encountered a number of intruders, including humans, camels, donkeys, hyenas, antelopes, goats, and other indigenous creatures, all of which MDARS has been able to ward off from the facility.

Gotta watch out for those tactical assault antelopes.

Baxter, bringing you haptic fistbumps in VR:

[ Queen's University Belfast ] via [ Digital Trends ]

What can Tiago do for you? Find out with five demos in under 2 minutes:

[ PAL Robotics ]

If I had a Crabster, I would ride it to the grocery store.

And it would live in my bathtub.

[ KIOST ]

In this video, we applied our distributed Iterative Learning Control (ILC) approach to a team of four quadrotors, which learn to synchronize their motion. The goal of our work is to enable a team of robots to learn how to accurately track a desired trajectory while holding a given formation. We solve this problem in a distributed manner: each vehicle only has access to information from its neighbors, and no central control unit is necessary. The desired trajectory is available to only one vehicle. In our ILC approach, the same task is repeated several times; each vehicle learns from its own and its neighbors’ previous task repetitions and adapts its feedforward input to improve performance.
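The central idea, with each vehicle correcting its feedforward input between repetitions using only its own and its neighbors’ data while only one vehicle sees the reference, can be illustrated with a toy model. The sketch below is a generic consensus-style ILC update, not the algorithm from the paper: the chain topology, the static per-vehicle plant gains (standing in for real quadrotor dynamics), the learning gain `GAMMA`, and the helper names are all hypothetical choices made so the example converges.

```python
import math

# Hypothetical setup: four vehicles connected in a chain 0-1-2-3.
NEIGHBORS = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
LEADER = 0                        # only this vehicle sees the desired trajectory
GAINS = [0.8, 1.0, 1.2, 0.9]      # assumed plant gains, unknown to the learners
T = 50                            # samples per task repetition (trial)
GAMMA = 0.4                       # assumed learning gain, small enough to converge here

y_ref = [math.sin(2 * math.pi * k / T) for k in range(T)]


def run_trial(u):
    """Toy static plant: each vehicle's output equals its gain times its input."""
    return [[GAINS[i] * u[i][k] for k in range(T)] for i in range(len(u))]


def local_error(i, y):
    """Error built only from vehicle i's own output and its neighbors' outputs."""
    e = [sum(y[j][k] - y[i][k] for j in NEIGHBORS[i]) for k in range(T)]
    if i == LEADER:
        # Only the leader compares itself against the desired trajectory
        e = [e[k] + (y_ref[k] - y[i][k]) for k in range(T)]
    return e


def learn(trials=200):
    u = [[0.0] * T for _ in NEIGHBORS]  # feedforward input per vehicle, initially zero
    for _ in range(trials):
        y = run_trial(u)
        # All vehicles compute errors from the same repetition, then update together
        errors = [local_error(i, y) for i in range(len(u))]
        u = [[u[i][k] + GAMMA * errors[i][k] for k in range(T)] for i in range(len(u))]
    return run_trial(u)


y_final = learn()
worst = max(abs(y_final[i][k] - y_ref[k]) for i in range(4) for k in range(T))
print(f"worst tracking error after learning: {worst:.2e}")
```

Followers never read `y_ref`; the reference reaches them only through their neighbors’ outputs. That is also why the learning gain must stay small: if `GAMMA` is too large, the trial-to-trial correction overshoots and the errors grow instead of shrinking.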

[ Paper ] via [ Dynamic Systems Lab ]

Moscow had a Mini Maker Faire, and PLEN was there:

[ PLEN ]

Just in case you didn’t already know:

Husky Unmanned Ground Vehicle (UGV) is a mobile robotic platform built for rough, all-terrain environments. Unlike other robotic platforms, Husky is durable and can easily incorporate a variety of payloads including sensors, manipulators and additional customization.

[ Clearpath ]

This counts as a robot until they stick people into it:

[ Volocopter ]

The narration on this will put you to sleep, but it’s always fun to see high speed picking and placing robots doing their thing:

[ Fanuc ]

[Evive] is an open-source embedded platform that helps makers build for a wide range of applications. It can be used by beginners developing their making skills in robotics, embedded systems, and so on; by hobbyists and students to build projects and experimental setups with ease; and by advanced users such as researchers, professionals, and educators to analyze and debug their projects. Made with the vision of transforming the very basics of making, it helps makers learn better, build more easily, and debug smarter, enabling them to innovate. evive is available on Indiegogo.

[ Indiegogo ]

Thanks Dhrupal!

We’re still a wee bit skeptical about this whole social companion robots thing, but if nothing else, Buddy will be coming with a simulator and SDK (and so will Jibo). Here’s a look at how it’ll work:

I spoke at a TTI Vanguard conference a few years ago, which means that their talks are certainly worth listening to. Here are a couple:

Rodolphe Gelin, Chief Scientific Officer, SoftBank Robotics: “Emotion for Better Robot Interactions”

In 2007, SoftBank Robotics commercialized Nao, the first in a series of companion robots designed to improve human quality of life. More than 9,000 units of this 58-cm-tall robot have been sold. In 2014, the company presented Pepper, a 1.2-m service robot that offers intuitive human–robot interaction. To be accepted at first sight, especially by non-technophile users, a robot should have a humanoid appearance and be capable of natural interaction, preferably through speech. But beyond that, non-verbal communication, which carries emotion, turns out to be at least as important. The robot’s body language adds real value, and its understanding of gestures and other non-verbal signals from humans can dramatically improve its efficiency.

Alex Kendall, Department of Engineering, University of Cambridge: “A Deep Learning Framework For Visual Scene Understanding”

We can now teach machines to recognize objects. However, in order to teach a machine to “see,” we need to understand geometry as well as semantics. Given an image of a road scene, for example, an autonomous vehicle needs to determine where it is, what’s around it, and what’s going to happen next. This requires not only object recognition, but also depth, motion, and spatial perception, as well as instance-level identification. A deep learning architecture can achieve all these tasks at once, even when given a single monocular input image. Surprisingly, jointly learning these different tasks results in superior performance, because explicitly supervising more information about the scene causes the deep network to uncover a better representation. This method outperforms other approaches on a number of benchmark datasets, such as SUN RGB-D for indoor scene understanding and Cityscapes for road scene understanding. Besides cars, potential applications include factory robotics and systems to help the blind.

[ TTI Vanguard ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.

Erico Guizzo is the director of digital innovation at IEEE Spectrum, and cofounder of the IEEE Robots Guide, an award-winning interactive site about robotics. He oversees the operation, integration, and new feature development for all digital properties and platforms, including the Spectrum website, newsletters, CMS, editorial workflow systems, and analytics and AI tools. An IEEE Member, he is an electrical engineer by training and has a master’s degree in science writing from MIT.