You know what’s really tedious and boring? Teaching robots to grasp a whole bunch of different kinds of objects. This is why roboticists have started to turn to AI strategies like self-supervised learning instead, where you let a robot gradually figure out on its own how to grasp things by trying slightly different techniques over and over again. Even with a big pile o’ robots, this takes a long time (thousands of robot-hours, at least), and while you can end up with a very nice generalized grasping framework at the end of it, that framework doesn’t have a very good idea of what a good grasp is.

The problem here is that most of the time, these techniques measure grasps in a binary-type fashion using very basic sensors: Was the object picked up and not dropped? If so, the grasp is declared a success. Real-world grasping doesn’t work exactly like that, as most humans can attest to: Just because it’s possible to pick something up and not drop it does not necessarily mean that the way you’re picking it up is the best way, or even a particularly good way. And unstable, barely functional grasps mean that dropping the object is significantly more likely, especially if anything unforeseen happens, a frustratingly common occurrence outside of robotics laboratories.

With this in mind, researchers from Carnegie Mellon University and Google decided to combine game theory and deep learning to make grasping better. Their idea was to introduce an adversary as part of the learning process—an “evil robot” that does its best to make otherwise acceptable grasps fail.

The concept of adversarial grasping is simple: It’s all about trying to grasp something while something else (the adversary, in research-speak) is making it difficult to do so.

The researchers—Lerrel Pinto, James Davidson, and Abhinav Gupta, who presented their work at the IEEE International Conference on Robotics and Automation (ICRA) last week—formulated their adversarial approach as a two-player zero-sum repeated game (a popular technique from game theory). In their model, each player is a convolutional neural network, one trying to succeed at grasping and the other trying to disrupt the first.

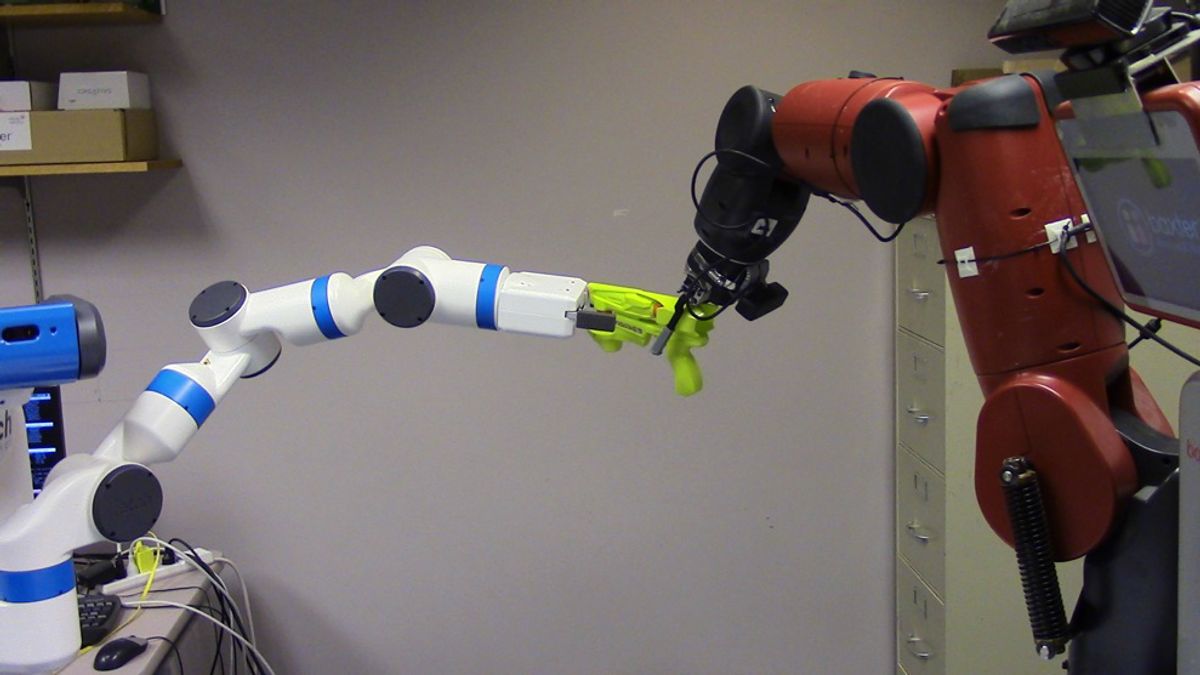

Things like gravity, inertia, and friction (or lack of friction) are basic adversaries that a grasping robot has to deal with all the time, but that robot can make grasping more difficult for itself by shaking the object as it picks it up. This is one of the nice things about robots: You can program them with adversarial alter egos such that they’re able to interfere with themselves, whether it’s causing one arm to shake, or using a second arm to more directly disturb the first by attempting to snatch an object away.

If the adversary is successful, it means that the grip wasn’t a good one, and the grasping program learns from that failure. At the same time, the adversary program learns from its success, and you end up with a sort of escalating arms race, with both the grasping program and the adversary program getting better and better at their jobs. And that’s why this research is promising for real-world applications. For robots to be useful, they’ll need to operate in environments where they’re being challenged all the time.
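
To make that arms race concrete, here is a toy sketch in Python. It is not the researchers' method: their players are convolutional neural networks trained on real robots, while this sketch swaps in two simple epsilon-greedy value learners. The grasp names, disturbance names, hold probabilities, and the `train` function are all invented for illustration. The one piece taken from the description above is the zero-sum setup, where whatever the grasper gains the adversary loses.

```python
import random


def train(rounds=5000, epsilon=0.1, lr=0.05, seed=0):
    """Toy zero-sum game between a grasper and an adversary (illustrative only)."""
    rng = random.Random(seed)

    # Hypothetical chance that each grasp survives each disturbance.
    # These numbers are assumptions, not measurements from the paper.
    hold_prob = {
        "pinch_edge": {"shake": 0.2, "snatch": 0.3},
        "wrap_center": {"shake": 0.9, "snatch": 0.8},
    }
    grasps = list(hold_prob)
    disturbances = ["shake", "snatch"]

    # Each player keeps a running estimate of how well each action pays off.
    grasp_value = {g: 0.0 for g in grasps}
    adversary_value = {d: 0.0 for d in disturbances}

    def pick(values):
        # Epsilon-greedy: mostly exploit the best-looking action, sometimes explore.
        if rng.random() < epsilon:
            return rng.choice(list(values))
        return max(values, key=values.get)

    for _ in range(rounds):
        grasp = pick(grasp_value)
        disturbance = pick(adversary_value)
        held = rng.random() < hold_prob[grasp][disturbance]

        # Zero-sum: the adversary's reward is the negative of the grasper's.
        grasp_reward = 1.0 if held else -1.0
        adversary_reward = -grasp_reward

        # Move each estimate a small step toward the observed reward.
        grasp_value[grasp] += lr * (grasp_reward - grasp_value[grasp])
        adversary_value[disturbance] += lr * (
            adversary_reward - adversary_value[disturbance]
        )

    return grasp_value, adversary_value


if __name__ == "__main__":
    grasp_value, adversary_value = train()
    print("grasper estimates:", grasp_value)
    print("adversary estimates:", adversary_value)
```

In this toy setup the grasper ends up preferring the more stable grasp, because the unstable one keeps getting punished by the adversary. A real system would replace the lookup table with physical trials and the value tables with learned networks.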

The researchers demonstrated that their adversarial strategy can accelerate the training process and lead to a more robust system than an approach that doesn’t rely on an adversary. They also showed that it works much better than simply trying lots of additional grasps without an adversary:

> After 3 iterations of training with shaking adversary, our grasp rate improves from 43 percent to 58 percent. Note that our baseline network that does not perform adversarial training has a grasp rate of only 47 percent. This clearly indicates that having extra supervision from adversarial agent is significantly more useful than just collecting more grasping data. What is interesting is the fact that 6K adversary examples lead to 52 percent grasp rate (iteration 1) whereas 16K extra grasp examples only have 47 percent grasp rate. This clearly shows that in case of multiple robots, training via adversarial setting is a more beneficial strategy.
>
> The [overall] result is a significant improvement over baseline in grasping of novel objects: an increase in overall grasp success rate to 82 percent (compared to 68 percent if no adversarial training is used). Even more dramatically, if we handicapped the grasping by reducing maximum force and contact friction, the method achieved 65 percent success rate (as compared to 47 percent if no adversarial training was used).
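
For a sense of scale, the quoted success rates can be turned into gains with a few lines of Python. The four percentages come straight from the quote; the relative-improvement figures are just arithmetic on them and do not appear in the paper excerpt, and the `improvement` helper is a made-up name.

```python
# (baseline %, adversarial %) success rates, as quoted from the paper.
results = {
    "novel objects": (68, 82),
    "reduced force and friction": (47, 65),
}


def improvement(baseline, adversarial):
    """Return the gain in percentage points and as a percent of the baseline."""
    points = adversarial - baseline
    relative = 100 * points / baseline
    return points, relative


for name, (baseline, adversarial) in results.items():
    points, relative = improvement(baseline, adversarial)
    print(f"{name}: +{points} points ({relative:.1f}% relative)")

# novel objects: +14 points (20.6% relative)
# reduced force and friction: +18 points (38.3% relative)
```

The handicapped case gains more on both measures, which fits the quote's "even more dramatically."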

Part of the trick here is making the adversary useful by choosing a behavior that will be challenging (but not impossible) for the grasping robot. You can do this by watching how an undisturbed grasping robot tends to fail, and then programming the adversary to target that failure mode. Shaking and snatching tend to be effective at messing with grasps that can hold objects but aren’t stable, so robots that learn how to defeat these become much better at grasping in general. Depending on the kinds of things that you want to grasp, and the situations that you want to grasp them in, you could imagine other kinds of adversaries being effective teachers as well.

“Supervision via Competition: Robot Adversaries for Learning Tasks,” by Lerrel Pinto, James Davidson, and Abhinav Gupta from Carnegie Mellon University and Google Research, was presented at ICRA 2017 in Singapore.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.