For the most part, robots are a mystery to end users. And that’s part of the point: Robots are autonomous, so they’re supposed to do their own thing (presumably the thing that you want them to do) and not bother you about it. But as humans start to work more closely with robots, in collaborative tasks or social or assistive contexts, it’s going to be hard for us to trust them if their autonomy is such that we find it difficult to understand what they’re doing.

In a paper published in Science Robotics, researchers from UCLA describe a robotic system that can generate different kinds of real-time, human-readable explanations about its actions. They then tested which of the explanations were the most effective at improving a human’s trust in the system. Does this mean we can totally understand and trust robots now? Not yet, but it’s a start.

This work was funded by DARPA’s Explainable AI (XAI) program, which aims for AI systems that can “understand the context and environment in which they operate, and over time build underlying explanatory models that allow them to characterize real world phenomena.” According to DARPA, “explainable AI—especially explainable machine learning—will be essential if [humans] are to understand, appropriately trust, and effectively manage an emerging generation of artificially intelligent machine partners.”

There are a few different issues that XAI has to tackle. One of those is the inherent opaqueness of machine learning models, where you throw a big pile of training data at some kind of network, which then does what you want it to do most of the time but also sometimes fails in weird ways that are very difficult to understand or predict. A second issue is figuring out how AI systems (and the robots that they inhabit) can effectively communicate what they’re doing to humans, via what DARPA refers to as an explanation interface. This is what UCLA has been working on.

The present project aims to disentangle explainability from task performance, measuring each separately to gauge the advantages and limitations of two major families of representations—symbolic representations and data-driven representations—in both task performance and fostering human trust. The goals are to explore (i) what constitutes a good performer for a complex robot manipulation task? (ii) How can we construct an effective explainer to explain robot behavior and foster human trust?

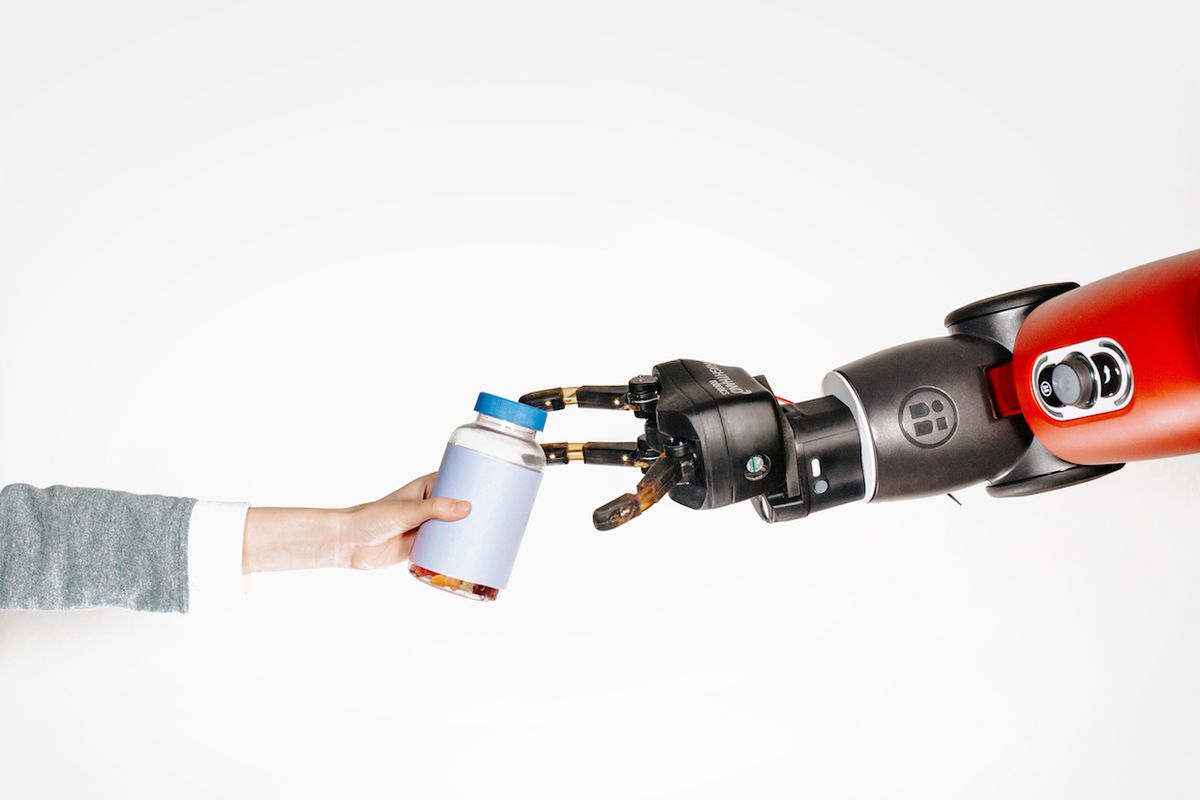

UCLA’s Baxter robot learned how to open a safety-cap medication bottle (tricky for robots and humans alike) by learning a manipulation model from haptic demonstrations provided by humans opening medication bottles while wearing a sensorized glove. This was combined with a symbolic action planner to allow the robot to adjust its actions to adapt to bottles with different kinds of caps, and it does a good job without the inherent mystery of a neural network.

Intuitively, such an integration of the symbolic planner and haptic model enables the robot to ask itself: “On the basis of the human demonstration, the poses and forces I perceive right now, and the action sequence I have executed thus far, which action has the highest likelihood of opening the bottle?”
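To make that question a little more concrete, here is a minimal Python sketch of one way two such models could be combined. It is an illustration only, not the researchers’ implementation: the function names, the action labels, and the numbers are all hypothetical. It also assumes that each model can produce a score per candidate action, and that multiplying the scores and renormalizing is a reasonable way to merge them.

```python
from typing import Dict


def combine_action_scores(
    symbolic_prior: Dict[str, float],
    haptic_likelihood: Dict[str, float],
) -> Dict[str, float]:
    """Merge two per-action score tables into one normalized distribution.

    Assumption (not from the paper): the two sources are combined by a
    simple product followed by renormalization.
    """
    # Only consider actions that both models have an opinion about.
    actions = symbolic_prior.keys() & haptic_likelihood.keys()
    joint = {a: symbolic_prior[a] * haptic_likelihood[a] for a in actions}
    total = sum(joint.values())
    if total == 0:
        raise ValueError("no action is supported by both models")
    return {a: score / total for a, score in joint.items()}


def choose_action(posterior: Dict[str, float]) -> str:
    """Pick the action with the highest combined score."""
    return max(posterior, key=posterior.get)


# Hypothetical scores: the planner favors "push" given the actions so far,
# while the haptic model leans toward "twist" given current poses and forces.
symbolic_prior = {"grasp": 0.1, "push": 0.6, "twist": 0.3}
haptic_likelihood = {"grasp": 0.2, "push": 0.3, "twist": 0.5}

posterior = combine_action_scores(symbolic_prior, haptic_likelihood)
print(choose_action(posterior))  # prints "push"
```

In this toy case the planner’s preference for "push" outweighs the haptic model’s preference for "twist," so "push" wins. Keeping the two score tables separate until the final step is what would let a system like this show a human both the action-level and the sequence-level view of its decision.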

Both the haptic model and the symbolic planner can be leveraged to provide human-compatible explanations of what the robot is doing. The haptic model can visually explain an individual action that the robot is taking, while the symbolic planner can show a sequence of actions that are (ideally) leading towards a goal. What’s key here is that these explanations are coming from the planning system itself, rather than something that’s been added later to try and translate between a planner and a human.

To figure out whether these explanations made a difference in the level of a human’s trust or confidence or belief that the robot would be successful at its task, the researchers conducted a psychological study with 150 participants. While watching a video of the robot opening a medicine bottle, groups of participants were shown the haptic planner, the symbolic planner, or both planners at the same time, while two other groups were either shown no explanation at all, or a human-generated one-sentence summary of what the robot did. Survey results showed that the highest trust rating came from the group that had access to both the symbolic and haptic explanations, although the symbolic explanation was more impactful.

In general, humans appear to need real-time, symbolic explanations of the robot’s internal decisions for performed action sequences to establish trust in machines performing multistep complex tasks… Information at the haptic level may be excessively tedious and may not yield a sense of rational agency that allows the robot to gain human trust. To establish human trust in machines and enable humans to predict robot behaviors, it appears that an effective explanation should provide a symbolic interpretation and maintain a tight temporal coupling between the explanation and the robot’s immediate behavior.

This paper focuses on a very specific interpretation of the word “explain.” The robot is able to explain what it’s doing (i.e. the steps that it’s taking) in a way that is easy for humans to interpret, and it’s effective in doing so. However, it’s really just explaining the “what” rather than the “why,” because at least in this case, the “why” (as far as the robot knows) is really just “because a human did it this way” due to the way the robot learned to do the task.

While the “what” explanations did foster more trust in humans in this study, long term, XAI will need to include “why” as well, and the example of the robot unscrewing a medicine bottle illustrates a situation in which it would be useful.

You can see that there are several repetitive steps in this successful bottle opening, and as an observer, I have no way of knowing if the robot is repeating an action because the first action failed, or if that was just part of its plan. Maybe opening the bottle really just takes one single grasp-push-twist sequence, but the robot’s gripper slipped the first time.

Personally, when I think of a robot explaining what it’s doing, this is what I’m thinking of. Knowing what a robot was “thinking,” or at least the reasoning behind its actions or non-actions, would significantly increase my comfort with and confidence around robotic systems, because they wouldn’t seem so… Dumb? For example, is that robot just sitting there and not doing anything because it’s broken, or because it’s doing some really complicated motion planning? Is my Roomba wandering around randomly because it’s lost, or is it wandering around pseudorandomly because that’s the most efficient way to clean? Does that medicine bottle need to be twisted again because a gripper slipped the first time, or because it takes two twists to open?

Even if the robot makes a decision that I would disagree with, this level of “why” explanation or “because” explanation means that I can have confidence that the robot isn’t dumb or broken, but is either doing what it was programmed to do, or dealing with some situation that it wasn’t prepared for. In either case, I feel like my trust in it would significantly improve, because I know it’s doing what it’s supposed to be doing and/or the best it can, rather than just having some kind of internal blue screen of death experience or something like that. And if it is dead inside, well, I’d want to know that, too.

Longer-term, the UCLA researchers are working on the “why” as well, but it’s going to take a major shift in the robotics community for even the “what” to become a priority. The fundamental problem is that right now, roboticists in general are relentlessly focused on optimization for performance—who cares what’s going on inside your black box system as long as it can successfully grasp random objects 99.9 percent of the time?

But people should care, says lead author of the UCLA paper Mark Edmonds. “I think that explanation should be considered along with performance,” he says. “Even if you have better performance, if you’re not able to provide an explanation, is that actually better?” He added: “The purpose of XAI in general is not to encourage people to stop going down that performance-driven path, but to instead take a step back, and ask, ‘What is this system really learning, and how can we get it to tell us?’ ”

It’s a little scary, I think, to have systems (and in some cases safety critical systems) that work just because they work—because they were fed a ton of training data and consequently seem to do what they’re supposed to do to the extent that you’re able to test them. But you only ever have the vaguest of ideas why these systems are working, and as robots and AI become a more prominent part of our society, explainability will be a critical factor in allowing us to comfortably trust them.

“A Tale of Two Explanations: Enhancing Human Trust by Explaining Robot Behavior,” by M. Edmonds, F. Gao, H. Liu, X. Xie, S. Qi, Y. Zhu, Y.N. Wu, H. Lu, and S.-C. Zhu from the University of California, Los Angeles, and B. Rothrock from the California Institute of Technology, in Pasadena, Calif., appears in the current issue of Science Robotics.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.