This is part three of a six-part series on the history of natural language processing.

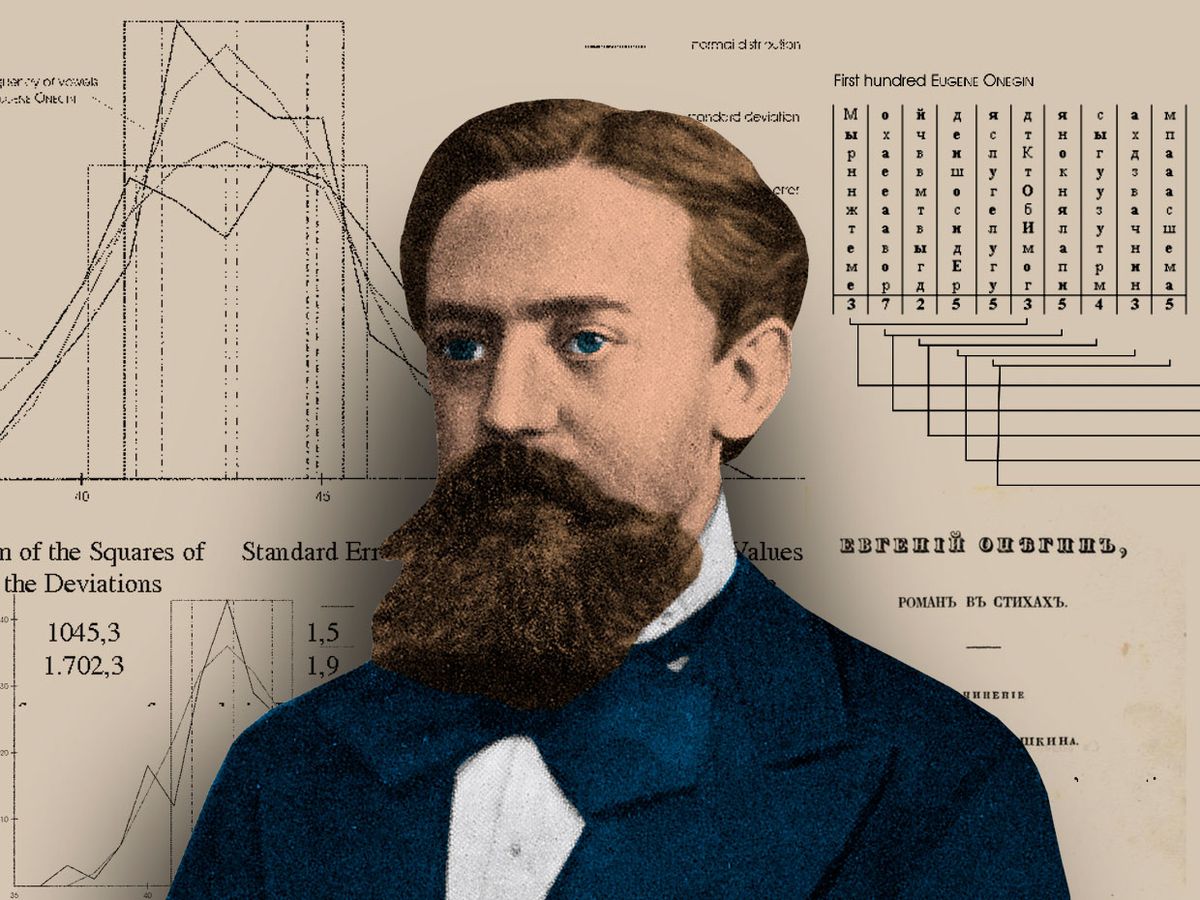

In 1913, the Russian mathematician Andrey Andreyevich Markov sat down in his study in St. Petersburg with a copy of Alexander Pushkin’s 19th-century verse novel, Eugene Onegin, a literary classic at the time. Markov, however, did not start reading Pushkin’s famous text. Rather, he took a pen and a piece of drafting paper, and wrote out the first 20,000 letters of the book in one long string, eliminating all punctuation and spaces. Then he arranged these letters in 200 grids (10-by-10 characters each) and began counting the vowels in every row and column, tallying the results.

To an onlooker, Markov’s behavior would have appeared bizarre. Why would someone deconstruct a work of literary genius in this way, rendering it incomprehensible? But Markov was not reading the book to learn lessons about life and human nature; he was searching for the text’s more fundamental mathematical structure.

In separating the vowels from the consonants, Markov was testing a theory of probability that he had been developing since 1909. Up until that point, the field of probability had been mostly limited to analyzing phenomena like roulette or coin flipping, where the outcome of previous events does not change the probability of current events. But Markov felt that most things happen in chains of causality and are dependent on prior outcomes. He wanted a way of modeling these occurrences through probabilistic analysis.

Language, Markov believed, was an example of a system where past occurrences partly determine present outcomes. To demonstrate this, he wanted to show that in a text like Pushkin’s novel, the chance of a certain letter appearing at some point in the text is dependent, to some extent, on the letter that came before it.

To do so, Markov began counting vowels in Eugene Onegin, and found that 43 percent of letters were vowels and 57 percent were consonants. Then Markov sorted the 20,000 letters into pairs of vowel and consonant combinations: He found that there were 1,104 vowel-vowel pairs, 3,827 consonant-consonant pairs, and 15,069 vowel-consonant and consonant-vowel pairs. What this demonstrated, statistically speaking, was that for any given letter in Pushkin’s text, if it was a vowel, odds were that the next letter would be a consonant, and vice versa.
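The pair counts above can be turned into the conditional probabilities that Markov was after. The following is a minimal sketch rather than a reconstruction of his actual calculation: it assumes that the 15,069 mixed pairs split evenly between vowel-consonant and consonant-vowel, and the function and variable names are illustrative.

```python
# Pair counts reported for the first 20,000 letters of Eugene Onegin.
VV = 1_104      # vowel followed by vowel
CC = 3_827      # consonant followed by consonant
MIXED = 15_069  # vowel-consonant and consonant-vowel pairs combined

# Assumption: the mixed pairs split evenly between the two directions.
VC = CV = MIXED / 2


def transition_probabilities():
    """Return P(vowel | previous vowel), P(vowel | previous consonant), P(vowel)."""
    p_vowel_after_vowel = VV / (VV + VC)
    p_vowel_after_consonant = CV / (CV + CC)
    p_vowel_overall = (VV + VC) / (VV + VC + CV + CC)
    return p_vowel_after_vowel, p_vowel_after_consonant, p_vowel_overall


if __name__ == "__main__":
    after_vowel, after_consonant, overall = transition_probabilities()
    print(f"P(vowel | previous vowel)     = {after_vowel:.2f}")      # 0.13
    print(f"P(vowel | previous consonant) = {after_consonant:.2f}")  # 0.66
    print(f"P(vowel)                      = {overall:.2f}")          # 0.43
```

Under that assumption, a vowel follows another vowel only about 13 percent of the time, far below the 43 percent one would expect if each letter were independent of the one before it.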

Markov used this analysis to demonstrate that Pushkin’s Eugene Onegin wasn’t just a random distribution of letters but had some underlying statistical qualities that could be modeled. The enigmatic research paper that came out of this study, titled “An Example of Statistical Investigation of the Text Eugene Onegin Concerning the Connection of Samples in Chains,” was not widely cited in Markov’s lifetime, and was not translated into English until 2006. But some of its central concepts around probability and language spread across the globe, eventually finding re-articulation in Claude Shannon’s hugely influential paper, “A Mathematical Theory of Communication,” which came out in 1948.

Shannon’s paper outlined a way to precisely measure the quantity of information in a message, and in doing so, set the foundations for a theory of information that would come to define the digital age. Shannon was fascinated by Markov’s idea that in a given text, the likelihood of some letter or word appearing could be approximated. Like Markov, Shannon demonstrated this by performing some textual experiments that involved making a statistical model of language, then went a step further by trying to use the model to generate text according to those statistical rules.

In an initial control experiment, he started by generating a sentence by picking letters randomly from a 27-symbol alphabet (26 letters, plus a space), and got the following output:

XFOML RXKHRJFFJUJ ZLPWCFWKCYJ FFJEYVKCQSGHYD QPAAMKBZAACIBZLHJQD

The sentence was meaningless noise, Shannon said, because when we communicate we don’t choose letters with equal probability. As Markov had shown, consonants are more likely than vowels. But at a greater level of granularity, E’s are more common than S’s, which are more common than Q’s. To account for this, Shannon amended his original alphabet so that it modeled the probability of English more closely: he was 11 percent more likely to draw an E from the alphabet than a Q. When he again drew letters at random from this recalibrated alphabet, he got a sentence that came a bit closer to English.

OCRO HLI RGWR NMIELWIS EU LL NBNESEBYA TH EEI ALHENHTTPA OOBTTVA NAH BRL.
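Both of these experiments are easy to imitate in a few lines of Python. The sketch below is only an illustration of the idea, not Shannon's procedure: the function names are made up for this example, and the letter frequencies are estimated from whatever sample text the caller supplies rather than taken from the tables Shannon used.

```python
import random
import string
from collections import Counter

ALPHABET = string.ascii_uppercase + " "  # 26 letters plus a space


def zero_order_text(length, seed=None):
    """Draw every symbol with equal probability, as in the control experiment."""
    rng = random.Random(seed)
    return "".join(rng.choice(ALPHABET) for _ in range(length))


def symbol_frequencies(sample):
    """Count how often each of the 27 symbols occurs in a sample text."""
    return Counter(ch for ch in sample.upper() if ch in ALPHABET)


def first_order_text(sample, length, seed=None):
    """Draw symbols independently, weighted by their frequency in the sample.

    The sample must contain at least one letter or space.
    """
    counts = symbol_frequencies(sample)
    symbols = list(counts)
    weights = [counts[s] for s in symbols]
    rng = random.Random(seed)
    return "".join(rng.choices(symbols, weights=weights, k=length))


if __name__ == "__main__":
    sample = "the chance of a certain letter appearing depends on the letter before it"
    print(zero_order_text(60))
    print(first_order_text(sample, 60))
```

The second function still treats every draw as independent of the last one. It only changes how often each symbol turns up, which is why its output looks more like English without yet forming words.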

In a series of subsequent experiments, Shannon demonstrated that as you make the statistical model more complex, you get increasingly comprehensible results. Shannon, via Markov, revealed a statistical framework for the English language, and showed that by modeling this framework (that is, by analyzing the dependent probabilities of letters and words appearing in combination with each other) he could actually generate language.

The more complex the statistical model of a given text, the more accurate the language generation becomes—or as Shannon put it, the greater “resemblance to ordinary English text.” In the final experiment, Shannon drew from a corpus of words instead of letters and achieved the following:

THE HEAD AND IN FRONTAL ATTACK ON AN ENGLISH WRITER THAT THE CHARACTER OF THIS POINT IS THEREFORE ANOTHER METHOD FOR THE LETTERS THAT THE TIME OF WHO EVER TOLD THE PROBLEM FOR AN UNEXPECTED.
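A word-level generator in the same spirit can be sketched as a chain in which each word is chosen according to which words followed the previous one in some source text. This is an assumed, simplified version of the idea, with illustrative function names; Shannon worked by hand, and the source text here is whatever the caller passes in.

```python
import random
from collections import defaultdict


def build_successors(text):
    """Map each word to the list of words that follow it in the text."""
    words = text.upper().split()
    successors = defaultdict(list)
    for current, following in zip(words, words[1:]):
        successors[current].append(following)
    return successors


def generate(text, length, seed=None):
    """Generate `length` words, each drawn from the successors of the last."""
    rng = random.Random(seed)
    words = text.upper().split()
    if not words:
        return ""
    successors = build_successors(text)
    word = rng.choice(words)
    output = [word]
    while len(output) < length:
        options = successors.get(word)
        # A word with no recorded successor is a dead end, so restart
        # from a random word in the source text.
        word = rng.choice(options) if options else rng.choice(words)
        output.append(word)
    return " ".join(output)


if __name__ == "__main__":
    source = (
        "the likelihood of some letter or word appearing could be approximated "
        "and the likelihood of some word appearing depends on the word before it"
    )
    print(generate(source, 15))
```

Because repeated successors appear more than once in each list, a word that often follows another in the source is proportionally more likely to follow it in the output.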

For both Shannon and Markov, the insight that language’s statistical properties could be modeled offered a way to re-think broader problems that they were working on.

For Markov, it extended the study of stochasticity beyond mutually independent events, paving the way for a new era in probability theory. For Shannon, it helped him formulate a precise way of measuring and encoding units of information in a message, which revolutionized telecommunications and, eventually, digital communication. But their statistical approach to language modeling and generation also ushered in a new era for natural language processing, which has ramified through the digital age to this day.

Last week’s post described Leibniz’s proposal for a machine that combined concepts to form reasoned arguments. Come back next Monday for part four, “Why People Demanded Privacy to Confide in the World’s First Chatbot.”
