Computers are getting better each year at AI-style tasks, especially those involving vision—identifying a face, say, or telling if a picture contains a certain object. In fact, their progress has been so significant that some researchers now believe the standardized tests used to evaluate these programs have become too easy to pass, and therefore need to be made more demanding.

At issue are the “public data sets” commonly used by vision researchers to benchmark their progress, such as LabelMe at MIT or Labeled Faces in the Wild at the University of Massachusetts, Amherst. The former, for example, contains photographs that have been labeled via crowdsourcing, so that a photo of street scene might have a “car” and a “tree” and a “pedestrian” highlighted and tagged. Success rates have been climbing for computer vision programs that can find these objects, with most of the credit for that improvement going to machine learning techniques such as convolutional networks, often called Deep Learning.

But a group of vision researchers say that simply calling out objects in a photograph, in addition to having become too easy, is simply not very useful; that what computers really need to be able to do is to “understand” what is “happening” in the picture. And so with support from DARPA, Stuart Geman, a professor of applied mathematics at Brown University, and three others have developed a framework for a standardized test that could evaluate the accuracy of a new generation of more ambitious computer vision programs.

The research was published this week in the Proceedings of the National Academy of Sciences; Geman’s co-authors are all from Johns Hopkins University, in Baltimore, Md., and include his brother, Donald Geman, along with Neil Hallonquist and Laurent Younes.

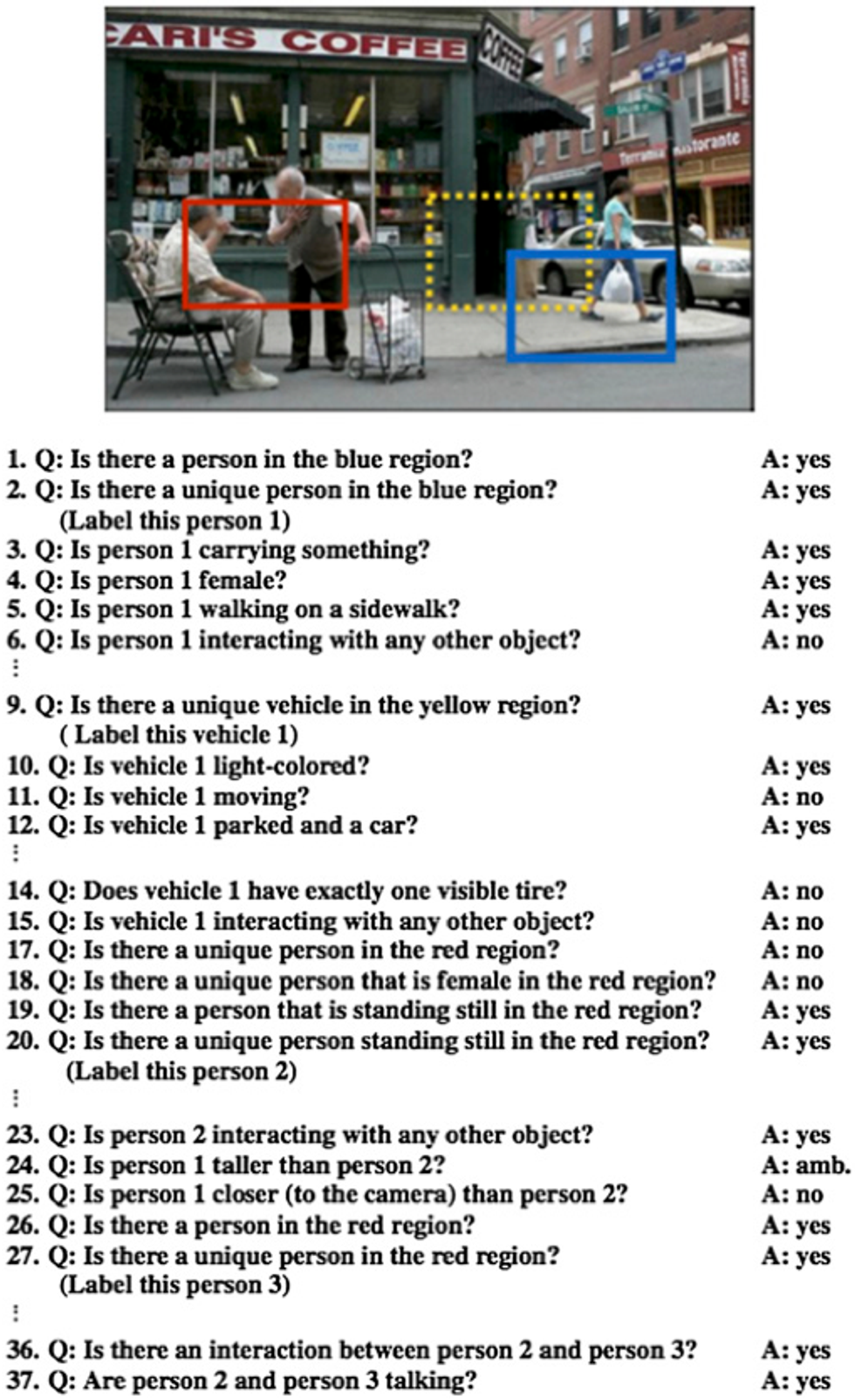

Their proposed method calls for human test-designers to develop a list of certain attributes that a picture might have, like whether a street scene has people in it, or whether the people are carrying anything or talking with each other. Photographs would first be hand-scored by humans on these criteria; a computer vision system would then be shown the same picture, without the “answers,” to determine if it was able to pick out what the humans had spotted.

Initially, the questions would be rudimentary, asking if there is a person in a designated region of the picture, for example. But the questions would grow in complexity as programs became more sophisticated; a more complicated question might involve the nature of an interaction between different people in the picture.

Eventually, test-developers could end up asking machines for the common-sense, real-world knowledge that has always been the goal of AI researchers. For example, a future question might be, “What will happen to the man in front of the building on whom the piano is about to fall?”

One advantage of the proposed approach, says Geman, is that it would allow a hierarchy of information to be developed for a picture, starting simple and growing more complex. It would also provide the basis for straightforward automated tests that can score how much of that context was being gleaned by the software.

Geman says that because of limitations of today’s data sets, computer vision researchers have been “teaching to the test,” such as creating systems that try to simply detect whether or not a photograph contains a cat. “It’s time to raise the bar,” he says.

Geman conceded that none of the Deep Learning systems currently in use would be able to pass even rudimentary versions of his proposed test. Asked whether the Deep Learning methodology is robust enough to one day be able handle the more complicated contexts and relationships that he interested in, Geman says, “I think the jury is still out on that.”

The proposal by Geman and his colleagues comes at a time when the AI community seems interested in developing better ways of measuring its progress. For example, researchers at a recent conference in Austin, Texas, attempted to come up with a replacement for the well-known Turing Test. They plan to continue their discussions in July in Buenos Aires, at the of the International Joint Conferences on Artificial Intelligence.

Gary Marcus, an NYU researcher who is coordinating those conference sessions, says that while no single test can determine everything about intelligence, the approach being taken by Geman and others is “a very nice, tractable step in the right direction” in a field that “desperately needs a strong set of new challenges that lead to more sophisticated systems.”