Can we study AI the same way we study lab rats? Researchers at DeepMind and Harvard University seem to think so. They built an AI-powered virtual rat that can carry out multiple complex tasks. Then, they used neuroscience techniques to understand how its artificial “brain” controls its movements.

Today’s most advanced AI is powered by artificial neural networks: machine learning algorithms made up of layers of interconnected components called “neurons” that are loosely inspired by the structure of the brain. While artificial networks and biological brains operate in very different ways, a growing number of researchers believe drawing parallels between the two could both improve our understanding of neuroscience and lead to smarter AI.

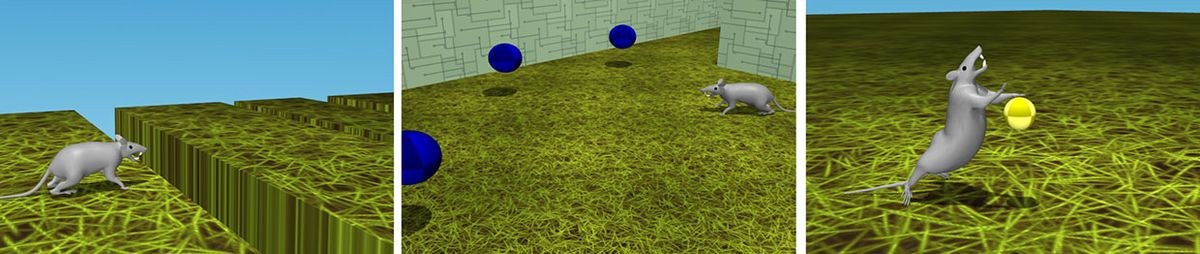

Now the authors of a new paper due to be presented this week at the International Conference on Learning Representations have created a biologically accurate 3D model of a rat that can be controlled by a neural network in a simulated environment. They also showed that they could use neuroscience techniques for analyzing biological brain activity to understand how the neural net controlled the rat’s movements.

The platform could be the neuroscience equivalent of a wind tunnel, says Jesse Marshall, coauthor and postdoctoral researcher at Harvard, by letting researchers test different neural networks with varying degrees of biological realism to see how well they tackle complex challenges.

“Typical experiments in neuroscience probe the brains of animals performing single behaviors, like lever tapping, while most robots are tailor-made to solve specific tasks, like home vacuuming,” he says. “This paper is the start of our effort to understand how flexibility arises and is implemented in the brain, and use the insights we gain to design artificial agents with similar capabilities.”

The virtual rodent features muscles and joints based on measurements from real-life rats, as well as vision and a sense of proprioception, which refers to the feedback system that tells animals where their body parts are and how they’re moving. The researchers then trained a neural network to guide the rat through four tasks—jumping over a series of gaps, foraging in a maze, trying to escape a hilly environment, and performing precisely timed pairs of taps on a ball.

Once the rat could successfully complete the tasks, the research team analyzed recordings of the network’s activity using techniques borrowed from neuroscience to understand how it achieved the motor control the tasks required.

Because the researchers had built the AI that powered the rat, much of what they found was expected. But one interesting insight they gained was that the neural activity seemed to occur over longer time scales than would be expected if it were directly controlling muscle forces and limb movements, says Diego Aldarondo, a coauthor and graduate student at Harvard.

“This implies that the network represents behaviors at an abstract scale of running, jumping, spinning, and other intuitive behavioral categories,” he says, a cognitive model that has previously been proposed to exist in animals.
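To give a feel for what "longer time scales" means in practice, here is a minimal Python sketch. It is not the authors' analysis and uses no data from the paper: it simulates two toy signals, one fast-changing (standing in for a muscle-level command) and one slow-changing (standing in for a network unit tracking a behavior), and estimates how long each takes to lose correlation with its own past. The function names, the `phi` values, and the 1/e threshold are all illustrative assumptions.

```python
import numpy as np


def ar1_signal(n_steps, phi, rng):
    """Simulate a toy signal x[t] = phi * x[t-1] + noise (hypothetical stand-in for activity)."""
    x = np.zeros(n_steps)
    noise = rng.standard_normal(n_steps)
    for t in range(1, n_steps):
        x[t] = phi * x[t - 1] + noise[t]
    return x


def autocorr_timescale(x, max_lag=500):
    """Return the first lag (in time steps) at which the autocorrelation drops below 1/e."""
    x = x - x.mean()
    var = np.dot(x, x) / len(x)
    for lag in range(1, max_lag + 1):
        r = np.dot(x[:-lag], x[lag:]) / (len(x) * var)
        if r < 1.0 / np.e:
            return lag
    return max_lag  # the signal never decorrelated within max_lag steps


rng = np.random.default_rng(0)
fast = ar1_signal(20_000, phi=0.5, rng=rng)   # changes almost every step
slow = ar1_signal(20_000, phi=0.98, rng=rng)  # drifts over many steps

fast_tau = autocorr_timescale(fast)
slow_tau = autocorr_timescale(slow)
print(f"fast signal timescale: {fast_tau} steps")
print(f"slow signal timescale: {slow_tau} steps")
```

In this toy setup the slow signal stays correlated with itself for many more steps than the fast one. A gap of that kind between network activity and the time scale of individual muscle commands is the sort of evidence that would point toward a more abstract, behavior-level representation.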

The neural network appeared to reuse some of these representations across tasks, and the neural activity encoding them often took the form of sequences, a phenomenon that has been observed in both rodents and songbirds.

The researchers have open sourced the virtual rat in the hopes that other researchers will build on their findings, says Josh Merel, a coauthor and a senior research scientist at DeepMind.

While neural networks don’t have the physiological realism of some models, Blake Richards, a neuroscientist from McGill University in Canada who was not involved in the work, says they capture enough important features of neural processing to generate useful predictions about how neural activity impacts behavior. The big contribution of this paper, he says, is to come up with a way to train these networks in a realistic manner that makes it much easier to compare against biological data.

“[The authors] are providing a platform for training [neural networks] in a realistic body and set of tasks, which will make comparisons to real brains in rodents far more valuable,” he adds.

While one must be cautious about making overly broad comparisons between artificial and biological neural networks, this approach could be a fruitful way to probe the neural underpinnings of behavior, says Stephen Scott, a neuroscientist at Queen’s University in Canada who was not involved in the work.

The complexity of recording neural activity in animals and linking it to specific behaviors means most experiments are done on relatively simple tasks in rigid experimental settings, Scott says. In contrast, the virtual rat can carry out complex, multipart behaviors like foraging that can be linked to its sensory input and neural activity with high precision.

The catch is that collecting comparable neural data from real animals on tasks this complicated is very difficult, says Scott. He would like to see the authors test the virtual rat on some of the simpler tasks used in laboratory settings, so that its neural activity patterns could be compared against those found in animals to see where they diverge.

Edd Gent is a freelance science and technology writer based in Bengaluru, India. His writing focuses on emerging technologies across computing, engineering, energy and bioscience.