Hollywood has already used computer-aided visual effects to make veteran actors look decades younger, enable action stars such as Arnold Schwarzenegger and Will Smith to battle digital doubles of themselves, and virtually resurrect dead actors for new Star Wars films or TV commercials. Now AI that can learn the idiosyncrasies of each actor’s voice and facial expressions is making it easier to dub films and TV shows in new languages while preserving the acting nuances and voices of the original-language performances.

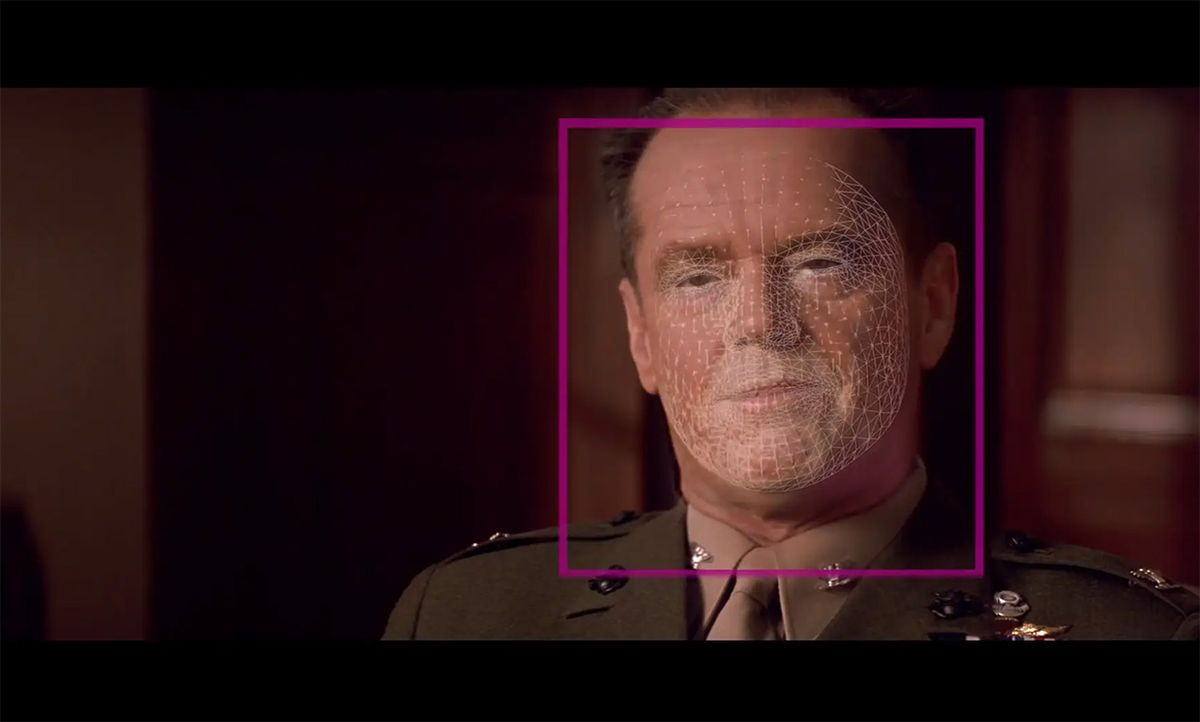

The London-based startup Flawless AI has partnered with researchers at the Max Planck Institute for Informatics in Germany to commercialize technology that can digitally capture actors’ performances from 2D film or TV image frames and transform those into a 3D computer model. After training AI to learn specific actors’ vocal and facial performances, the startup can generate modified versions of the original performance that change the actor’s voice and facial expression to fit an entirely different language.

“It's actually a pixel perfect 3D representation of the head of each of the actors,” says Nick Lynes, co-CEO and founder of Flawless AI. “And because of that pixel perfect frame, it represents every single phenomena and idiosyncratic style possible that the actor does, because it doesn't take much before the AI has understood all of the idiosyncrasies.”

The AI-generated dubbing performances still require some manual touch-ups by human visual effects artists, and Lynes expects that to be the case for the foreseeable future. But the impressive end results showcased in a Flawless AI demo reel include Tom Cruise and Jack Nicholson confronting each other in fluent French in the 1992 film “A Few Good Men,” Robert De Niro speaking German in the 2015 film “Heist,” and Tom Hanks’s titular “Forrest Gump” character crying over Jenny’s grave while speaking German, Spanish, and Japanese in the 1994 film.

Several other companies have been using AI to dub movies, TV shows, advertisements, and other content in new languages while retaining the original voices, including Israeli companies such as Deepdub and Canny AI. But Flawless AI’s approach goes beyond redoing the audio: it also reshapes the actor’s mouth movements and facial expressions to suit the new language dub. It’s likely one of the very first efforts to commercialize such technology beyond what has been demonstrated in academic research papers.

The idea for the startup originated from a conversation between Lynes and Scott Mann, a Hollywood film director, back when Lynes was shopping around a TV show script in Los Angeles. The two men quickly found common ground discussing how AI might help film and TV show productions reduce the cost of certain visual effects and reshoots, which led to them forming a partnership and becoming co-CEOs and founders of Flawless AI.

In addition to hiring veteran visual effects supervisors and Hollywood dealmakers, Flawless AI has teamed up with researchers led by Christian Theobalt, scientific director at the Max Planck Institute for Informatics in Germany. Theobalt’s research group uses AI techniques based on deep neural networks to digitally change the clothing that an actor is wearing in the same scene, generate 3D models of people’s faces and heads based on 2D images, and create 3D computer-animated figures based on a single 2D image of a person.

The startup can currently perform multiple dubs within the usual production time frame for a film or TV show, or it can go back and dub an older film within six to 10 weeks. It has also reduced the amount of training time and data necessary for its AI to learn all the performance nuances of each actor. The team initially used all the available raw, unedited footage from each film or TV production to train the AI, but has since found a way to train it more efficiently using just a small fraction of such footage.

Digitally modifying a person’s mouth movements to fit entirely different words is something that can also be seen in AI-assisted “deepfake” videos. But Flawless AI’s technology delivers more realistic and natural modified performances than the typical deepfake video, which is important given that the end results may need to be shown on a standard movie theater screen or even in the giant IMAX format.

Lynes was also careful to distinguish Flawless AI’s approach from the negative applications of deepfakes that can involve putting both celebrities and ordinary people into embarrassing or compromising videos. “What we're doing is with permission and approval and excitement where we've got the content approval, we're doing it commercially, we're doing it with respect,” Lynes says.

The language dubbing application alone has big implications for Hollywood studios—not to mention streaming platforms such as Netflix and Disney Plus—that are looking to make more films and TV shows available to the largest audiences possible. Flawless AI has already signed at least one contract with an undisclosed customer and is talking with “all the major streamers and most of the major studios” about possible deals, Lynes says.

But the Flawless AI approach to digitizing actor performances may have even bigger implications beyond enabling seamless dubbing of the newest films and TV shows in multiple languages. The company’s technology not only digitally captures the actor’s facial expressions and mouth movements, but also trains the AI on patterns in the walking gait and body movements of the actors, Lynes explains.

That raises the possibility of using the actors’ digital doubles to modify certain movie scenes in ways that fix mistakes or better conform to a director’s vision instead of getting the actors and crew back together for expensive reshoots. For example, it’s not hard to imagine how such technology could make it much easier for a Hollywood studio to make a change such as removing Henry Cavill’s contractually obligated mustache from the face of Superman during reshoots for the 2017 film “Justice League.”

The Flawless AI approach also digitally captures the entire environment and surroundings of an actor in a given scene. That could make it easier for algorithms to remove mistakes such as the infamous coffee cup that appears in the last season of the HBO medieval fantasy series “Game of Thrones,” as Lynes pointed out.

“Sometimes in the live action world, the real world, it’s hard to make sure everything goes in the way that you want it to go to be able to tell your story exactly as you wanted to tell it,” Lynes says. “So having AI [enabled] visual effects gives me functions in the future that are not possible to do with the standard visual effects process.”

Jeremy Hsu has been working as a science and technology journalist in New York City since 2008. He has written on subjects as diverse as supercomputing and wearable electronics for IEEE Spectrum. When he’s not trying to wrap his head around the latest quantum computing news for Spectrum, he also contributes to a variety of publications such as Scientific American, Discover, Popular Science, and others. He is a graduate of New York University’s Science, Health & Environmental Reporting Program.