If a distant comet is on course to collide with Earth, scientists will be able to detect it only about a year in advance. That doesn’t leave much time to prepare.

Artificial intelligence researchers believe they have the key to providing astronomers more foresight: machine learning algorithms that can more quickly identify and cluster the debris that comets leave in their wake. By speeding up analysis of meteor showers, researchers hope to pinpoint the orbits of distant, but potentially dangerous, comets. This project is one of five being explored as part of an artificial intelligence pilot research program sponsored by NASA.

Last Thursday at an event at Intel, participants in the NASA Frontier Development Laboratory research accelerator presented results showing how artificial intelligence can speed up space science. The lab, part of an effort by NASA to test the machine learning waters, is run by the SETI Institute; engineers at private companies including Intel, IBM, NVIDIA, and Lockheed Martin, among others, helped support the projects.

Companies such as Facebook and Google use machine learning to predict people’s buying habits and tag photos, but so far it hasn’t been widely applied to basic science problems, says Bill Diamond, CEO of the SETI Institute. Through the Frontier Development Laboratory, which just finished its second year, NASA is exploring the possibilities. The lab sponsors small groups of computer and planetary science researchers to work on important problems in space science for two months each summer.

NASA scientists in the audience were excited, but skeptical, about the results from the comet detection project. Long-period comets, whose orbits take them far beyond Jupiter, are too distant to observe directly. What we can see is the evidence they leave in their wake. One type of clue is a meteor shower, which happens when Earth moves through debris left by a comet. Researchers on the comet project developed an image-classifying algorithm to more rapidly distinguish meteors from passing clouds, fireflies, and airplanes (a task that’s usually done by people) and then cluster these individual observations over time. In so doing, they were able to draw attention to a group of previously unidentified meteor showers. These showers, the group believes, may be evidence of previously undetected long-period comets.
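For readers curious about what the clustering step might look like, here is a minimal sketch in Python. It is not the team's method, which the presentation did not detail. It assumes each confirmed meteor has already been reduced to an apparent direction on the sky (right ascension and declination, in degrees), and it groups meteors whose directions lie within a few degrees of one another. The function names, the 3-degree threshold, and the sample coordinates are all hypothetical.

```python
import math


def angular_separation_deg(ra1, dec1, ra2, dec2):
    """Great-circle angle, in degrees, between two sky directions given in degrees."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    cos_sep = (math.sin(dec1) * math.sin(dec2)
               + math.cos(dec1) * math.cos(dec2) * math.cos(ra1 - ra2))
    # Clamp to [-1, 1] to guard against floating-point rounding before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_sep))))


def cluster_directions(meteors, max_sep_deg=3.0):
    """Greedy grouping (an illustrative stand-in, not the project's algorithm).

    Each meteor joins the first cluster whose first member lies within
    max_sep_deg of it; otherwise it starts a new cluster.
    """
    clusters = []
    for ra, dec in meteors:
        for cluster in clusters:
            seed_ra, seed_dec = cluster[0]
            if angular_separation_deg(ra, dec, seed_ra, seed_dec) <= max_sep_deg:
                cluster.append((ra, dec))
                break
        else:
            clusters.append([(ra, dec)])
    return clusters


# Hypothetical (right ascension, declination) pairs in degrees, made up for illustration.
observations = [(46.0, 58.0), (47.0, 57.5), (262.0, 49.0), (46.5, 58.2), (261.0, 48.5)]
groups = cluster_directions(observations)
for group in groups:
    print(len(group), "meteors near", group[0])
```

A real pipeline would likely also group by date and by the meteors' speed, since a shower recurs at the same time each year; this sketch leaves those dimensions out to stay short.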

The neural network, which the group put together and tested over the course of two months, agreed with human classifications of meteors about 90 percent of the time. In the pilot project, the group analyzed about one million meteors.
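The agreement figure is simple to compute: it is the fraction of detections for which the network's label matches the human reviewer's. The sketch below shows the arithmetic on ten made-up labels; the function name and the sample data are hypothetical and are not drawn from the project.

```python
def agreement_rate(model_labels, human_labels):
    """Fraction of items on which the model's label equals the human's label."""
    if len(model_labels) != len(human_labels):
        raise ValueError("label lists must have the same length")
    if not model_labels:
        raise ValueError("label lists must not be empty")
    matches = sum(m == h for m, h in zip(model_labels, human_labels))
    return matches / len(model_labels)


# Toy labels, invented for illustration only.
human = ["meteor", "plane", "meteor", "cloud", "meteor",
         "firefly", "meteor", "meteor", "plane", "meteor"]
model = ["meteor", "plane", "meteor", "meteor", "meteor",
         "firefly", "meteor", "meteor", "plane", "meteor"]

rate = agreement_rate(model, human)
print(f"Agreement: {rate:.0%}")  # 9 of 10 labels match
```

Agreement with human reviewers is not the same as correctness, since the reviewers can be wrong too, which is part of why reviewers asked for more evidence.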

Some NASA reviewers in the audience wanted to see more evidence that the meteors detected by the neural network were not noise; others wanted more evidence that the meteors were actually from comets, not asteroids or other sources. Project scientist Marcelo de Cicco, an astronomer at the Brazilian National Metrology Institute, said there are many next steps to take. “We want to learn from what we can see, and look into these predicted orbits, because right now we have nothing,” he said.

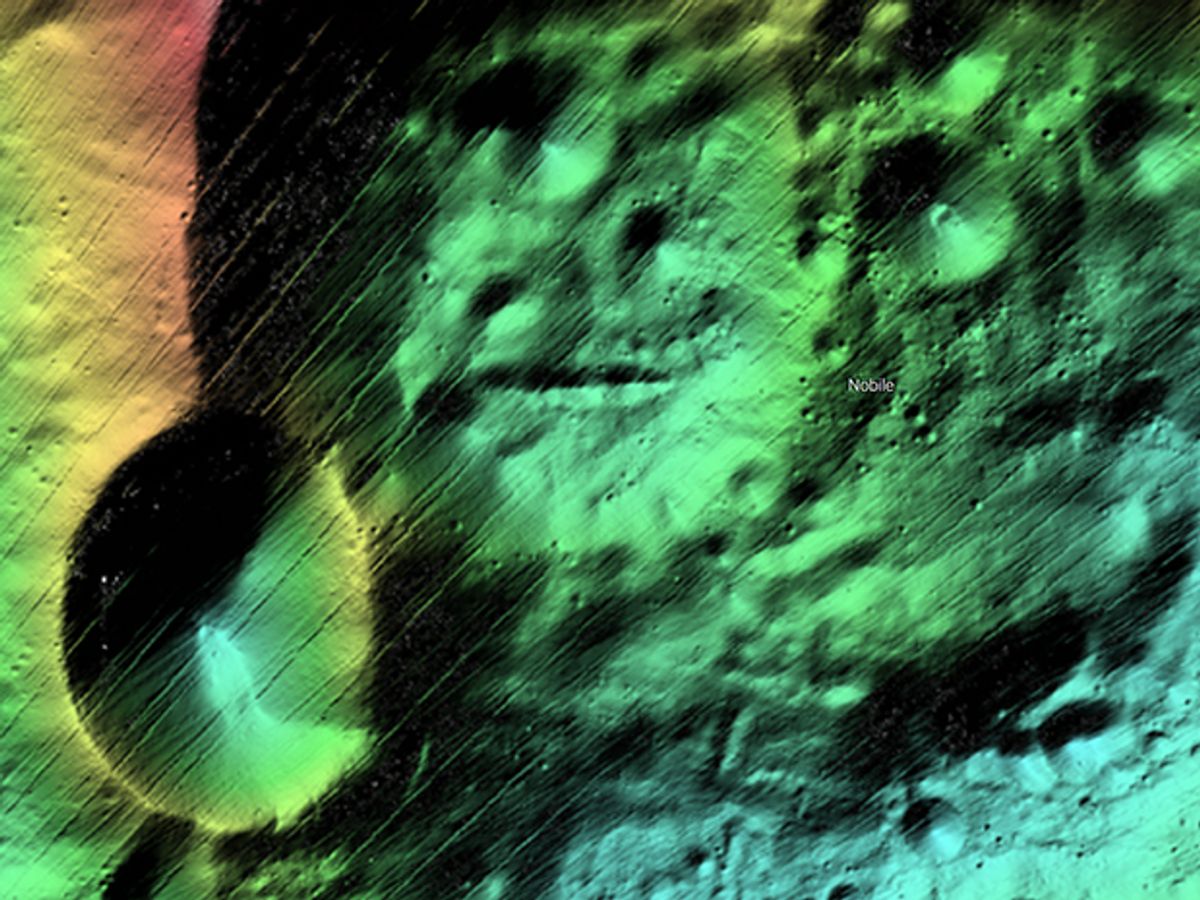

Other projects had more to go on. One group used Intel’s deep-learning accelerator, called Nervana, to improve the resolution of maps of the moon. This team also used a neural net to classify images: crater or no crater? Their results agreed with human image classification about 98 percent of the time, about five times the accuracy of previous image analysis systems. The group’s aim is to provide reconnaissance so that future lunar rovers don’t fall into unmapped craters while looking for water at the moon’s poles. The poles are heavily shadowed, so it’s difficult to distinguish crater from shadow.

Two teams working on forecasting solar flares (bursts of radiation from the sun that can cause problems with the power grid, GPS, and other systems) had support from IBM and Lockheed Martin. One group’s algorithm, called FlareNET, outperformed NOAA’s existing system for predicting solar flares. “I don’t know who’s got the job of telling NOAA about this,” quipped Frontier Development Lab director James Parr.

“The projects show how AI can crunch the workflow, and do a few months of work in a few hours,” says Parr. Scientists in the room were excited about the prospects for continuing these projects beyond the pilot stage—and for putting the detection and forecasting systems into practice. However, neither Diamond nor Parr could comment on whether NASA will take up and expand on any of the projects before next summer’s session.

Katherine Bourzac is a freelance journalist based in San Francisco, Calif. She writes about materials science, nanotechnology, energy, computing, and medicine—and about how all these fields overlap. Bourzac is a contributing editor at Technology Review and a contributor at Chemical & Engineering News; her work can also be found in Nature and Scientific American. She serves on the board of the Northern California chapter of the Society of Professional Journalists.