On 20 September, Nvidia’s vice president of applied deep learning, Bryan Catanzaro, went to Twitter with a bold claim: In certain GPU-heavy games, like the classic first-person platformer Portal, seven out of eight pixels on the screen are generated by a new machine-learning algorithm. That’s enough, he said, to accelerate rendering by up to 5 times.

This impressive feat is currently limited to a few dozen 3D games, but it’s a hint at the gains that neural rendering will soon deliver, and at what the technique could do for everyday consumer electronics.

Neural rendering as turbocharger

Catanzaro’s claim is made possible by DLSS 3, the latest version of Nvidia’s DLSS (Deep Learning Super Sampling). It combines AI-powered image upscaling with a new feature exclusive to DLSS 3: optical multiframe generation. Consecutive frames are analyzed together with an optical flow field, which estimates how pixels move from one frame to the next. DLSS 3 then slots unique, AI-generated frames between traditionally rendered frames.
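DLSS 3 uses a trained neural network to synthesize its in-between frames. The sketch below is only a toy, non-neural illustration of the general idea of flow-based frame interpolation, not Nvidia’s method. It assumes grayscale frames stored as lists of rows, a flow field already expressed at the pixels of the frame being synthesized, and nearest-neighbour sampling; the function names are hypothetical. Each in-between pixel is fetched from half a motion step back in the earlier frame and half a step forward in the later one, and the two samples are averaged.

```python
from typing import List, Tuple

Frame = List[List[float]]               # grayscale frame, one list per row
Flow = List[List[Tuple[float, float]]]  # per-pixel motion (dx, dy) from frame A to frame B


def sample(frame: Frame, x: float, y: float) -> float:
    """Nearest-neighbour lookup, clamped to the frame borders."""
    h, w = len(frame), len(frame[0])
    xi = min(max(round(x), 0), w - 1)
    yi = min(max(round(y), 0), h - 1)
    return frame[yi][xi]


def interpolate_midframe(a: Frame, b: Frame, flow: Flow) -> Frame:
    """Synthesize the frame halfway between a and b (toy, hypothetical helper).

    Assumption: flow[y][x] is the motion of whatever sits at (x, y) in the
    middle frame, so that content came from (x, y) - flow/2 in frame A and
    moves on to (x, y) + flow/2 in frame B.
    """
    mid: Frame = []
    for y in range(len(a)):
        row = []
        for x in range(len(a[0])):
            dx, dy = flow[y][x]
            from_a = sample(a, x - dx / 2, y - dy / 2)  # half a step back in time
            from_b = sample(b, x + dx / 2, y + dy / 2)  # half a step forward in time
            row.append((from_a + from_b) / 2)           # blend the two estimates
        mid.append(row)
    return mid


# A bright pixel moves two pixels to the right between frames A and B.
a = [[0.0, 1.0, 0.0, 0.0, 0.0]]
b = [[0.0, 0.0, 0.0, 1.0, 0.0]]
flow = [[(2.0, 0.0)] * 5]
print(interpolate_midframe(a, b, flow))  # [[0.0, 0.0, 1.0, 0.0, 0.0]]
```

The synthesized frame places the bright pixel halfway along its path. Hand-written rules like this break down at occlusions and scene cuts, which is where a learned model has room to do better.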

“When you’re playing with DLSS super resolution on performance mode in 4K, seven out of every eight pixels are being run through a neural network,” says Catanzaro. “I think that’s one of the reasons why you see such a great speedup. In that mode, in games that are GPU-heavy like Portal RTX […] seven out of every eight pixels are being generated by AI, and as a result we’re 530 percent faster.”
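The “seven out of every eight” figure follows from simple arithmetic, assuming DLSS performance mode renders internally at half the output resolution on each axis (1920 x 1080 for a 3840 x 2160 display) and that frame generation inserts one AI-generated frame after every rendered one. The sketch below, with a hypothetical helper name, multiplies those two fractions.

```python
from fractions import Fraction


def traditionally_rendered_fraction(axis_scale, generated_per_rendered):
    """Share of displayed pixels that come from the conventional graphics pipeline.

    axis_scale: internal render resolution divided by output resolution, per axis.
    generated_per_rendered: AI-generated frames inserted after each rendered frame.
    """
    # Upscaling: a frame rendered at axis_scale per axis shades axis_scale**2 of the output pixels.
    pixel_share = Fraction(axis_scale) ** 2
    # Frame generation: only 1 of every (1 + generated_per_rendered) displayed frames is rendered.
    frame_share = Fraction(1, 1 + generated_per_rendered)
    return pixel_share * frame_share


# Assumed 4K "performance" settings: 1920 x 1080 internal for 3840 x 2160 output,
# plus one generated frame per rendered frame.
rendered = traditionally_rendered_fraction(Fraction(1920, 3840), 1)
print(rendered, 1 - rendered)  # 1/8 7/8
```

Without frame generation, the same upscale alone would leave three of every four displayed pixels to the network.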

This example, which references testing by the 3D graphics publication and YouTube channel Digital Foundry, is a best-case scenario. But results in other tests remain impressive. Most show DLSS 3 delivering a two- to three-times performance gain over purely traditional rendering at 4K resolution. And while Nvidia leads the pack, it has competitors. Intel offers XeSS (Xe Super Sampling), an AI-powered upscaler. AMD’s RDNA 3 graphics architecture includes a pair of AI accelerators in each compute unit, though it’s not yet clear how the company will use them.


Games have led the wave of neural rendering because they’re well suited to the use of machine-learning techniques. “That problem there, where you look at little patches of an image and try to guess what’s missing, is just a really good fit for machine learning,” says Jon Barron, senior staff researcher at Google. The similarity between frames, along with a frame rate high enough to obscure minor errors in motion, works to machine learning’s strengths.

It’s not perfect: DLSS 3 has trouble with scene transitions, while XeSS can cause a shimmering effect in some situations. However, both Barron and Catanzaro think these quality problems can be overcome by feeding neural rendering models additional training data. The year 2023 offers a chance to see the technology progress as Nvidia, Intel, and AMD work with software partners to enhance their respective neural rendering techniques.

3D neural rendering steps into the spotlight

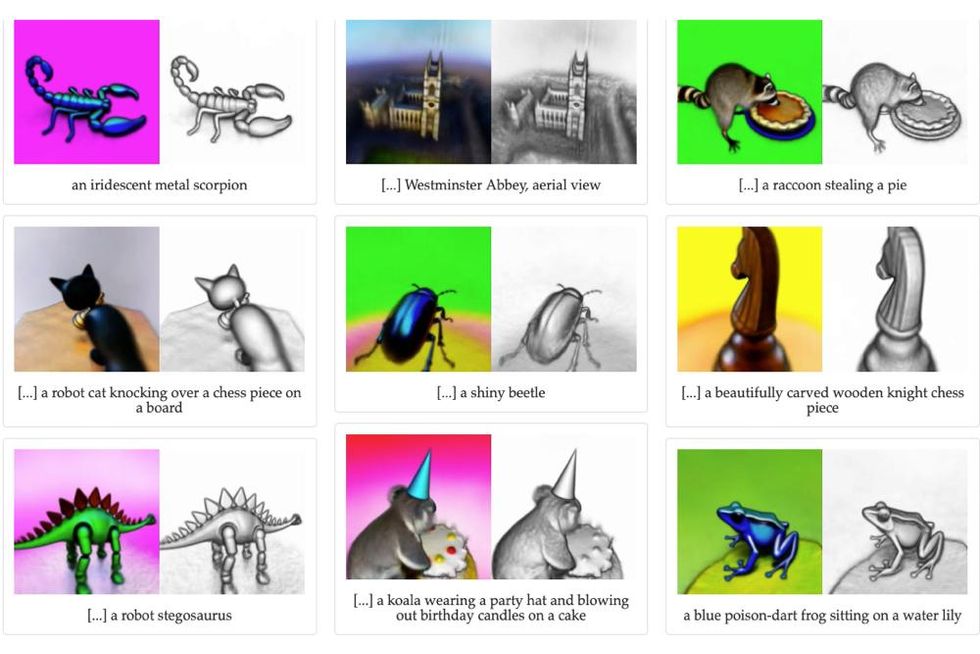

This is just the tip of the spear. Barron sees a fork between “2D neural rendering” techniques like Nvidia DLSS 3, which improves the results of a traditional graphics pipeline, and “3D neural rendering,” which generates graphics entirely through machine learning. Barron coauthored a paper on DreamFusion, a machine-learning model that generates 3D objects from plain text inputs. The resulting 3D models can be exported to rendering software and game engines. Nvidia has shown equally impressive results with Instant NeRF, which generates full-color 3D scenes from 2D images.
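Radiance-field methods in the NeRF family, which Instant NeRF builds on and which DreamFusion optimizes to produce its 3D objects, turn a learned scene into pixels by alpha-compositing samples along each camera ray. For densities sigma_i, colours c_i, and sample spacings delta_i, the pixel colour is the sum over i of T_i * (1 - exp(-sigma_i * delta_i)) * c_i, where T_i = exp(-sum over j < i of sigma_j * delta_j). The minimal sketch below implements only that compositing step; in a real system a neural network predicts sigma_i and c_i, whereas here they are passed in as plain numbers, and the function name is hypothetical.

```python
import math


def composite_ray(densities, colors, deltas):
    """Front-to-back alpha compositing of samples along one camera ray.

    densities: volume density sigma_i at each sample (predicted by a network in a real NeRF)
    colors:    (r, g, b) colour c_i at each sample
    deltas:    distance delta_i from each sample to the next
    Returns the pixel colour and the transmittance left over for the background.
    """
    transmittance = 1.0  # T_i: chance that light reaches sample i without being absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # T_i * (1 - exp(-sigma_i * delta_i))
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha            # now equals exp(-sum_{j<=i} sigma_j * delta_j)
    return tuple(pixel), transmittance


# Empty space, then a half-opaque green segment, then a solid red surface.
color, leftover = composite_ray(
    densities=[0.0, math.log(2.0), 1e4],
    colors=[(1.0, 1.0, 1.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0)],
    deltas=[1.0, 1.0, 1.0],
)
print(color, leftover)  # approximately (0.5, 0.5, 0.0) and 0.0
```

The empty sample contributes nothing, the half-opaque green segment contributes half the pixel, and the solid red surface absorbs the rest. Because every step is differentiable, the network producing the densities and colours can be trained from 2D images alone.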

Anton Kaplanyan, vice president of graphics research at Intel, believes that neural rendering techniques will make 3D content creation more approachable. “If you look at the current social networks, it’s so much commoditized. A person can just click on a button, take a photo, share it with their friends and relatives,” says Kaplanyan. “If we want to elevate this experience into 3D, we need to pull people [in] who don’t know the professional tools to become content creators as well.”

How quickly 3D neural rendering improves through 2023 will be key to its future. The technology is impressive, but unproven compared with traditional rendering. “Computer graphics are amazing, it works really well, and we have really good ways of solving a lot of problems that may be the way we do it forever,” says Barron. He notes that content creators and developers are already familiar with the tools used to create for, and optimize, a traditional graphics pipeline.

The question, then, is how quickly the graphics industry will embrace 3D neural rendering as an alternative to tried-and-true methods. It may prove an unsettling transition because of the conflicting incentives that surround it. Machine-learning models often run well on modern graphics architectures, but there’s tension in how GPU, CPU, and dedicated AI coprocessors—all of which are relevant to AI performance, depending on its implementation—combine in a consumer product. Betting on the wrong technique, or the wrong architecture to support it, could prove a costly mistake.

Still, Catanzaro believes the lure of 3D neural rendering will be hard to resist. “I think that we’re going to see a lot of neural rendering techniques that are even more radical,” he says, referencing generative text-to-image and text-to-3D techniques. “The graphical quality from some of these completely neural models is quite extraordinary. Some of them are able to do shadows and refractions and reflections and, you know, these things that we typically only know how to do in graphics with ray tracing, are able to be simulated by a neural network without any explicit instructions on how to do that. So I would consider those even more radical approaches to neural rendering than DLSS, and I think the future of graphics is going to use both of those things.”

Neural rendering’s best perk? Efficiency

Neural rendering is alluring not just for its potential performance but also for its potential efficiency. The 530 percent gain DLSS 3 delivers in Portal with RTX can be spent on higher frame rates, or, with the frame rate capped at a target, on lower power consumption: because each frame is cheaper to produce, the GPU has less work to do to hit the cap.
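To see why a frame cap turns speed into savings, consider a deliberately simple model, assumed here rather than taken from Nvidia: the GPU renders each frame at full speed, then idles until the next one is due, with no clock or voltage scaling. Under that model, the share of time the GPU is busy is the capped frame rate divided by the uncapped one. The function name and frame rates below are hypothetical.

```python
def gpu_busy_fraction(uncapped_fps, cap_fps):
    """Fraction of wall-clock time the GPU spends rendering under a frame-rate cap.

    Simplified model (an assumption): the GPU renders each frame flat out, then sits
    idle until the next frame is due; clock and voltage scaling are ignored.
    """
    if cap_fps >= uncapped_fps:
        return 1.0  # the cap is never reached, so the GPU never idles
    return cap_fps / uncapped_fps


# Hypothetical game: 40 fps with conventional rendering, 100 fps with a 2.5x neural speedup.
print(gpu_busy_fraction(40, 60))   # 1.0: below the 60 fps cap, no idle time
print(gpu_busy_fraction(100, 60))  # 0.6: the GPU can idle 40 percent of the time
```

Real GPUs also lower clocks and voltage when lightly loaded, so measured power savings depend on the hardware and the game.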


That’s a big deal, because consumer electronics has a problem. Moore’s Law is dead—or, if not dead, on life support. “Moore’s Law is running out of steam, as you know, and my personal belief is that post-Moore graphics is neural graphics,” says Catanzaro. For Nvidia, neural rendering represents a way to keep delivering big gains without doubling up on transistors.

Intel’s Kaplanyan disputes the demise of Moore’s Law (Intel CEO Pat Gelsinger insists it’s alive and well), but agrees that neural rendering can improve efficiency. “There are some solutions to chip size, there are the chiplets, which Pat has talked about,” he says. “On the other hand, I also agree that we have a great opportunity with machine-learning algorithms to use this energy and this area way more efficiently to produce new visuals.”

Efficiency is a battleground for AMD, Nvidia, and Intel, as all three companies work with device manufacturers to design new consumer laptops and tablets. For device makers, efficiency gains lead to thinner, lighter devices that last longer on battery, while at the same time enhancing what users can accomplish with the device.

“I am very excited about enabling...the experiences that you would otherwise see only in high-end Hollywood movies or Triple-A games, but those experiences you would be able to make yourself,” says Kaplanyan. “You’d be able to do it on your laptop, or some other very power-confined device.”


It’s clear that 2023 will be a foundational year for neural rendering in consumer devices. Nvidia’s RTX 40-series with DLSS 3 support will roll out broadly to consumer desktops and laptops; Intel is expected to expand its Arc graphics line with its upcoming Battlemage architecture; and AMD will launch more variants of cards using its RDNA 3 architecture.

These releases lay the groundwork for a revolution in graphics. It won’t happen overnight, and it won’t be easy—but as consumers demand ever more impressive visuals, and more capable content creation, from smaller, thinner form factors, neural rendering could prove the best way to deliver.

Correction 28 Nov. 2022: A previous version of this story spelled Nvidia’s Bryan Catanzaro’s name incorrectly. Spectrum regrets the error.

This article appears in the January 2023 print issue as “AI Is a Powerful Pixel Painter.”

Matthew S. Smith is a freelance consumer technology journalist with 17 years of experience and the former Lead Reviews Editor at Digital Trends. An IEEE Spectrum Contributing Editor, he covers consumer tech with a focus on display innovations, artificial intelligence, and augmented reality. A vintage computing enthusiast, Matthew covers retro computers and computer games on his YouTube channel, Computer Gaming Yesterday.