Chips and talent are in short supply as companies scrabble to jump on the AI bandwagon. Startup SambaNova claims its new processor could help companies have their own large language model (LLM) up and running in just days.

The Palo Alto–based company, which has raised more than US $1 billion in venture funding, won’t be selling the chip directly to companies. Instead it sells access to its custom-built technology stack, which features proprietary hardware and software especially designed to run the largest AI models.

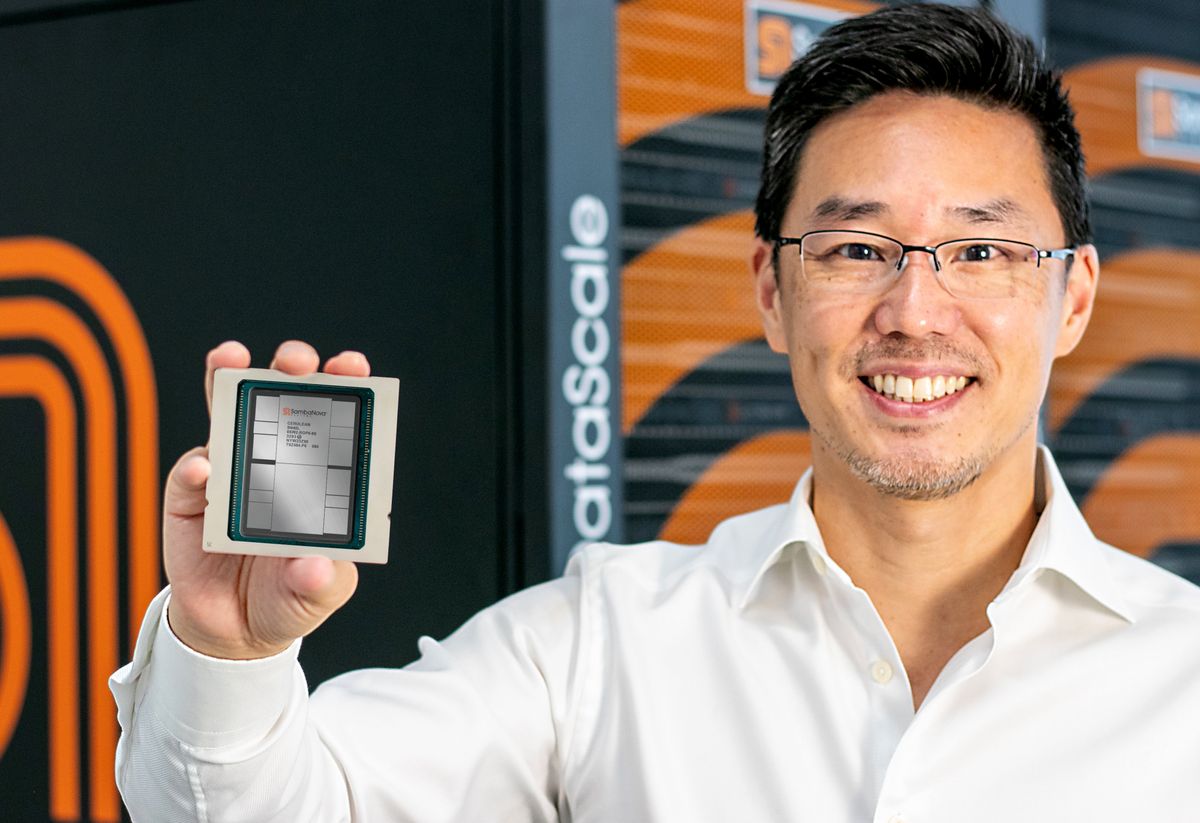

That technology stack has now received a major upgrade following the launch of the company’s new SN40L processor. Built using Taiwanese chip giant Taiwan Semiconductor Manufacturing Co.’s 5-nanometer process, each device features 102 billion transistors spread across 1,040 cores that are capable of speeds as high as 638 teraflops. It also has a novel three-tier memory system designed to cope with the huge data flows associated with AI workloads.

SambaNova claims that a node made up of just eight of these chips is capable of supporting models with as many as 5 trillion parameters, which is almost three times the reported size of OpenAI’s GPT-4 LLM. And that’s with a sequence length (a measure of the length of input a model can handle) as high as 256,000 tokens. Doing the same using industry-standard GPUs would require hundreds of chips, claims CEO Rodrigo Liang, which he says puts the total cost of ownership of SambaNova’s system at less than 1/25 that of the industry-standard approach.

“A trillion parameters is actually not a big model if you can run it on eight [chip] sockets,” says Liang. “We’re collapsing the cost structure and really refactoring how people are thinking about this, not looking at trillion-parameter models as something inaccessible.”

The new chip uses the same data-flow architecture that the company has relied on for its previous processors. SambaNova’s underlying thesis is that existing chip designs are overly focused on easing the flow of instructions, but for most machine-learning applications, efficient movement of data is a bigger bottleneck.

To get around this, the company’s chips feature a tiled array of memory and compute units connected by a high-speed switching fabric, which makes it possible to dynamically reconfigure how the units are linked up depending on the problem at hand. This works hand-in-hand with the company’s SambaFlow software, which can analyze a machine-learning model and work out the best way to connect the units to ensure seamless data flows and maximum hardware use.

The main difference between the company’s latest chip and its predecessor, the SN30, other than the shift from a 7-nm to a 5-nm process, is the addition of a third memory layer. The earlier chip featured 640 megabytes of on-chip SRAM and a terabyte of external DRAM, but the SN40L has 520 MB of on-chip memory, 1.5 terabytes of external memory, and an additional 64 gigabytes of high-bandwidth memory (HBM).
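As a rough check on these figures, the sketch below totals each memory tier across an eight-chip node and compares it with the space needed just to hold a model's weights. It assumes decimal units and 2 bytes per parameter (a 16-bit format); the function names are illustrative, and activations, caches, and any training state are ignored.

```python
# Per-chip SN40L capacities as quoted in the article (decimal units assumed).
PER_CHIP_BYTES = {
    "sram": 520 * 10**6,    # on-chip memory
    "hbm": 64 * 10**9,      # high-bandwidth memory
    "dram": 1500 * 10**9,   # external memory (1.5 TB)
}


def node_capacity(chips=8):
    """Total bytes per tier for a node of `chips` processors."""
    return {tier: chips * size for tier, size in PER_CHIP_BYTES.items()}


def weight_bytes(num_params, bytes_per_param=2):
    """Storage for the weights alone; 2 bytes per parameter is an assumption."""
    return num_params * bytes_per_param


node = node_capacity()
needed = weight_bytes(5 * 10**12)  # the 5-trillion-parameter claim

print(f"weights: {needed / 1e12:.1f} TB")
for tier, size in node.items():
    print(f"{tier}: {size / 1e12:.3f} TB, holds all weights: {size >= needed}")
```

Under these assumptions, the weights of a 5-trillion-parameter model come to 10 TB, which exceeds the node's 512 GB of HBM but fits within its 12 TB of external memory.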

Memory is increasingly becoming a key differentiator for AI chips, as the ballooning size of generative AI models means that moving data around is often more of a drag on performance than raw compute power is. This is pushing companies to boost both the amount and speed of memory on their chips. SambaNova isn’t the first to turn to HBM to combat this so-called memory wall, and its new chip features less HBM than its competitors’ chips: Nvidia’s industry-leading H100 GPU features 80 GB, while AMD’s forthcoming MI300X GPU will feature 192 GB. SambaNova wouldn’t disclose bandwidth figures for its memory, so it’s hard to judge how it stacks up against other chips.

But while it is more reliant on slower external memory, what singles SambaNova’s technology out, says Liang, is a software compiler that can intelligently divide the load between the three memory layers. A proprietary interconnect between the company’s chips also allows the compiler to treat an eight-processor setup as if it were a single system. “Performance on training is going to be fantastic,” says Liang. “The inferencing will be a showstopper.”
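SambaNova has not described how its compiler splits data among the tiers, so the following is only a toy illustration of the general idea: a greedy placement that gives the most frequently accessed data per byte first pick of the fastest tier. The `Tensor` class, the `place` function, and the example sizes and access counts are all hypothetical; only the per-chip tier capacities come from the article.

```python
from dataclasses import dataclass

# Per-chip tier capacities quoted in the article, fastest first (decimal units assumed).
TIERS = [("sram", 520 * 10**6), ("hbm", 64 * 10**9), ("dram", 1500 * 10**9)]


@dataclass
class Tensor:
    name: str
    size_bytes: int
    accesses: int  # hypothetical count of how often the data is touched


def place(tensors, tiers=TIERS):
    """Greedy sketch: hottest data per byte goes to the fastest tier with room."""
    free = {name: capacity for name, capacity in tiers}
    placement = {}
    ranked = sorted(tensors, key=lambda t: t.accesses / t.size_bytes, reverse=True)
    for tensor in ranked:
        for name, _ in tiers:
            if tensor.size_bytes <= free[name]:
                free[name] -= tensor.size_bytes
                placement[tensor.name] = name
                break
        else:
            raise ValueError(f"{tensor.name} does not fit in any tier")
    return placement


# Made-up workload, chosen only to exercise all three tiers.
workload = [
    Tensor("expert_weights", 900 * 10**9, accesses=1),
    Tensor("attention_cache", 40 * 10**9, accesses=100),
    Tensor("activations", 200 * 10**6, accesses=1000),
]
placement = place(workload)
print(placement)
```

A production compiler would also have to weigh bandwidth, data lifetimes, and scheduling, none of which this sketch models.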

SambaNova was also cagey about how it deals with another hot topic for AI chips—sparsity. Many of the weights in LLMs are set to zero, and so performing operations on them is a waste of computation. Finding ways to exploit this sparsity can provide significant speedups. In their promotional materials, SambaNova claims the SN40L “offers both dense and sparse compute.” This is partly enabled at the software layer through scheduling and how data is brought onto the chip, says Liang, but there is also a hardware component that he declined to discuss. “Sparsity is a battleground area,” he says, “so we’re not yet ready to disclose exactly how we do it.”
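To make the potential savings concrete, here is a minimal pure-Python sketch that counts the multiplications in a matrix-vector product with and without skipping zero weights. It is a generic illustration, not SambaNova's undisclosed method; the function names and the small example matrix are assumptions made for this example.

```python
def dense_matvec(weights, x):
    """Multiply every weight by its input, zeros included."""
    result, multiplies = [], 0
    for row in weights:
        total = 0.0
        for w, xi in zip(row, x):
            total += w * xi
            multiplies += 1
        result.append(total)
    return result, multiplies


def compress_rows(weights):
    """Keep only (column, value) pairs for the nonzero weights in each row."""
    return [[(j, w) for j, w in enumerate(row) if w != 0.0] for row in weights]


def sparse_matvec(sparse_rows, x):
    """Same product, but touching only the stored nonzero weights."""
    result, multiplies = [], 0
    for row in sparse_rows:
        total = 0.0
        for j, w in row:
            total += w * x[j]
            multiplies += 1
        result.append(total)
    return result, multiplies


# Made-up 3x4 weight matrix in which 9 of the 12 entries are zero.
weights = [
    [0.0, 2.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 3.0],
    [0.0, 0.0, 0.0, 0.0],
]
x = [1.0, 2.0, 3.0, 4.0]

dense_out, dense_ops = dense_matvec(weights, x)
sparse_out, sparse_ops = sparse_matvec(compress_rows(weights), x)
print(dense_out == sparse_out, dense_ops, sparse_ops)
```

Both versions return the same result, but the sparse one performs only as many multiplications as there are nonzero weights. In practice the bookkeeping needed to locate those weights has a cost of its own, which is part of why hardware support matters.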

Another common trick to help AI chips run large models faster and cheaper is to reduce the precision with which parameters are represented. The SN40L uses the bfloat16 numerical format invented by Google engineers, and it also has support for 8-bit precision, but Liang says that low-precision computing is not a focus for them because their architecture already allows them to run models on a much smaller footprint.
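For readers unfamiliar with the format, bfloat16 keeps the sign bit, the 8 exponent bits, and the top 7 mantissa bits of a 32-bit float, halving storage per value while preserving float32's range. The sketch below emulates that in plain Python by discarding the low 16 bits; the helper names are illustrative, and truncation is used for simplicity, whereas hardware and libraries commonly round to nearest instead.

```python
import math
import struct


def float32_bits(x):
    """Bit pattern of x after rounding to IEEE 754 single precision."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


def to_bfloat16(x):
    """Value of x with only the top 16 bits of its float32 pattern kept.

    Truncation (not rounding) is a simplifying assumption of this sketch.
    """
    truncated = (float32_bits(x) >> 16) << 16
    return struct.unpack(">f", struct.pack(">I", truncated))[0]


approx = to_bfloat16(math.pi)
rel_error = abs(approx - math.pi) / math.pi
print(approx, rel_error)
```

With only 7 stored mantissa bits, the relative error of a truncated value stays below 2^-7, or a little under 1 percent.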

Liang says the company’s technology stack is expressly focused on running the largest AI models—their target audience is the 2,000 largest companies in the world. The sales pitch is that these companies are sitting on huge stores of data but they don’t know what most of it says. SambaNova says it can provide all the hardware and software needed to build AI models that unlock this data without companies having to fight for chips or AI talent. “You’re up and running in days, not months or quarters,” says Liang. “Every company can now have their own GPT model.”

One area where the SN40L is likely to have a significant advantage over competing hardware, says Gartner analyst Chirag Dekate, is multimodal AI. The future of generative AI is large models that can handle a variety of different types of data, such as images, videos, and text, he says, but this results in highly variable workloads. The fairly rigid architectures found in GPUs aren’t well suited to this kind of work, says Dekate, but this is where SambaNova’s focus on reconfigurability could shine. “You can adapt the hardware to match the workload requirements,” he says.

However, custom AI chips like those made by SambaNova do make a trade-off between performance and flexibility, says Dekate. Although they might not be quite as powerful, GPUs can run almost any neural network out of the box and are supported by a powerful software ecosystem. In contrast, models have to be specially adapted to work on chips like the SN40L. Dekate notes that SambaNova has been building up a catalog of prebaked models that customers can take advantage of, but Nvidia’s dominance in all aspects of AI development is a major challenge.

“The architecture is actually superior to conventional GPU architectures,” Dekate says. “But unless you get these technologies in the hands of customers and enable mass consumerization, I think you’re likely going to struggle.”

That will be even more challenging now that Nvidia is also moving into the full-stack, AI-as-a-service market with its DGX Cloud offering, says Dylan Patel, chief analyst at the consultancy SemiAnalysis. “The chip is a significant step forward,” he says. “I don’t believe the chip will change the landscape.”

Edd Gent is a freelance science and technology writer based in Bengaluru, India. His writing focuses on emerging technologies across computing, engineering, energy and bioscience. He's on Twitter at @EddytheGent and email at edd dot gent at outlook dot com. His PGP fingerprint is ABB8 6BB3 3E69 C4A7 EC91 611B 5C12 193D 5DFC C01B. DM for Signal info.