15 November 2010—Early risers may think it’s tough to fix breakfast first thing in the morning, but robots have it even harder. Even grabbing a cereal box is a challenge for your run-of-the-mill artificial intelligence (AI). Frosted Flakes come in a rectangular prism with colorful decorations, but so does your childhood copy of Chicken Little. Do you need to teach the AI to read before it can grab breakfast?

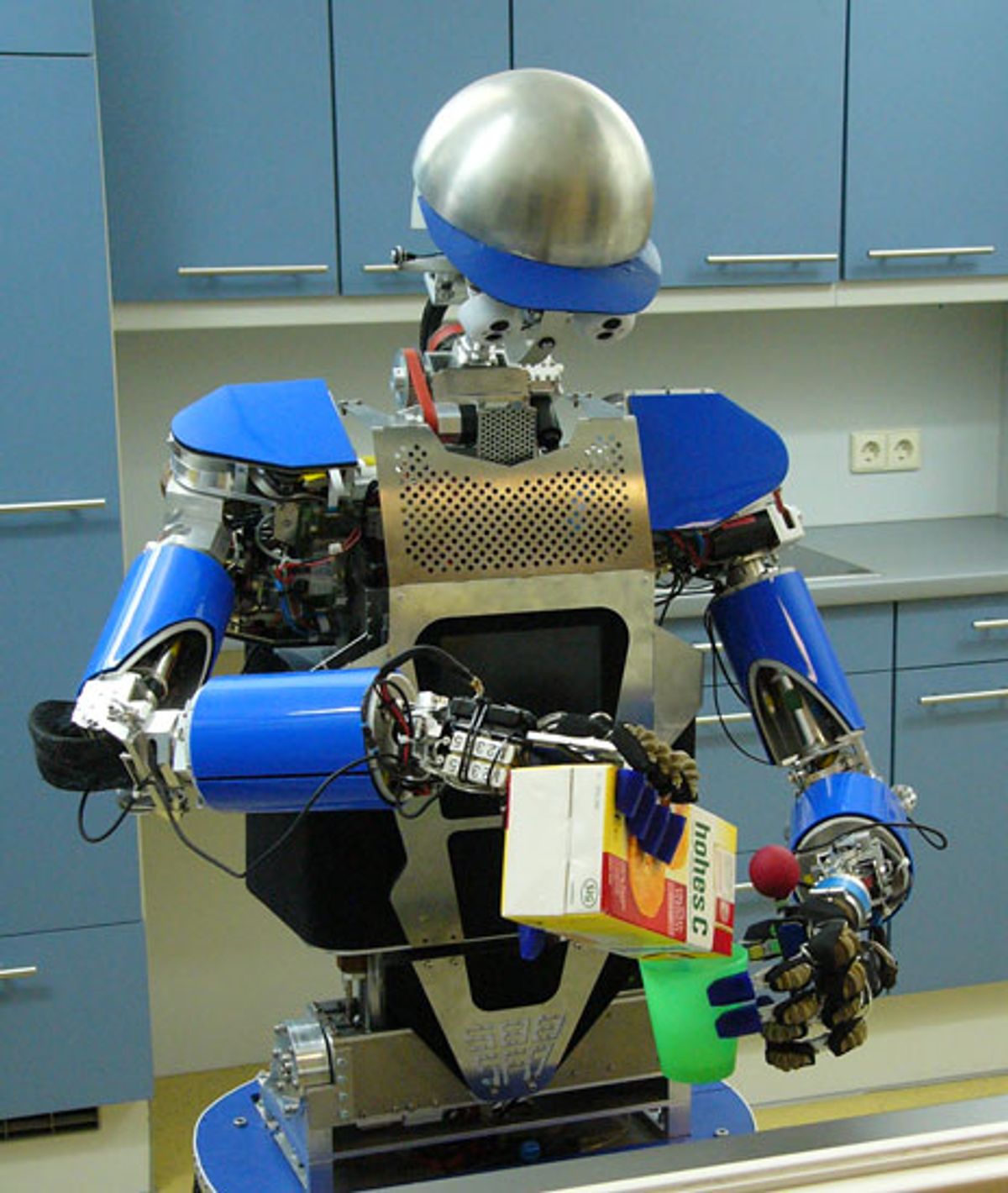

Maybe not. A team of European researchers has built a robot called ARMAR-III, which tries to learn not just from previously stored instructions or massive processing power but also from reaching out and touching things. Consider the cereal box: By picking it up, the robot could learn that the cereal box weighs less than a similarly sized book, and if it flips the box over, cereal comes out. Together with guidance and maybe a little scolding from a human coach, the robot—the result of the PACO-PLUS research project—can build general representations of objects and the actions that can be applied to them. "[The robot] builds object representations through manipulation," explains Tamim Asfour of the Karlsruhe Institute of Technology, in Germany, who worked on the hardware side of the system.

The robot’s thinking is not separated from its body, because it must use its body to learn how to think. The idea, sometimes called embodied cognition, has a long history in the cognitive sciences, says psychologist Art Glenberg at Arizona State University in Tempe, who is not involved in the project. "All sorts of our thinking are in terms of how we can act in the world, which is determined jointly by characteristics of the world and our bodies."

Embodied cognition also requires sophisticated two-way communication between a robot’s lower-level sensors—such as its hands and camera eyes—and its higher-level planning processor. "The hope is that an embodied-cognition robot would be able to solve other sorts of problems unanticipated by the programmer," Glenberg says. If ARMAR-III doesn’t know how to do something, it will build up a library of new ways to look at things or move things until its higher-level processor can connect the new dots.

The PACO-PLUS system’s masters tested it in a laboratory kitchen. After some rudimentary fumbling, the robot learned to complete tasks such as searching for, identifying, and retrieving a stray box of cereal in an unexpected location in the kitchen or ordering cups by color on a table. "It sounds trivial, but it isn’t," says team member Bernhard Hommel, a psychologist at Leiden University in the Netherlands. "He has to know what you’re talking about, where to look for it in the kitchen, even if it’s been moved, and then grasp it and return to you." When trainers placed a cup in the robot’s way after asking it to set the table, it worked out how to move the cup out of the way before continuing the task. The robot wouldn’t have known to do that if it hadn’t already figured out what a cup is, that it’s movable, and that it would be knocked over if left in place. These are all things the robot learned by moving its body around and exploring the scenery with its eyes.

ARMAR-III’s capabilities can be broken down into three categories: creating representations of objects and actions, understanding verbal instructions, and figuring out how to execute those instructions. However, having the robot work out all three by trial and error would simply take too long. "We weren’t successful in showing the complete cycle," Asfour says of the four-year-long project, which ended last summer. Instead, the researchers would provide one of the three components and let the robot figure out the rest. The team ran experiments in which they gave the robot hints, sometimes by programming and sometimes by having human trainers demonstrate something. For example, they would tell it, "This is a green cup," instead of expecting the robot to take the time to learn the millions of shades of green. Once it had been given that perceptual knowledge, the robot could work out what the user wanted it to do with the cup and plan the actions involved.

The key was the system’s ability to form representations of objects that worked at the sensory level and to combine them with planning and verbal communication. "That’s our major achievement from a scientific point of view," Asfour says.

ARMAR-III may represent a new breed of robots that don’t try to anticipate every possible environmental input, instead seeking out stimuli using their bodies to create a joint mental and physical map of the possibilities. Rolf Pfeifer of the University of Zurich, who was not involved in the project, says, "One of the basic insights of embodiment is that we generate environmental stimulation. We don’t just sit there like a computer waiting for stimulation."

This type of thinking echoes other insights from psychology, such as the idea that humans perceive their environment in terms that depend on their physical ability to interact with it. In short, a hill looks steeper when you’re tired. One study in 2008 found that people perceive hills as less steep when accompanied by a friend than when alone. ARMAR-III’s progeny may even offer insights into how embodied cognition works in humans, Glenberg adds. "Robots offer a marvelous way of trying to test this sort of thinking."

About the Author

Lucas Laursen is a freelance journalist based in Madrid. In the September 2010 issue he wrote about a computer system that warns egg farmers when hens are going to start murderous rampages.