By mimicking the natural abilities of our skin, a team of researchers at Johns Hopkins University has enabled a prosthesis to perceive and transmit the feeling of pain.

But why would anyone want to feel pain? Study author Nitish Thakor, a professor of biomedical engineering at Hopkins and an IEEE Fellow, has been getting that question a lot.

In the most practical sense, pain sensors in the skin help protect our bodies from damaging objects, such as a hot stove or sharp knife. By the same token, an amputee could rely on the perception of pain to protect his or her prosthesis from damage, says Thakor.

But he also gives a more holistic, almost poetic answer: “We can now span a very human-like sense of perception, from light touch to pressure to pain, and I think that makes prosthetics more human.”

In a study published today in Science Robotics, Thakor, along with graduate student Luke Osborn and their colleagues, describes the design and initial test of their “e-dermis” system. It’s the latest in an ongoing effort to add a sense of touch to prosthetics, à la Luke Skywalker feeling a needle prick the fingers and palm of his bionic hand.

The Hopkins team was inspired by the way biological touch receptors work in human skin, says Thakor. Real skin consists of layers of receptors. Similarly, the e-dermis has numerous layers—made of piezoresistive and conductive fabrics, rather than different types of cells—that sense and measure pressure. Also like real skin, those sensing layers react in different ways to pressure: some react quickly to stimuli, while others respond more slowly.
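To make the idea of fast and slow sensing layers concrete, here is a minimal sketch that treats each layer as a simple first-order filter with its own time constant. This is an illustrative assumption, not the model used in the study; the function name `layer_response` and the values of `dt` and `tau` are hypothetical.

```python
def layer_response(pressure, dt, tau):
    """Smooth a sequence of pressure samples with a first-order low-pass filter.

    A small tau gives a layer that reacts quickly; a large tau gives one
    that responds more slowly. All values are in arbitrary units.
    """
    alpha = dt / (tau + dt)  # fraction of the gap to the input closed per step
    y = 0.0
    out = []
    for p in pressure:
        y += alpha * (p - y)
        out.append(y)
    return out


# A sudden, sustained press (step input) seen by two hypothetical layers.
step = [1.0] * 50
fast = layer_response(step, dt=0.001, tau=0.002)
slow = layer_response(step, dt=0.001, tau=0.05)
```

After the same number of samples, `fast` has nearly reached the applied pressure while `slow` is still climbing, which is the kind of difference in timing the paragraph describes.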

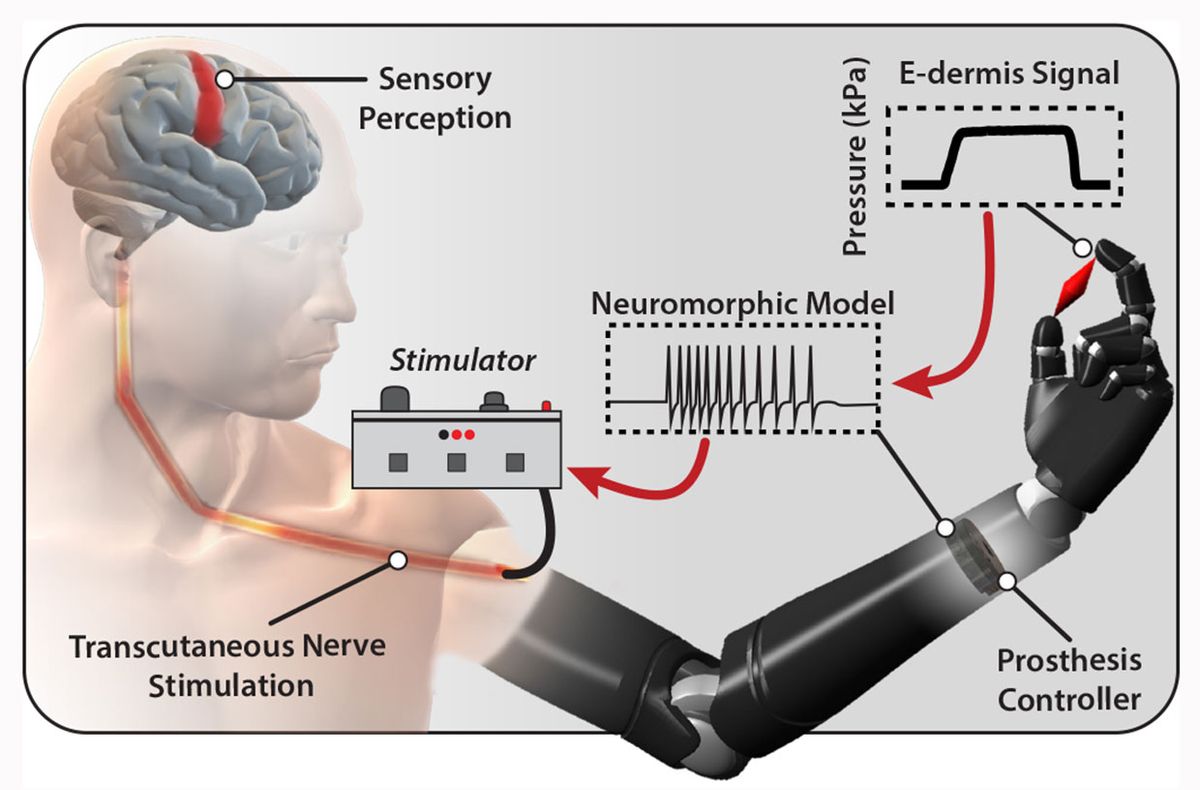

The pressure information from the e-dermis is converted into neuron-like pulses that are similar to the spikes of electricity, or action potentials, that living neurons use to communicate. That neuron-like, or neuromorphic, signal is then delivered via small electrical stimulations to the peripheral nerves in the skin of an amputee to elicit feelings of pressure and, yes, pain.
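One common way to turn a continuous signal into neuron-like pulses is a leaky integrate-and-fire model, sketched below. The article does not say which neuron model the team used, so this is a generic stand-in rather than their method; `encode_spikes` and every parameter value are assumptions chosen for illustration.

```python
def encode_spikes(pressure, dt=0.001, tau=0.02, gain=100.0, threshold=1.0):
    """Convert pressure samples into spike times with a leaky integrate-and-fire model.

    Returns the sample indices at which the model neuron fires.
    Pressure is in arbitrary units; dt and tau are in seconds.
    """
    v = 0.0  # membrane potential of the model neuron
    spikes = []
    for i, p in enumerate(pressure):
        # The potential leaks toward zero and is driven upward by pressure.
        v += dt * (-v / tau + gain * p)
        if v >= threshold:
            spikes.append(i)
            v = 0.0  # reset after each spike
    return spikes


light = encode_spikes([0.4] * 1000)  # too weak to ever reach threshold
firm = encode_spikes([1.0] * 1000)
hard = encode_spikes([3.0] * 1000)
```

In this sketch, stronger pressure produces more frequent spikes, and very light pressure produces none. The resulting spike train is the kind of signal that would then set the timing of the electrical stimulation pulses.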

Thanks to a dedicated volunteer who was not named in the study, the team was able to implement and test their system. Osborn spent two months mapping the peripheral nerves in the amputated left arm of a 29-year-old man who had an above-the-elbow amputation following an illness. Using small electrical stimulations, the graduate student mapped out how different peripheral nerves in the volunteer’s residual limb related to his feeling of a phantom limb.

During this process, Osborn discovered that the right amount of current delivered at a specific frequency elicited not only a sense of touch, but a sense of pain. (Not too much pain though—they stimulated the nerves until the volunteer felt a 3 out of 10 on a pain scale, Thakor carefully notes.)

The team then put the whole system in place—e-dermis on the fingers of the prosthetic, neuron-like signaling model in the prosthesis controller, and electrical stimulator on the residual limb. With the system, the volunteer could clearly distinguish between rounded and sharp objects and felt the sensation coming directly from his phantom limb. In an additional experiment, the prosthesis was programmed with a pain reflex so that it automatically released a sharp object when pain was detected.
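The pain reflex can be pictured as a simple rule in the prosthesis controller. The sketch below assumes that a sharp object shows up as high pressure concentrated on one sensing element, which is an illustrative guess rather than the study's detection method; the function names, thresholds, and readings are all hypothetical.

```python
def detect_pain(taxels, peak_threshold=0.8, concentration=0.6):
    """Return True if the pressure pattern looks like a sharp, painful contact.

    taxels: pressure readings from the fingertip sensing elements (arbitrary units).
    A contact counts as painful when the peak reading is high and most of the
    total pressure falls on that one element.
    """
    total = sum(taxels)
    if total <= 0:
        return False
    peak = max(taxels)
    return peak >= peak_threshold and peak / total >= concentration


def reflex_command(taxels):
    """Return 'release' to open the hand on painful contact, otherwise 'hold'."""
    return "release" if detect_pain(taxels) else "hold"


rounded_object = [0.5, 0.5, 0.5]   # pressure spread evenly across the fingertip
sharp_object = [0.05, 1.2, 0.05]   # pressure concentrated on one element
```

Here `reflex_command(rounded_object)` returns `"hold"` and `reflex_command(sharp_object)` returns `"release"`, mirroring the behavior described in the experiment.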

In this single case study, the touch information was delivered to the nervous system by stimulating the skin of the amputee, but it could also be delivered via other technologies, such as implanted electrodes, targeted muscle reinnervation, and maybe, someday, brain-machine interfaces.

“Someday all this could be implanted to directly go to nerve rather than via skin, but this approach is available here and now,” says Thakor, who is also co-founder of a prosthetics company, Infinite Biomedical Technologies. Moving forward, his lab plans to investigate other materials for the e-dermis and explore how to deliver a wider range of sensations.

The technology also has possible applications in robotics and augmented reality, though Thakor declined to disclose any current ideas or projects in the works. But it’s clear that better tactile capabilities could help robots grasp objects more reliably and perform a wider range of functions. And if the robotics industry adopted such a technology, mass manufacturing could sharply lower its cost and drive wider adoption.

Megan is an award-winning freelance journalist based in Boston, Massachusetts, specializing in the life sciences and biotechnology. She was previously a health columnist for the Boston Globe and has contributed to Newsweek, Scientific American, and Nature, among others. She is the co-author of a college biology textbook, “Biology Now,” published by W.W. Norton. Megan received an M.S. from the Graduate Program in Science Writing at the Massachusetts Institute of Technology and a B.A. from Boston College, and has worked as an educator at the Museum of Science, Boston.