A Cloud You Can Trust

How to ensure that cloud computing’s problems—data breaches, leaks, service outages—don’t obscure its virtues

This past April, Amazon’s Elastic Compute Cloud service crashed during a system upgrade, knocking customers’ websites off-line for anywhere from several hours to several days. That same month, hackers broke into the Sony PlayStation Network, exposing the personal information of 77 million people around the world. And in June a software glitch at cloud-storage provider Dropbox temporarily allowed visitors to log in to any of its 25 million customers’ accounts using any password—or none at all. As a company blogger drily noted: “This should never have happened.”

And yet it did, and it does, with astonishing regularity. The Privacy Rights Clearinghouse has logged 175 data breaches this year in the United States alone, involving more than 13 million records.

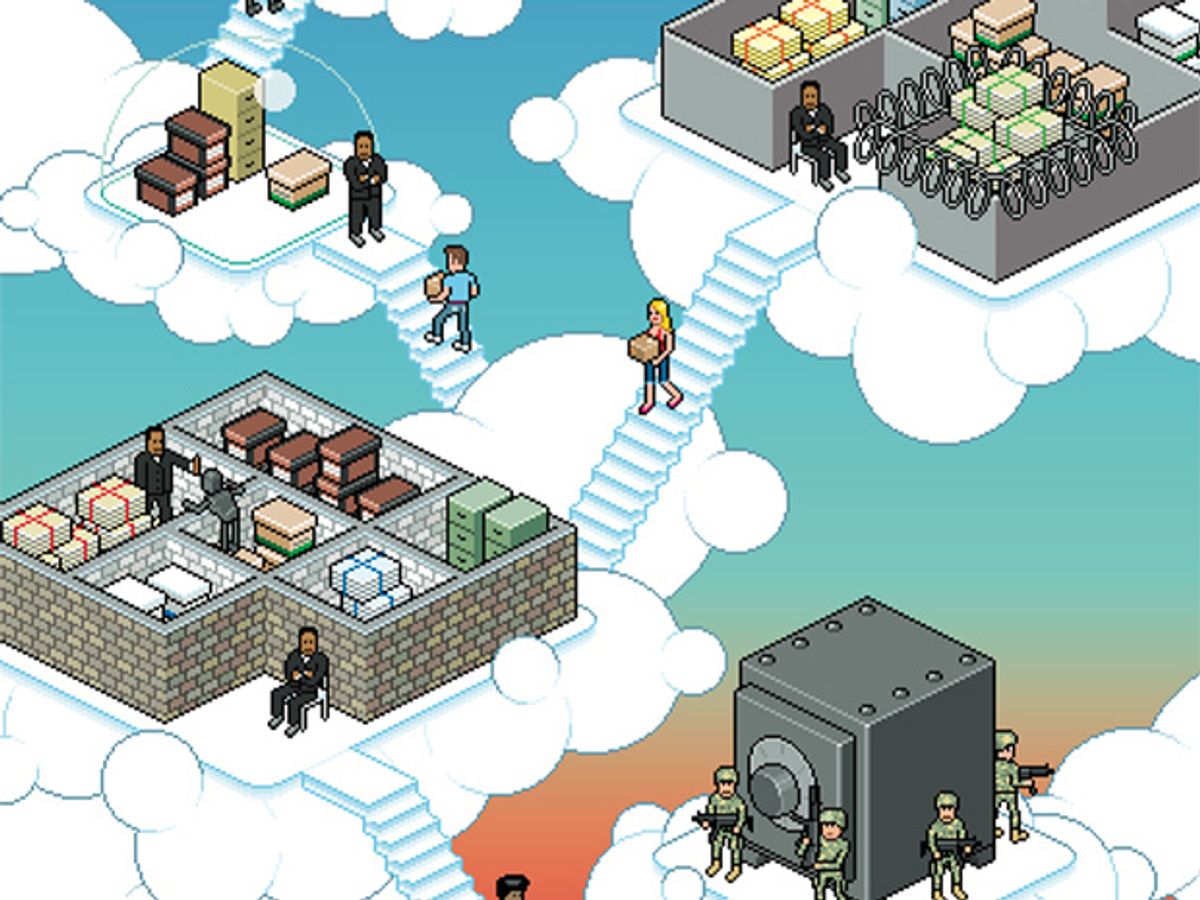

Such statistics should give you pause, especially if you plan to entrust information that used to exist only on your own computer to an online provider’s machines. And yet it’s very hard these days to avoid that. Whenever you update your status on Facebook, check your e-mail via Gmail, post your vacation photos on Flickr, or shop, bank, or play games online, you are relying on somebody else’s computers to safeguard your stuff. Many businesses, too, are buying into the promise of using computers they don’t own or operate, because it gives them affordable and convenient access to computing resources, storage, and networking, as well as sophisticated software and services, that they might not otherwise be able to afford.

Regardless of how exactly they use such Internet-based computing services in “the cloud,” these businesses stand to benefit. They gain in particular from the cloud’s ability to pool equipment, allowing them to pay only for the resources they use and to scale their operations up or down almost instantaneously. Need more capacity? Just lease it from the burgeoning number of cloud providers, including Amazon, Google, Microsoft, or the company we work for, IBM. Cloud services also provide their customers with detailed metrics that track just how they use their cloud resources. And customers no longer have to wait around for the tech-support guy; their interactions with the cloud provider are almost entirely automated. So rather than being burdened with the expense and effort of procuring and maintaining an in-house computer network, even the smallest business can operate as if it had a world-class IT system.

More and more companies are doing just that. That’s why, according to analysts at the technology-research firm Gartner, by next year 20 percent of all businesses will no longer own their own servers. That percentage is likely to grow in the coming years. In short, cloud computing is here to stay.

But this transformation of the IT landscape brings with it some new problems stemming from the very nature of outsourcing and from sharing resources with others. These problems include service disruptions and the inability of cloud providers to accommodate customized networks. But the top concern that businesses have with cloud computing, repeated surveys have found, is security—with good reason. By moving its data and computation to the cloud, a company runs the risk that the cloud-service provider, one of the provider’s other customers, or a hacker might inappropriately gain access to sensitive or proprietary information. Customers just have to trust the cloud-service provider to safeguard their data. But unexpected things can and do happen, even when you’re dealing with well-established and presumably well-run companies. So it’s no wonder that many IT managers remain jittery. If businesses are going to reap the full benefits of cloud computing, cloud providers will need to do much more to address security concerns. Here’s an overview of how we think they could start.

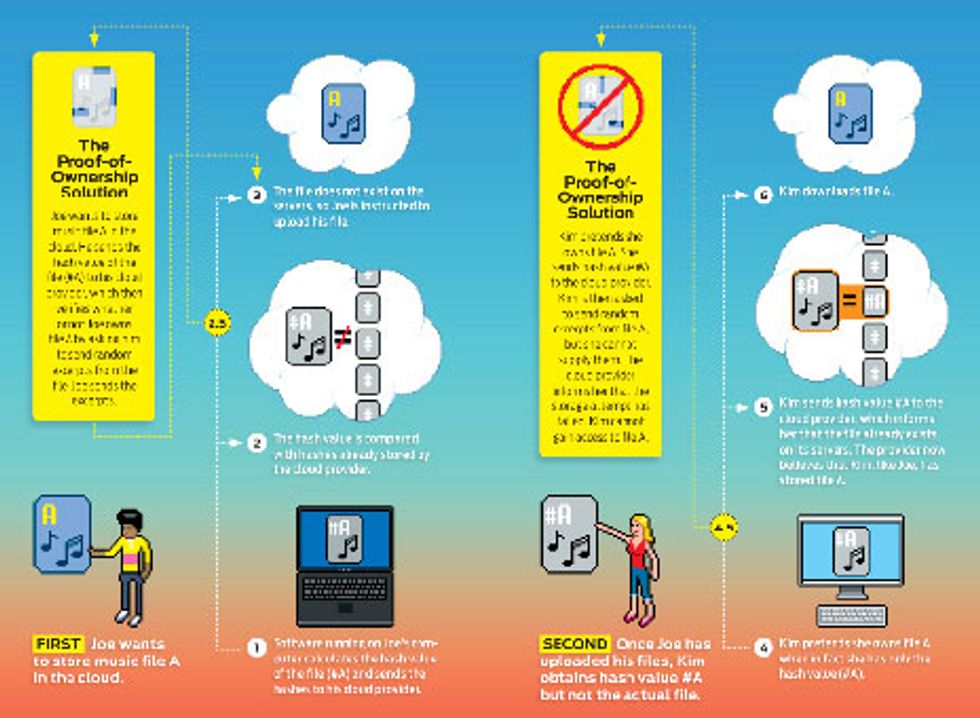

Many cloud storage providers use a technique known as deduplication to minimize the number of unique files they store. Whenever a user tries to upload a file that has previously been stored, whether by that user or someone else, the cloud provider doesn’t upload the redundant file; instead, it creates a link between the user’s account and the existing file. The example shown here illustrates one problem with deduplication: Cloud customer Kim can surreptitiously download Joe’s stored files, thereby exploiting the cloud service for unapproved content distribution. The proof-of-ownership solution, shown in steps 2.5 and 4.5, thwarts Kim’s attempt to access Joe’s files. The solution was developed by Shai Halevi and his colleagues at IBM.

Although “cloud computing” is the current buzz phrase, the concept has been around for half a century. In 1961, the late John McCarthy, an artificial-intelligence pioneer, proposed a different term for what is essentially the same thing: utility computing. “Computing may someday be organized as a public utility, just as the telephone system is a public utility,” McCarthy said. “The computer utility could become the basis of a new and important industry.”

But at the time, and for several decades afterward, computer hardware and software weren’t up to the task. Only in the past few years, with the advent of high-bandwidth networking, Web-based applications, and powerful and cheap server technology, has McCarthy’s vision finally been realized.

In many ways, cloud computing is just another form of outsourcing. But traditional outsourcing arrangements—contract manufacturing, say—come with legal, organizational, and technical controls. Cloud computing hasn’t yet developed such protections. Nobody even agrees on what the best practices should be. Typical cloud-service agreements guarantee only that the provider will make its “best effort” to deliver services. Rarely do these providers pay any penalties should their services suffer an outage, breach, or failure.

What’s more, many cloud providers do not consider security a top priority, according to a report released in April by CA Technologies and the Ponemon Institute. The study, which surveyed 103 providers in the United States and 24 in Europe, found that the majority “do not consider computing security as one of their most important responsibilities and do not believe their products or services substantially protect and secure the confidential or sensitive information of their customers.” They also stated that it is “their customer’s responsibility to secure the cloud” and that “their systems and applications are not always evaluated for security threats prior to deployment to customers.”

If you want a higher level of security, you may be hard-pressed to find a provider that will customize its services to satisfy your concerns. Right now, cloud providers favor one-size-fits-all services: By offering a single and fully standardized cloud service, they can maximize economies of scale and thus lower costs. The downside, however, is that the cloud services they provide meet only the most basic requirements for security. That’s fine for many of their customers, to be sure, but certainly not for all. Today’s cloud is like the Model T Ford circa 1914: You can have any color cloud you want, as long as it’s black.

Ironically, the greatest risk in cloud computing stems from its greatest advantage: resource sharing. Let’s say you’ve developed an online game, and rather than buy your own servers, you lease computing time from a service like Amazon Elastic Compute Cloud, also known as EC2. That way, if your game becomes an overnight hit, you won’t have to worry about thousands of players crashing your servers.

To run your code, you create what are called virtual machine images on the Amazon EC2 servers. Each VMI is the software equivalent of a stand-alone computer running its own operating system. In addition to specifying the number and type of VMIs you want, you can select where you’d like each one to reside. Amazon lets you choose among six geographic regions, each having one or more data centers. You can even spread out your virtual machines within a region by putting them in different availability zones. Each VMI will then be assigned to a physical server in a data center and will remain there as long as it is active.

Amazon promises that your virtual machines will be kept “virtually isolated” from those of other customers. But virtual isolation is not good enough. Research by Thomas Ristenpart and his colleagues at the University of California, San Diego, and MIT showed that a determined outsider stood a very good chance of putting his virtual machine onto the same server as another customer’s VMI and then launching attacks from it.

Being located on the same server would give the attacker access to information about the target, such as cache usage and data traffic rates. And what could be done with that kind of information? Let’s say the EC2 server is running encryption for one customer’s VMI. To allow its CPU to run more efficiently, the server does a lot of caching; in this case, information about the encryption key might be available through the cache. And because the server cache is shared by the customers, the attacker might be able to access information about the key and thereby gain entry to the other customer’s encrypted data.

More recently, Sven Bugiel and his colleagues at the Darmstadt Research Center for Advanced Security, in Germany, looked at a practice that allows Amazon cloud customers to publish their VMIs for others to use. Of the 1100 Amazon Machine Images (AMIs) the researchers looked at, about 30 percent contained private data that the creators had unintentionally published. These data included cryptographic keys, passwords, and security certificates, which attackers could extract and then use to gain illegal access to services that were built around the AMIs. And while both of these studies looked at Amazon’s cloud services, such vulnerabilities aren’t unique to them; they probably also exist on popular cloud services such as Microsoft’s Azure and the Rackspace Cloud.

Data-storage clouds are also vulnerable. One class of attacks exploits a space-saving technique known as data deduplication. Many files that people upload to the cloud end up being duplicates—identical copies of software user manuals, say, or MP3s of Lady Gaga’s “Telephone.” Deduplication allows a data-storage cloud to keep only one copy of each file. Any time a customer attempts to upload a file, the contents of the file are first compared with other stored files. The new file is uploaded only if it doesn’t already exist in the cloud; otherwise the customer’s account is linked to the stored file.
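The bookkeeping behind deduplication can be sketched in a few lines of Python. This is a toy illustration, not any provider's implementation: the `DedupStore` class, its method names, and the choice of SHA-256 to decide whether two files are identical are all assumptions made for the example.

```python
import hashlib


class DedupStore:
    """Toy content-addressed store: one copy per distinct file body (hypothetical)."""

    def __init__(self):
        self.blobs = {}   # digest -> file contents, stored once
        self.links = {}   # account -> set of digests that account may read

    def upload(self, account, data):
        """Link `account` to `data`; return True only if new bytes were stored."""
        digest = hashlib.sha256(data).hexdigest()
        is_new = digest not in self.blobs
        if is_new:
            self.blobs[digest] = data  # first copy: actually store the bytes
        # Either way, the account ends up linked to the single stored copy.
        self.links.setdefault(account, set()).add(digest)
        return is_new


store = DedupStore()
manual = b"user manual, revision 3"
first = store.upload("joe", manual)    # new file: bytes are stored
second = store.upload("kim", manual)   # duplicate: only a link is created
```

After both uploads the store holds a single copy of the manual, and both accounts point at it.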

In a paper they presented at the Usenix Security Symposium in August, Martin Mulazzani and his colleagues at SBA Research, in Vienna, described several ways in which deduplication could be used to access files uploaded to Dropbox. One way to do this involves hash values, which are short digests that an algorithm computes from a file’s contents and that are, for practical purposes, unique to that file. When a customer attempts to upload a file, the Dropbox software running on his computer first calculates the hash values of the chunks of data in the file. The hash values are then compared with hash values already stored by Dropbox. If the file does not yet exist, it gets uploaded; if it does, the existing copy is simply linked to the customer’s account.

But if customer Joe inadvertently shares the hash values for his stored files with customer Kim, or if Kim steals them from Joe, she will be able to freely download those files without Joe’s permission or even his knowledge. Mulazzani and his colleagues worked with Dropbox to plug this and other security holes they had identified before going public with them.
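The weakness, and one way to close it, can be illustrated with a small sketch. It is deliberately simplified: the `Server` class, the `prove` helper, and the nonce-based challenge are assumptions for illustration, not Dropbox's fix and not the proof-of-ownership protocol developed at IBM. The idea shown is only that a fresh challenge should be answerable by someone who holds the file itself, and not by someone who holds just its hash.

```python
import hashlib
import hmac
import secrets


def file_hash(data):
    return hashlib.sha256(data).hexdigest()


class Server:
    """Hypothetical storage server holding deduplicated files."""

    def __init__(self):
        self.blobs = {}  # digest -> file contents

    def store(self, data):
        self.blobs[file_hash(data)] = data

    def naive_link(self, digest):
        """Vulnerable check: knowing the digest is treated as owning the file."""
        return digest in self.blobs

    def challenge(self):
        """Issue a fresh random nonce for each ownership check."""
        return secrets.token_bytes(16)

    def verify(self, digest, nonce, response):
        """Accept only a response that could be computed from the full file."""
        data = self.blobs.get(digest)
        if data is None:
            return False
        expected = hmac.new(nonce, data, hashlib.sha256).hexdigest()
        return hmac.compare_digest(expected, response)


def prove(nonce, data):
    """Client side: answer the challenge using the bytes the client holds."""
    return hmac.new(nonce, data, hashlib.sha256).hexdigest()


server = Server()
joes_file = b"Joe's private spreadsheet"
server.store(joes_file)
leaked = file_hash(joes_file)  # the only thing Kim has obtained

nonce = server.challenge()
joe_ok = server.verify(leaked, nonce, prove(nonce, joes_file))
kim_ok = server.verify(leaked, nonce, prove(nonce, leaked.encode()))
```

With the naive check, the leaked hash alone is enough to get the file linked. With the challenge, Joe passes and Kim does not. In this sketch the server rereads the whole file for every check; a practical scheme would need to avoid that cost.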

Clearly, isolating customers from one another is, or should be, a major concern for cloud providers. No data from one customer should be exposed to any other, nor should one customer’s behavior affect another. With traditional outsourcing, isolation is achieved by maintaining dedicated physical infrastructure—separate production lines at a contract manufacturer, for instance—for each customer and by wiping clean all shared computers (such as workstations storing customer designs) before reuse.

To plug known security holes, cloud providers sometimes offer add-on services. For instance, Amazon Virtual Private Cloud allows the customer to specify a set of virtual machines that may communicate only through an encrypted virtual private network. EC2 also allows its users to define security groups, which operate like firewalls to control the incoming connections to a virtual machine.
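The firewall-like behavior of a security group can be sketched as an allow list with a default of deny. The `Rule` type, the `allowed` function, and the sample addresses below are hypothetical and are not the EC2 interface; they only illustrate how incoming connections might be matched against a group's rules.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    protocol: str  # e.g. "tcp"
    port: int      # destination port on the virtual machine
    source: str    # permitted source range in CIDR notation


def allowed(rules, protocol, port, source_ip):
    """Permit a connection only if some rule matches; otherwise deny."""
    addr = ipaddress.ip_address(source_ip)
    return any(
        rule.protocol == protocol
        and rule.port == port
        and addr in ipaddress.ip_network(rule.source)
        for rule in rules
    )


# Example group: HTTPS from anywhere, SSH only from one office network.
web_group = [
    Rule("tcp", 443, "0.0.0.0/0"),
    Rule("tcp", 22, "203.0.113.0/24"),
]

https_from_anywhere = allowed(web_group, "tcp", 443, "198.51.100.7")
ssh_from_office = allowed(web_group, "tcp", 22, "203.0.113.9")
ssh_from_elsewhere = allowed(web_group, "tcp", 22, "198.51.100.7")
```

Because nothing is permitted unless a rule says so, a port that no rule mentions stays closed to every source.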

There are a few products and services on the market aimed at enhancing cloud security. IBM’s Websphere Cast Iron and Cisco IronPort, for instance, provide secure online messaging. Informatica Corp. offers businesses a way to protect sensitive information by masking it as realistic-looking but nonsensitive data. Eventually, though, cloud security systems will need to be fully automated, so that customers can detect, analyze, and respond to their own security issues, rather than rely on the cloud provider’s staff for support and troubleshooting.

Other cloud security offerings include e-mail scanning, which checks for malicious code embedded in messages, and identity-management services, which control users’ access to resources and automate related tasks, like resetting passwords. The trick, though, is for cloud providers to integrate the customer’s own security measures with their operations.

That turns out to be hard to do, according to research by Burton S. Kaliski Jr. and Wayne Pauley of EMC Corp., in Hopkinton, Mass. They argue that the very features that define cloud computing (automated transactions, resource pooling, and so on) make traditional security assessments difficult. Indeed, they noted in a paper last year, these features offer opportunities for security breaches. For example, cloud providers continuously monitor and measure activity within their networks, to better allocate shared resources and keep their costs down. But the collection of that metering data itself opens up a security hole, say Kaliski and Pauley. A devious customer could, for example, infer behavioral patterns of other customers by analyzing her own usage.

Despite these and other vulnerabilities, cloud customers can do quite a bit to boost their security. Businesses typically operate their in-house data networks according to the principle of least privilege, conferring to a given user only those privileges needed to do his or her job. A bank teller, for instance, doesn’t need full access to the bank’s mainframes. The same rule should apply when using a cloud service. Though that may seem obvious, many companies fail to take this simple step.
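As a minimal sketch of least privilege, assume each role is granted an explicit set of actions and everything else is refused. The role names and action strings here are invented for the example and do not correspond to any particular cloud provider's access-control system.

```python
# Hypothetical roles and the only actions each one is granted.
ROLE_PERMISSIONS = {
    "teller": {"accounts:read", "deposits:create"},
    "auditor": {"accounts:read", "logs:read"},
    "admin": {"accounts:read", "deposits:create", "logs:read", "servers:manage"},
}


def is_permitted(role, action):
    """Default deny: unknown roles and unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, frozenset())


teller_reads = is_permitted("teller", "accounts:read")
teller_manages = is_permitted("teller", "servers:manage")
stranger_reads = is_permitted("contractor", "accounts:read")
```

The teller can do a teller's work but cannot manage servers, and a role that was never defined gets nothing at all.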

Users can also do things to confirm the integrity of the cloud infrastructure they’re using. Amazon CloudWatch allows EC2 users to do real-time monitoring of their CPU utilization, data transfers, and disk usage. The CloudAudit working group, a volunteer effort whose members include big cloud operators like Google, Microsoft, and Rackspace, is also exploring methods for monitoring the cloud’s performance. In the future, trusted computing technology could make it possible for a customer to verify that the code running remotely in the cloud matches certain guarantees made by the cloud provider or attested to by a third-party auditor.

Of course, the strongest protection you can give to the information you send off premises is to encrypt it. But it isn’t possible to encrypt everything: Data used in remote computations, for instance, cannot be encrypted easily.

Is there such a thing as a totally secure cloud? No. But we, along with many other cloud-security researchers around the world, are constantly striving toward that goal.

One such effort we are involved in aims to develop and demonstrate a secure cloud infrastructure. With funding from the European Union and others, the three-year, €10.5 million (US $14.9 million) Trustworthy Clouds, or TClouds, project is a collaboration that includes our group at IBM Research–Zurich, the security company Sirrix, the Portuguese power companies Energias de Portugal and Efacec, and San Raffaele Hospital in Milan, as well as a number of universities and other companies.

TClouds is developing two secure cloud applications. The first will be a home health-care service that will remotely monitor, diagnose, and assist patients. Each patient’s medical file can be stored securely in the cloud and be accessible to the patient, doctors, and pharmacy staff. Because of the sensitive nature of this information—not to mention the regulations that apply to patient privacy—TClouds will encrypt the data. The goal is to show how in-home health care can be improved cost-efficiently without sacrificing privacy.

The second application will be a smart street-lighting system for several Portuguese cities. Currently, the streetlights are switched on and off by means of a box that sits in a power station. The TClouds system will allow workers to log into a Web portal and type in when the lights should turn on and off in a given neighborhood; the use of smart meters will help control energy consumption. TClouds will show how such a system can run securely on a cloud provider’s computers even in the face of hacker attacks and network outages.

TClouds will also build a “cloud of clouds” framework, to back up data and applications in case one cloud provider suffers a failure or intrusion. Recently TClouds researchers at the University of Lisbon and at IBM Research–Zurich demonstrated one such cloud of clouds architecture. It used a data-replication protocol to store data among four commercial storage clouds—Amazon S3, Rackspace Files, Windows Azure Blob Service, and Nirvanix CDN—in such a way that the data were kept confidential and also stored efficiently.
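The replication idea can be sketched with in-memory stand-ins for the four providers. This is a much-simplified illustration and not the protocol the TClouds researchers demonstrated: the `Cloud` class, `write_all`, and `read_quorum` are hypothetical, plain copies are stored everywhere, and the confidentiality and storage-efficiency techniques of the real system are left out entirely. It shows only how a reader might tolerate one provider that returns bad data.

```python
from collections import Counter


class Cloud:
    """In-memory stand-in for one storage provider (hypothetical)."""

    def __init__(self, name):
        self.name = name
        self.objects = {}
        self.online = True

    def put(self, key, value):
        if self.online:
            self.objects[key] = value

    def get(self, key):
        if not self.online:
            raise ConnectionError(self.name + " is unavailable")
        return self.objects[key]


def write_all(clouds, key, value):
    """Store a copy of the value with every provider."""
    for cloud in clouds:
        cloud.put(key, value)


def read_quorum(clouds, key, quorum):
    """Return the value that at least `quorum` providers agree on."""
    votes = Counter()
    for cloud in clouds:
        try:
            votes[cloud.get(key)] += 1
        except (ConnectionError, KeyError):
            continue  # outage or missing object: this provider casts no vote
    if votes:
        value, count = votes.most_common(1)[0]
        if count >= quorum:
            return value
    raise RuntimeError("no value reached the quorum")


clouds = [Cloud(name) for name in ("A", "B", "C", "D")]
write_all(clouds, "report", b"quarterly numbers")

clouds[1].objects["report"] = b"tampered"   # one provider suffers an intrusion
result = read_quorum(clouds, "report", quorum=3)
```

With a quorum of three out of four, a single tampered or unavailable provider does not prevent a correct read; if a second provider also fails, the read is refused instead of returning data that cannot be confirmed.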

Although the technology is a major focus of TClouds, it is also addressing the legal, business, and social aspects of cloud computing. Many countries, for instance, have their own data‑privacy laws, which will have to be considered carefully in cases where data must cross national boundaries.

Will everyone rush to adopt the kinds of improvements we’re working on? Probably not. What we see happening down the road, though, is the diversification of today’s one-size-fits-all approach to cloud computing. The demand for basic, low-cost cloud services will remain, but providers will also offer services with quantifiable and guaranteed security levels.

In the future, individual clouds will most likely give way to federations of clouds. That is, businesses will use multiple cloud providers for storage, backup, archiving, computing, and so on, and those separate clouds will link their services. (The social-networking sites Facebook and LinkedIn are already doing this.) So even if one provider suffers an outage, customers will still enjoy continued service.

Ultimately, we believe the cloud can be made at least as secure as any company’s own IT system. Once that happens, reaching out to a cloud provider for your computing needs will be as commonplace as getting hooked up to the gas or electric company.

About the Authors

Christian Cachin and Matthias Schunter are computer scientists at IBM Research–Zurich. A cryptography expert concerned with cloud security, Cachin likes to start the day with a 5 a.m. row on Lake Zurich. Schunter, technical leader of the European Union–funded TClouds project, prefers bicycling to all other forms of transportation. Both say some of their best ideas about computer security occur when they’re in transit.