The Great Radio Spectrum Famine

Mobile broadband is consuming the available radio spectrum. Serving up more won’t be easy.

Not even sci-fi writers foresaw what we’d be doing with our phones once technology put color screens and a lot of computing power in our pockets.

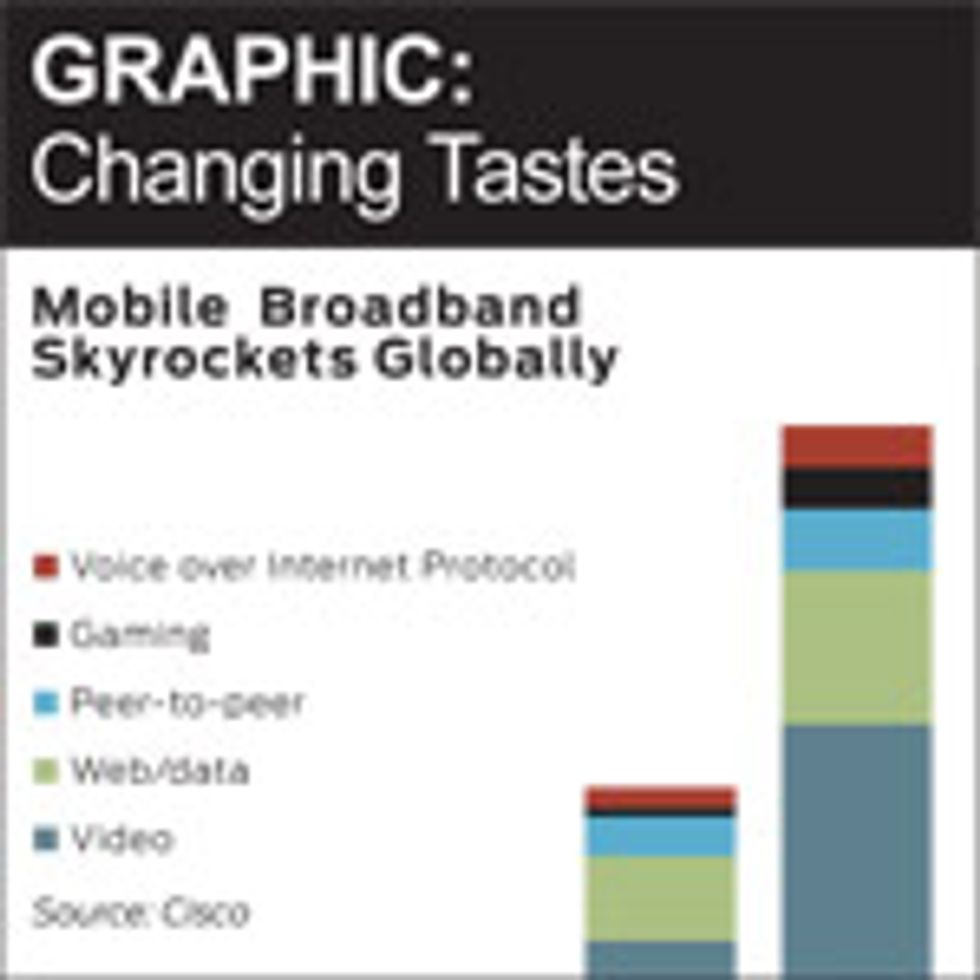

Now we know: We use them to stream YouTube and Facebook videos; we watch TV shows; we download and store songs and movies; we take pictures of everything going on around us; we read (and some of us even write) novels; we play video games; we surf the Web. Sometimes we even talk to each other. These days you can unleash a gusher of bits over the air that would have choked even a wired connection to the Internet not so long ago.

These transmissions consume radio bandwidth—lots of it. And they will take increasing amounts of this precious commodity as the iPad and its Androidgenous kin proliferate. People are already feeling the pinch.

Regulators have few options to head off the coming bandwidth crisis. They can’t realistically expect to reduce demand. Nor can they expand the overall supply. That leaves the daunting chore of squeezing today’s users into narrower slices of the radio spectrum, thereby eking out more space for other things. That’s sometimes possible, but it’s not easy. To reengineer existing radio systems—or their users—is a bit like trying to overhaul a car’s engine while it’s barreling down the highway.

Policymakers, at least in private, sometimes hold out hope for a fourth option: that some game-changing technical breakthrough will save the day at the 11th hour. But nothing now on the drawing board suggests that technology alone can get us out of this predicament.

In a sense, history is just repeating itself. Two decades ago, people who accessed the Internet typically did so with phone-line modems chugging along at 14.4 kilobits per second. That was fine for the largely static, text-based Internet of the day. But as the use of graphics and sound, and then video, expanded, so did the bandwidth needed, prompting more people to obtain broadband Internet connections. The spread of faster connections in turn spurred Web designers to load up their sites with multimedia. Technology and content each drove the other.

Now we are seeing an equally vicious cycle in the wireless realm. Smartphones, along with fully mobile laptops and tablets, are spreading fast, and people are using them ever more hours of the day. Estimates show the amounts of such wireless data doubling or tripling annually. We can expect a hundredfold expansion in just a few years.
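To see how quickly annual doubling or tripling reaches a hundredfold, here is a minimal Python sketch of the compound-growth arithmetic. It assumes a constant yearly multiplier, which real traffic will not follow exactly; the function name is illustrative only.

```python
import math

def years_to_multiply(factor: float, annual_growth: float) -> float:
    """Years needed for traffic to grow by `factor` when it is
    multiplied by `annual_growth` every year (assumed constant)."""
    return math.log(factor) / math.log(annual_growth)

# Doubling and tripling per year, the two rates quoted in the estimates.
for growth in (2.0, 3.0):
    years = years_to_multiply(100.0, growth)
    print(f"x{growth:.0f} per year: about {years:.1f} years to reach 100x")
```

Under that assumption, a hundredfold expansion takes between roughly four and seven years.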

Where will all the new capacity come from? Addressing this issue demands first an understanding of why all radio spectrum is not created equal.

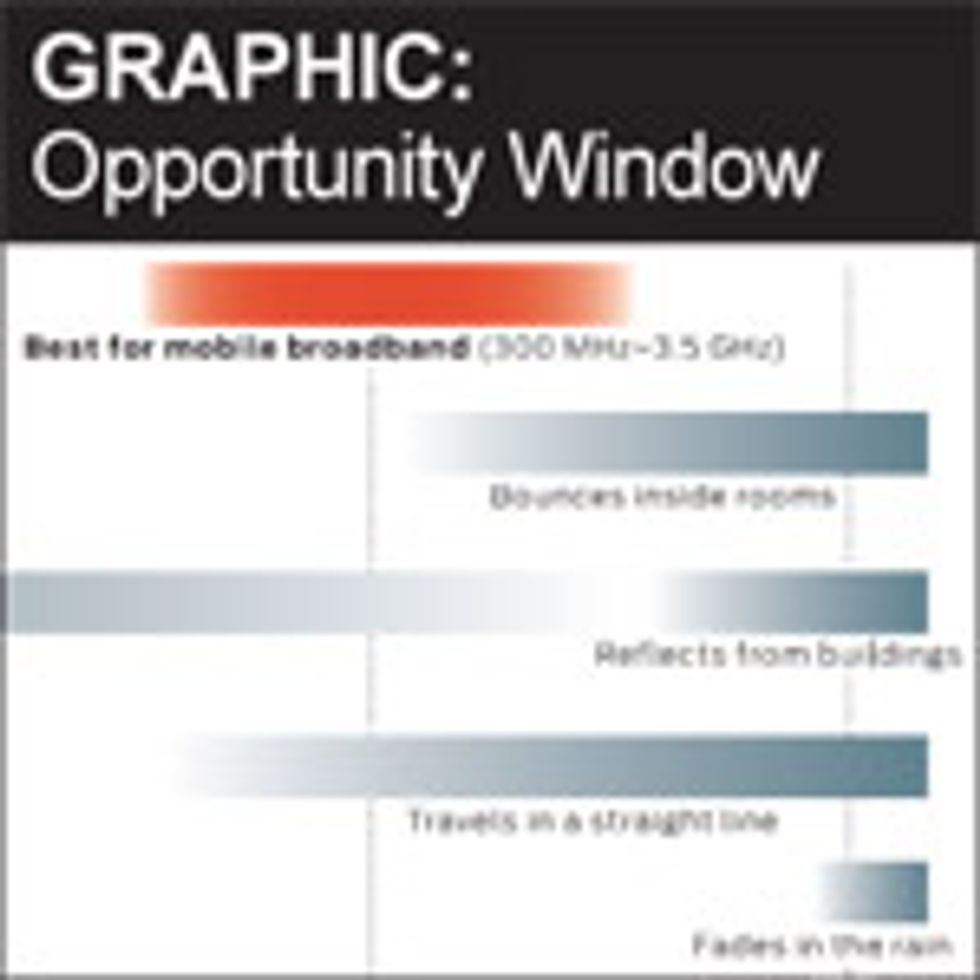

Every application of radio works best within a certain range of frequencies, and mobile broadband is no exception. Its sweet spot is relatively narrow, roughly in the range of 300 to 3500 megahertz. That’s because radio waves that are much above 3500 MHz (shorter than about 9 centimeters) do not penetrate well into buildings or through rugged terrain, leading to frustrating dead spots. Lower frequencies are better in this regard, but they require awkwardly large antennas for efficient transmission; 300 MHz is roughly the lowest frequency compatible with a reasonably efficient antenna that’s small enough to fit in a handheld device.
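The wavelengths behind those limits follow from dividing the speed of light by the frequency. The short Python sketch below works them out. The quarter-wave figure is an added assumption, used here only as a common rule of thumb for antenna length; the article does not say which antenna type it has in mind.

```python
C = 299_792_458.0  # speed of light in vacuum, meters per second

def wavelength_cm(freq_mhz: float) -> float:
    """Free-space wavelength in centimeters for a frequency in megahertz."""
    return C / (freq_mhz * 1e6) * 100.0

def quarter_wave_cm(freq_mhz: float) -> float:
    """Length of a quarter-wave element (assumed rule of thumb), in centimeters."""
    return wavelength_cm(freq_mhz) / 4.0

for f in (300.0, 3500.0):
    print(f"{f:g} MHz: wavelength {wavelength_cm(f):.1f} cm, "
          f"quarter wave {quarter_wave_cm(f):.1f} cm")
```

At 3500 MHz the wavelength comes out a little under 9 centimeters, and at 300 MHz it is about a meter, so even a quarter-wave element is roughly 25 centimeters long.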

Not surprisingly, this swath of the spectrum is already staked out in much of the world. Getting its current occupants to communicate in less radio bandwidth is not impossible, but it requires the adoption of some new technologies.

Telecommunications regulators try to anticipate such developments, and sometimes they even help to bring them about. But much of their work consists simply of codifying and institutionalizing established ways of doing things, which can interfere with efforts to use the airwaves in better ways.

Two-way radio is a good example. It became popular in the 1960s with the appearance of compact transistor-based gear. Back then, a one-way FM voice channel required 25 or 30 kilohertz. That’s a gluttonous use of spectral bandwidth by today’s standards. Actually, it was inefficient even then: Amateur radio equipment in those days routinely squeezed a voice signal into 5 kHz. Nevertheless, when the Federal Communications Commission set aside portions of the spectrum for two-way radios, it subdivided the bands into 25-kHz channels. The FCC then made things worse by assigning blocks of channels to particular industries, including subdivisions as small as “Motion Picture” and “Forest Products.” The result, a decade or two later, was a huge embedded base of inefficient radios, spread unevenly over dozens of channel blocks.

The FCC has since merged the channel blocks across all industries, keeping only public safety separate. But narrowing the channels proved more difficult. Not until 1992 did the FCC launch a “refarming” program to cut the standard 25-kHz bandwidth to 12.5 kHz, with plans for a further trimming to 6.25 kHz. Twenty years later a lot of 25-kHz equipment is still in use, and the FCC-required implementation of 6.25-kHz equipment is still years away. Users, happy with their inefficient radios, resist government efforts to take them away. In the meantime, the goals of the program have been overtaken by technology. Doubling and quadrupling capacity may have been worth the effort in 1992, but such a target seems almost pointless today. Cellphone systems can carry 10 to 100 times the amount of voice traffic in the same amount of spectrum by using a dense network of towers and taking advantage of digital encoding and data compression.

Sometimes the problematic consequences of outdated regulations are less obvious. For example, all radio communications services have power limits, typically chosen to provide for reliable communications under near-worst-case conditions. But even when conditions are good, transmitters can still blast away at the same high power, tying up their frequencies over a wide geographic area. The old rules ignore the fact that modern equipment can be designed to automatically adjust power levels to the minimum needed, varying its output from moment to moment. Cellphones do this routinely. Most of the time the transmitter in your phone runs at well under its full power rating, facilitating reuse of the same frequency nearby (and prolonging battery life to boot). But only a few kinds of radios, such as those used for wireless Internet access in the 5-gigahertz band, are required to have this spectrum-saving feature.

Why are such improvements not more readily adopted? One reason is that they cost money, and often those who must pay and those who will benefit are not the same. The recent shift to digital television in the United States, for example, freed up 108 MHz of prime spectrum. Obvious beneficiaries were the U.S. Treasury, which auctioned just under half that spectrum for US $19 billion, and public-safety personnel, who received some badly needed additional capacity without charge. But to make those gains possible, U.S. TV stations had to replace much of their equipment, and consumers had to shell out cash for new receivers. (The government subsidized digital-to-analog converter boxes, but for only 10 percent or so of the sets in use.) Similarly, the FCC’s refarming program requires those now using two-way radios to replace their equipment at their own expense for the benefit of others.

The government sometimes does better and puts the costs where they belong. In the United States, for example, 1.9-GHz cellphones operate in spectrum formerly used for fixed point-to-point microwave communications. The FCC auctioned the spectrum for mobile use but warned bidders they would have to pay the costs of “relocating” the fixed users to other bands. Predictably, disputes broke out over the details. But the principle made sense: The party that benefits from a change should pay for it.

Money is not the only problem; practical considerations impose limits, too. Suppose, for example, a designer wants to modify a system to operate in half the radio bandwidth it currently uses. Other things being equal, that halves the data throughput, as Harry Nyquist proved for telegraph lines in 1928. Restoring the original throughput of that radio channel without changing anything else risks increasing the bit error rate. To keep the error rate level, the designer can increase the power, which impairs battery life. Or he can limit the range, or perhaps compress the data to reduce the bit payload. But compression delays the signal and may reduce how accurately it can be reconstructed at the receiver. The bottom line is, making more efficient use of spectrum usually means something else has to give.
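The size of that power penalty can be estimated with a rough Python sketch. Note the swap: instead of Nyquist's result, it uses the Shannon-Hartley capacity bound, C = B log2(1 + SNR), as an idealized stand-in. The 100-kilobit-per-second link is a hypothetical example, and real equipment needs more margin than this theoretical floor.

```python
import math

def required_snr(bit_rate_bps: float, bandwidth_hz: float) -> float:
    """Minimum linear signal-to-noise ratio at which the Shannon-Hartley
    capacity B * log2(1 + SNR) reaches the requested bit rate."""
    return 2.0 ** (bit_rate_bps / bandwidth_hz) - 1.0

def to_db(ratio: float) -> float:
    """Convert a linear power ratio to decibels."""
    return 10.0 * math.log10(ratio)

# Hypothetical link: 100 kbit/s in a 25-kHz channel, then in half that bandwidth.
rate = 100e3
for bandwidth in (25e3, 12.5e3):
    snr = required_snr(rate, bandwidth)
    print(f"{bandwidth / 1e3:g} kHz: SNR of at least {snr:.0f} ({to_db(snr):.1f} dB)")
```

In this example, halving the bandwidth raises the minimum signal-to-noise ratio from 15 to 255, about 12 decibels, or 17 times the received power for the same noise level.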

Regulators sometimes try to boost spectrum efficiency by fiat. In the United States, fixed-location microwave equipment for some bands cannot legally be sold unless it can transmit at least 2.5 to 4.5 bits per second per hertz, the exact value depending on its bandwidth. Two-way radios in some bands also have a minimum, although it is much more lenient.

Often more effective, though, is a regulatory environment that gives licensees both the motive and the means to improve efficiency on their own. Wireless-phone carriers in the United States must bid at auction for exclusive use of a frequency band over a specified geographic area. Nationwide, the auction prices have totaled many billions of dollars. Writing big checks powerfully motivates the licensees to generate the most possible revenue from the available spectrum, which in turn encourages the adoption of equipment that can serve the maximum number of subscribers. Licensees are free to choose whatever forms of radio technology they think will work best.

With that kind of financial incentive, coupled with minimal regulatory constraints, wireless-service providers have achieved dramatic improvements in spectrum efficiency. They’ve done that by being clever about the modulation schemes, data encoding, and tower configurations they adopt. Back in the 1970s the early mobile telephony providers used one transmitter to serve an entire city, typically with all users sharing just one or two dozen voice channels. The service was expensive, required a lot of heavy equipment stowed in the trunk of your car, and often entailed long waits to make a call.

Cellular carriers in the 1980s vastly improved mobile services using 832 pairs of 30-kHz-spaced analog FM channels in the 800-MHz band. The cellular layout reused the same frequencies at different locations across a city to support many thousands of conversations. But charges for wireless minutes remained high, geared mostly to business customers.
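A back-of-the-envelope Python sketch shows why frequency reuse matters so much. The cluster size of 7 and the count of 100 cells are hypothetical values chosen for illustration, not figures from any particular city. The calculation also ignores control channels and any division of the channels among competing carriers.

```python
def simultaneous_calls(channel_pairs: int, cluster_size: int, cells: int) -> int:
    """Upper bound on concurrent conversations when the channel pairs are
    divided evenly among the cells of a reuse cluster and that pattern
    repeats across every cell in the city."""
    per_cell = channel_pairs // cluster_size  # pairs usable in one cell
    return per_cell * cells

# One citywide transmitter with two dozen channels, 1970s style.
print(simultaneous_calls(24, 1, 1))

# 832 channel pairs reused across a hypothetical 100-cell layout.
print(simultaneous_calls(832, 7, 100))
```

With those assumed numbers, the ceiling rises from 24 conversations to 11,800, consistent with the "many thousands" the cellular layout made possible.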

The next iteration, in the 1990s, was 1.9-GHz “2G” voice service, among the first to make use of auctioned spectrum in the United States. Although the FCC’s rules did not require it, all of the licensees opted for digital transmission, which yielded a big improvement. Digital modulation is not inherently more spectrum efficient than analog, but it allows much better compression and offers more ways to combine multiple communications onto one channel.

Those advantages were enough to persuade the companies operating older, analog cellphone systems to go digital. In the early 1990s, the carriers began shipping dual-mode analog/digital handsets and converting their base stations to digital. The handset automatically switched to whichever mode suited the equipment installed at the nearest tower. The conversion took about a decade, although carriers kept some analog service in place until 2008. The outcome was a tenfold increase in the capacity of these wireless networks.

The regulators learned some valuable lessons from that transition. First, it can be done pretty painlessly. In this case, subscribers were mostly unaware of it—people just kept on talking, with no significant interruptions or inconvenience (although a few analog-only holdouts had to be urged to upgrade their handsets). Second, the changeover need not be forced from on high. The analog-to-digital switch required essentially no government involvement. Carriers made the change on their own, for their own benefit, and on their own timetables.

Contrast that with the transition from analog to digital television, which was mostly completed in the United States by June 2009. That job was only a little bigger—today the United States has just a few more TV receivers than cellphones—but it proved much harder.

The digital-TV conversion took 22 years and cost broadcasters, viewers, and the U.S. government billions of dollars. One key difference was that in the TV switchover none of the broadcasters stood to cash in, at least not immediately. Most of the money that changed hands went the other way, to buy new studio and transmission equipment. Consumers paid for new home TVs and converter boxes. With prodding from the government, the broadcasting and consumer-electronics industries mounted a massive publicity campaign to prepare viewers for the coming sea change. Cable and satellite-TV companies ran their own campaigns, promoting their services as a way to keep old sets working. The government offered free vouchers for converter boxes (then ran out of money to distribute them). Still, in the end, on the morning of 13 June 2009, many viewers were shocked to find that their beloved analog TV sets showed only snow.

Compared with the wireless-phone conversion, the shift to digital TV was slow and painful. Whereas the wireless-phone changeover was an inside job, one largely driven by the market’s invisible hand, digital TV was directed by the government at every stage: adopting technical standards, setting required start-up dates for digital broadcasting, even imposing fines on electronics distributors who trafficked in analog-only TVs. Market forces and incentives played little part. And the TV transition required the participation of consumers in ways the wireless-phone conversion did not.

On the positive side, though, the switch to digital TV did work: It enabled the FCC to repack transmissions from digital TV stations more tightly than their analog predecessors. That freed up 108 MHz of spectrum, over a quarter of the total bandwidth allotted to broadcast TV before the transition. And thanks to data compression, each digital channel accommodates about four analog-quality video signals, and digital TV also offers new options for high-definition programming and data services. The overall result is about a fivefold improvement in spectrum efficiency—a success by any measure.
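One way to reproduce those figures is sketched below in Python. The decomposition is an assumption, since the article does not show its working: it treats the overall gain as the per-channel gain (four signals instead of one) multiplied by the ratio of the old TV allocation to the new one. The 294 MHz that remains allotted to TV after the transition is taken as the new allocation.

```python
FREED_MHZ = 108.0          # spectrum released by the digital transition
REMAINING_MHZ = 294.0      # spectrum still allotted to TV afterward
SIGNALS_PER_CHANNEL = 4.0  # analog-quality signals per digital channel

# Total TV allocation before the transition.
before_mhz = FREED_MHZ + REMAINING_MHZ

# Share of the old allocation that was freed.
freed_fraction = FREED_MHZ / before_mhz

# Assumed model: same stations, less spectrum, more signals per channel.
improvement = SIGNALS_PER_CHANNEL * before_mhz / REMAINING_MHZ

print(f"Before: {before_mhz:.0f} MHz, freed share: {freed_fraction:.1%}")
print(f"Estimated efficiency gain: {improvement:.1f}x")
```

On these assumptions the freed share comes to about 27 percent and the gain to roughly 5.5, in line with "over a quarter" and "about a fivefold improvement."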

Or maybe not. The United States still has 294 MHz of spectrum set aside primarily for TV. But the vast majority of U.S. TV-owning households subscribe to cable or satellite television. Just 10 percent watch only transmissions sent over the air. And the over-the-air fraction has declined steadily over the decades. So the 294 MHz of TV spectrum—much of it in a frequency range ideal for mobile broadband—serves a small and shrinking number of viewers.

Noting this fact, some policymakers have proposed to divert still more of the TV broadcast spectrum to mobile broadband. One such plan in the United States would reallocate and auction 120 MHz, or about 41 percent of the postanalog TV capacity. Broadcasters who lose their channels could receive part of the auction revenues. Or they might be allowed a share of the newly expanded channel capacity taken from a fellow broadcaster whose station stays on the air.

Not surprisingly, broadcasters as a group vehemently oppose any such reorganization of the airwaves, although some individual station owners would likely be happy to take the money and close shop. Others favor keeping their channels but renting out bandwidth for wireless use. Maybe that would be less disruptive to these businesses. And it does seem a little soon to require American TV watchers to relearn how to orient their antennas and tune their sets.

That we’re even talking about revamping the U.S. TV bands barely a year after the last reorganization suggests how thorny spectrum issues have become.

Any solution ultimately has to identify the least efficient or least critical services and redesign them to use less spectrum. Consider, for example, the current situation with two-way radios: 12.5 kHz for a one-way voice channel, with many channels vacant at any given moment. Such radios are indispensable to police, firefighters, and other emergency responders, as well as utility workers, taxi drivers, plumbers, construction crews, and many others. But their collective traffic could be handled in far less spectrum than is being used today. Unfortunately, there’s no practical way to improve these devices on their present frequencies, beyond the long-awaited halving of their bandwidth.

We need to offer these people a more efficient alternative while making it more costly for them to use their old equipment. Suppose the FCC gave a nonprofit industry group a few megahertz in which to provide efficient, digital, two-way radio service on an at-cost basis. To be sure, many users would prefer to keep their existing radio gear. But the FCC could make their licenses more expensive and equipment requirements more demanding, while pointing users to the new collective service as a better option.

Eventually, enough users will have migrated out of the original band to allow the FCC to take it back and reallocate it for other purposes. The result would be two-way radio use that is 10 to 100 times as spectrum efficient as today’s, with little disruption along the way.

Other bands may require different approaches. For example, the FCC is considering ways to convert underused mobile satellite bands to a primarily terrestrial cellphone-type service. And the U.S. government occupies large swaths of valuable spectrum that Congress could help to make available for private use.

True, any such reorganization of the airwaves would take years. But what solution wouldn’t? Given the growing hunger for mobile broadband, we ought to get cracking.

This article originally appeared in print as “The Great Spectrum Famine.”

About the Author

Mitchell Lazarus is a partner in the Washington, D.C.–area law firm of Fletcher, Heald & Hildreth, which specializes in telecommunications law and regulation. In addition to a law degree, he holds two degrees in electrical engineering and a doctorate in experimental psychology. During the 1970s, while working on mathematics education reform, he wrote on the subject of math anxiety. His article on the looming crisis in wireless broadband nevertheless takes an unblinking look at the numbers.