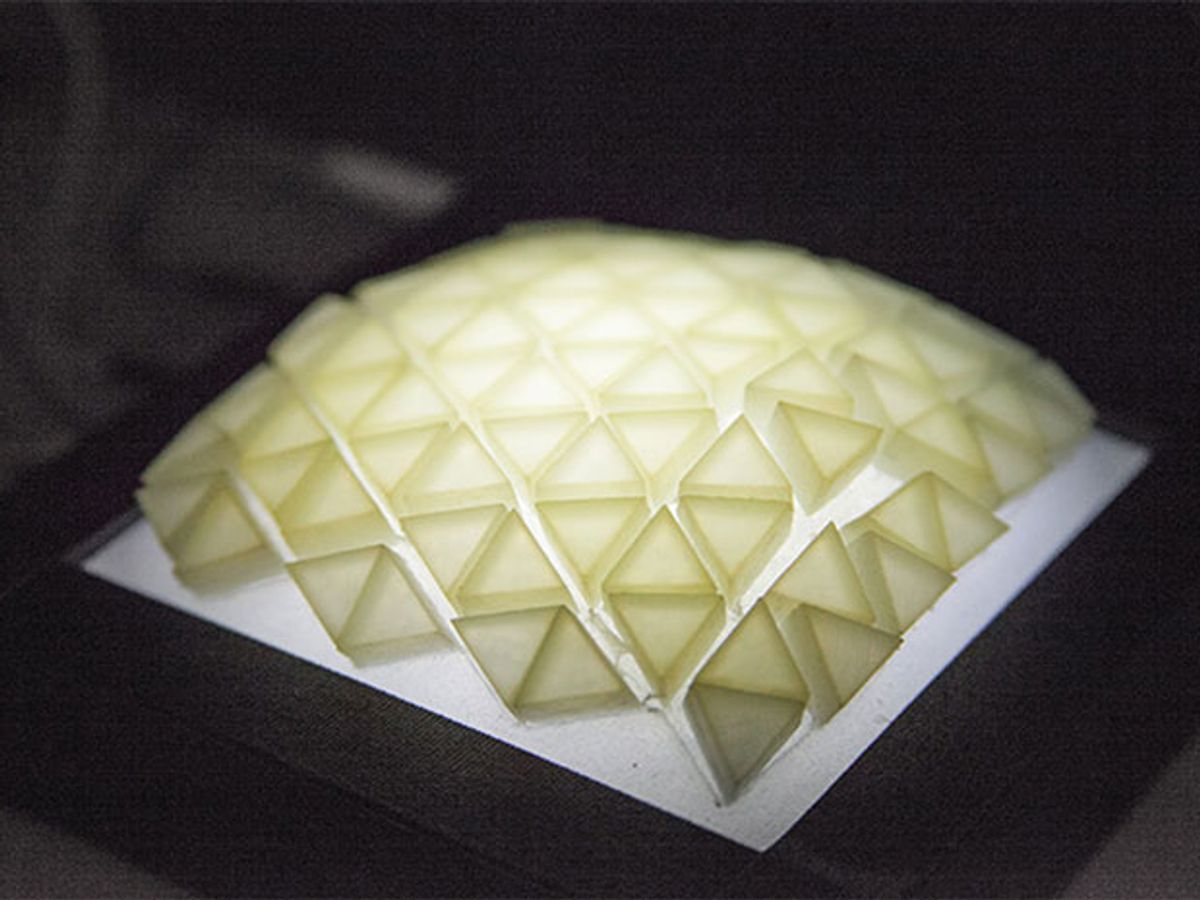

Programmable matter isn't something we have much experience with yet. It's still very much a technology that’s slowly emerging from research labs. MIT is home to one of those labs, and Basheer Tome, a master's student at the MIT Tangible Media Group, has been working on one type of programmable material. Tome’s “membrane-backed rigid material,” called Exoskin, is made up of tessellated triangles of firm silicone mounted on top of a stack of flexible silicone bladders. By selectively inflating these air bladders, the Exoskin can dynamically change its shape to react to your touch, communicate information, change functionality, and more.

If the description of this stuff is a bit confusing, don't worry: the video makes the functionality very clear. Just a quick warning: If I'm not mistaken, the video soundtrack uses samples of the default Windows 95 startup chime. This, rather than the Exoskin itself, is probably why watching the video may fill you with a low-level sense of impending doom.

Here's a potential application that Tome explored: a steering wheel with embedded Exoskin that can be used in a bunch of different ways.

We present a texture-changing steering wheel that integrates GPS navigation directions among other information into your driving experience in an unobtrusive, natural way by driving your intuition through subtle, tactile feedback. We seek to increase spatial memory, reduce driver distraction, and simplify previously complex multi-tasking.

Haptics remain unreliable and low-fidelity in the constantly evolving automotive environment. Evolving to shape & texture change can solve that amongst other issues. One of the many advantages of using them for relaying information is their inherent ability to allow fast reflexive motor responses to stimuli. In contrast to visual displays or haptic displays, Exoskin's stimuli are both highly tactilely perceptible and visually interpretable.

From what we can tell, the biggest gap between the prototype and an eventual commercial product (or even between concept and prototype) will be how finely the user can control an Exoskin array. Control depends on the number of individually actuatable bladders. In most of the concepts shown in the videos above, each one of the rigid elements can be actuated individually, meaning that every single one of them needs its own bladder and pneumatic actuator. With current fabrication techniques, this is not practical, which is why the physical demo just has one big bladder that actuates many rigid elements at once.
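To make the granularity point concrete, here is a minimal Python sketch of the idea. It is a toy model built on our own assumptions, not anything from Tome's work: the `ExoskinArray` class, its method names, the six-element patch, and the 0.0 to 1.0 inflation scale are all hypothetical. The only thing it borrows from the description above is that each rigid element follows whichever bladder sits beneath it.

```python
from dataclasses import dataclass, field


@dataclass
class ExoskinArray:
    """Toy model of an Exoskin patch (hypothetical, not Tome's design)."""

    # element_to_bladder[i] is the id of the bladder under rigid element i.
    element_to_bladder: list[int]
    # Inflation level per bladder id, from 0.0 (flat) to 1.0 (full).
    pressure: dict[int, float] = field(default_factory=dict)

    def inflate(self, bladder: int, level: float) -> None:
        """Set one bladder's level; every element above it moves with it."""
        if bladder not in self.element_to_bladder:
            raise ValueError(f"no element sits on bladder {bladder}")
        # Clamp to the assumed 0.0-1.0 range.
        self.pressure[bladder] = max(0.0, min(1.0, level))

    def element_heights(self) -> list[float]:
        """Each element simply follows the bladder underneath it."""
        return [self.pressure.get(b, 0.0) for b in self.element_to_bladder]

    def independent_channels(self) -> int:
        """Number of regions that can be controlled separately."""
        return len(set(self.element_to_bladder))


# Physical demo: six elements sharing one big bladder.
demo = ExoskinArray(element_to_bladder=[0] * 6)
demo.inflate(0, 1.0)

# Concept renders: one bladder (and one actuator) per element.
concept = ExoskinArray(element_to_bladder=list(range(6)))
concept.inflate(2, 1.0)

print(demo.independent_channels(), demo.element_heights())
print(concept.independent_channels(), concept.element_heights())
```

In the shared-bladder case, inflating the one available channel raises all six elements together, while the per-element layout can raise element 2 alone. The cost is that the second layout needs six actuators instead of one, which is the fabrication problem described above.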

Fortunately, this limitation may not last much longer. Rather than having to rely on external air pumps and lots of tubing for pneumatic actuation, it may be possible to make completely self-contained pneumatic actuators that could be embedded underneath individual Exoskin elements to actuate them separately. One possible technique: reversible electrolysis that uses electricity to turn water into hydrogen and oxygen and back again. Actuators like these are already well into the prototype stage. Other options could include thermally sensitive shape-memory elastomers or electroactive polymers.

Tome hopes that research like this will lead to a disruption of “the divide between rigid and soft, and animate and inanimate, providing inspiration for Human-Computer Interaction researchers to design more interfaces using physical materials around them, rather than just relying on intangible pixels and their limitations.” That might be just slightly overblown, but seeing a practical path towards physical objects that can adapt themselves to our needs is exciting nonetheless.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.