The End of AT&T

Ma Bell may be gone, but its innovations are everywhere

It’s 1974. Platform shoes are the height of urban fashion. Disco is just getting into full stride. The Watergate scandal has paralyzed the U.S. government. The new Porsche 911 Turbo helps car lovers at the Paris motor show briefly forget the recent Arab oil embargo. And the American Telephone & Telegraph Co. is far and away the largest corporation in the world.

AT&T’s US $26 billion in revenues—the equivalent of $82 billion today—represents 1.4 percent of the U.S. gross domestic product. The next-largest enterprise, sprawling General Motors Corp., is a third its size, dwarfed by AT&T’s $75 billion in assets, more than 100 million customers, and nearly a million employees.

AT&T was a corporate Goliath that seemed as immutable as Gibraltar. And yet now, only 30 years later, the colossus is no more. Of the many events that contributed to the company’s long decline, a crucial one took place in the autumn of that year. On 20 November 1974, the U.S. Department of Justice filed the antitrust suit that would end a decade later with the breakup of AT&T and its network, the Bell System, into seven regional carriers, the Baby Bells. AT&T retained its long-distance service, along with Bell Telephone Laboratories Inc., its legendary research arm, and the Western Electric Co., its manufacturing subsidiary. From that point on, the company had plenty of ups and downs. It started new businesses, spun off divisions, and acquired and sold companies. But in the end it succumbed. Now AT&T is gone.

The company—still a telecom giant but more focused on the corporate market—agreed to be acquired by one of the Baby Bells, SBC Communications Inc. of San Antonio, in a deal valued at $16 billion. In a few months, AT&T’s famous ticker symbol—T, for telephone—will disappear from the New York Stock Exchange listings, and the company that grew out of Alexander Graham Bell’s original telephone patents will officially cease to exist.

Should we mourn the loss? The easy answer is no. Telephone providers abound nowadays. AT&T’s services continue to exist and could be easily replaced if they didn’t.

But that easy answer ignores AT&T’s unparalleled history of research and innovation. During the company’s heyday, from 1925 to the mid-1980s, Bell Labs brought us inventions and discoveries that changed the way we live and broadened our understanding of the universe. How many companies can make such a claim?

The oft-repeated list of Bell Labs innovations features many of the milestone developments of the 20th century, including the transistor, the laser, the solar cell, fiber optics, and satellite communications. Few doubt that AT&T’s R&D machine was among the greatest ever. But few realize that its innovations, paradoxically, contributed to the downfall of its parent. And now, through a series of events during the past three decades, this remarkable R&D engine has run out of steam.

When the AT&T monopoly held sway over U.S. telecommunications, R&D managers at Bell Labs and Western Electric were assured steady funding that allowed them to look forward 10 or 20 years, the kind of long view that truly disruptive technologies need in order to germinate and thrive. That combination of stable funding and long-term thinking produced core contributions to a wide variety of fields, including wireless and optical communications, information and control theory, microelectronics, computer software, systems engineering, audio recording, and digital imaging. Accumulating more than 30 000 patents, Bell Labs also played host to a long string of scientific breakthroughs, garnering six Nobel Prizes in physics [see sidebar, "Bell's Nobels"] and many other awards.

The funding came in large part from what was essentially a built-in “R&D tax” on telephone service. Every time we picked up the phone to place a long-distance call half a century ago, a few pennies of every dollar—a dollar worth far more than it is today—went to Bell Labs and Western Electric, much of it for long-term R&D on telecommunications improvements.

In 1974, for example, Bell Labs spent over $500 million on nonmilitary R&D, or about 2 percent of AT&T’s gross revenues. Western Electric spent even more on its internal engineering and development operations. Thus, more than 4 cents of every dollar received by AT&T that year went to R&D at Bell Labs and Western Electric.

Bell’s Nobels

In its 80 years, Bell Labs has garnered six prizes in physics

1937—Wave Nature Of Matter

By firing an electron beam at a nickel crystal, Clinton J. Davisson showed that the ricocheting electrons diffracted just like electromagnetic waves. His demonstration of the electron's wave nature eventually led to solid-state physics. George P. Thomson shared the prize.

1956—The Transistor

Put semiconductors together the right way and you can make them amplify and switch signals. The invention of the transistor by John Bardeen, Walter H. Brattain, and William B. Shockley made all digital devices possible.

1977—Electrons In Imperfect Crystals

How do electrons behave inside metal alloys and noncrystalline materials like glass? Philip W. Anderson came up with a quantum mechanical model, work that earned him a Nobel prize, shared with Nevill F. Mott and John H. van Vleck. It found practical applications with the development of memory chips and other solid-state devices.

1978—Cosmic Microwave Background

Probing the sky with a radio antenna originally developed for satellite communications, Arno A. Penzias and Robert W. Wilson detected a faint microwave echo of the universe's birth. Their discovery provided key support for the big bang theory.

1997—Laser Cooling

By shining converging laser beams at a group of atoms, Steven Chu was able to slow the atoms and reduce their temperature almost to absolute zero. This “optical molasses” effect led to atomic lasers and to improved atomic clocks and navigation devices. Chu shared the prize with Claude Cohen-Tannoudji and William D. Phillips.

1998—The Fractional Quantum Hall Effect

Using a powerful magnetic field, Horst L. Störmer, Robert B. Laughlin, and Daniel C. Tsui put electrons into a quantum state with liquidlike properties. The phenomenon, called the “fractional quantum Hall effect,” is shedding light on the behavior of electrons and other elementary particles.

And it was worth every penny. This was mission-oriented R&D in an industrial context, with an eye toward practical applications and their eventual impact on the bottom line.

AT&T’s commitment to R&D stemmed mainly from its pre-World War I experiences in developing high-power vacuum tubes for use as amplifiers for transcontinental telephone service. Facing scrappy competition from a hornet’s nest of local phone companies after Bell’s original patents had expired, AT&T saw its leadership threatened—even though it controlled about half the country’s telephones.

The company wanted to expand and offer “universal service” to its customers, aiming to put its phones in every home and office across the country and connect them with one another. But that required a very-low-distortion amplifier, or repeater, that could allow AT&T to provide something no other company was offering: coast-to-coast telephone calls.

In 1912, Harold D. Arnold, a young Ph.D. physicist fresh from the University of Chicago, joined AT&T’s engineering department. He began trying to improve the performance of the low-power Audion triode tube invented by Lee de Forest several years earlier. Arnold coated the tube’s tungsten cathode with an oxide layer to encourage the emission of electrons and pumped out excess air molecules from the tube that he figured were impeding current flow through it. The resulting high-power vacuum tubes performed splendidly, regenerating voice signals sent over long distances with minimal distortion [see photo, "A Famous Visitor"].

Using repeaters based on Arnold’s tubes, AT&T created a sensation in 1915 at the Panama-Pacific International Exposition in San Francisco, where the company demonstrated transcontinental service for the first time. From AT&T headquarters in New York City, Alexander Graham Bell once again uttered his famous command into the mouthpiece, “Mr. Watson, come here. I want you.” From San Francisco, his old assistant bellowed back, “It will take me five days to get there now!”

For the next half century, AT&T had the U.S. transcontinental telephone market all to itself, an advantage that helped the company reestablish its monopoly, bringing many of the small local phone companies under the umbrella of its Bell System. Thus convinced of the value of investing in research and development, AT&T managers in 1925 reorganized most of the company’s R&D activities into a single organization: Bell Telephone Laboratories.

The first Bell Labs headquarters, in a gracious, sun-filled, 12-story building at 463 West St. in New York City, looking out across the Hudson River, soon became home to 2000 scientists and engineers. Its founding president, Frank B. Jewett, would later help lead the United States’ R&D efforts during World War II, as president of the National Academy of Sciences from 1939 to 1947.

As an industrial laboratory, Bell Labs was primarily committed to improving AT&T’s telephone operations. But Jewett and Arnold, his first director of research, wisely supported projects whose results might not necessarily be useful in the short run. Their commitment to such basic research was quickly rewarded in 1927 by a scientific breakthrough of epic proportions.

Observing electrons as they sped through a vacuum tube and ricocheted from a nickel crystal, physicist Clinton J. Davisson recognized that beams of these feisty subatomic particles seemed to behave like waves! The intriguing hypothesis that matter could have wavelike properties, proposed by Louis de Broglie, was just then the subject of heated debate in Europe. Davisson’s serendipitous discovery of electron waves went a long way toward verifying de Broglie’s theory—and earned him half of the 1937 Nobel Prize in physics, the first for Bell Labs.

The quantum description of matter that emerged from that 1920s’ ferment soon found practical applications in the work of other Bell Labs scientists. It became essential to understanding electrical conduction in semiconductors such as silicon and germanium that emerged from the World War II U.S. radar program, in which Bell Labs and Western Electric played key R&D roles. This emerging quantum theory of solids was also crucial to the postwar invention of the transistor by physicists John Bardeen, Walter H. Brattain, and William B. Shockley—then working at Bell Labs’ new home, a sprawling suburban campus in Murray Hill, N.J.

But the transistor was still a long way from becoming the mass-produced gizmo that would reshape—or create—huge industries, including radio, television, microelectronics, and aerospace. More than a decade of development—involving silicon purification, crystal growing, and the diffusion of chemical agents called dopants into semiconductors—was required before transistors could begin to assume the forms they are found in today. Much of that work took place not at Bell Labs but at two Western Electric plants in Pennsylvania, in Allentown and nearby Reading, where engineers developed the precision manufacturing processes and techniques needed to mass-produce transistors. The clean room, used today in almost every aspect of semiconductor manufacturing, was born and raised in Allentown.

“Bell Laboratories scientists in Murray Hill, N.J., may have won the Nobel Prizes and gotten most of the press, but Allentown and Reading delivered the goods,” notes Stuart W. Leslie, a historian of science at Johns Hopkins University in Baltimore. “Their research and production engineers, tool-and-die makers, layout operators, and assembly-line workers figured out how to transform prize-winning research into devices that were reliable, durable, consistent, and cheap.”

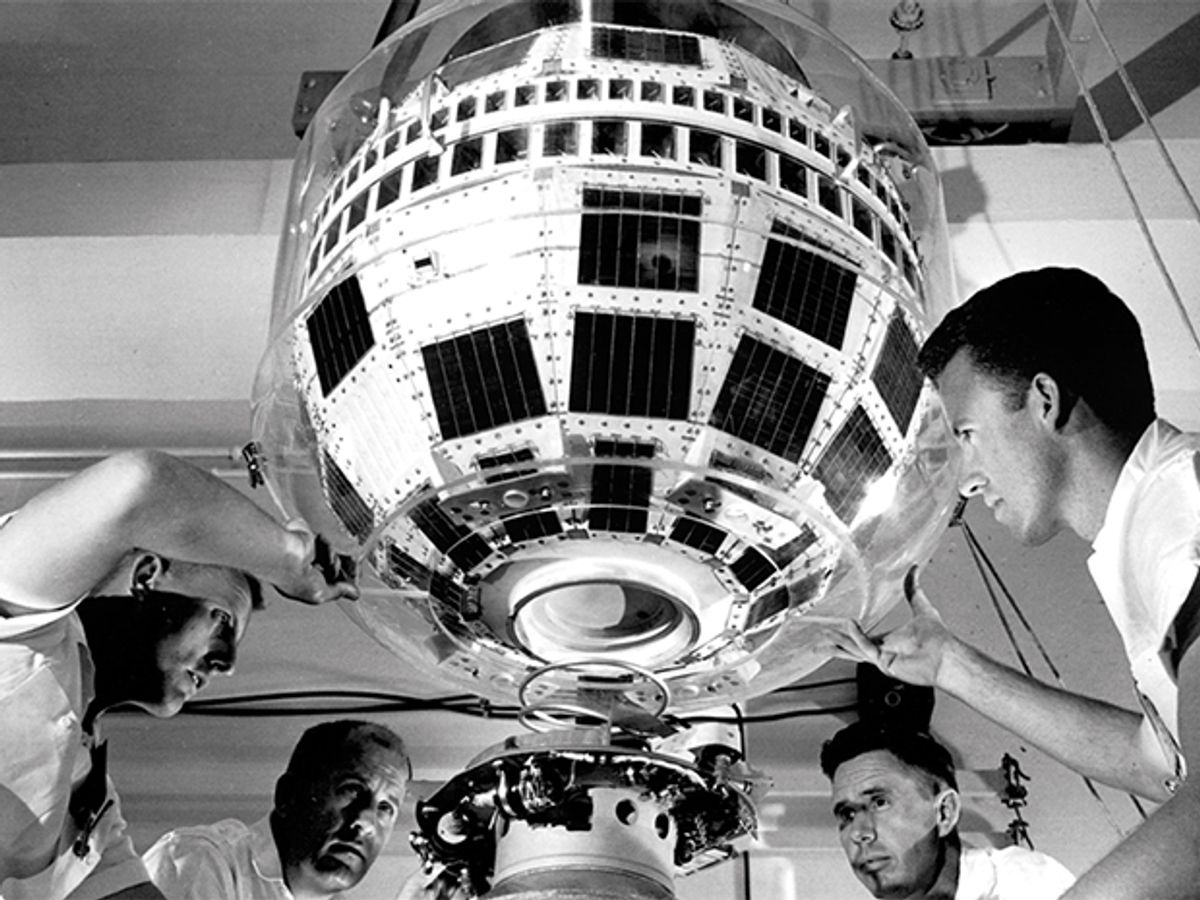

Many other innovations spewed forth from Bell Labs during the 1950s in the wake of the transistor’s invention, for which Bardeen, Brattain, and Shockley received the 1956 Nobel Prize in physics. Silicon technology spawned the integrated circuit. It also led to the solar cell, which provided a durable power source for generations of satellites in succeeding decades. And electrical engineer John R. Pierce perfected the wartime traveling-wave radar tube into an efficient microwave source to make his dream of satellite communications a reality. He played a key role in the development of Telstar, the satellite that carried an amplification circuit designed to retransmit signals over enormous distances.

Then, in 1964, using a huge horn-shaped antenna salvaged from the Telstar project, physicists Arno A. Penzias and Robert W. Wilson stumbled across the dim afterglow of the universe’s birth: the remnant microwaves from the big bang. Their discovery triggered a revolution in cosmology and earned them a 1978 trip along the by then well-worn path from Bell Labs to Stockholm.

But AT&T’s magnificent R&D program, which helped the company consolidate its monopoly and dramatically improve phone service for its customers, also contributed to the company’s dissolution, as noted by Christopher Rhoads in a recent Wall Street Journal article. Consider, for example, the transistor, the invention that today lies at the heart of all things digital, from DVD players to satellite transponders. AT&T at first licensed the patent rights to the invention for a paltry $25 000 and later put them in the public domain as part of a 1956 consent decree that averted a court breakup of the monopoly.

AT&T leaders recognized that the transistor was just too important to keep to themselves—and the courts probably would not have allowed that anyway. But more than that, Bell Labs and Western Electric actively encouraged the diffusion of their semiconductor technology by offering a series of workshops during the 1950s that were well attended by engineers from many other companies. The participants included Jack Kilby, who would go on to pioneer the integrated circuit at Texas Instruments, and a handful of engineers from a small Tokyo electronics firm that would parlay early success with the transistor radio into a decades-long dominance of consumer electronics: Sony. If the invention of the transistor can be said to have sparked the information age, it really became a global phenomenon after those workshops helped stoke the fires.

At the time, Bell Labs managers generally regarded their company as a quasi-public institution contributing to the national welfare by enriching the country’s science and technology. Seen in that light, AT&T’s vigorous promotion of semiconductor technology made good sense, especially at a time when the company was churning out profits and didn’t feel any competition breathing down its neck.

But such generosity may have been one of the crucial forces behind its eventual downfall, as smaller, nimbler, and more legally unfettered firms seized the opportunity to develop and deploy innovations that would help undermine AT&T’s dominance of U.S. telecommunications. “After its forced breakup in 1984,” The Wall Street Journal’s Rhoads wrote, “it was slowly crushed by technologies that drove down the price of a long-distance call, and more recently by wireless calling and Internet phoning.”

At the same time Bell Labs and Western Electric were working on their many innovations, there was a resistance to rapid change rooted deep within the parent company’s culture. According to Sheldon Hochheiser, former AT&T corporate historian, “a service ethos and the absence of the countervailing pressures of competition produced a corporate culture dominated to a great degree by an engineering mentality.” That culture, he adds, “encouraged a value system where managers tended to take the time to get innovations right, as an engineer would define right.”

Thus, AT&T engineers usually emphasized reliability and robustness of the network over the rapid introduction of advanced technologies. Often a decade or more passed before new features, such as long-distance direct dialing and touch-tone phones, would finally percolate throughout the system. And cellular telephony, first described in detail by Bell Labs engineers in 1947, never entered widespread commercial service as part of the Bell System.

Perhaps the most egregious example of the company’s technological conservatism was the languid introduction of electronic switching, conceived in the 1930s by Bell Labs research director Mervin J. Kelly (who later became president). Kelly was the one who had hired Shockley, directing him to find a solid-state replacement for the electromechanical relays used in the switches in the Bell System’s many central offices.

The noisy, clunky relays opened and closed circuits to establish continuous physical connections between any two phones. A solid-state switch, on the other hand, would have no moving parts, making it smaller, faster, quieter, and more reliable. Although electronic switches based on solid-state components had been developed by 1959, AT&T didn’t introduce the first digital switch into the Bell System until 1976. And electronic switching was still being gradually rolled out well into the 1980s, when AT&T’s monopoly on telephone service came to an abrupt end. The much more rapid introduction of digital switches by MCI and Sprint probably contributed to AT&T’s downfall.

And even though Bell Labs and Western Electric developed most of the underlying silicon technology required for the integrated circuit, which eventually became the guts of the electronic central-office switch, AT&T wasn’t in on its creation. The upstarts Fairchild Semiconductor and Texas Instruments, focused as they were on miniaturizing electronics for their military and aerospace customers, led the way instead. Here again, AT&T engineers probably contributed to the lapse by insisting on high-performance discrete components built for 40-year lifetimes in the Bell System. There was no great drive for miniaturization in the system, acknowledged Ian Ross, the president of Bell Labs at the time of the breakup. “The weight of the central offices was not a big concern,” Ross said.

Another factor contributing to the technological inertia was the billions of dollars already sunk into the Bell System. Any responsible corporate manager would prefer to amortize such investments before introducing newer, better devices, especially when no real competitors existed. As the historian Hochheiser notes, the “absence of competition allowed the Bell System’s managers the freedom to take an extremely long view.”

That ability to take the long view was a boon to Bell Labs researchers, who could follow their own instincts and explore what especially intrigued them, rather than what might bolster AT&T’s bottom line during the next few years. “The only pressure at Bell Labs was to do work that was good enough to be published or patented,” recalls Morris Tanenbaum, who developed the first silicon transistor in 1954 [see “The Lost History of the Transistor,” IEEE Spectrum, May 2004] and rose to the upper echelons of Bell Labs management in the 1970s.

With ample offices and well-equipped lab space, lush green surroundings, classy cafeterias, and an extensive library, the Bell Labs campus in Murray Hill became a magnet for some of the best scientists and engineers in the world. Given broad research freedom, they rewarded their far-sighted employer with a remarkable series of technological firsts, right up to and beyond the 1984 breakup of AT&T.

Take researchers Izuo Hayashi and Morton Panish, for example. In 1970 they developed the first semiconductor lasers able to function at room temperature—a prerequisite for use in CD and DVD players, printers, bar-code scanners, and fiber-optic networks. At about the same time, Willard Boyle and George Smith invented the charge-coupled device, or CCD, which is now the heart and soul of digital imaging, with millions produced annually for digital cameras. Meanwhile, Bell Labs researchers created the Unix operating system and the C programming language and its offshoots [see sidebar, "Not Just Hardware"]—key computer-engineering developments that helped other companies such as Sun Microsystems flourish.

Not Just Hardware

Unix was another Bell Labs brainchild

Today, Unix, in all of its variants, descendants, and imitators, is easily the most influential operating system in the world. MS-DOS, the foundation on which Windows was built, started out as a poor man’s Unix. Apple’s Mac OS X, as well, comes from a version of Unix created at the University of California, Berkeley. And, of course, Unix was the model for Linux. But despite its importance, Unix’s 1969 origin at Bell Labs began with an outright failure, a system called Multics.

Back in the 1960s, multimillion-dollar dinosaurs such as the IBM 360 bestrode the computing landscape. Powerful as they were, mainframes were single-user machines until time-sharing systems were developed. Multics was to be one of them. Developed jointly by Bell Labs, GE, and MIT, it had an ambitious goal: its creators were to design a system that would meet “almost all of the present and near-future requirements of a large computer utility,” according to a 1965 planning document.

Four years later, a commercially viable system was still a distant goal. Bell Labs withdrew from the project, but a core group of researchers there, led by Kenneth Thompson [seated in photo above] and Dennis Ritchie [standing], continued their research on operating systems. Thompson devised the file system that lies at the heart of Unix. (He also wrote a game called Space Travel that spurred the development of the operating system itself by showing what resources a program needed to be able to run.)

By the late 1960s, minicomputers were scurrying about the computer world, and the post-Multics group was allowed to buy a Digital Equipment Corp. PDP-11 (a bargain at only US $65 000). The first PDP-11 version of Unix was a wonder, a mere 16 kilobytes in size, with 8 KB of memory for additional software. The first Unix application would be a word-processing program to be used by AT&T’s patent-writing group. The experiment was a success; other AT&T divisions began using Unix, and the new operating system was off and running. When AT&T published the code and made it available for noncommercial use, UC Berkeley; Carnegie Mellon, in Pittsburgh; and other schools created even richer systems. A generation of software developers would grow up with Unix in one form or another.

Soon, Thompson decided to write a Fortran compiler for the new operating system. What he came up with, though, was a new programming language, similar to the Basic Combined Programming Language, or BCPL, that had been written for Multics. He dubbed it “B.” By 1971 it had evolved to become the C language. Today, most major software projects are written in C or its descendant, C++, which itself was invented at Bell Labs, by Bjarne Stroustrup in the early 1980s.

—Steven Cherry

And the world-class science continued well into the 1980s. Arriving at Bell Labs in 1978, physicist Steven Chu got to spend six months figuring out what excited him the most and was told to settle for nothing less than “starting a new field.” He says he felt he was among the elect, “with no obligation to do anything except the research we loved best.” Chu rewarded his employer’s confidence with the development of a laser method to cool atoms. That research, which earned him a share of the 1997 Nobel Prize in physics, is now allowing others to explore the quantum behavior of atoms and molecules.

Over the past two decades, however, basic and applied research have increasingly parted company at AT&T. Many of Bell Labs’ best scientists have left since the 1984 breakup. Then came the 1996 spin-off of Lucent Technologies Inc., which inherited most of Bell Labs. The exodus of top talent continued and accelerated after the collapse of Lucent’s stock in the past few years and a highly publicized scandal over fabrication of data by physicist Jan Hendrik Schön. The departing scientists have joined the legions of Bell Labs alumni already in academic positions—including Chu, who just replaced another AT&T alumnus, Charles Shank, as the director of the Lawrence Berkeley National Laboratory in California.

The Bell Labs budget has suffered, too, as R&D funding at Lucent has plummeted in the past few years. In 2003, with R&D expenditures of $1.49 billion (down from $2.31 billion in 2002), it was outspent by 56 companies around the world. Having recently returned to profitability in large part by cutting back on research, Lucent will be lucky to remain in the top 100.

In retrospect, it seems unreasonable to expect that a publicly held corporation can devote so much money to long-term research when facing the ruthless forces of the marketplace. AT&T added tremendous value to society, but as a condition of its regulated monopoly status, the company was not allowed to commercialize new technology that was not directly related to telephony.

Nor could AT&T charge customers for the technology except through its fees for telephone equipment and services. When it was a regulated monopoly, the company could build into those charges a pittance devoted to risky future-oriented research, such as setting up a solid-state physics department in the postwar years. But as ordinary corporations competing for customer dollars after the breakup and later spin-off, AT&T and Lucent could afford no such luxury.

We the customers are the ultimate losers. A vigorous, forward-looking society needs mechanisms like this to set aside funds for its long-term technological future. Letting governments serve the purpose is an imperfect alternative at best, fraught with the difficulty of making wise choices. The peer-review process widely used to select projects may be able to direct public funds to worthwhile research, but it usually favors established scientists and often overlooks bright young researchers—such as Chu—with bold but risky ideas.

AT&T, Bell Labs, and Western Electric effectively diverted a tiny fraction of our everyday expenses—and from all corners of the U.S. economy—into long-term R&D projects in an industrial setting that could, and often did, make major improvements in our lives. Today we are eating up the technological capital they built during those amazingly productive years. Are we doing anything to replace it?

About the Author

Michael Riordan teaches the history of physics and technology at Stanford University and the University of California, Santa Cruz.

To Probe Further

A detailed account of the transistor’s invention and development can be found in Crystal Fire: The Birth of the Information Age, by Michael Riordan and Lillian Hoddeson (W.W. Norton & Co., 1997).

The major events that led to AT&T’s demise are discussed in The Fall of the Bell System: A Study in Prices and Politics, by Peter Temin with Louis Galambos (Cambridge University Press, 1987).

A timeline with AT&T’s innovation milestones is available at https://www.att.com/history.