The Silicon Solution

In the future, ordinary silicon chips will move data using light rather than electrons, unleashing nearly limitless bandwidth and revolutionizing computing

The relentless push of Moore's Law has allowed data rates to soar, Internet traffic to swell, and wired and wireless technology to cover continents. Increasingly, we all expect fast, free-flowing bandwidth whenever and wherever we connect with the world. Within the next decade, the circuitry embodied by a rack of today's servers, able to churn through billions of bits of data per second and handle all the data-processing needs of a small company, will fit neatly on a single silicon chip half the size of a postage stamp.

But there's a problem. As newer, faster microprocessors roll out, the copper connections that feed those processors within computers and servers will prove inadequate to handle the crushing tides of data. At data rates approaching 10 billion bits per second, microscopic imperfections in the copper or irregularities in a printed-circuit board begin to weaken and distort the signals--even traveling distances as short as 50 centimeters can be a problem. New board materials and new techniques could provide some additional performance gains, but only at increased cost.

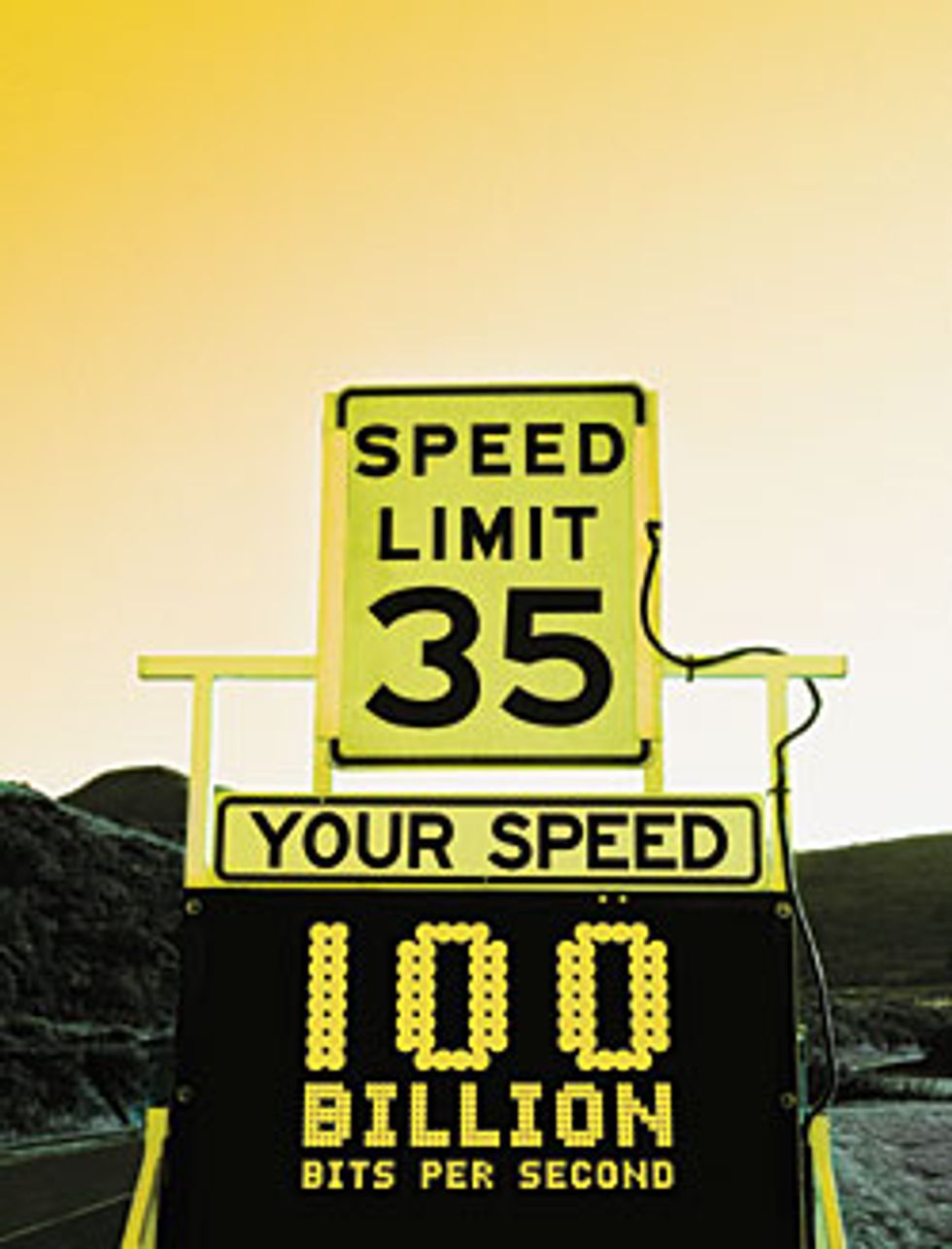

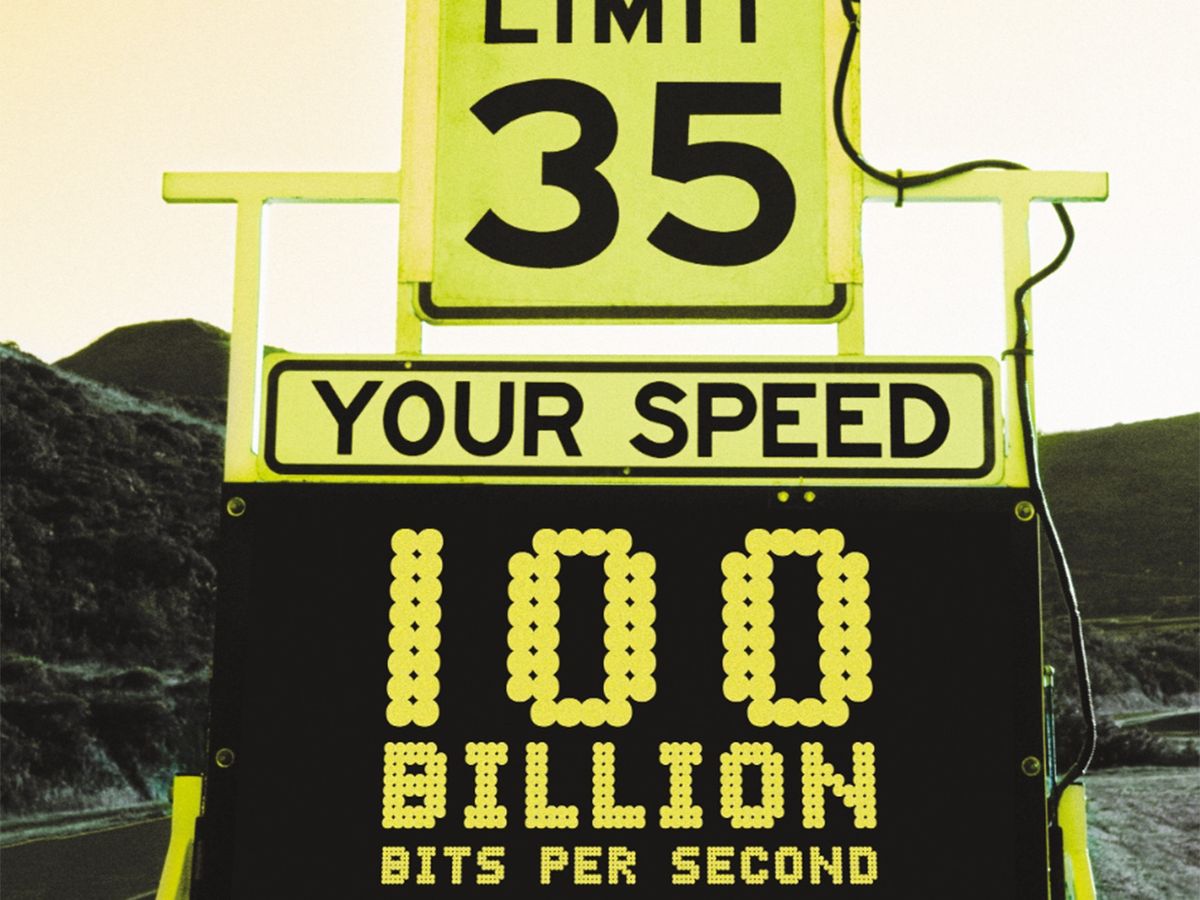

Here's a better way: replace the copper with optical fiber and the electrons with photons. That's the promise of silicon photonics: affordable optical communications for everything. It will let manufacturers build optical components using the same semiconductor equipment and methods they use now for ordinary integrated circuits, thereby dramatically lowering the cost of photonics. Meanwhile, the performance gains will be significant: integrated onto a silicon chip, an optical transceiver could send and receive data at 10 billion or even 100 billion bits per second.

That kind of bandwidth, in turn, will dramatically alter the ways we use computers. With optical interconnects in and around our desktop computers and servers, we'll download movies in seconds rather than hours and conduct lightning-fast searches through gigabytes of image, audio, or text data. Multiple simultaneous streams of video arriving on our PCs will open up new applications in remote monitoring and surveillance, teleconferencing, and entertainment.

This quick and efficient photon-based means of moving and manipulating vast data files is already proving itself in our laboratory at Intel Corp., in Santa Clara, Calif., as well as in several others around the world. When or whether it will find its way into ordinary PCs depends not just on building individual optical components from silicon--a huge challenge, to be sure--but also on integrating and assembling these devices so that they're competitive with existing optical products. But if recent research results are any gauge, we are well on the way.

The core of the Internet and long-haul telecom links made the switch to fiber optics long ago. A single fiber strand can now carry up to one trillion bits of data per second, enough to transmit a phone call from every resident of New York City simultaneously. In theory, you could push fiber up to 150 trillion bits per second--a rate that would deliver the text of all the books in the U.S. Library of Congress in about a second.

Unlike electrical signals on copper, optical signals can travel tens of kilometers with little distortion or attenuation. You can also pack dozens of channels of high-speed data onto a single fiber, separating the channels by wavelength, a technique called wavelength-division multiplexing. Today, 40 separate signals, each running at 10 gigabits per second, can be squeezed onto a hair-thin fiber.
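To make the wavelength-division arithmetic concrete, here is a minimal sketch of a 40-channel grid. It assumes the ITU-T G.694.1 style of grid (channel centers at 193.1 THz plus multiples of 100 GHz) and a range of channel indices chosen to sit roughly in the C band; the article does not say which grid or band is used.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum, m/s


def itu_channels(first_n, count, spacing_thz=0.1, anchor_thz=193.1):
    """Channel centers f = anchor + n * spacing, as (frequency_thz, wavelength_nm)."""
    chans = []
    for n in range(first_n, first_n + count):
        f_thz = anchor_thz + n * spacing_thz
        wavelength_nm = C_M_PER_S / (f_thz * 1e12) * 1e9
        chans.append((f_thz, wavelength_nm))
    return chans


# Assumed: 40 channels from n = -11 (192.0 THz) to n = 28 (195.9 THz).
channels = itu_channels(first_n=-11, count=40)
aggregate_gbps = len(channels) * 10  # each channel carries 10 Gb/s, per the text

print(len(channels), "channels,", aggregate_gbps, "Gb/s on one fiber")
print(f"{channels[-1][1]:.1f} nm to {channels[0][1]:.1f} nm")
```

Forty channels at 10 Gb/s each give 400 Gb/s on a single strand, all packed into a band only about 31 nm wide.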

Inside your PC, though, it's copper all the way. In fact, more than 99 percent of the world's interconnects reside in and around PCs and servers, where optical communication is nowhere to be found.

Why? Because photonic components are expensive. Today's devices are specialized components made from indium phosphide, lithium niobate, and other exotic materials that can't be integrated onto silicon chips. That makes their assembly much more complex than the assembly of ordinary electronics, because the paths that the light travels must be painstakingly aligned to micrometer precision. In a sense, the photonics industry is where the electronics industry was a half century ago, before the breakthrough of the integrated circuit.

The only way for photonics to move into the mass market is to introduce integration, high-volume manufacturing, and low-cost assembly--that is, to "siliconize" photonics. By that we mean integrating several different optical devices onto one silicon chip, rather than separately assembling each from exotic materials. In our lab, we have been developing all the photonic devices needed for optical communications, using the same complementary metal oxide semiconductor (CMOS) manufacturing techniques that the world's chip makers now use to fabricate tens of millions of microprocessors and memory chips each year.

To understand how optical data might one day travel through silicon in your computer, it helps to know how it travels over optical fiber today. First, a computer sends regular electrical data to an optical transmitter, where the signal is converted into pulses of light. The transmitter contains a laser and an electrical driver, which uses the source data to modulate the laser beam, turning it on and off to generate 1s and 0s.

Imprinted with the data, the beam travels through the glass fiber, encountering switches at various junctures that route the data to different destinations. If the data must travel more than about 100 kilometers, an optical amplifier boosts the signal. At the destination, a photodetector reads and converts the data encoded in the photons back into electrical data.
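The transmit, propagate, amplify, and detect chain just described can be sketched as a toy on-off-keyed link. This is a minimal model rather than a real link budget: the launch power, the 0.2 dB/km fiber loss (a typical figure for silica fiber near 1550 nm), the amplifier gain, and the detector threshold are all assumed values, and noise, dispersion, and switching are ignored.

```python
# Toy on-off-keyed (OOK) optical link. All parameters are illustrative assumptions.
LAUNCH_POWER_MW = 1.0        # laser "on" power coupled into the fiber
FIBER_LOSS_DB_PER_KM = 0.2   # typical order of magnitude for silica fiber near 1550 nm
AMP_GAIN_DB = 20.0           # each optical amplifier restores one span's loss
AMP_SPACING_KM = 100.0       # an amplifier roughly every 100 km, as in the text


def transmit(bits):
    """Electrical driver modulates the laser: light on for 1, off for 0."""
    return [LAUNCH_POWER_MW if b else 0.0 for b in bits]


def propagate(powers_mw, distance_km):
    """Attenuate along the fiber, adding gain for each amplifier passed."""
    n_amps = int(distance_km // AMP_SPACING_KM)
    net_db = n_amps * AMP_GAIN_DB - FIBER_LOSS_DB_PER_KM * distance_km
    scale = 10 ** (net_db / 10)
    return [p * scale for p in powers_mw]


def detect(powers_mw, threshold_mw):
    """Photodetector plus decision circuit: power above threshold reads as 1."""
    return [1 if p > threshold_mw else 0 for p in powers_mw]


bits = [1, 0, 1, 1, 0, 0, 1]
received = propagate(transmit(bits), distance_km=250.0)
print(detect(received, threshold_mw=0.01))
```

Over 250 km the fiber removes 50 dB and two amplifiers restore 40 dB, so the "on" level arrives at 0.1 mW, still well above the assumed threshold.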

Similar techniques could someday allow us to collapse the dozens of copper conductors that currently carry data between processors and memory chips into a single photonic link. To do that cheaply, though, you need to figure out how to render the optical components--the laser, the modulator, the photodetector, and so on--in silicon [see illustration, "Moving Data With Light"].

Until very recently, silicon had not been considered a good candidate for optical communications. Just three years ago, an article in this magazine carried the memorable quote, "If God wanted ordinary silicon to efficiently emit light, he would not have given us gallium arsenide." [See IEEE Spectrum, "Linking With Light," August 2002.]

Such skepticism is not unfounded. It's hard to make silicon emit light efficiently. In contrast, materials such as indium phosphide readily emit light; applying a voltage temporarily elevates the energy of electrons in the material's crystal lattice from one energy level, known as the valence band, to a higher one, called the conduction band. When an electron returns to the valence band, it releases energy in the form of a photon. In silicon, though, an electron cannot easily make that return trip by emitting a photon alone, because silicon has what physicists call an indirect band gap; instead, the electrons tend to release their energy as heat, in the form of lattice vibrations.

What's more, silicon lacks a strong electro-optic effect, the change in the speed at which light travels through a material when an electric field is applied to it. That shortcoming means it's not very good at modulating a laser beam. Finally, silicon is poor at photodetection (converting photons into electrons) at the infrared wavelengths commonly used for optical communications.

Despite silicon's shortcomings, researchers have been studying silicon photonics for more than 20 years, starting with Richard Soref's pioneering work in the mid-1980s at the Air Force Research Laboratory. Since then, there have been a host of silicon photonics breakthroughs at Cornell University, the Massachusetts Institute of Technology, the University of California at Los Angeles, the University of Catania in Sicily, the University of Surrey, IBM, Intel, STMicroelectronics, and elsewhere [for a discussion of the photonics work in Catania, see "Light From Silicon," in this issue]. To date, though, none of the silicon-based devices developed can manipulate and control light as well as existing commercial optical devices made from such nontraditional semiconductors as indium phosphide and gallium nitride.

To siliconize photonics, you need six basic building blocks.

An inexpensive light source.

Devices that route, split, and direct light on the silicon chip.

A modulator to encode or modulate data into the optical signal.

A photodetector to convert the optical signal back into electrical bits.

Low-cost, high-volume assembly methods.

Supporting electronics for intelligence and photonics control.

It's a tall order. Nevertheless, we at Intel's Photonics Technology Lab have been working on these building blocks for several years. One of our latest achievements, announced last February, is the world's first continuous-wave all-silicon laser, which is based on the Raman scattering effect. Named for the Indian physicist Chandrasekhara Venkata Raman, who first described it in 1928, this effect causes a small fraction of the light passing through a material to scatter at longer or shorter wavelengths.
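The wavelength shift in Raman scattering is fixed by the energy of the material's lattice vibrations. A minimal sketch, assuming silicon's well-known Raman shift of about 520 cm^-1 (roughly 15.6 THz) and a hypothetical 1550-nm pump, computes where the longer-wavelength (Stokes) and shorter-wavelength (anti-Stokes) light appears.

```python
SILICON_RAMAN_SHIFT_CM1 = 520.0  # Si optical-phonon Raman shift, about 15.6 THz


def raman_lines_nm(pump_nm, shift_cm1=SILICON_RAMAN_SHIFT_CM1):
    """Return (stokes_nm, anti_stokes_nm) for a given pump wavelength.

    Works in wavenumbers (cm^-1): a scattered photon loses (Stokes) or
    gains (anti-Stokes) one lattice vibration's worth of energy.
    """
    pump_cm1 = 1e7 / pump_nm  # convert nm to cm^-1
    stokes_nm = 1e7 / (pump_cm1 - shift_cm1)
    anti_stokes_nm = 1e7 / (pump_cm1 + shift_cm1)
    return stokes_nm, anti_stokes_nm


stokes, anti = raman_lines_nm(1550.0)  # assumed pump wavelength
print(f"Stokes: {stokes:.0f} nm, anti-Stokes: {anti:.0f} nm")
```

Under these assumptions a 1550-nm pump produces Stokes light near 1686 nm; in a Raman amplifier or laser, it is this longer-wavelength beam that grows.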

Raman scattering is used today, for example, to boost signals traveling through long stretches of glass fiber. It allows light energy to be transferred from a strong pump beam into a weaker data beam. Most long-distance telephone calls today benefit from Raman amplification.

Typically, a Raman amplifier requires kilometers of fiber to produce a useful amount of amplification, because glass exhibits very weak scattering. Silicon, though, has a crystal structure, so its Raman scattering is more than 10 000 times as strong as that of ordinary glass fiber. In other words, you could achieve the same amplification in a centimeter-square chip that you'd get in kilometers of glass fiber.

In fact, so intense is the light amplification in silicon that it sets the stage for creating a laser. To build a Raman laser in silicon, you first need to create a conduit, also known as a waveguide, for the light beam. This can be done using standard CMOS techniques to etch a ridge or channel into a silicon wafer. In any waveguide, some light is lost through imperfections, surface roughness, and absorption by the material. The trick, of course, is to ensure that the amplification provided by the Raman effect exceeds the loss in the waveguide.

In mid-2004, we discovered that increasing the pump power beyond a certain point failed to increase the Raman amplification and eventually even reduced it. The culprit was a process called two-photon absorption, which caused the silicon to absorb a fraction of the pump beam's photons and release free electrons. Almost immediately after we turned on the pump laser, a cloud of free electrons built up in the waveguide, absorbing some of the pump and signal beams and killing the amplification. The stronger the pump beam, the more electrons created and the more photons lost.

Intel researchers found a way to flush out the extra electrons by sandwiching the waveguide within a device called a PIN diode; PIN stands for p-type, intrinsic, n-type, the three regions of the device. When a voltage is applied, the free electrons move toward the diode's positively charged side (and the holes toward its negative side); the diode effectively acts like a vacuum cleaner, sweeping the free carriers from the path of the light. Using the PIN waveguide, we demonstrated continuous amplification of a stream of optical bits, more than doubling its original power.
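Why more pump power can mean less gain, and why sweeping out carriers helps, can be seen in a toy model: Raman gain grows linearly with pump intensity, while the free carriers created by two-photon absorption grow with its square. The sketch below treats the PIN diode's effect as a shorter free-carrier lifetime. Every constant, including the 10-ns and 1-ns lifetimes, is an assumed order-of-magnitude value for illustration, not data from Intel's devices.

```python
# Toy model of Raman gain limited by two-photon absorption (TPA) and the
# free carriers it creates. All constants are assumed, order-of-magnitude
# values for a silicon waveguide near 1550 nm, not measured device data.
G_RAMAN = 20e-9        # Raman gain coefficient, cm/W (about 20 cm/GW)
BETA_TPA = 0.7e-9      # TPA coefficient, cm/W
SIGMA_FCA = 1.45e-17   # free-carrier absorption cross-section, cm^2
ALPHA_LIN = 0.1        # linear waveguide loss, 1/cm (about 0.4 dB/cm)
PHOTON_J = 6.626e-34 * 2.998e8 / 1550e-9  # energy of one pump photon, J


def net_gain_per_cm(pump_w_per_cm2, carrier_lifetime_s):
    """Small-signal net gain (1/cm) seen by the signal beam."""
    i = pump_w_per_cm2
    raman = G_RAMAN * i
    cross_tpa = 2 * BETA_TPA * i  # a signal photon absorbed together with a pump photon
    # Pump TPA makes electron-hole pairs at beta*I^2/(2*h*nu) per cm^3 per s;
    # in steady state the density is that rate times the carrier lifetime.
    carriers_cm3 = BETA_TPA * i**2 * carrier_lifetime_s / (2 * PHOTON_J)
    free_carrier_loss = SIGMA_FCA * carriers_cm3
    return raman - cross_tpa - free_carrier_loss - ALPHA_LIN


def peak_gain(carrier_lifetime_s):
    """Best net gain over pump intensities from 0 to 500 MW/cm^2."""
    return max(net_gain_per_cm(k * 1e6, carrier_lifetime_s) for k in range(501))


for tau in (10e-9, 1e-9):  # hypothetical lifetimes without and with carrier sweep-out
    print(f"lifetime {tau * 1e9:.0f} ns: peak net gain {peak_gain(tau):.2f} per cm")
```

With the longer lifetime, net gain peaks at a modest pump level and then turns negative as the pump keeps rising; cutting the lifetime tenfold pushes the peak to a much higher pump level and a much larger gain.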

Once we had the amplification, we created the silicon laser by coating the ends of the PIN waveguide with specially designed mirrors. We make these dielectric mirrors by carefully stacking alternating layers of nonconducting materials with different refractive indices, so that the light waves reflected at each interface combine in phase and intensify. Because that condition depends on the layer thicknesses relative to the wavelength, the mirrors can also reflect light at certain wavelengths while allowing other wavelengths to pass through.
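How reflectance builds up as layers are added can be sketched with the textbook result for an ideal quarter-wave stack at its design wavelength and normal incidence. The layer materials and indices below (silicon at 3.48, SiO2 at 1.45, and a hypothetical 2.0-index layer) are assumptions for illustration, not the composition of Intel's mirrors.

```python
def quarter_wave_stack_reflectance(n_in, n_out, n_first, n_second, pairs):
    """Normal-incidence reflectance of an ideal quarter-wave dielectric stack.

    Layers alternate n_first, n_second, ... starting on the incident side and
    are lossless. At the design wavelength a quarter-wave layer of index n
    turns the admittance Y of whatever lies behind it into n**2 / Y, so each
    (first, second) pair multiplies Y by (n_first / n_second)**2.
    """
    y = n_out * (n_first / n_second) ** (2 * pairs)
    r = (n_in - y) / (n_in + y)
    return r * r


# Assumed example: light inside silicon (n = 3.48) hitting a mirror with air
# behind it; SiO2 (n = 1.45) against the silicon, then a hypothetical n = 2.0 layer.
for pairs in (0, 2, 4, 8):
    r = quarter_wave_stack_reflectance(3.48, 1.0, 1.45, 2.0, pairs)
    print(f"{pairs} pairs: R = {r:.3f}")
```

With no coating, the bare silicon-to-air facet reflects about 31 percent; each added pair pushes reflectance higher, to above 99 percent at eight pairs. Away from the design wavelength the partial reflections no longer add in phase, which is what makes such mirrors wavelength-selective.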

With the Raman amplifier between the two dielectric mirrors, we had the basic configuration needed for a laser. After all, laser stands for "light amplification by stimulated emission of radiation," and that's what was going on in our device. Photons that entered were multiplied in number by the Raman amplifier. Meanwhile, as the light waves bounced back and forth between the two mirrors, they stimulated the emission of yet more photons through Raman scattering.

The photons stimulated in this way were in phase with the others in the amplifier, so the beam generated was coherent. Once the round-trip gain of photons in the cavity exceeded the round-trip loss, we observed a steady beam of laser light exiting the silicon chip. We had built the first continuous silicon laser [see illustration, "Let There Be Light"].
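The threshold condition in that last paragraph (round-trip gain exceeding round-trip loss) can be written as R1 * R2 * exp(2 * (g - alpha) * L) > 1, where g is the gain per unit length, alpha the waveguide loss, L the cavity length, and R1, R2 the mirror reflectances. A minimal sketch, with a hypothetical cavity whose length, loss, and mirror values are assumptions:

```python
import math


def loss_per_cm(loss_db_per_cm):
    """Convert a power loss in dB/cm into an exponential coefficient in 1/cm."""
    return loss_db_per_cm * math.log(10) / 10


def threshold_gain_per_cm(alpha_per_cm, length_cm, r1, r2):
    """Gain at which R1 * R2 * exp(2 * (g - alpha) * L) equals exactly 1."""
    return alpha_per_cm + math.log(1 / (r1 * r2)) / (2 * length_cm)


def lases(gain_per_cm, alpha_per_cm, length_cm, r1, r2):
    """True if light grows after one full round trip through the cavity."""
    round_trip = r1 * r2 * math.exp(2 * (gain_per_cm - alpha_per_cm) * length_cm)
    return round_trip > 1.0


# Hypothetical cavity: 4 cm long, 0.35 dB/cm loss, mirrors of 90% and 70%.
alpha = loss_per_cm(0.35)
g_th = threshold_gain_per_cm(alpha, 4.0, 0.9, 0.7)
print(f"threshold gain: {g_th:.3f} per cm")
print(lases(g_th * 1.1, alpha, 4.0, 0.9, 0.7), lases(g_th * 0.9, alpha, 4.0, 0.9, 0.7))
```

Better mirrors lower the threshold: with perfect reflectors the required gain would fall to the waveguide loss alone.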

Beyond building the light source and moving light through the chip, you need a way to modulate the light beam with data. The simplest option is switching the laser on and off, a technique called direct modulation. An alternative, called external modulation, is analogous to waving your hand in front of a flashlight beam: blocking the beam of light represents a logical 0; letting it pass represents a 1. The only difference is that in external modulation the beam is always on.

For data rates of 10 Gb/s or higher and traveling distances greater than tens of kilometers, this difference is critical. Each time a semiconductor laser is turned on, it "chirps." The initial surge of current through the laser changes its optical properties, causing an undesired shift in wavelength. A similar phenomenon occurs when you turn on a flashlight: the light changes quickly from orange to yellow to white as the bulb filament heats up.

If the chirped beam is sent through an optical fiber, its different wavelengths travel at slightly different speeds, an effect called dispersion, so each pulse spreads out and blurs into its neighbors. When a lot of data is traveling quickly, this distortion causes errors in the data.
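A back-of-the-envelope estimate shows why chirp matters at 10 Gb/s. The sketch assumes a dispersion of about 17 ps per nanometer per kilometer (a typical figure for standard single-mode fiber near 1550 nm) and a hypothetical chirp of 0.1 nm; the spread is simply dispersion times distance times spectral width.

```python
def dispersion_spread_ps(d_ps_per_nm_km, length_km, spectral_width_nm):
    """Arrival-time spread between the chirped wavelength components, in ps."""
    return d_ps_per_nm_km * length_km * spectral_width_nm


def bit_period_ps(rate_gbps):
    """Duration of one bit slot, in ps."""
    return 1000.0 / rate_gbps


spread = dispersion_spread_ps(17.0, 100.0, 0.1)  # assumed fiber and chirp
period = bit_period_ps(10.0)
print(f"spread {spread:.0f} ps vs bit period {period:.0f} ps")
```

Under these assumptions, 100 km of fiber smears each pulse by about 170 ps, longer than the 100-ps bit slot at 10 Gb/s, so neighboring bits overlap.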

With an external modulator, by contrast, the laser beam remains stable, continuous, and chirp-free. The light enters the modulator, which shutters the beam rapidly to produce a data stream; even 10-Gb/s data can be sent up to about 100 km with no significant distortion. Fast modulators are typically made from lithium niobate, which has a strong electro-optic effect--that is, when an electric field is applied to it, it changes the speed at which light travels through the material.

You start by splitting the laser beam in two and then applying an electric field to one beam. If the speed changes enough to delay the beam by half of one wavelength, that beam will be out of phase with its mate. When the beams recombine, they will interfere with each other and cancel out [see illustration, "Encoding Photons With Data"].

If, on the other hand, no voltage is applied, the beams remain in phase, and they add constructively when recombined. Encoding the beam with 1s and 0s, then, means either making the beams cancel (0) or keeping them in phase (1).
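For an ideal, lossless interferometer with a 50/50 split, the recombined output power follows cos^2 of half the phase difference between the two arms. The sketch below encodes bits that way; it ignores real-world effects such as imperfect splitting and insertion loss.

```python
import math


def mz_output(phase_shift_rad, input_power=1.0):
    """Ideal Mach-Zehnder output: P_in * cos^2(delta_phi / 2).

    In phase (0) gives full power; a half-wave delay (pi) cancels completely.
    """
    return input_power * math.cos(phase_shift_rad / 2) ** 2


def encode(bits):
    """Drive one arm: no phase shift for a 1, a pi shift for a 0."""
    return [mz_output(0.0 if b else math.pi) for b in bits]


print([round(p, 3) for p in encode([1, 0, 1, 1, 0])])
```

Because the output varies smoothly with phase, partial phase shifts give partial extinction, which is why the modulator must deliver close to a full half-wave delay for a clean 0.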

A silicon-based modulator, as mentioned before, has the disadvantage of lacking this electro-optic effect. To get around this drawback, we devised a way to selectively inject charge carriers (electrons or holes) into the silicon waveguide as the light beam passes through. Because of a phenomenon known as the free carrier plasma dispersion effect, the accumulated charges change the silicon's refractive index and thus the speed at which light travels through it. The silicon modulator splits the beam in two, just like the lithium niobate modulator. However, instead of the electro-optic effect, it's the presence or absence of electrons and holes that determines the phases of the beams and whether they combine to produce a 1 or a 0.
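How much index change free carriers actually produce can be estimated with the widely used empirical fit by Soref and Bennett for silicon near 1550 nm. The sketch below then computes the arm length that would give the half-wave (pi) phase shift. The carrier densities are assumed, and the model assumes the carriers fill the whole optical mode; real devices, where charge sits in a thin layer, need considerably longer phase shifters.

```python
def plasma_dispersion_delta_n(delta_ne_cm3, delta_nh_cm3):
    """Refractive-index change of silicon near 1550 nm (Soref-Bennett fit).

    Electron and hole densities are in cm^-3; adding carriers lowers the index.
    """
    return -(8.8e-22 * delta_ne_cm3 + 8.5e-18 * delta_nh_cm3 ** 0.8)


def length_for_pi_shift_m(delta_n, wavelength_m=1550e-9):
    """Arm length giving a half-wave (pi) phase shift: L = lambda / (2 |dn|)."""
    return wavelength_m / (2 * abs(delta_n))


dn = plasma_dispersion_delta_n(1e18, 1e18)  # assumed carrier densities
print(f"dn = {dn:.2e}, L_pi = {length_for_pi_shift_m(dn) * 1e6:.0f} um")
```

An index change of a few parts in a thousand is small, so the phase shift has to be accumulated over hundreds of micrometers or more, compared with the much stronger response that lithium niobate's electro-optic effect provides.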

The trick is to get those electrons and holes into and out of the beam's path fast enough to reach gigahertz data rates. Previous schemes injected the electrons and holes into the same region of the waveguide. When the power was turned off, the free electrons and holes faded away very slowly; the maximum speed was about 20 megahertz.

Intel's silicon modulator uses a transistorlike device rather than a diode both to inject and to remove the charges. Electrons and holes are inserted on opposite sides of an oxide layer at the heart of the waveguide, where the light is most intense. Rather than waiting for the charges to fade away, the transistor structure pulls them out as rapidly as they go in. To date, this silicon modulator has encoded data at speeds of up to 10 Gb/s--fast enough to rival conventional optical communications systems in use today.
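The speed limit in the previous two paragraphs can be estimated by treating carrier decay as a single exponential with time constant tau, whose 3-dB bandwidth is 1/(2 * pi * tau). The sketch assumes an 8-ns recombination lifetime and the common rule of thumb that non-return-to-zero data needs analog bandwidth of roughly 0.7 times the bit rate; both figures are assumptions, not numbers from Intel's work.

```python
import math


def single_pole_bandwidth_hz(time_constant_s):
    """3-dB bandwidth of a response that decays as exp(-t / tau)."""
    return 1.0 / (2 * math.pi * time_constant_s)


def required_time_constant_s(bit_rate_bps, bandwidth_per_bit_rate=0.7):
    """Time constant needed for a given bit rate.

    The 0.7 bandwidth-to-bit-rate factor is a rule-of-thumb assumption.
    """
    return 1.0 / (2 * math.pi * bandwidth_per_bit_rate * bit_rate_bps)


slow = single_pole_bandwidth_hz(8e-9)  # assumed ~8 ns recombination lifetime
print(f"recombination-limited: {slow / 1e6:.0f} MHz")
print(f"10 Gb/s needs tau <= {required_time_constant_s(10e9) * 1e12:.0f} ps")
```

Waiting for recombination caps the response in the tens of megahertz, while 10 Gb/s needs the charge moved in a few tens of picoseconds, which is why the carriers must be actively pulled out rather than left to fade.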

Once the beam is flowing through the waveguide, photodetectors are used to collect the photons and convert them into electrical signals. They can also be used to monitor the optical beam's properties--power, wavelength, and so on--and feed this information back to the transmitter, so that it can optimize the beam.

Silicon absorbs visible light well, which is why it appears opaque to the naked eye. At the longer infrared wavelengths used for communications, however, light passes through silicon with little or no absorption, so those photons cannot be efficiently collected and detected.

This problem can be overcome by adding germanium to the silicon waveguides. Germanium absorbs infrared radiation at longer wavelengths than does silicon. So using an alloy of silicon and germanium in part of the waveguide creates a region where infrared photons can be absorbed. Our research shows that silicon germanium can achieve fast and efficient infrared photodetection at 850 nanometers and 1310 nm, the communications wavelengths most commonly used in enterprise networks today.
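A crude way to see why germanium helps is to compare absorption cutoffs: a photon can be absorbed across the band gap only if its wavelength is shorter than hc/E_g. The sketch uses textbook room-temperature band gaps (1.12 eV for silicon, 0.66 eV for germanium). It is only a band-edge check; it ignores how weakly silicon absorbs just short of its cutoff, and it ignores germanium's direct transition near 0.8 eV.

```python
HC_EV_NM = 1239.84  # Planck's constant times the speed of light, in eV*nm

# Room-temperature band gaps (textbook values).
BANDGAP_EV = {"Si": 1.12, "Ge": 0.66}


def cutoff_nm(bandgap_ev):
    """Longest wavelength whose photons carry at least the band-gap energy."""
    return HC_EV_NM / bandgap_ev


def can_absorb(material, wavelength_nm):
    """Band-edge check only: True if photons exceed the band-gap energy."""
    return wavelength_nm < cutoff_nm(BANDGAP_EV[material])


for wl in (850, 1310, 1550):
    print(wl, "nm:", {m: can_absorb(m, wl) for m in BANDGAP_EV})
```

Silicon's cutoff falls near 1107 nm, so 1310-nm photons slip straight through it, while germanium's cutoff near 1880 nm leaves both 850 nm and 1310 nm well within reach.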

One step that's often overlooked in discussions of optical devices is assembly. But this step can account for as much as a third of the cost of the finished product. Integrating all the devices onto a single chip will help reduce costs significantly; the fewer discrete devices, the fewer assembly steps required. We're not yet at the point of full integration, however, and in the meantime, we still need a way to assemble and connect the silicon optical devices to external light emitters and optical fibers.

Optical assembly has long been much more challenging than electronics assembly. First, the surfaces where light enters and exits each component must be polished to near perfection. Each of these mirrorlike facets must then be coated to prevent reflections, just as sunglasses are coated to reduce glare.

With silicon, some of these extra assembly steps can be greatly simplified by making them part of the wafer fabrication process. For example, the ends of the chips can be etched away to a mirror-smooth finish using a procedure known as deep silicon etching, first developed for making microelectromechanical systems. This smooth facet can then be coated with a dielectric layer to produce an antireflective coating.
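What that antireflective layer has to achieve can be sketched with the standard single-layer quarter-wave result: at the design wavelength, reflection vanishes when the coating index equals the square root of the product of the indices on either side, and the layer is a quarter wavelength thick inside the coating. The silicon index of 3.48, the 1550-nm design wavelength, and the SiO2 comparison are assumptions for illustration.

```python
import math


def fresnel_reflectance(n1, n2):
    """Normal-incidence power reflectance of a bare interface."""
    return ((n1 - n2) / (n1 + n2)) ** 2


def single_layer_ar(n_in, n_sub, n_coat):
    """Reflectance at the design wavelength with one quarter-wave coating.

    The coating transforms the substrate admittance n_sub into n_coat**2 / n_sub;
    the result is the same whichever side the light arrives from.
    """
    y = n_coat ** 2 / n_sub
    return ((n_in - y) / (n_in + y)) ** 2


N_AIR, N_SI = 1.0, 3.48  # assumed indices near 1550 nm
wavelength_nm = 1550.0   # assumed design wavelength

ideal_index = math.sqrt(N_AIR * N_SI)
thickness_nm = wavelength_nm / (4 * ideal_index)
print(f"bare facet: {fresnel_reflectance(N_AIR, N_SI):.1%}")
print(f"ideal coating: n = {ideal_index:.2f}, {thickness_nm:.0f} nm thick")
print(f"with SiO2 (n=1.45) instead: {single_layer_ar(N_AIR, N_SI, 1.45):.1%}")
```

A bare etched facet reflects roughly 31 percent of the light; even an imperfectly matched single layer cuts that to a few percent, and multilayer coatings can do better still.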

Because a fiber and a waveguide are different sizes, a third device, typically a taper, is needed to connect the two. The taper acts like a funnel, taking light from a larger optical fiber or laser and feeding it into a smaller silicon waveguide; it works in the opposite direction as well. Obviously, you don't want to lose light in the process, which can be tricky when hooking up a waveguide 1 micrometer across to an optical fiber whose light-carrying core is about 10 micrometers across.
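The size mismatch can be quantified with the standard overlap formula for two aligned, circular Gaussian beams, a common approximation for fiber and waveguide modes. The mode radii below (5 micrometers for the fiber, 0.5 micrometer for the silicon waveguide) are assumptions, and real silicon waveguide modes are not circular.

```python
import math


def gaussian_overlap(w1_um, w2_um):
    """Power coupling between two aligned circular Gaussian modes of radii w1, w2."""
    return (2 * w1_um * w2_um / (w1_um ** 2 + w2_um ** 2)) ** 2


def loss_db(efficiency):
    """Coupling loss in dB for a given power-coupling efficiency."""
    return 10 * math.log10(1 / efficiency)


FIBER_MODE_RADIUS_UM = 5.0      # assumed, about a 10-um mode diameter
WAVEGUIDE_MODE_RADIUS_UM = 0.5  # assumed, for a waveguide about 1 um across

butt = gaussian_overlap(WAVEGUIDE_MODE_RADIUS_UM, FIBER_MODE_RADIUS_UM)
print(f"direct butt coupling: {butt:.1%} ({loss_db(butt):.1f} dB loss)")
# An ideal taper expands the waveguide mode until it matches the fiber mode.
tapered = gaussian_overlap(FIBER_MODE_RADIUS_UM, FIBER_MODE_RADIUS_UM)
print(f"with matched taper: {tapered:.1%} ({loss_db(tapered):.1f} dB loss)")
```

Butting the two together directly would couple only about 4 percent of the light (roughly 14 dB of loss) under these assumptions, which is the gap a taper is meant to close.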

Connecting optical fibers to optical devices on a chip requires attaching the fiber directly to the chip somehow. One approach we are pursuing is micromachining precise grooves in the chip that are lithographically aligned with the waveguide. Fibers placed in these grooves fall naturally into the proper position. Our research indicates that such passive alignments could lose less than 1 decibel of light as the beam passes from the fiber through the taper and into the waveguide.
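How precise do such grooves need to be? For two identical Gaussian modes shifted sideways by a distance d, the coupled fraction is exp(-(d/w)^2), where w is the mode radius. The sketch inverts that to find the largest offset that fits a loss budget. The 5-micrometer mode radius is an assumption, and this isolates lateral offset alone, not the taper and other losses included in the figure above.

```python
import math


def offset_efficiency(offset_um, mode_radius_um):
    """Coupling between identical Gaussian modes displaced sideways."""
    return math.exp(-((offset_um / mode_radius_um) ** 2))


def max_offset_um(loss_budget_db, mode_radius_um):
    """Largest lateral misalignment that stays within the loss budget."""
    return mode_radius_um * math.sqrt(loss_budget_db * math.log(10) / 10)


w = 5.0  # assumed mode radius at the fiber end of the taper, um
print(f"1 dB budget allows about {max_offset_um(1.0, w):.1f} um offset")
```

A tolerance of a couple of micrometers is within reach of lithographically defined grooves, which is what makes passive alignment plausible.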

To passively couple a laser to a silicon photonic chip, you start by bonding the laser onto the silicon. Silicon etching can be used to produce mirrors, to help align the laser beam to the waveguides. You can also etch posts and stops into the silicon surface, to control the vertical alignment of the laser to the waveguide, and add lithographic marks, to help with horizontal alignment.

The ultimate goal in siliconizing photonics is to integrate many silicon optical devices onto a low-cost silicon substrate using standard CMOS manufacturing techniques. Parts of this scheme already exist. Intel's modulator, for example, relies on the same deposition tools and doping techniques used to mass-produce transistors. Silicon and oxide etching tools, which are also standard in the industry, are used to define optical waveguides. Even the deposition machines needed for silicon-germanium photodetectors are becoming more commonplace in today's state-of-the-art CMOS fab facilities.

What will the path to integration look like? Right now, we are starting to see discrete silicon photonics devices that can operate at 10 Gb/s and perform at levels approaching those of existing optical products. The next step will be boosting the performance of these silicon devices to 40 Gb/s and beyond. In the next few years, we expect to see some individual silicon devices begin to replace the exotic devices used today in communications applications.

The next phase of development is to build a "hybrid" silicon photonic platform using a mix of silicon and nonsilicon devices attached to a silicon substrate. Over time, we will be able to replace the nonsilicon components with silicon devices. This integration could bring about enough of a cost reduction to make these hybrid devices attractive for corporate data centers and high-end servers.

The final phase is to integrate a majority of the devices into silicon and to begin integrating electronics with the photonics--the convergence of communications and computing on a single silicon chip. At that point, we will start to see the emergence of completely new kinds of computing applications and architectures, afforded by the availability of low-cost photonic devices.

Momentum is building in silicon photonics. During the past 12 months, there have been significant leaps in silicon device performance in the areas of modulation and lasing. Government funding in this field has been accelerating, as has the dissemination of research publications. A few optical start-ups have even been choosing silicon as their device platform. All these signs, we believe, point to dramatic changes to come, changes that may one day revolutionize optical communications.

About the Author

Mario Paniccia and Sean Koehl are with Intel Corp.'s Photonics Technology Lab in Santa Clara, Calif. Paniccia, an IEEE senior member, is the lab's director and a senior principal engineer. He earned a Ph.D. in solid-state physics from Purdue University, in West Lafayette, Ind., in 1994. Paniccia has published numerous papers and has 62 patents issued or pending. Koehl is a senior technical marketing engineer and holds a B.S. in applied physics from Purdue.

To Probe Further

For more information about Intel's Photonics Technology Lab, visit the lab's Web site at https://www.intel.com/go/sp.

Recent research papers published by the group include: "A Continuous-Wave Raman Silicon Laser," by H. Rong et al., Nature, 2005, Vol. 433, pp. 725–28; "An All-Silicon Raman Laser," by H. Rong et al., Nature, 2005, Vol. 433, pp. 292–94; and "A High-Speed Silicon Optical Modulator Based on a Metal-Oxide-Semiconductor Capacitor," by A. Liu et al., Nature, 2004, Vol. 427, pp. 615–18.

Useful technical overviews of silicon photonics can be found in Silicon Photonics: An Introduction, by Graham T. Reed and Andrew P. Knights (John Wiley, 2004), and Silicon Photonics, edited by Lorenzo Pavesi and David J. Lockwood (Springer, 2004).