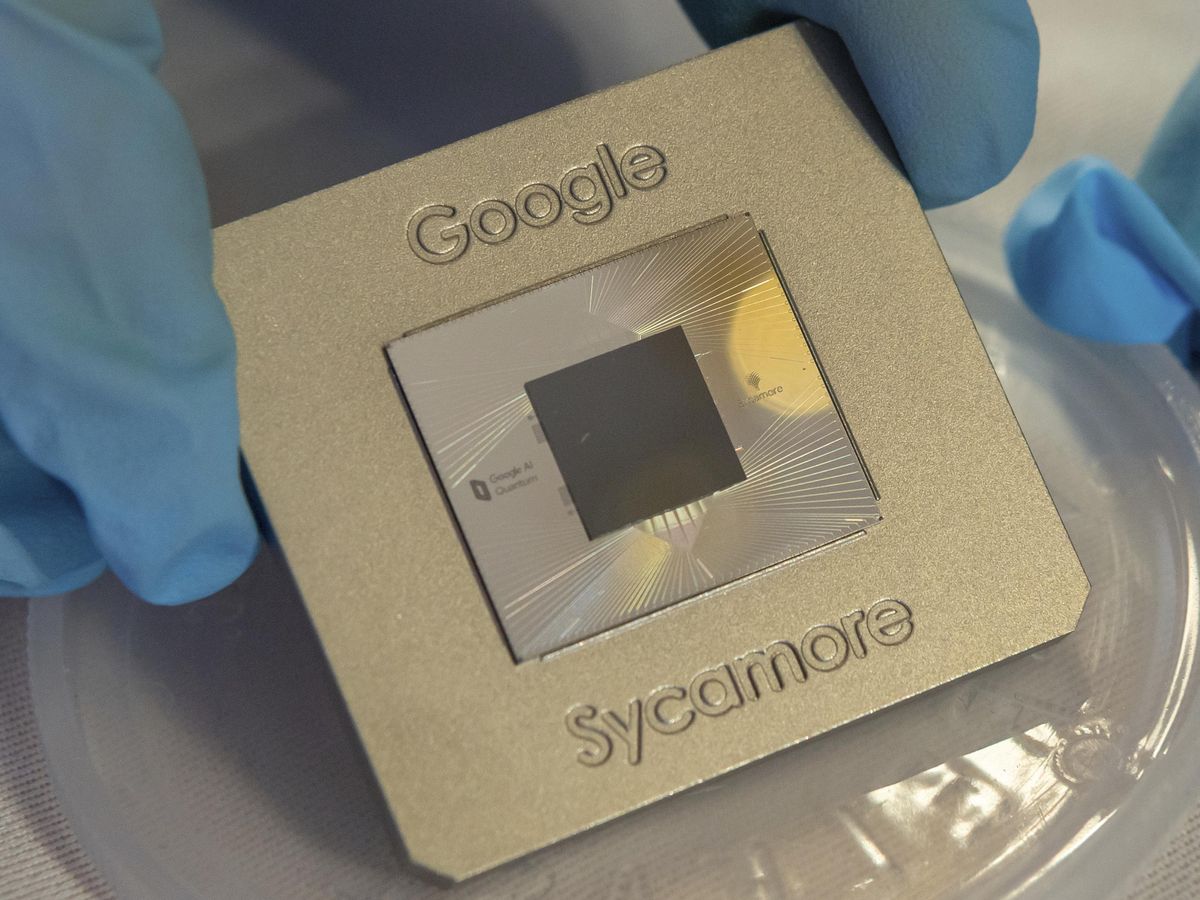

Lately, it seems as though the path to quantum computing has more milestones than there are miles. Judging by headlines, each week brings another big announcement, whether an advance in qubit count or another record-breaking investment. First IBM announced a 127-qubit chip. Then QuEra announced a 256-qubit neutral-atom quantum computer. There's now a new quantum computing behemoth, Quantinuum, formed by the merger of Honeywell Quantum Solutions and Cambridge Quantum. And today, Google's Sycamore team announced another leap toward quantum error correction.

A curmudgeon might argue that quantum computing is like fusion, or any promising tech whose real rewards, if achievable at all, are decades off. The future remains distant, and all the present has for us is smoke, mirrors, and hype.

To rebut the cynic, an optimist might point to the glut of top-tier research being done in academia and industry. If there's news every week, it's a sign that sinking hundreds of millions of dollars into a really hard problem does actually reap rewards.

For a measured perspective on how much quantum computing is actually advancing as a field, we spoke with John Martinis, a professor of physics at the University of California, Santa Barbara, and the former chief architect of Google’s Sycamore.

IEEE Spectrum: So it's been about two years since you unveiled results from Sycamore. In the last few weeks, we've seen announcements of a 127-qubit chip from IBM and a 256-qubit neutral atom quantum computer from QuEra. What kind of progress would you say has actually been made?

John Martinis: Well, clearly, everyone's working hard to build a quantum computer. And it's great that there are all these systems people are working on. There's real progress. But if you go back to one of the points of the quantum supremacy experiment (and something I've been talking about for a few years now), one of the key requirements is low gate errors. I think gate errors are way more important than the number of qubits at this time. It's nice to show that you can make a lot of qubits, but if you don't make them well enough, it's less clear what the advance is. In the long run, if you want to do a complex quantum computation, say with error correction, you need gate errors way below 1%. So it's great that people are building larger systems, but it would be even more important to see data on how well the qubits are working. In this regard, I am impressed with the group in China who reproduced the quantum supremacy results, where they show that they can operate their system well with low errors.

I want to drill down on “scale versus quality,” because I think it's sort of easy for people to understand that 127 qubits is more qubits.

Yes, it's a good advance, but computer companies know all about systems engineering, so you also have to improve reliability by making qubits with lower errors.

So I know that Google, and I believe Chris Monroe's group, have both come up with fault tolerance results this year. Could you talk about any of those results?

I think it's good that these experiments were done. Being able to do error correction is a real advance for the field. Unfortunately, I don't completely agree with calling such experiments fault tolerant, as it makes one think you've solved error correction, when in fact it's just the first step. In the end, you want to do error correction so that the net logical error [rate] is something like 10⁻¹⁰ to 10⁻²⁰, and the experiments done so far are nowhere near telling you yet that that's possible.

Yeah, I think they're like 10⁻³.

It depends how you want to quantify it, but it's not a huge factor. It could be a bit better if you had more qubits, but you would maybe have to architect it in a different way. I don't think it is good for the field to oversell results, making people think that you're almost there. It's progress, and that's great, but there is still a long way to go.

I remember that IBM had, once upon a time, touted their quantum volume as a more appropriate universal benchmark. Do you have thoughts about how people can reasonably compare claims between different groups, even using different kinds of qubits?

Metrics are needed, but it is important to choose them carefully. Quantum volume is a good metric. But is it really possible to expect something as new and complex as a quantum computer system to be characterized by one metric? You know, you can't even characterize your computer, or your cell phone, by one metric. In that case, if there's any single metric, it's the price of the cell phone.

[laughing] Yeah, that's true.

I think it is more realistic at this time to consider a suite of metrics, something that needs to be figured out in the next few years. At this point, building a quantum computer is a systems engineering problem, where you have to get a bunch of components all working well at the same time. Quantum volume is good because it combines several metrics together, but it is not clear they are put together in the best way. And of course if you have a single metric, you tend to optimize to that one metric, which is not necessarily solving the most important systems problems. One of the reasons we did the quantum supremacy experiment was because you had to get everything working well, at the same time, or the experiment would fail.

I mean, from my perspective, really the only thing that's been a reliable benchmark—or that I even get to see—is usually some kind of sampling problem, whether it's boson sampling or Gaussian boson sampling. As you said, it’s trying to see: can you actually get a quantum advantage over these classical computers? And then, of course, you have a really interesting debate about whether you can spoof the result. But there's something happening there. It's not just PR.

Yeah. You're performing a well-defined experiment, and then you directly compare it to a classical calculation. Boson sampling was the first proposal, and then the Google theory group figured out a way to do an analogous experiment with qubits. For boson sampling, there's a nice experiment coming from USTC in China, and there's an interesting debate: some argue the experiment is constructed in such a way that you can compute the results classically, whereas USTC believes there are higher-order correlations that are hard to compute. It's great that scientists are learning more about these metrics through this debate. And it's also been good that various groups have been working on the classical computation part of the Google quantum supremacy experiment. I am still interested to see whether IBM will actually run their algorithm on a supercomputer to find out if it is a practical solution. But the most important result of the quantum supremacy experiment is that we showed there are no additional errors, fundamental or practical, when running a complex quantum computation. So this is good news for the field as we continue to build more powerful machines.

It's interesting, because I think there is a real interplay between theory and experiment when you get to this cutting-edge stuff, where people aren't quite sure where either side stands and both keep advancing.

For classical computers, there has always been good interplay between theory and experiment. But because of the exponential power of a quantum computer, and because the ideas are still new and untested, we are expecting scientists to continue to be quite inventive.

What does the next step look like for quality? You were saying that that's the main roadblock. We are so far from having the kind of fidelity that we need. What is the next step for error correction? What should we be looking for?

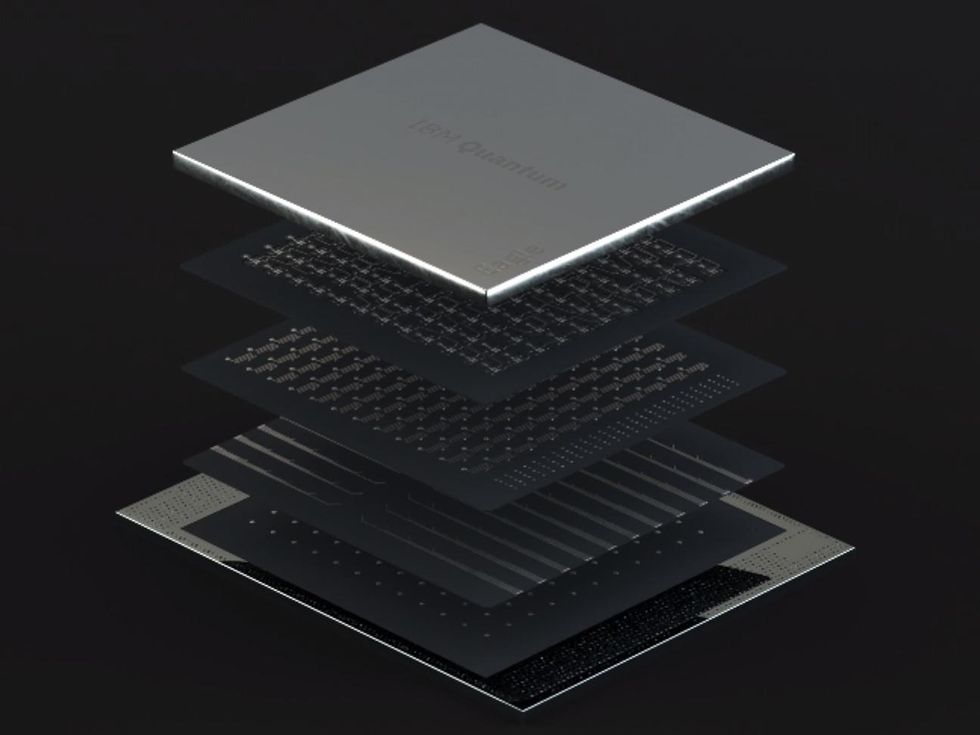

In the last year, Google had a nice paper on error correction for bit flips or phase flips. They understood the experiment well, and discussed what they would have to do for error correction to work well when correcting both bit-flip and phase-flip errors at the same time. It has been clear for some time that the major advance is to improve gate errors, and to build superconducting qubits with better coherence. That's also something that I've been thinking about for a couple of years. I think it's definitely possible, especially with the latest IBM announcement that they were able to build their 127-qubit device with long coherence times throughout the array. So, for example, if you could have this coherence in the more complex architecture of the Google Sycamore processor, you would then have really good gate errors, well below 0.1%. This is of course not easy because of systems engineering issues, but it does show that there is a lot of room for improvement with superconducting qubits.

You were saying that there is a trade-off between the gate coupling control and the coherence time of the qubit. You think we can overcome that trade-off?

Obviously the engineering and the physics push against each other. But I think that can be overcome. I'm pretty optimistic about solving this problem. People know how to make good devices at this time, but we probably need to understand all the physics and constraints better, and to be able to predict the coherence accurately. We need more research to improve the technology readiness level of these devices.

What would you say is the most overlooked potential barrier to overcome? I've written about control chips, the elimination of the chandelier of wires, and getting down to something that's actually going to fit inside your dilution refrigerator.

I have thought about wiring for about five years now, starting at Google. I can't talk about it, but I think there's a very nice solution here. I think this can be built given a focused effort.

Is there anything we haven't talked about that you think is important for people to know about the state of quantum computing?

I think it's a really exciting time to be working on quantum computing, and it's great that so many talented engineers and scientists are now in the field. In the next few years I think there will be more focus on the systems engineering aspects of building a quantum computer. Because testing is an important part of systems engineering, better metrics will have to be developed. The quantum supremacy experiment was interesting as it showed that a powerful quantum computer could be built, and the next step will be to show a computer that is both powerful and useful. Then the field will really take off.

Some kind of standardization.

Yes, this will be an important next step. And I think such a suite of standards will help the business community and investors, as they will be better able to understand what developments are happening.

Not quite a consumer financial protection bureau, but some kind of business protection for investors.

With such a new technology, it is hard to understand how progress is being made. I think we can all work on ways to better communicate how this technology is advancing. I hope this Q&A has helped in this way.


Dan Garisto is a freelance science journalist who covers physics and other physical sciences. His work has appeared in Scientific American, Physics, Symmetry, Undark, and other outlets.