At the end of last year, IBM predicted that by 2017 limited forms of mind reading would “no longer [be] science fiction.” Then again, in 1933 Nikola Tesla said he would soon be able to photograph people’s thoughts.

Is IBM going to be equally wrong?

Maybe not. Surveying leading neurotech experts has turned up some support, albeit limited and carefully qualified, for the company’s prediction. And oddly enough, one reason is that Tesla’s prediction is also coming true, in very limited ways.

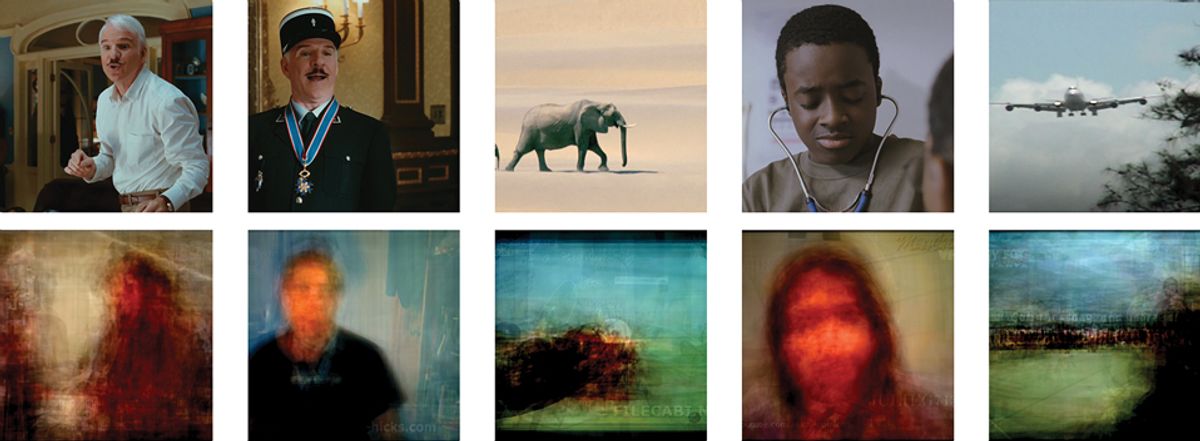

Neuroscientists at the University of California, Berkeley, have used functional magnetic resonance imaging (fMRI) to produce rough representations of images as actually seen by a subject’s visual cortex.

First, researchers created computer models of fMRI signals from each subject’s visual cortex while the subject watched Hollywood movie trailers. Then the scientists recorded visual cortex activity during a second set of clips. They then simulated what the brain “saw” during the second set by running their models on some 5000 hours of online video clips to isolate snippets of images and motions that most closely matched the visual cortex’s activity in each moment.
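The matching step described above can be illustrated with a toy sketch. It is not the Berkeley team's code: it assumes a simple linear model that has already been fitted, uses Pearson correlation as the similarity measure, and works on made-up numbers. The names `predict_activity`, `rank_clips`, `weights`, `library`, and `observed` are hypothetical and chosen only for illustration.

```python
import math

def pearson(a, b):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)

def predict_activity(weights, features):
    """Assumed linear model: each voxel is a weighted sum of clip features."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]

def rank_clips(weights, library, observed, top_k=1):
    """Rank library clips by how well their predicted activity matches the scan."""
    scored = [
        (pearson(predict_activity(weights, feats), observed), name)
        for name, feats in library.items()
    ]
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_k]]

# Made-up model: 4 voxels, 3 visual features per clip (all values hypothetical).
weights = [
    [1.0, 0.2, 0.0],
    [0.1, 1.0, 0.3],
    [0.0, 0.4, 1.0],
    [0.5, 0.5, 0.5],
]

# Stand-in for the large library of online clips.
library = {
    "face_closeup": [0.9, 0.1, 0.2],
    "car_chase": [0.1, 0.8, 0.9],
    "ocean_waves": [0.2, 0.3, 0.1],
}

# Noisy activity "recorded" while the subject watched something like a face.
observed = [0.95, 0.20, 0.30, 0.55]

best = rank_clips(weights, library, observed, top_k=1)
print(best)
```

In this sketch the model never reconstructs an image directly. It only picks the library clips whose predicted brain response looks most like the measured one, which is why the results are rough approximations rather than photographs of thought.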

Lars Kai Hansen, professor of cognitive systems at the Technical University of Denmark, in Copenhagen, says his field has undergone dramatic change over the past 15 years. “I see no reason to doubt that...algorithms will allow us to speak ‘brain’ in much more detail than we can now,” he says.

That said, no one expects cutting-edge scientific laboratories, let alone consumer technology, to be able to decode the brain’s activities in any way that could enable actual mind reading. It’s more that scientists have learned the first few phrases in a very complex language. Worse, the phrases rarely have a fixed meaning and don’t combine to form sentences in a straightforward way.

Geraint Rees, director of the Institute of Cognitive Neuroscience at University College London, has been looking for the equivalent of a Rosetta Stone. “Is looking at red plus drinking a glass of wine equivalent to the sum of looking at red and, separately, drinking a glass of wine? Or is there some kind of nonlinear interaction? We don’t know the rules of those combinations.”

Moreover, says Chris Frith, emeritus professor at the Wellcome Trust Centre for Neuroimaging at University College London, no one has figured out how to read an individual neuron without opening up a skull and attaching a tiny wire to it. Instead, he says, fMRI reads the activity of clusters of hundreds of thousands of neurons. And even the best electroencephalogram (EEG) technology can still resolve activity only down to the level of hundreds of neurons.

That’s enough to do some useful work, though.

In 2009, an Australian company called Emotiv Systems released a commercial EEG unit called the EPOC. A US $299 fitted wireless headset, the EPOC reads faint neural signals through its 14 scalp electrodes, which envelop the head like plastic tentacles. According to Emotiv’s website, the user’s computer, running Emotiv’s proprietary signal processing software, then translates the headset’s data stream into meaningful information about activity in various brain regions.

Scores of videos on YouTube show EPOC users training their brains to control a video game, keyboard, robot, or wheelchair. But this is a long way from—to steal Tesla’s term—thought photography. “Learning to control things using our brain activity is not the same as mind reading,” Frith says. “I agree that control via a brain is no longer science fiction. But it will not lead to thought monitoring.”

Ken Norman, principal investigator at Princeton’s Computational Memory Lab, says people should be “superskeptical” about any claims of mind-reading technology.

“Brain data is noisy,” Norman says. “The cognitive states we’re trying to detect are complicated. And we’re measuring all sorts of things in the brain that have nothing to do with the thought we want to decode.”

He adds, though, that devices like the EPOC could still find some amazing applications.

We can measure whether someone thinks they’re doing something right or wrong, how much effort they’re expending, their focus of attention, or their familiarity with an object, Norman says. “Most of the cleverness is going to come from people coming to grips with the limits of the technology and then being really smart about what sorts of applications you can make work with that signal.”