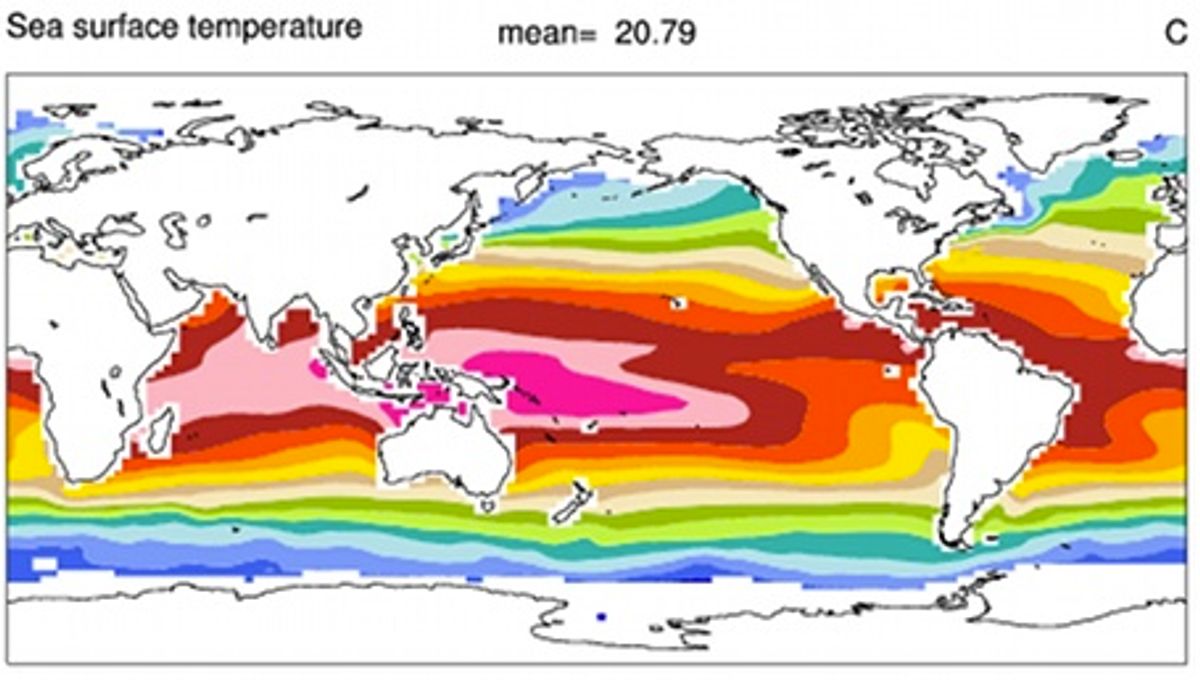

Climate science is a computationally intense discipline. The entire idea is to figure out what a massively complex system (essentially, the world) is going to do based on hundreds of different variables, including carbon dioxide concentrations, cloud cover, airplane contrails, and so on. And the scientific community's ability to measure those variables has improved dramatically in recent years, with satellites and terrestrial sensors of every kind multiplying all the time. This is a good thing in principle, and a very complicated thing in practice.

"We face a data deluge," said Ian Foster, a professor of physical sciences at the University of Chicago's Computation Institute, during a session at the American Geophysical Union (AGU) meeting in San Francisco on 7 December. "Data volumes are increasing far faster than computer power, due to improvements in sensors. This is, of course, a tremendous opportunity for scientists, but it's also a tremendous challenge."

To address this challenge, many groups have started developing tools aimed specifically at helping computing power catch up with the available data. Brian Smith, a software engineer at Oak Ridge National Laboratory, delivered a talk at AGU about one such tool in development called ParCAT. The idea behind ParCAT is to use parallel analysis to bring the processing of climate modeling runs down to workable time scales. In practice, this means the model output can be analyzed simultaneously for different spatial locations and points in time, rather than tackling each point in sequence. Smith said that without parallel analysis, processing a typical modeling run covering 15 to 20 years of simulation data with more than 300 variables can take as long as a day. But in one test using ParCAT, Smith said, a 15-year run of monthly data on global ground temperatures that involved 303 variables took only 54 seconds. And that set of operations was run on a "low end cluster" of Oak Ridge's computer systems.
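ParCAT's own interface isn't described here, so the following is only a minimal sketch of the general idea, assuming a simple map/reduce split along the time axis: each worker sums every grid cell over its own block of time steps, and the partial sums are combined at the end. The function names and the synthetic 15-year monthly field are hypothetical. It uses a thread pool so it runs anywhere; for CPU-bound pure-Python work, real parallelism in CPython would need `ProcessPoolExecutor` instead (which also means replacing the lambda with a module-level function, since lambdas can't be pickled).

```python
from concurrent.futures import ThreadPoolExecutor


def chunk_ranges(n_steps, n_chunks):
    """Split time indices 0..n_steps-1 into n_chunks contiguous (start, stop) ranges."""
    size, extra = divmod(n_steps, n_chunks)
    ranges, start = [], 0
    for i in range(n_chunks):
        stop = start + size + (1 if i < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges


def partial_sums(field, start, stop):
    """Map step: sum every grid cell over time steps [start, stop)."""
    sums = [0.0] * len(field[0])
    for t in range(start, stop):
        for c, value in enumerate(field[t]):
            sums[c] += value
    return sums


def parallel_time_mean(field, n_workers=4):
    """Per-cell time mean: time chunks are summed on a worker pool, then reduced."""
    n_steps = len(field)
    ranges = chunk_ranges(n_steps, min(n_workers, n_steps))
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        parts = list(pool.map(lambda r: partial_sums(field, r[0], r[1]), ranges))
    # Reduce step: add the per-chunk sums cell by cell, then divide once.
    totals = [sum(cell) for cell in zip(*parts)]
    return [total / n_steps for total in totals]


def serial_time_mean(field):
    """Reference version that walks the time steps one after another."""
    return [sum(column) / len(field) for column in zip(*field)]


# Hypothetical stand-in for model output: 180 monthly steps (15 years) on a 1,000-cell grid.
field = [[float((7 * t + 3 * c) % 50) for c in range(1000)] for t in range(180)]
means = parallel_time_mean(field, n_workers=4)
print(means[:3])
```

Because each chunk only depends on its own slice of time steps, the chunks can run in any order on any worker, and the final reduction produces the same per-cell means as the serial loop.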

Another new tool using parallel analysis is called TECA: Toolkit for Extreme Climate Analysis. According to Prabhat, of Lawrence Berkeley National Laboratory, a single run with the commonly used NCAR CAM5.1 atmospheric model can generate 100 terabytes of data. TECA allows that mass of data to be searched quickly for signatures of extreme weather events, such as cyclones or "atmospheric rivers" (like the system that hit California just as the AGU meeting began). Paired with actual observations, this type of analysis can be used to verify that our climate models are actually working; Prabhat said TECA was picking up about 95 percent of extreme events.
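TECA's actual detection criteria aren't described here, and real cyclone trackers typically combine several tests rather than one. As a much-simplified illustration of what "searching for signatures" can mean, the sketch below flags grid cells that are strict local minima in sea-level pressure and fall below a threshold. The 990 hPa threshold, the function names, and the synthetic pressure field are all hypothetical choices for illustration.

```python
import math


def find_pressure_minima(slp, threshold_hpa=990.0):
    """Return (row, col) cells whose sea-level pressure is below threshold_hpa
    and strictly lower than all eight neighbors. Edge cells are skipped."""
    rows, cols = len(slp), len(slp[0])
    candidates = []
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            center = slp[i][j]
            if center >= threshold_hpa:
                continue
            neighbors = [
                slp[i + di][j + dj]
                for di in (-1, 0, 1)
                for dj in (-1, 0, 1)
                if (di, dj) != (0, 0)
            ]
            if all(center < n for n in neighbors):
                candidates.append((i, j))
    return candidates


def synthetic_depression(rows, cols, center, depth_hpa=40.0, background_hpa=1012.0):
    """Hypothetical test field: a flat background with one Gaussian-shaped low."""
    ci, cj = center
    return [
        [background_hpa - depth_hpa * math.exp(-((i - ci) ** 2 + (j - cj) ** 2) / 4.0)
         for j in range(cols)]
        for i in range(rows)
    ]


snapshot = synthetic_depression(12, 16, center=(5, 7))
print(find_pressure_minima(snapshot))  # → [(5, 7)]
```

Because each model snapshot is tested independently, a search like this parallelizes naturally across time steps, which is what makes scanning a 100-terabyte run practical.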

Finally, researchers including NASA Goddard's Gavin Schmidt are developing a web-based application that will let researchers analyze data without even having to bother with command-line programming, an impressive feat considering the huge datasets in question. Chaowei Yang, an associate professor at George Mason University, told onlookers at AGU that some of the more ambitious model runs (say, 1000 iterations of a given model out to 200 years in the future) can each generate almost 250 terabytes. With the new web tool, manipulating that sort of data, or changing one of hundreds of variables to see how the model shifts, can be done easily. Because the data itself will be housed in the cloud, collaborators won't need to figure out how to move such large masses of data among themselves. Yang said they hope to release the tool in 2013.
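A rough back-of-the-envelope estimate shows why keeping the data in one place matters. Assuming a sustained 10 Gbit/s network link (an illustrative figure, not one from the talk) and decimal units, copying a single 250-terabyte output would take roughly:

$$
t = \frac{250 \times 10^{12}\ \text{bytes} \times 8\ \text{bits/byte}}{10 \times 10^{9}\ \text{bits/s}} = \frac{2 \times 10^{15}\ \text{bits}}{10^{10}\ \text{bits/s}} = 2 \times 10^{5}\ \text{s} \approx 55.6\ \text{h} \approx 2.3\ \text{days}
$$

That is for one transfer to one collaborator, before any analysis has begun.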

The generation of climate-related data isn't slowing down as we add more satellites, more ice flyovers, more ocean monitoring, and so on. It's good to see that our ability to say what all that data really means is striving to keep up.

Image via UCAR

Dave Levitan is the science writer for FactCheck.org, where he investigates the false and misleading claims about science that U.S. politicians occasionally make.