The Brain as Computer: Bad at Math, Good at Everything Else

Modeling computers after the brain could revolutionize robotics and big data

Painful exercises in basic arithmetic are a vivid part of our elementary school memories. A multiplication like 3,752 × 6,901 carried out with just pencil and paper for assistance may well take up to a minute. Of course, today, with a cellphone always at hand, we can quickly check that the result of our little exercise is 25,892,552. Indeed, the processors in modern cellphones can together carry out more than 100 billion such operations per second. What’s more, the chips consume just a few watts of power, making them vastly more efficient than our slow brains, which consume about 20 watts and need significantly more time to achieve the same result.

Of course, the brain didn’t evolve to perform arithmetic. So it does that rather badly. But it excels at processing a continuous stream of information from our surroundings. And it acts on that information—sometimes far more rapidly than we’re aware of. No matter how much energy a conventional computer consumes, it will struggle with feats the brain finds easy, such as understanding language and running up a flight of stairs.

If we could create machines with the computational capabilities and energy efficiency of the brain, it would be a game changer. Robots would be able to move masterfully through the physical world and communicate with us in plain language. Large-scale systems could rapidly harvest large volumes of data from business, science, medicine, or government to detect novel patterns, discover causal relationships, or make predictions. Intelligent mobile applications like Siri or Cortana would rely less on the cloud. The same technology could also lead to low-power devices that can support our senses, deliver drugs, and emulate nerve signals to compensate for organ damage or paralysis.

But isn’t it much too early for such a bold attempt? Isn’t our knowledge of the brain far too limited to begin building technologies based on its operation? I believe that emulating even very basic features of neural circuits could give many commercially relevant applications a remarkable boost. How faithfully computers will have to mimic biological detail to approach the brain’s level of performance remains an open question. But today’s brain-inspired, or neuromorphic, systems will be important research tools for answering it.

A key feature of conventional computers is the physical separation of memory, which stores data and instructions, from logic, which processes that information. The brain holds no such distinction. Computation and data storage are accomplished together locally in a vast network consisting of roughly 100 billion neural cells (neurons) and more than 100 trillion connections (synapses). Most of what the brain does is determined by those connections and by the manner in which each neuron responds to incoming signals from other neurons.

When we talk about the extraordinary capabilities of the human brain, we are usually referring to just the latest addition in the long evolutionary process that constructed it: the neocortex. This thin, highly folded layer forms the outer shell of our brains and carries out a diverse set of tasks that includes processing sensory inputs, motor control, memory, and learning. This great range of abilities is accomplished with a rather uniform structure: six horizontal layers and a million 500-micrometer-wide vertical columns all built from neurons, which integrate and distribute electrically coded information along tendrils that extend from them—the dendrites and axons.

Like all the cells in the human body, a neuron normally has an electric potential of about –70 millivolts between its interior and exterior. This membrane voltage changes when a neuron receives signals from other neurons connected to it. And if the membrane voltage rises to a critical threshold, the neuron produces a voltage pulse, or spike, with a duration of a few milliseconds and a value of about 40 mV. This spike propagates along the neuron’s axon until it reaches a synapse, the complex biochemical structure that connects the axon of one neuron to a dendrite of another. If the spike meets certain criteria, the synapse transforms it into another voltage pulse that travels down the branching dendrite structure of the receiving neuron and contributes either positively or negatively to its cell membrane voltage.
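The threshold-and-spike behavior described above can be sketched with a leaky integrate-and-fire model. This is a common textbook simplification and an assumption here, not a model the article specifies. The resting potential of –70 mV and the 40 mV spike value come from the text; the –55 mV threshold, the 10-millisecond time constant, and the input drive are illustrative values only.

```python
def simulate_lif(input_mv, steps=500, dt_ms=0.1, tau_ms=10.0,
                 v_rest=-70.0, v_thresh=-55.0, v_spike=40.0):
    """Euler-integrate a leaky integrate-and-fire neuron.

    input_mv is a constant drive expressed in millivolts (a hypothetical
    stand-in for synaptic input). Returns (voltage_trace, spike_times_ms).
    """
    v = v_rest
    trace, spike_times = [], []
    for step in range(steps):
        # The membrane leaks toward rest while the input pushes it upward.
        v += dt_ms / tau_ms * (v_rest - v + input_mv)
        if v >= v_thresh:
            trace.append(v_spike)             # record the spike value
            spike_times.append(step * dt_ms)  # time at the start of this step
            v = v_rest                        # reset after firing
        else:
            trace.append(v)
    return trace, spike_times


# A weak drive settles below threshold; a stronger one makes the neuron fire.
_, quiet = simulate_lif(input_mv=10.0)
_, active = simulate_lif(input_mv=20.0)
print(len(quiet), len(active))
```

With these assumed parameters, a 10 mV drive settles at –60 mV and never fires, while a 20 mV drive crosses the threshold repeatedly within the 50 simulated milliseconds.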

Connectivity is a crucial feature of the brain. The pyramidal cell, for example—a particularly important kind of cell in the human neocortex—contains about 30,000 synapses and so 30,000 inputs from other neurons. And the brain is constantly adapting. Neuron and synapse properties—and even the network structure itself—are always changing, driven mostly by sensory input and feedback from the environment.

General-purpose computers these days are digital rather than analog, but the brain is not as easy to categorize. Neurons accumulate electric charge just as capacitors in electronic circuits do. That is clearly an analog process. But the brain also uses spikes as units of information, and these are fundamentally binary: At any one place and time, there is either a spike or there is not. Electronically speaking, the brain is a mixed-signal system, with local analog computing and binary-spike communication. This mix of analog and digital helps the brain overcome transmission losses. Because the spike essentially has a value of either 0 or 1, it can travel a long distance without losing that basic information; it is also regenerated when it reaches the next neuron in the network.

Another crucial difference between brains and computers is that the brain accomplishes all its information processing without a central clock to synchronize it. Although we observe synchronization events—brain waves—they are self-organized, emergent products of neural networks. Interestingly, modern computing has started to adopt brainlike asynchronicity, to help speed up computation by performing operations in parallel. But the degree and the purpose of parallelism in the two systems are vastly different.

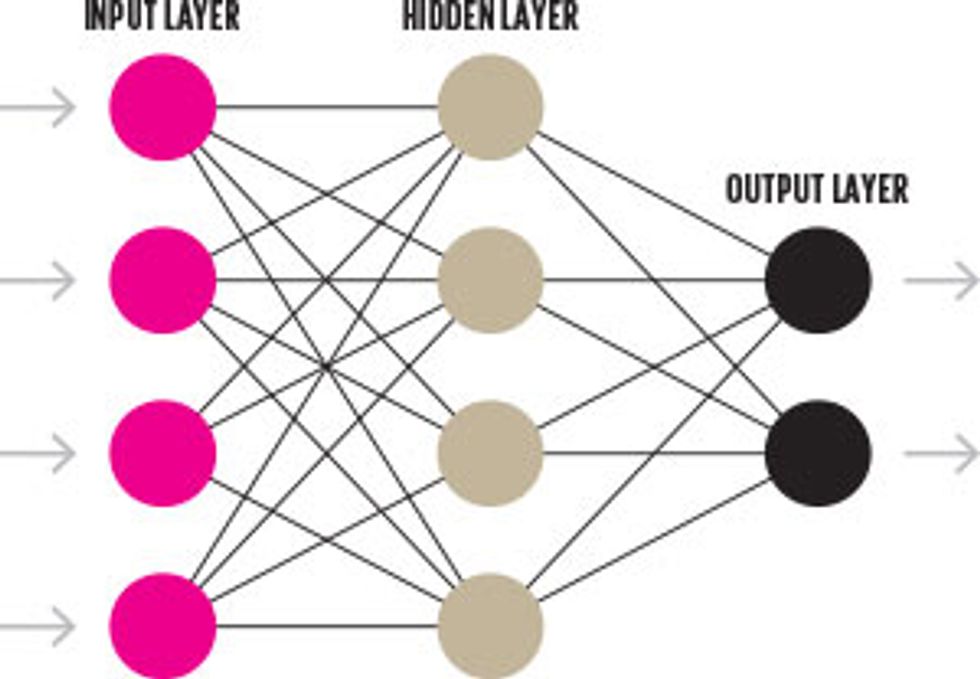

The idea of using the brain as a model of computation has deep roots. The first efforts focused on a simple threshold neuron, which gives one value if the sum of weighted inputs is above a threshold and another if it is below. The biological realism of this scheme, which Warren McCulloch and Walter Pitts conceived in the 1940s, is very limited. Nonetheless, it was the first step toward adopting the concept of a firing neuron as an element for computation.
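A threshold neuron of this kind takes only a few lines to write down. The sketch below is a minimal illustration; the function name, the unit weights, and the choice to fire when the sum equals the threshold are assumptions made for the example.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# With unit weights, a threshold of 2 acts like AND and a threshold of 1 like OR.
for a in (0, 1):
    for b in (0, 1):
        and_out = threshold_neuron([a, b], [1, 1], threshold=2)
        or_out = threshold_neuron([a, b], [1, 1], threshold=1)
        print(a, b, and_out, or_out)
```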

In 1957, Frank Rosenblatt proposed a variation of the threshold neuron called the perceptron. A network of integrating nodes (artificial neurons) is arranged in layers. The “visible” layers at the edges of the network interact with the outside world as inputs or outputs, and the “hidden” layers, which perform the bulk of the computation, sit in between.

Rosenblatt also introduced an essential feature found in the brain: inhibition. Instead of simply adding inputs together, the neurons in a perceptron network could also make negative contributions. This feature allows a neural network using only a single hidden layer to solve the XOR problem in logic, in which the output is true only if exactly one of the two binary inputs is true. This simple example shows that adding biological realism can add new computational capabilities. But which features of the brain are essential to what it can do, and which are just useless vestiges of evolution? Nobody knows.
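To make the XOR example concrete, here is a small sketch of a network with one hidden layer in which an inhibitory (negative) weight does the essential work. The weights and thresholds are hand-picked for illustration rather than learned, and the function names are hypothetical.

```python
def step(total, threshold):
    """Threshold unit: fire (1) when the summed input reaches the threshold."""
    return 1 if total >= threshold else 0


def xor_network(a, b):
    # Hidden layer: one unit detects "a OR b", the other detects "a AND b".
    h_or = step(a + b, 1)
    h_and = step(a + b, 2)
    # Output unit: excitatory input from OR, inhibitory input from AND.
    return step(1 * h_or + (-1) * h_and, 1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

Without the negative weight on the AND unit, the output could not be switched off when both inputs are true.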

We do know that some impressive computational feats can be accomplished without resorting to much biological realism. Deep-learning researchers have, for example, made great strides in using computers to analyze large volumes of data and pick out features in complicated images. Although the neural networks they build have more inputs and hidden layers than ever before, they are still based on the very simple neuron models. Their great capabilities reflect not their biological realism, but the scale of the networks they contain and the very powerful computers that are used to train them. But deep-learning networks are still a long way from the computational performance, energy efficiency, and learning capabilities of biological brains.

The big gap between the brain and today’s computers is perhaps best underscored by looking at large-scale simulations of the brain. There have been several such efforts over the years, but they have all been severely limited by two factors: energy and simulation time. As an example, consider a simulation that Markus Diesmann and his colleagues conducted several years ago using nearly 83,000 processors on the K supercomputer in Japan. Simulating 1.73 billion neurons consumed 10 billion times as much energy as an equivalent size portion of the brain, even though it used very simplified models and did not perform any learning. And these simulations generally ran at less than a thousandth of the speed of biological real time.

Why so slow? The reason is that simulating the brain on a conventional computer requires billions of differential equations coupled together to describe the dynamics of cells and networks: analog processes like the movement of charges across a cell membrane. Computers that use Boolean logic—which trades energy for precision—and that separate memory and computing, appear to be very inefficient at truly emulating a brain.

These computer simulations can be a tool to help us understand the brain, by transferring the knowledge gained in the laboratory into simulations that we can experiment with and compare with real-world observations. But if we hope to go in the other direction and use the lessons of neuroscience to build powerful new computing systems, we have to rethink the way we design and build computers.

Neurons in Silico

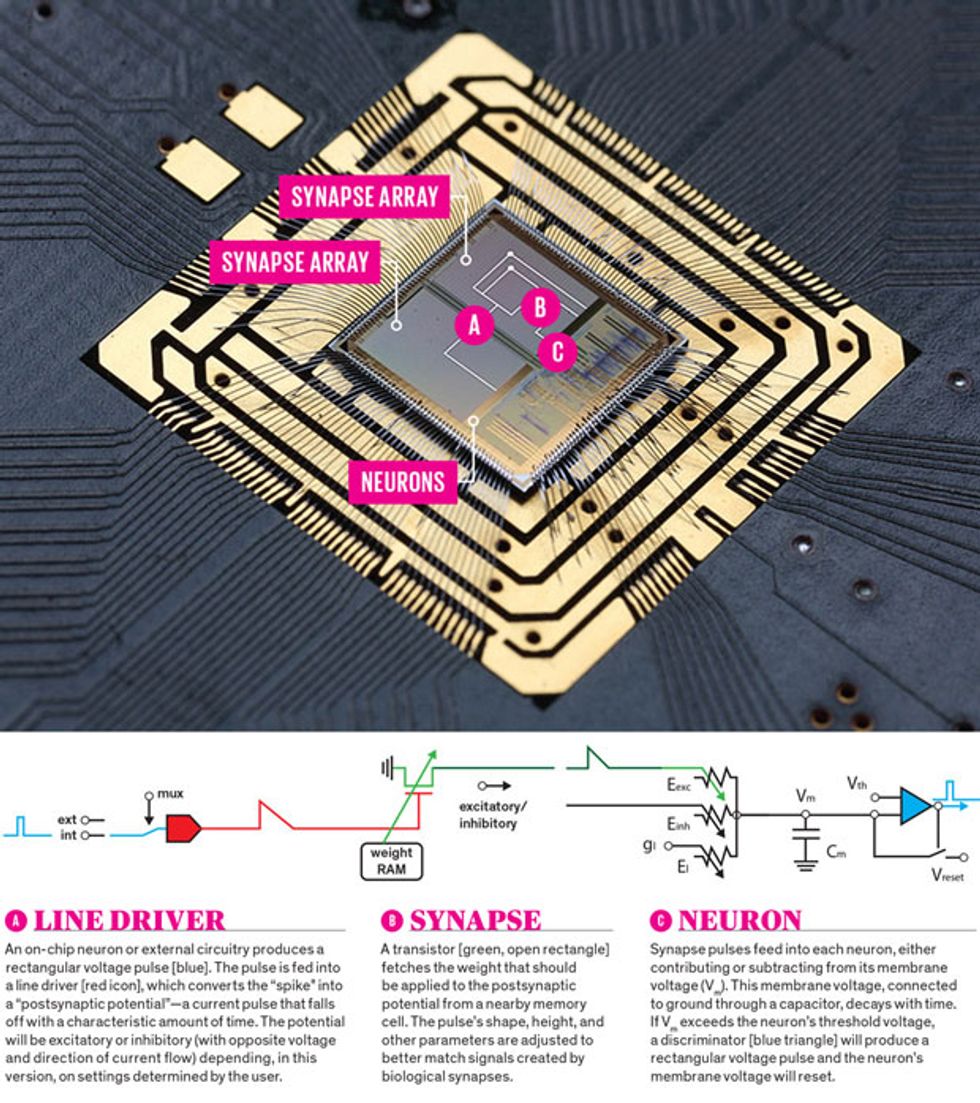

The BrainScaleS design evolved from this early prototype chip, dubbed “Spikey.” There are four main components to the chip: neurons, which integrate signals from multiple synapses and fire when the voltage exceeds a threshold; line drivers that help condition the signals coming from neurons and send them to synapses; dense arrays of synapses that weigh those signals and have “plasticity,” changing on short and long time scales; and digital circuitry to connect to the outside world and pass information such as chip configurations or data. The connections between neurons can be set externally or they can evolve internally according to plasticity mechanisms.

Copying brain operation in electronics may actually be more feasible than it seems at first glance. It turns out the energy cost of creating an electric potential in a synapse is about 10 femtojoules (1 fJ is 10⁻¹⁵ joules). Charging the gate of a metal-oxide-semiconductor (MOS) transistor, even one considerably larger and more energy hungry than those used in state-of-the-art CPUs, requires just 0.5 fJ. A synaptic transmission is therefore equivalent to the charging of at least 20 transistors. What’s more, on the device level, biological and electronic circuits are not that different. So, in principle, we should be able to build structures like synapses and neurons from transistors and wire them up to make an artificial brain that wouldn’t consume an egregious amount of energy.

The notion of building computers by making transistors operate more like neurons began in the 1980s with Caltech professor Carver Mead. One of the core arguments behind what Mead came to call “neuromorphic” computing was that semiconductor devices can, when operated in a certain mode, follow the same physical rules as neurons do and that this analog behavior could be used to compute with a high level of energy efficiency.

The Mead group also invented a neural communication framework in which spikes are coded only by their network addresses and the time at which they occur. This was groundbreaking work because it was the first to make time an essential feature of artificial neural networks. Time is a key factor in the brain: It takes time for signals to propagate and membranes to respond to changing conditions, and time determines the shape of postsynaptic potentials.
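The idea of coding a spike by nothing more than an address and a time can be sketched as a tiny event router. This is an illustrative toy, not the Mead group's actual protocol; the `SpikeEvent` type, the microsecond time unit, and the `fanout` table are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SpikeEvent:
    time_us: int  # when the spike occurred (hypothetical unit: microseconds)
    address: int  # network address of the neuron that fired


def route_events(events, fanout):
    """Deliver each event to the targets listed for its source address.

    fanout maps a source address to the addresses it connects to.
    Returns {target_address: [arrival times in time order]}.
    """
    inbox = defaultdict(list)
    for event in sorted(events, key=lambda e: e.time_us):
        for target in fanout.get(event.address, ()):
            inbox[target].append(event.time_us)
    return dict(inbox)


events = [SpikeEvent(time_us=30, address=2), SpikeEvent(time_us=10, address=1)]
fanout = {1: [5, 6], 2: [5]}
print(route_events(events, fanout))
```

Nothing about the spike's shape is transmitted. The receiving side reconstructs everything it needs from which neuron fired and when.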

Several research groups quite active today, such as those of Giacomo Indiveri at ETH Zurich and Kwabena Boahen at Stanford, have followed Mead’s approach and successfully implemented elements of biological cortical networks. The trick is to operate transistors below their turn-on threshold with extremely low currents, creating analog circuits that mimic neural behavior while consuming very little energy.

Further research in this direction may find applications in systems like brain-computer interfaces. But it would be a huge leap from there to circuitry that has anything like the network size, connectivity, and learning ability of a complete animal brain.

So, around 2005, three groups independently started to develop neuromorphic systems that deviate substantially from Mead’s original approach, with the goal of creating large-scale systems with millions of neurons.

Closest to conventional computing is the SpiNNaker project, led by Steve Furber at the University of Manchester, in England. That group has designed a custom, fully digital chip that contains 18 ARM microprocessor cores operating with a clock speed of 200 megahertz—about a tenth the speed of modern CPUs. Although the ARM cores are classical computers, they simulate spikes, which are sent through specialized routers designed to communicate asynchronously, just as the brain does. The current implementation, part of the European Union’s Human Brain Project, was completed in 2016 and features 500,000 ARM cores. Depending on the complexity of the neuron model, each core can simulate as many as 1,000 neurons.

The TrueNorth chip, developed by Dharmendra Modha and his colleagues at the IBM Research laboratory in Almaden, Calif., abandons the use of microprocessors as computational units and is a truly neuromorphic computing system, with computation and memory intertwined. TrueNorth is still a fully digital system, but it is based on custom neural circuits that implement a very specific neuron model. The chip features 5.4 billion transistors, built with 28-nanometer Samsung CMOS technology. These transistors are used to implement 1 million neuron circuits and 256 million simple (1-bit) synapses on a single chip.

The furthest away from conventional computing and closest to the biological brain, I would argue, is the BrainScaleS system, which my colleagues and I have developed at Heidelberg University, in Germany, for the Human Brain Project. BrainScaleS is a mixed-signal implementation. That is, it combines neurons and synapses made from silicon transistors that operate as analog devices with digital communication. The full-size system is built from 20 uncut 8-inch silicon wafers to create a total of 4 million neurons and 1 billion synapses.

The system can replicate eight different firing modes of biological neurons, derived in close collaboration with neuroscientists. Unlike with the analog approach pioneered by Mead, BrainScaleS operates in an accelerated mode, running about 10,000 times as fast as real time. This makes it especially suited to study learning and development.
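A quick calculation shows what a 10,000-fold acceleration means for studies of learning and development. The speedup factor is the one quoted above; the choice of a day and a 365-day year as examples is arbitrary.

```python
SPEEDUP = 10_000  # acceleration factor relative to biological real time


def wall_clock_seconds(biological_seconds, speedup=SPEEDUP):
    """Time the accelerated hardware needs to cover a span of biological time."""
    return biological_seconds / speedup


DAY_S = 24 * 60 * 60
YEAR_S = 365 * DAY_S

print(f"One biological day:  {wall_clock_seconds(DAY_S):.2f} s")
print(f"One biological year: {wall_clock_seconds(YEAR_S) / 60:.1f} min")
```

Under these assumptions, a day of biological time passes in under 9 seconds and a year in under an hour.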

Learning will likely be a critical component of neuromorphic systems going forward. At the moment, brain-inspired chips as well as the neural networks implemented on conventional computers are trained elsewhere by more powerful computers. But if we want to use neuromorphic systems in real-world applications to, say, power robots that will work alongside us, they will have to be able to learn and adapt on the fly.

In our second-generation BrainScaleS system, my colleagues and I implemented learning capabilities by building on-chip “plasticity processors,” which are used to alter a broad suite of neuron and synapse parameters when needed. This capability also lets us fine-tune parameters to compensate for differences in size and electric properties from device to device, much as the brain compensates for variation.

The three large-scale systems I’ve described are complementary approaches. SpiNNaker is highly configurable and so can be used to test a variety of different neuron models, TrueNorth has very high integration density, and BrainScaleS is designed for continuous operation of learning and development. Finding the right way to evaluate the performance of such systems is an ongoing effort. But early results indicate promise. The IBM TrueNorth group, for example, recently estimated that a synaptic transmission in its system costs 26 picojoules. Although this is about a thousand times the energy of the same action in a biological system, it is approaching 1/100,000 of the energy that would be consumed by a simulation carried out on a conventional general-purpose machine.
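The energy comparison can be checked with a few lines of arithmetic. The 26-picojoule figure is from the paragraph above and the 10-femtojoule biological figure is the one given earlier in this article; the cost for a conventional machine is not stated directly, so the value below is only what the "1/100,000" ratio would imply.

```python
FJ = 1e-15  # one femtojoule in joules
PJ = 1e-12  # one picojoule in joules

biological_j = 10 * FJ  # energy per synaptic transmission in biology
truenorth_j = 26 * PJ   # TrueNorth estimate per synaptic transmission

# How much more energy the chip uses than biology for the same action.
chip_vs_biology = truenorth_j / biological_j

# Implied cost on a conventional machine if the chip uses 1/100,000 as much.
implied_conventional_j = truenorth_j * 100_000

print(f"TrueNorth vs. biology: about {chip_vs_biology:,.0f} times")
print(f"Implied conventional simulation: {implied_conventional_j * 1e6:.1f} microjoules")
```

The computed ratio comes out at a few thousand, the same order of magnitude as the "about a thousand times" in the text.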

We are still in the early days of figuring out what these sorts of systems can do and how they can be applied to real-world applications. At the same time, we must find ways to combine many neuromorphic chips into larger networks with improved learning capabilities, while simultaneously driving down energy consumption. One challenge is simply connectivity: The brain is three-dimensional, and we build circuits in two. Three-dimensional integration of circuits, a field that is actively being explored, could help with this.

Another enabler will be non-CMOS devices, such as memristors or phase-change RAM. Today, the weight values that govern how artificial synapses respond to incoming signals are stored in conventional digital memory, which dominates the silicon resources required to build a network. But other forms of memory could let us shrink those cells down from micrometer to nanometer scales. As in today’s systems, a key challenge there will be how to handle variations between individual devices. The calibration strategies developed with BrainScaleS could help.

We are just getting started on the road toward usable and useful neuromorphic systems. But the effort is well worthwhile. If we succeed, we won’t just be able to build powerful computing systems; we may even gain insights about our own brains.

This article appears in the June 2017 print issue as “The Brain as Computer.”

About the Author

Karlheinz Meier is a professor of experimental physics at Heidelberg University, in Germany, and a codirector in the European Union’s Human Brain Project.