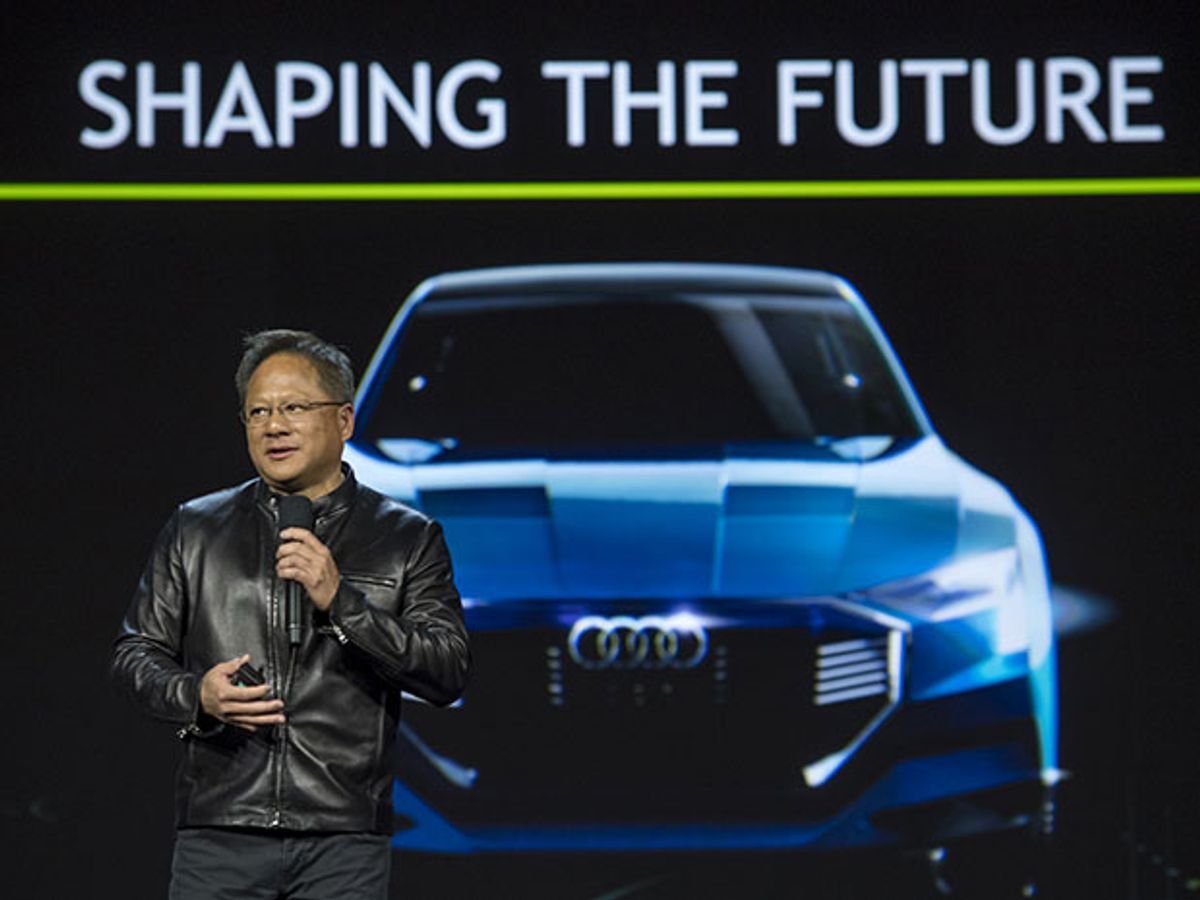

Jen-Hsun Huang, the CEO of Nvidia, said last night in Las Vegas that his company and Audi are developing a self-driving car that will finally be worthy of the name. That autonomous vehicle, he said, will be on the roads by 2020.

Huang made his remarks in a keynote address at CES. He was then joined by Scott Keogh, the head of Audi of America, who emphasized that the car really would drive itself. “We’re talking highly automated cars, operating in numerous conditions, in 2020,” Keogh said. A prototype based on Audi’s Q7 was, as he spoke, driving itself around the lot beside the convention center, he added.

This implies the Audi-Nvidia car will have “Level 4” capability, needing no human being to supervise it or take the wheel on short notice, at least not under “numerous” road conditions. So, maybe it won’t do cross-country moose chases in snowy climes.

These claims are pretty much in line with what other companies, notably Tesla, have been saying lately. The difference is in the timing: Nvidia and Audi have set a hard deadline three years from now.

In a statement, Audi said that it would introduce what it called the world's first Level 3 car this year; it will be based on Nvidia computing hardware and software. Level 3 cars can do all the driving most of the time but require that a human be ready to take over.

At the heart of Nvidia’s strategy is the computational muscle of its graphics processing chips, or GPUs, which the company has honed over decades of work in the gaming industry. Some 18 months ago, it released its first automotive package, called Drive PX, and today it announced its successor, called Xavier. (That Audi in the parking lot uses the older Drive PX version.)

“[Xavier] has eight high-end CPU cores, 512 of our next-gen GPUs,” Huang said. “It has the performance of a high-end PC shrunk onto a tiny chip, [with] teraflop operation, at just 30 watts.” By teraflop he meant 30 of them: 30 trillion operations per second, 15 times as much as the 2015 machine could handle.

That power is used in deep learning, the software technique that has transformed pattern recognition and other applications in the past three years. Deep learning uses a hierarchy of processing layers that make sense of a mass of data by organizing it into progressively more meaningful chunks.

For instance, it might begin in the lowest layer of processing by tracing a line of pixels to infer an edge. It might proceed to the next layer up by combining edges to construct features, like a nose or an eyebrow. In the next higher layer it might notice a face, and in a still higher one, it might compare that face to a database of faces to identify a person. Presto, and you have facial recognition, a longstanding bugbear of AI.
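The layered idea can be sketched in a few lines of Python. This is only a toy illustration, not Nvidia's software: the filter weights, the function names, and the "corner" target are all assumptions chosen for the example, and the weights are set by hand, whereas a real deep-learning system learns them from training data. The first layer finds edges in a tiny grid of pixels, the second combines the edges into a simple feature, and the third turns that feature into a label.

```python
# Toy sketch of layered processing: pixels -> edges -> feature -> label.
# All weights are hand-picked for illustration; a trained network would learn them.

def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]

# Layer 1 filters: respond to dark-to-bright transitions.
VERTICAL_EDGE = [[-1, 1], [-1, 1]]      # brightness rises left to right
HORIZONTAL_EDGE = [[-1, -1], [1, 1]]    # brightness rises top to bottom

def layer1_edges(image):
    """Lowest layer: turn raw pixels into edge maps."""
    return {
        "vertical": relu(convolve2d(image, VERTICAL_EDGE)),
        "horizontal": relu(convolve2d(image, HORIZONTAL_EDGE)),
    }

def layer2_corner_score(edges):
    """Middle layer: a corner is where both edge types fire at the same spot."""
    v, h = edges["vertical"], edges["horizontal"]
    return max(min(v[r][c], h[r][c])
               for r in range(len(v)) for c in range(len(v[0])))

def layer3_label(corner_score, threshold=1):
    """Top layer: turn the feature score into a decision."""
    return "corner" if corner_score >= threshold else "no corner"

def classify(image):
    return layer3_label(layer2_corner_score(layer1_edges(image)))

# A bright square in the lower right of a dark image has a corner.
square = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A dark-to-bright vertical stripe has an edge but no corner.
stripe = [[0, 0, 1, 1] for _ in range(4)]

print(classify(square))
print(classify(stripe))
```

A production network differs mainly in scale and in where the weights come from: it stacks many more layers, and training adjusts millions of weights automatically instead of relying on hand-written filters like the two above.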

And, if you can recognize faces, why not do the same for cars, signposts, roadsides and pedestrians? Google’s DeepMind, a pioneer in deep learning, did it for the infamously difficult Asian game of Go last year, when its AlphaGo program beat one of the best Go players in the world.

In Nvidia’s experimental self-driving car, dozens of cameras, microphones, speakers, and other sensors are strewn around the outside and also the inside. Reason: Until full autonomy is achieved, the person behind the wheel will still have to stay focused on the road, and the car will see to it that he does.

“The car itself will be an AI for driving, but it will also be an AI for codriving—the AI copilot,” Huang said. “We believe the AI is either driving you or looking out for you. When it is not driving you it is still completely engaged.”

In a video clip, the car warns the driver with a natural-language alert: “Careful, there is a motorcycle approaching the center lane,” it intones. And when the driver—an Nvidia employee named Janine—asks the car to take her home, it obeys her even when street noise interferes. That’s because it actually reads her lips, too (at least for a list of common phrases and sentences).

Huang cited work at Oxford and at Google’s DeepMind outfit showing that deep learning can read lips with 95 percent accuracy, which is much better than most human lip-readers. In November, Nvidia announced that it was working on a similar system.

It would seem that the Nvidia test car is the first machine to emulate the ploy portrayed in 2001: A Space Odyssey, in which the HAL 9000 AI read the lips of astronauts plotting to shut the machine down.

These efforts to supervise the driver so the driver can better supervise the car are directed against Level 3’s main problem: driver complacency. Many experts believe that this is what occurred with the driver of the Tesla Model S that crashed into a truck. Some reports say he failed to override the vehicle’s decision making because he was watching a video.

Last night, Huang also announced deals with other auto industry players. Nvidia is partnering with Japan’s Zenrin mapping company, as it has done with Europe’s TomTom and China’s Baidu. Its robocar computer will be manufactured by ZF, an auto supplier in Europe; commercial samples are already available. And it is also partnering with Bosch, the world’s largest auto supplier.

Besides these automotive initiatives, Nvidia also announced new directions in gaming and consumer electronics. In March, it will release a cloud-based version of its GeForce gaming platform on Facebook, a for-fee service available to any PC loaded with the right client software. This required that latency, the delay in response from the cloud, be reduced to manageable proportions. Nvidia also announced a voice-controlled television system based on Google’s Android system.

The common link among these businesses is Nvidia’s prowess in graphics processing, which provides the computational muscle needed for deep learning. In fact, you might say that deep learning—and robocars—came along at just the right time for the company: It had built up stupendous processing power in the isolated hothouse of gaming and needed a new outlet for it. Artificial intelligence is that outlet.

Philip E. Ross is a senior editor at IEEE Spectrum. His interests include transportation, energy storage, AI, and the economic aspects of technology. He has a master's degree in international affairs from Columbia University and another, in journalism, from the University of Michigan.