This is a guest post. The views expressed here are solely those of the author and do not represent positions of IEEE Spectrum or the IEEE.

The simple task of picking something up is not as easy as it seems. Not for a robot, at least. Roboticists aim to develop a robot that can pick up anything, but today most robots perform “blind grasping,” where they're dedicated to picking up an object from the same location every time. If anything changes, such as the shape, texture, or location of the object, the robot won't know how to respond, and the grasp attempt will most likely fail.

Robots are still a long way off from being able to grasp any object perfectly on their first attempt. Why do grasping tasks pose such a difficult problem? Well, when people try to grasp something they use a combination of senses, the primary ones being visual and tactile. But so far, most attempts at solving the grasping problem have focused on using vision alone.

This approach is unlikely to give results that fully match human capabilities, because although vision is important for grasping tasks (such as for aiming at the right object), vision simply cannot tell you everything you need to know about grasping. Consider how Steven Pinker describes all the things the human sense of touch accomplishes: “Think of lifting a milk carton. Too loose a grasp, and you drop it; too tight, and you crush it; and with some gentle rocking, you can even use the tugging on your fingertips as a gauge of how much milk is inside!” he writes in How the Mind Works. Because robots lack these sensing capabilities, they still lag far behind humans when it comes to even the simplest pick-and-place tasks.

As a researcher leading the haptic and mechatronics group at the École de Technologie Supérieure's Control and Robotics (CoRo) Lab in Montreal, Canada, and as co-founder of Robotiq, a robotics company based in Québec City, I've long been tracking the most significant developments in grasping methods. I'm now convinced that the current focus on robotic vision is unlikely to enable perfect grasping. In addition to vision, the future of robotic grasping requires something else: tactile intelligence.

Previous studies have focused on vision, not tactile intelligence

So far, most of the research in robotic grasping has aimed at building intelligence around visual feedback. One way of doing so is through database image matching, which is the method used in the Million Objects Challenge at Brown's Humans to Robots Lab. The idea is for the robot to use a camera to detect the target object and monitor its own movements as it attempts to grasp the object. While doing so, the robot compares the real-time visual information with 3D image scans stored in the database. Once the robot finds a match, it can find the right algorithm for its current situation.
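As a rough illustration of the database-matching idea, here is a minimal sketch in Python. It is not the method used at Brown; the three-number shape descriptors, the object names, the strategy names, and the `max_distance` threshold are all hypothetical, and a real system would compare camera data against 3D scans rather than short lists of numbers.

```python
import math

# Hypothetical database: object name -> (shape descriptor, stored grasp strategy).
# All values here are invented for illustration.
GRASP_DATABASE = {
    "mug": ([0.9, 0.4, 0.1], "side_grasp_on_handle"),
    "box": ([0.2, 0.8, 0.7], "top_down_pinch"),
    "ball": ([0.5, 0.5, 0.5], "enveloping_grasp"),
}


def euclidean_distance(a, b):
    """Straight-line distance between two descriptors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_object(observed, database, max_distance=0.3):
    """Return (name, strategy) for the closest stored object,
    or None when no stored object is within max_distance."""
    best_name, best_dist = None, float("inf")
    for name, (descriptor, _strategy) in database.items():
        dist = euclidean_distance(observed, descriptor)
        if dist < best_dist:
            best_name, best_dist = name, dist
    if best_name is None or best_dist > max_distance:
        return None
    return best_name, database[best_name][1]


# An observation close to the stored mug finds a match.
known = match_object([0.85, 0.45, 0.15], GRASP_DATABASE)
# An observation unlike anything stored finds nothing.
unknown = match_object([0.0, 0.0, 1.0], GRASP_DATABASE)
```

The second lookup returns `None`, which is the weakness of the approach in miniature: an object that was never scanned gives the robot nothing to work with.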

But while Brown's approach aims to collect visual data for a variety of objects, roboticists are unlikely to ever develop a visual database for every single item a robot could possibly encounter. Moreover, the database-matching approach doesn't include environmental constraints, so it doesn't let the robot adapt its grasp strategy for different contexts.

Other researchers have turned to machine learning techniques for improving robotic grasping. These techniques allow robots to learn from experience, so eventually the robots can figure out the best way to grasp something on their own. Plus, unlike the database-matching methods, machine learning requires minimal prior knowledge. The robots don't need to access a pre-made image database—they just need plenty of practice.

As IEEE Spectrum reported earlier this year, Google recently conducted an experiment in grasping technology that combined a vision system with machine learning. In the past, researchers tried to improve grasping abilities by teaching the robots to follow whatever method the humans had decided was best. Google's biggest breakthrough was in showing how robots could teach themselves—using a deep convolutional neural network, a vision system, and a lot of data (from 800,000 grasp attempts)—to improve based on what they learned from past experiences.

Their results seem extremely promising: Since the robots' responses were not pre-programmed, all of their improvements can truly be said to have “emerged naturally from learning” (as one of their researchers put it). But there are limits to what vision can tell robots about grasping, and Google might have already reached that frontier.

Focusing solely on vision leads to certain problems

There are three main reasons why the challenges Google and others are working on are hard to overcome with vision alone. First, vision is subject to numerous technical limitations. Even state-of-the-art vision systems can have trouble perceiving objects under certain conditions (such as translucency, reflections, and low-contrast colors), or when the object is too thin.

Second, many grasping tasks involve scenarios where it's hard to see the entire object, so vision often cannot provide all the information the robot might need. If a robot is trying to pick up a wooden block from a table, a simple vision system would only observe the top of the block, and the robot would have no idea what the block looks like on the other side. In a more complex task like bin-picking, which involves multiple objects, the target object could be partially or fully obscured by surrounding items.

Finally, and most importantly, vision is simply not suited to the nature of the problem: grasping tasks are a matter of contact and forces, which cannot be monitored by vision. At best, vision can inform the robot about finger configurations that are likely to succeed, but in the end a robot needs tactile information to know the physical values that are associated with grasping tasks.

How tactile intelligence can help

The sense of touch plays a central role for humans during grasping and manipulation tasks. For amputees who have lost their hands, one of the biggest sources of frustration is the inability to sense what they're touching while using prosthetic devices. Without the sense of touch, amputees have to pay close visual attention during grasping and manipulation tasks, whereas a non-amputee could pick something up without even looking at it.

Researchers are aware of the crucial role that tactile sensors play in grasping, and the past 30 years have seen many attempts at building a tactile sensor that replicates the human apparatus. However, the signals sent by a tactile sensor are complex and of high dimension, and adding sensors to a robotic hand often doesn't directly translate into improved grasping capabilities. What's needed is a way to transform these raw and low-level data into high-level information that will result in better grasping and manipulation performance. Tactile intelligence could then give robots the ability to predict grasp success using touch, recognize object slippage, and identify objects based on their tactile signatures.

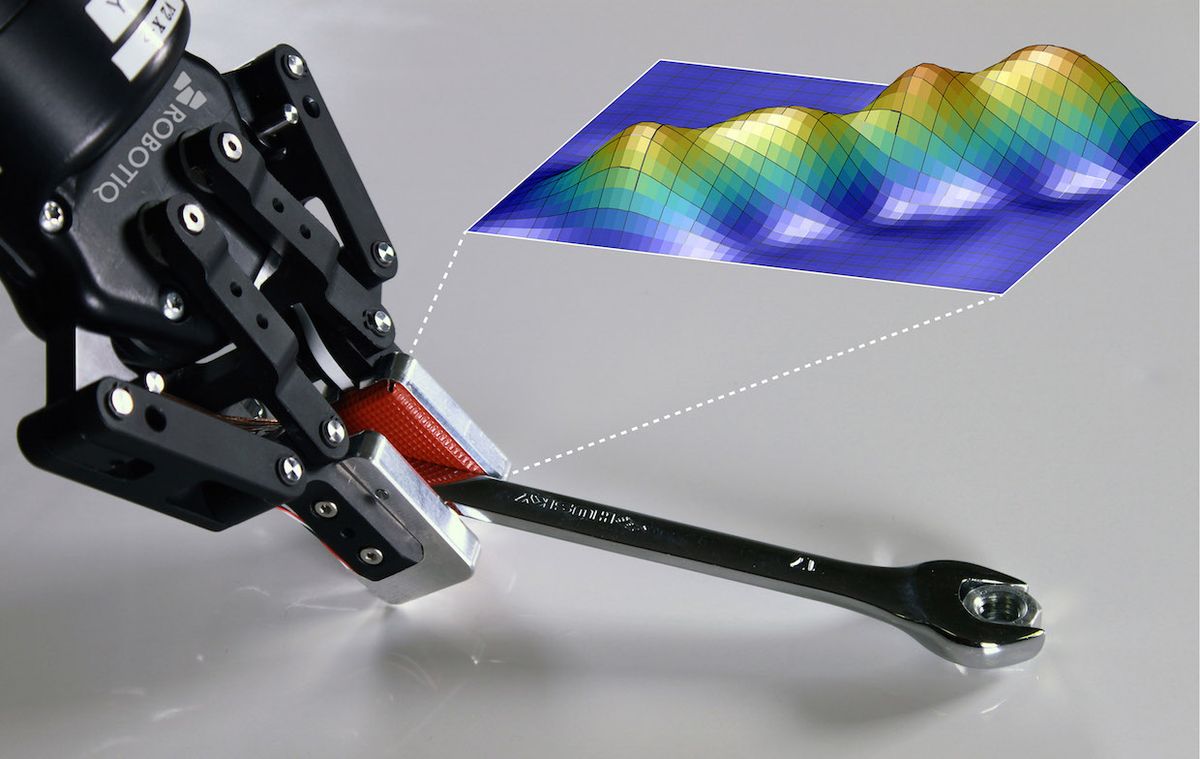

At ÉTS's CoRo Lab, my colleagues and I are building the blocks that will form the core of this new intelligence. One of the most recent developments is a machine learning algorithm that uses pressure images to predict successful and failed grasps. Developed by Deen Cockburn and Jean-Philippe Roberge, the system is an attempt to bring robots closer to human-level grasping abilities. We humans, of course, learn to recognize whether the configuration of our fingers will lead to a good grasp by using our sense of touch. Then we adjust our finger configuration until we're confident our grasp attempt will succeed. Before robots can learn to quickly adjust their configurations, they need to get better at predicting the outcome of a grasp.

This is where I believe CoRo Lab has triumphed. By combining a robotic hand from Robotiq with a UR10 manipulator from Universal Robots, and adding several multimodal tactile sensors we built in-house and a Kinect-based vision system (used only to aim at the geometrical center of each object), we built a robot that was able to pick up a variety of objects and learn from the tactile data it collected during those attempts. Ultimately, we succeeded at creating a system that correctly predicts grasp failure 83 percent of the time.

Another team at CoRo Lab, this time led by Jean-Philippe Roberge, focused specifically on slippage detection. We humans can quickly recognize when an object is slipping out of our grasp because our fingers contain fast-adapting mechanoreceptors, which are receptors in our skin that detect rapid changes in pressure and vibration. Object slippage generates vibrations on the surface of one's hand, so the researchers fed vibration images (spectrograms) instead of pressure images into their machine learning algorithm. Using the same robot as the one from the grasp-prediction experiment, their system was able to learn the features of vibration images that correspond with object slippage, and it identified object slippage with 92 percent accuracy.
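To make the idea of a vibration image concrete, here is a minimal sketch of how a spectrogram can be computed from a one-dimensional vibration signal, using only Python's standard library. The window size, hop length, Hann taper, and synthetic test signal are assumptions made for illustration; they are not the parameters or data used in the CoRo Lab experiments.

```python
import cmath
import math


def spectrogram(signal, window_size=16, hop=8):
    """Short-time Fourier magnitudes of a 1D signal.

    Returns a list of frames; each frame lists the magnitudes of
    frequency bins 0..window_size // 2 for one time window.
    """
    # Hann taper softens the edges of each window to reduce spectral leakage.
    taper = [0.5 * (1 - math.cos(2 * math.pi * n / (window_size - 1)))
             for n in range(window_size)]
    frames = []
    for start in range(0, len(signal) - window_size + 1, hop):
        window = [s * w for s, w in zip(signal[start:start + window_size], taper)]
        frame = []
        for k in range(window_size // 2 + 1):
            # Direct discrete Fourier transform for bin k.
            coeff = sum(x * cmath.exp(-2j * math.pi * k * n / window_size)
                        for n, x in enumerate(window))
            frame.append(abs(coeff))
        frames.append(frame)
    return frames


def dominant_bin(frame):
    """Index of the frequency bin with the largest magnitude."""
    return max(range(len(frame)), key=lambda k: frame[k])


# Synthetic "vibration": a pure tone that completes 4 cycles per 16 samples.
vibration = [math.sin(2 * math.pi * 4 * n / 16) for n in range(64)]
frames = spectrogram(vibration)
peaks = [dominant_bin(f) for f in frames]
```

Stacking the frames side by side gives a time-frequency image. For this synthetic tone every frame peaks in bin 4, whereas real slippage would appear as a broader pattern that changes over time, which is the kind of structure a learning algorithm can pick up on.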

It may seem easy to get a robot to notice slippage, since slippage is just a series of vibrations. But how do you get the robot to learn the difference between vibrations that occur because the object is about to slip out of the robot's grasp, and vibrations that occur because the robot is dragging the object across another surface (like a table)? Don't forget that there are also the tiny vibrations caused by the robot's arm as it moves. These are three different events that generate similar signals, but require very different reactions from the robot. Distinguishing between these events is where machine learning comes in.

When it comes to machine learning, the two CoRo teams have one thing in common: neither of them uses handcrafted features for its machine learning algorithm. In other words, the system itself determines what is relevant for classifying slippage (or for predicting the grasp outcome, for the grasp-prediction experiment), instead of relying on the researchers' guesses about what the best indicator might be.

“High-level features” in the past were always handcrafted, meaning the researchers would manually select the features that could help distinguish between types of slippage events (or between a good and bad grasp). For instance, they might decide that a pressure image showing the robot had grasped only the tip of the item would make for a bad grasp. But it's actually far more accurate to let the robot learn on its own, since researchers' guesses don't always match reality.

Sparse coding is particularly useful for this purpose. It's an unsupervised feature learning algorithm that works by building a sparse dictionary, which is then used to represent new data. First, the spectrograms (or raw pressure images) are fed to the sparse coding algorithm, which outputs a dictionary consisting of representations of high-level features. Then, when new data arrives from subsequent grasp attempts, the dictionary is used to transform the new raw data into compact representations called sparse vectors. Finally, the sparse vectors are grouped by cause of vibration (or into successful and failed grasp outcomes).
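The last two steps of that pipeline can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the CoRo Lab implementation: the dictionary below is written by hand as a stand-in for one that would be learned from data, the encoder is plain matching pursuit (one common way to compute sparse vectors, chosen here for brevity), the grouping step is a simple nearest-neighbor lookup, and the signals and labels are invented.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    """Scale a vector to unit length, as matching pursuit expects of atoms."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


# Hypothetical dictionary: stands in for atoms learned from real spectrograms.
DICTIONARY = [
    normalize([1, 1, 0, 0]),
    normalize([0, 0, 1, 1]),
    normalize([1, 0, 0, 1]),
]


def sparse_encode(signal, dictionary, max_atoms=2, tol=1e-9):
    """Greedy matching pursuit: at most max_atoms nonzero coefficients."""
    residual = list(signal)
    codes = [0.0] * len(dictionary)
    for _ in range(max_atoms):
        # Pick the atom most correlated with what is still unexplained.
        scores = [dot(residual, atom) for atom in dictionary]
        best = max(range(len(dictionary)), key=lambda i: abs(scores[i]))
        if abs(scores[best]) < tol:
            break  # nothing meaningful left to explain
        codes[best] += scores[best]
        residual = [r - scores[best] * a
                    for r, a in zip(residual, dictionary[best])]
    return codes


def classify(codes, labelled_codes):
    """Return the label of the nearest labelled sparse vector."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(labelled_codes, key=lambda item: squared_distance(codes, item[0]))[1]


# Invented training examples: raw signal -> cause of vibration.
labelled = [
    (sparse_encode([2, 2, 0, 0], DICTIONARY), "slip"),
    (sparse_encode([0, 0, 3, 3], DICTIONARY), "arm_motion"),
]

# A new, slightly noisy measurement is encoded, then grouped by its code.
new_codes = sparse_encode([1.8, 2.1, 0.1, 0.0], DICTIONARY)
label = classify(new_codes, labelled)
```

The point of the sparse vector is that most of its entries are zero, so the few nonzero ones act as a compact signature of the event. In practice the dictionary would hold many more atoms and would be learned from the recorded data rather than written by hand.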

The CoRo Lab teams are currently testing ways to have the sparse coding algorithm automatically update itself, so that each grasp attempt will help the robot get better at making predictions. The idea is that eventually the robot will be able to use this information to adjust its behavior during the grasp. Ultimately, this research is a great example of how tactile and visual intelligence can work together to help robots learn how to grasp different objects.

The future of tactile intelligence

The key takeaway from this research is not that vision should be left by the wayside. Vision still makes crucial contributions to grasping tasks. However, now that artificial vision has reached a certain level of development, it could be better to focus on developing new aspects of tactile intelligence, rather than continue to emphasize vision alone so strongly.

CoRo Lab's Roberge compares the potential of researching vision vs. tactile intelligence to Pareto's 80-20 rule: now that the robotics community has mastered the first 80 percent of visual intelligence, perfecting the last 20 percent of vision is hard to do and won't contribute much to object-manipulation tasks. By contrast, roboticists are still working on the first 80 percent of tactile sensing. So perfecting this first 80 percent will be relatively easy to do, and it has the potential to make a tremendous contribution to robots' grasping abilities.

We may still be a long way off from the day when a robot can identify any object through touch, let alone clean your room for you—but when that day comes, we'll surely have tactile intelligence researchers to thank.

Vincent Duchaine is a professor at École de Technologie Supérieure (ÉTS) in Montreal, Canada, where he leads the haptic and mechatronics group at the Control and Robotics (CoRo) Lab and holds the ÉTS Research Chair in interactive robotics. Duchaine's research interests include grasping, tactile sensing, and human-robot interaction. He is also a co-founder of Robotiq, which makes tools for agile automation such as the three-finger gripper described in this article.