At IROS in Madrid a few weeks ago, Marc Raibert showed two new videos during his keynote presentation. One was of Atlas doing parkour, which showed up on YouTube last week, and the other was just a brief clip of SpotMini dancing, which Raibert said was a work in progress. Today, Boston Dynamics posted a new video of SpotMini (which they’re increasingly referring to as simply “Spot”) dancing to Uptown Funk, and frankly displaying more talent than the original human performance.

The twerking is cute, but gets a little weird when you realize that SpotMini’s got some eyeballs back there as well.

While we don’t know exactly what’s going on in this video (as with many of Boston Dynamics’ videos), my guess would be that these are a series of discrete, scripted behaviors that are played in sequence. They’re likely interchangeable and adaptable to different beats, but (again, as with many of their videos) it’s not clear to what extent these dancing behaviors are autonomous, or how the robot would react to a different song.

With that in mind, if I were writing a paper about dancing robots, I’d probably say something like:

> The development of robots that can dance has received considerable attention. However, they are often either limited to a pre-defined set of movements and music or demonstrate little variance when reacting to external stimuli, such as microphone or camera input.

I’m not writing a paper about dancing robots, because I’m not in the least bit qualified, but the folks at ANYbotics and the Robotics Systems Lab at ETH Zurich definitely are, and that was the intro to their 2018 IROS paper on “Real-Time Dance Generation to Music for a Legged Robot.” Why write a paper on this? Why is teaching ANYmal to dance important? It’s because people like dancing, of course, and we want people to like robots, too!

> Dance, as a performance art form, has been part of human social interaction for multiple millennia. It helps us express emotion, communicate feelings and is often used as a form of entertainment. Consequently, this form of interaction has often been attempted to be imitated by robots.

> Our goal with this work is to bridge the gap between the ability to react to external stimuli (in this case music), and the execution of dance motions that are both synchronized to the beat, and visually pleasing and varied.

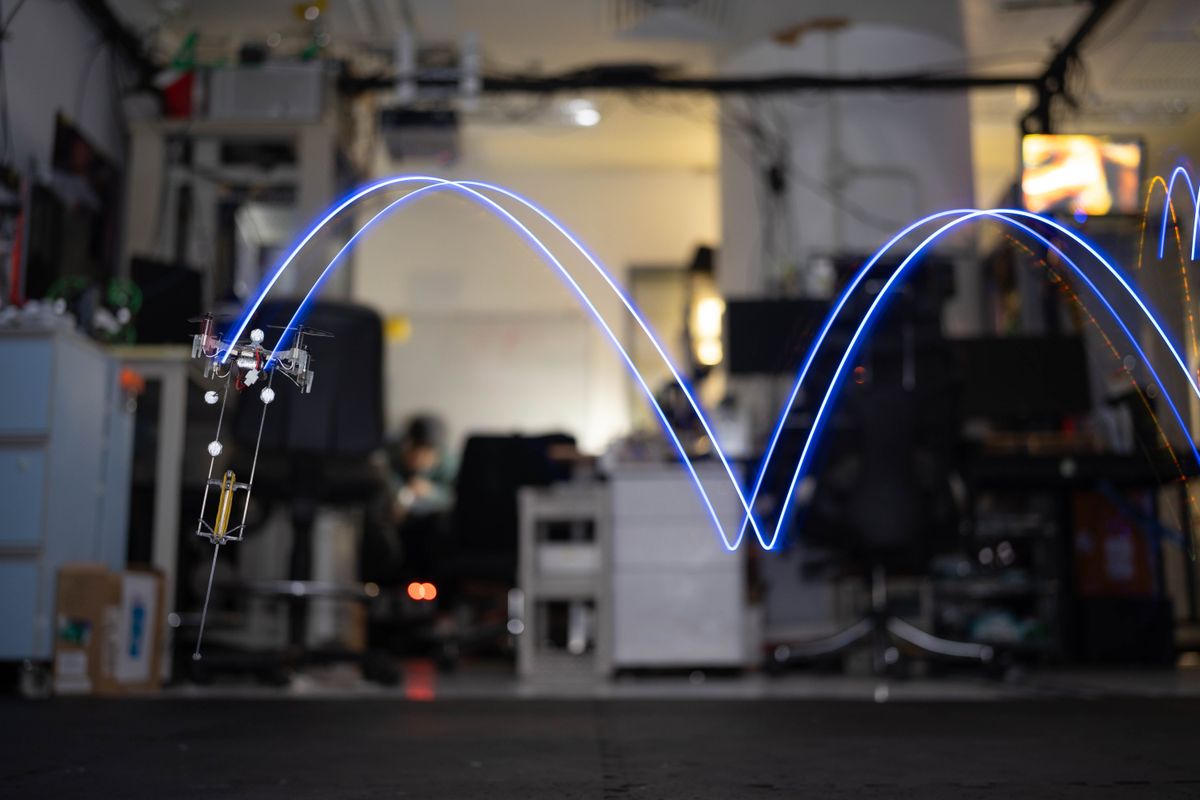

The first step in all of this is a beat tracker, which requires the robot to listen to the music for between 1 and 8 seconds, depending on how dominant the beat is. Once the beat is detected, a dance choreographer program assembles a routine by choosing dance motion primitives from a library. Each primitive carries parameters such as repeatability, duration, trajectory amplitude, and tempo range, which help the program keep the choreography interesting. The idea is to emulate improvisation, in the same way that human dancers adaptively string together a series of different moves with transitions in between.
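As a rough illustration of how a choreographer might use those parameters, here is a minimal Python sketch. It assumes a hypothetical `Primitive` record whose fields (`duration_beats`, `max_repeats`, `amplitude`, `min_bpm`, `max_bpm`) stand in for the duration, repeatability, trajectory amplitude, and tempo range mentioned above. It simply filters the library by tempo and by how many times a move has just been repeated, then picks at random. The actual selection logic in the ETH Zurich system isn’t spelled out here, so treat this as a toy, not their algorithm; the move names and numbers in `LIBRARY` are made up.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Primitive:
    """Hypothetical dance motion primitive; field names are illustrative only."""
    name: str
    duration_beats: int   # beats one execution spans (unused by the selector here)
    max_repeats: int      # "repeatability": max consecutive repetitions allowed
    amplitude: float      # trajectory scale (unused by the selector here)
    min_bpm: float        # slowest tempo the move suits
    max_bpm: float        # fastest tempo the move can keep up with


def choose_next(library, bpm, history, rng):
    """Pick a primitive that fits the tempo and hasn't hit its repeat limit."""
    candidates = []
    for prim in library:
        if not (prim.min_bpm <= bpm <= prim.max_bpm):
            continue  # outside this move's tempo range
        # Count how many times this primitive ends the history consecutively.
        streak = 0
        for past in reversed(history):
            if past != prim.name:
                break
            streak += 1
        if streak < prim.max_repeats:
            candidates.append(prim)
    if not candidates:
        raise ValueError(f"no primitive fits {bpm} BPM")
    return rng.choice(candidates)


def build_choreography(library, bpm, n_moves, seed=0):
    """Randomly string together n_moves primitive names, emulating improvisation."""
    rng = random.Random(seed)  # seeded so a routine can be reproduced
    history = []
    for _ in range(n_moves):
        history.append(choose_next(library, bpm, history, rng).name)
    return history


# Made-up library for illustration.
LIBRARY = [
    Primitive("head_bob", 1, 4, 0.05, 60, 180),
    Primitive("side_step", 2, 2, 0.10, 80, 140),
    Primitive("twist", 2, 1, 0.20, 90, 130),
]

print(build_choreography(LIBRARY, bpm=115, n_moves=8))
```

In this sketch, a fast song (say 170 BPM) leaves only `head_bob` available, so the selector runs out of options after four repeats and raises an error; a real system would presumably need a fallback or a larger library.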

Once the robot has an idea for the dance moves that it wants to perform, the really tricky bit is performing those moves so that they land properly on the beat. For example, depending on how the robot’s limbs are positioned, the time it needs to take a step in a particular direction can change. That means the robot has to predict how long each dance move will take to execute and plan its timing accordingly, compensating for the delay caused by actuators and motion and physics and all that stuff. ANYmal’s talented enough that it’s able to keep the beat even when it’s being shoved around, or when the surface that it’s standing on changes abruptly.
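To make the timing problem concrete, here is a minimal Python sketch of the scheduling arithmetic. It assumes a steady tempo already estimated by the beat tracker, the time of one detected beat, a predicted move duration supplied by some motion model, and a fixed actuator latency. The names `next_beat_time`, `schedule_move`, `predicted_duration`, and `latency` are hypothetical, and this is not the paper’s method; it only shows why the prediction matters: to land on a beat, the command has to go out early by the predicted duration plus the latency.

```python
import math


def next_beat_time(now, first_beat, bpm):
    """Time of the first beat strictly after `now`, assuming a steady tempo."""
    period = 60.0 / bpm
    k = math.floor((now - first_beat) / period) + 1
    return first_beat + max(k, 0) * period


def schedule_move(now, first_beat, bpm, predicted_duration, latency):
    """Return (start_time, target_beat) so a move's accent lands on a beat.

    predicted_duration: hypothetical estimate of how long the move takes from
        command to its accent (e.g. a foot touchdown), given the current pose.
    latency: assumed fixed delay between issuing a command and motion starting.
    """
    period = 60.0 / bpm
    target = next_beat_time(now, first_beat, bpm)
    start = target - predicted_duration - latency
    # Too late to start for this beat? Aim for the next one instead.
    while start < now:
        target += period
        start += period
    return start, target


print(schedule_move(now=10.0, first_beat=0.0, bpm=120,
                    predicted_duration=0.3, latency=0.05))
```

If the predicted duration changes (because the limbs are in a different position, or the robot just got shoved), the start time shifts with it while the target beat stays put, which is the compensation the paragraph above describes.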

> We have successfully built the foundation for a fully autonomous, improvising and synchronized dancing robot. Nevertheless, the robot is still limited in its ability to entertain for a long duration and appears robot-like, due to its still limited variation in dance choreography. Future work can be done to achieve our ultimate goal of building a system, which outputs natural human-like dance, with great variations in movement and seamless transitions from one song to another.

Can SpotMini compete with this? We hope to find out, on the first season of Dancing with the Robots, which isn’t a reality show yet but totally should be.

“Real-Time Dance Generation to Music for a Legged Robot,” by Thomas Bi, Peter Fankhauser, Dario Bellicoso, and Marco Hutter from ETH Zurich was presented earlier this month at IROS 2018 in Madrid, Spain, where it won the JTCF Novel Technology Paper Award for Amusement Culture.

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.