Robots are already capable of using known relationships to organize objects in a home or office, but those relationships are between objects themselves, not objects and humans. This is a problem, since most of the stuff that needs to be organized in homes and offices is designed to be structured in such a way that humans can interact with it. Ashutosh Saxena's lab at Cornell is teaching robots to use their imaginations a little bit, to try and picture how we'd want them to organize our lives.

To understand the problem here, consider what a robot might do if you asked it to tidy up your living room and it found your TV remote lying on the floor. Let's say that the robot knows what a TV remote is, and that it's related to the TV, so as far as the robot is concerned, putting the TV remote next to the TV is the right thing to do. This isn't a bad way to go, but a better way to do it would be to consider what a human would prefer: the remote on a table near the couch, for example.

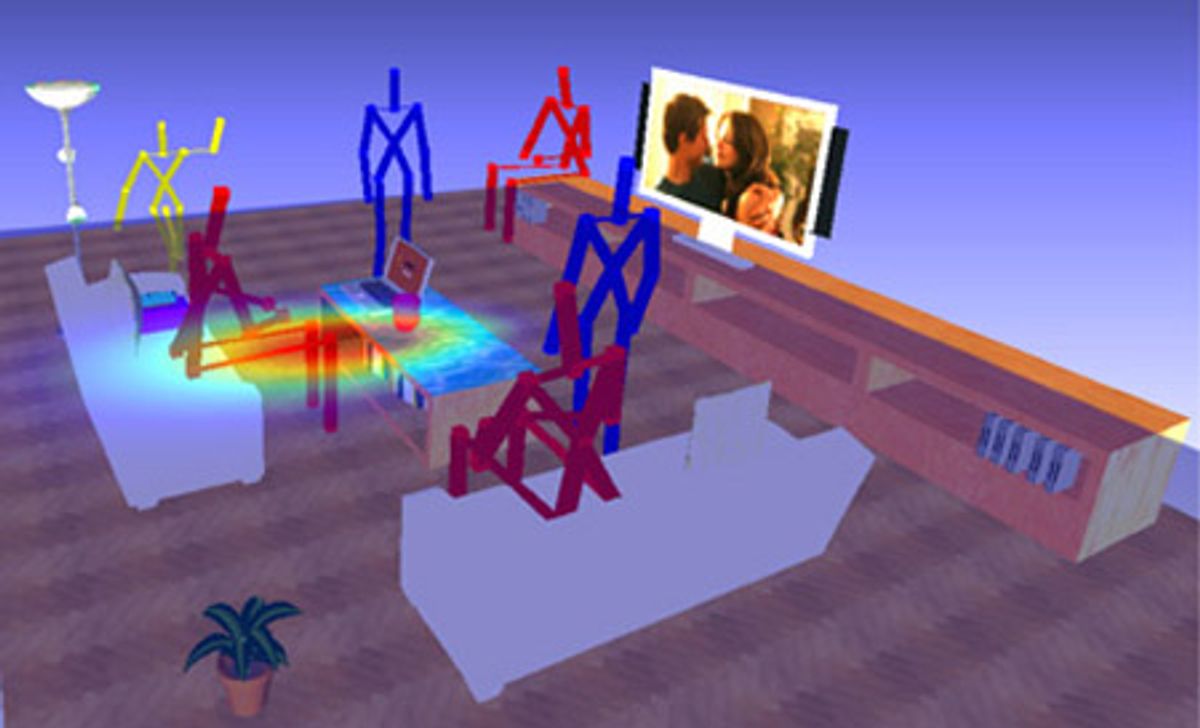

Essentially, what Saxena's group is doing is teaching robots to use their imaginations by placing virtual humans in the environment that they want to organize, and then figuring out what those virtual humans are likely to do. For example, the image above represents a 3D scan of a living room, including a bunch of different objects that humans like to interact with (the TV, a laptop, a mug, etc.). A robot (or more accurately, a computer algorithm) then "imagines" a bunch of human figures in all sorts of different positions. The relevance of each position is determined by its "usability cost": in the above image, the seated position facing the TV has a low usability cost because a human there can easily view the TV, access the laptop, and grasp the mug without having to move very much. What this tells a robot is that the seated position is a place where a human is likely to want to sit, and the robot can then determine where it should place objects based on how a human sitting there might want to use them.
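To make the idea a bit more concrete, here's a toy sketch of scoring imagined human positions by a usability cost. This is not the researchers' actual model: the object coordinates, the preferred distances, the grid of candidate positions, and the function names `usability_cost` and `best_pose` are all made up for illustration, and the sketch ignores which way the person is facing.

```python
import math

# Hypothetical object positions (x, y) in meters; illustrative values only.
objects = {"tv": (5.0, 9.0), "laptop": (5.0, 5.5), "mug": (5.5, 5.0)}

# Assumed comfortable interaction distance for each object, in meters.
# These numbers are guesses for the sketch, not values from the paper.
preferred_distance = {"tv": 4.0, "laptop": 0.5, "mug": 0.5}


def usability_cost(pose, objects, preferred_distance):
    """Sum, over all objects, of how far each one sits from its
    preferred distance to the imagined human at `pose`."""
    px, py = pose
    cost = 0.0
    for name, (ox, oy) in objects.items():
        distance = math.hypot(ox - px, oy - py)
        cost += abs(distance - preferred_distance[name])
    return cost


def best_pose(candidates, objects, preferred_distance):
    """Return the candidate position with the lowest usability cost."""
    return min(
        candidates,
        key=lambda pose: usability_cost(pose, objects, preferred_distance),
    )


# "Imagine" a human at every whole-meter point of a 10 x 10 meter room.
candidates = [(float(x), float(y)) for x in range(11) for y in range(11)]
pose = best_pose(candidates, objects, preferred_distance)
print(pose, usability_cost(pose, objects, preferred_distance))
```

With these made-up numbers, the lowest-cost spot is the one where the TV is at viewing distance and the laptop and mug are both within arm's reach. A more faithful version would learn the preferences from data instead of hard-coding them.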

Of course, all of this depends on a robot already knowing how humans like to use things, and to teach robots that, the researchers had some volunteers manually place virtual objects in 3D scenes and then let their human-imagining algorithm analyze those scenes. Given a sample scene (10 x 10 meters) and imagining a person standing in the middle of the room and facing to the right, here's how the algorithm decided to place a bunch of different objects:

Not bad, right? The TV is next to the far wall, while the remote is at the person's side. Decorations can be wherever it's easy to see them, while the laptop needs to be close and the mouse needs to be just to the right of the laptop.

In testing, this new human-imagining algorithm proved to be significantly better than anything else short of a real human. Volunteers scored rooms that the algorithm organized at a 4.3 (out of 5), while the next best method that the researchers tested averaged only a 3.7. A paper on the subject, "Learning Object Arrangements in 3D Scenes using Human Context," by Yun Jiang, Marcus Lim, and Ashutosh Saxena, is set to appear at the International Conference on Machine Learning later this year.

[ Cornell Personal Robotics ] via [ Chronicle ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.