How far away could an artificial brain be? Perhaps a very long way off still, but a working analogue to the essential element of the brain’s networks, the synapse, appears closer at hand now.

That’s because a device that draws inspiration from batteries now appears surprisingly well suited to run artificial neural networks. Called electrochemical RAM (ECRAM), it is giving traditional transistor-based AI an unexpected run for its money and is quickly moving toward the head of the pack in the race to develop the perfect artificial synapse. Researchers reported a string of advances at this week’s IEEE International Electron Devices Meeting (IEDM 2022) and elsewhere, including ECRAM devices that use less energy, hold memory longer, and take up less space.

The artificial neural networks that power today’s machine-learning algorithms are software that models a large collection of electronics-based “neurons,” along with their many connections, or synapses. Instead of representing neural networks in software, researchers think that faster, more energy-efficient AI would result from representing the components, especially the synapses, with real devices. This concept, called analog AI, requires a memory cell that combines a whole slew of difficult-to-obtain properties: it needs to hold a large enough range of analog values, switch between different values reliably and quickly, hold its value for a long time, and be amenable to manufacturing at scale.
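
To make the idea of "representing synapses with real devices" concrete, here is a minimal sketch of how an array of analog memory cells could carry out the weighted sums at the heart of a neural network. It assumes an idealized crossbar layout in which each cell's conductance plays the role of a synaptic weight. The function name, array size, and values are hypothetical and do not describe any device in this article.

```python
# Toy model of analog vector-matrix multiplication on a crossbar of memory cells.
# All names and numbers are illustrative assumptions, not measured device data.

def crossbar_currents(conductances, voltages):
    """Return the current collected on each column of an idealized crossbar.

    conductances[i][j] is the conductance (siemens) of the cell joining
    input row i to output column j; voltages[i] is the voltage on row i.
    Each cell contributes I = G * V (Ohm's law), and the currents on a
    shared column wire add up (Kirchhoff's current law).
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need one input voltage per row")
    return [
        sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Hypothetical 2-by-3 array: conductances in siemens, inputs in volts.
G = [[1.0e-6, 2.0e-6, 0.5e-6],
     [3.0e-6, 1.0e-6, 2.0e-6]]
V = [0.2, 0.1]
I = crossbar_currents(G, V)  # one output current per column, in amperes
```

In this idealized picture the multiply-and-add happens in the physics of the array rather than in software, which is why the stored analog value in each cell needs to be precise and stable.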

Most types of memory are well adapted to store digital values but are too noisy to reliably store analog. But back in 2015, a group of researchers at Sandia National Laboratories led by Alec Talin realized that the answer was right in front of them: the state of charge of a battery. “Fundamentally, a battery works by moving ions between two materials. As the ion moves between the two materials, the battery stores and releases energy,” says Yiyang Li, now a professor of materials science and engineering at the University of Michigan. “We found that we can use the same process for storing information.”

In other words, the number of ions in the channel determines the stored analog value. Theoretically, a difference of a single ion could be detectable. ECRAM puts this idea to work by using a third terminal, the gate, to control how much charge is in the “battery.”

Picture a battery with a negative terminal on the left, an ion-doped channel in the middle, and a positive terminal on the right. The conductance between the positive and negative terminals, set by the number of ions in the channel, is what determines the analog value stored in the device. Above the channel, there’s an electrolyte barrier that lets ions (but not electrons) through. On top of the barrier is a reservoir layer, containing a supply of mobile ions. A voltage applied to this reservoir serves as a “gate,” forcing ions through the electrolyte barrier into the channel, or the reverse. These days, switching to any desired stored value takes remarkably little time.
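
The write-and-read cycle described above can be sketched as a toy model. This is an illustration under simplifying assumptions, not a description of any real device: it assumes each gate pulse moves the channel by exactly one evenly spaced conductance level, that more ions in the channel means higher conductance, and that the range is bounded. The class name `ToyECRAMCell` and all parameter values are hypothetical.

```python
# Toy model of a three-terminal ECRAM-style cell.
# Assumptions (not from the article): linear, symmetric, noise-free steps,
# and conductance that rises as ions enter the channel.

class ToyECRAMCell:
    def __init__(self, g_min=1.0, g_max=2.0, n_states=100):
        if n_states < 2:
            raise ValueError("need at least two conductance levels")
        if g_max <= g_min:
            raise ValueError("g_max must exceed g_min")
        self.g_min = g_min
        self.n_states = n_states
        self.step = (g_max - g_min) / (n_states - 1)
        self.level = 0  # integer level index; 0 means channel fully depleted

    def pulse(self, count=1, polarity=+1):
        """Apply `count` gate pulses.

        polarity=+1 pushes ions from the reservoir into the channel;
        polarity=-1 pulls them back out. The level saturates at either end.
        """
        if polarity not in (+1, -1):
            raise ValueError("polarity must be +1 or -1")
        new_level = self.level + polarity * count
        self.level = max(0, min(self.n_states - 1, new_level))

    def read(self):
        """Return the channel conductance (arbitrary units) without changing it."""
        return self.g_min + self.level * self.step


cell = ToyECRAMCell(g_min=1.0, g_max=2.0, n_states=11)  # steps of 0.1
cell.pulse(3)                 # write: three pulses into the channel
after_write = cell.read()
cell.pulse(2, polarity=-1)    # partially erase: two pulses back out
after_erase = cell.read()
```

Writing goes through the gate while reading goes through the channel, which is what separates this three-terminal design from two-terminal memories. Real devices deviate from this idealized picture in step size, symmetry, and noise.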

“These devices responded much faster than the brain synapse,” says Jesus del Alamo, professor of engineering and computer science at MIT. “As a result, they give us the possibility of essentially being able to do a brainlike computation, artificial-intelligence computation, significantly faster than the brain, which is what we really need to realize the promise of artificial intelligence.”

Recent developments are rapidly bringing ECRAM closer to having all the qualities required for an ideal analog memory.

Lower energy

Ions don’t get any smaller than a single proton. Del Alamo’s group at MIT has opted for this smallest ion as their information carrier, because of its unparalleled speed. Just a few months ago, they demonstrated devices that move ions around in mere nanoseconds, about 10,000 times as fast as synapses in the brain. But fast was not enough.

“We can see the device responding very fast to [voltage] pulses that are still a little bit too big,” del Alamo says, “and that’s a problem. We want to be able to also get the devices to respond very fast with pulses that are of lower voltage because that is the key to energy efficiency.”

In research reported this week at IEEE IEDM 2022, the MIT group dug down into the details of their device’s operation with the first real-time study of current flow. They discovered what they believe is a bottleneck that prevents the devices from switching at lower voltages: The protons traveled easily across the electrolyte layer but needed an extra voltage push at the interface between the electrolyte and the channel. Armed with this knowledge, researchers believe they can engineer the material interface to reduce the voltage required for switching, opening the door to higher energy efficiency and scalability, says del Alamo.

Longer memory

Once programmed, these devices usually hold their resistance state for a few hours. Researchers at Sandia National Laboratories and the University of Michigan have teamed up to extend this retention time to 10 years. They published their results in the journal Advanced Electronic Materials in November.

To retain memory for this long, the team, led by Yiyang Li, opted for the heavier oxygen ion instead of the proton in the MIT device. Even with a more massive ion, what they observed was unexpected. “I remember one day, while I was traveling, my graduate student Diana Kim showed me the data, and I was astounded, thinking something was incorrectly done,” recalls Li. “We did not expect it to be so nonvolatile. We later repeated this over and over, before we gained enough confidence.”

They speculate that the nonvolatility comes from their choice of material, tungsten oxide, and the way oxygen ions arrange themselves inside it. “We think it’s due to a material property called phase separation that allows the ions to arrange themselves such that there’s no driving force pushing them back,” Li explains.

Unfortunately, this long retention time comes at the expense of switching speed: Li’s device takes minutes to switch. But, the researchers say, having a physical understanding of how the retention time is achieved enables them to look for other materials that combine long memory with faster switching.

Tinier footprint

The added third terminal on these devices makes them bulkier than competing two-terminal memories, limiting scalability. To help shrink the devices and pack them efficiently into an array, researchers at Pohang University of Science and Technology, in South Korea, laid them on their side. This allowed the researchers to reduce the devices to a mere 30-by-30-nanometer footprint, an area about one-fifth as large as previous generations, while retaining switching speed and even improving on the energy efficiency and read time. They also reported their results this week at IEEE IEDM 2022.
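
A quick back-of-the-envelope calculation puts those footprint figures in perspective. The sketch below uses only the 30-by-30-nanometer size and the one-fifth ratio quoted above. The density figure is an idealized upper bound that assumes cells are packed edge to edge with no room for wiring or spacing, which no real array achieves.

```python
# Back-of-the-envelope footprint arithmetic from the figures quoted in the text.
# The density value is an idealized upper bound, not a reported result.

NM2_PER_MM2 = 10**12  # 1 mm = 10^6 nm, so 1 mm^2 = 10^12 nm^2


def footprint_nm2(side_nm):
    """Area of a square cell with the given side length, in square nanometers."""
    return side_nm * side_nm


def max_cells_per_mm2(area_nm2):
    """Whole cells that fit in 1 mm^2 if packed edge to edge (no wiring)."""
    return NM2_PER_MM2 // area_nm2


new_area = footprint_nm2(30)                # 900 nm^2
implied_previous_area = new_area * 5        # 4,500 nm^2 if the new cell is one-fifth as large
ideal_density = max_cells_per_mm2(new_area) # roughly 1.1 billion cells per mm^2
```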

The team structured their device as one big vertical stack: The source was deposited on the bottom, the conducting channel was placed next, then the drain above it. To allow the drain to permit ions in and out of the channel, they replaced the usual semiconductor material with a single layer of graphene. This graphene drain also served as an extra barrier controlling ion flow. Above it, they placed the electrolyte barrier, and finally the ion reservoir and gate terminal on top. With this configuration, not only did performance not degrade, but the energy required to write information to the device and read it back decreased. As a result, the time required to read the state fell by a factor of 20.

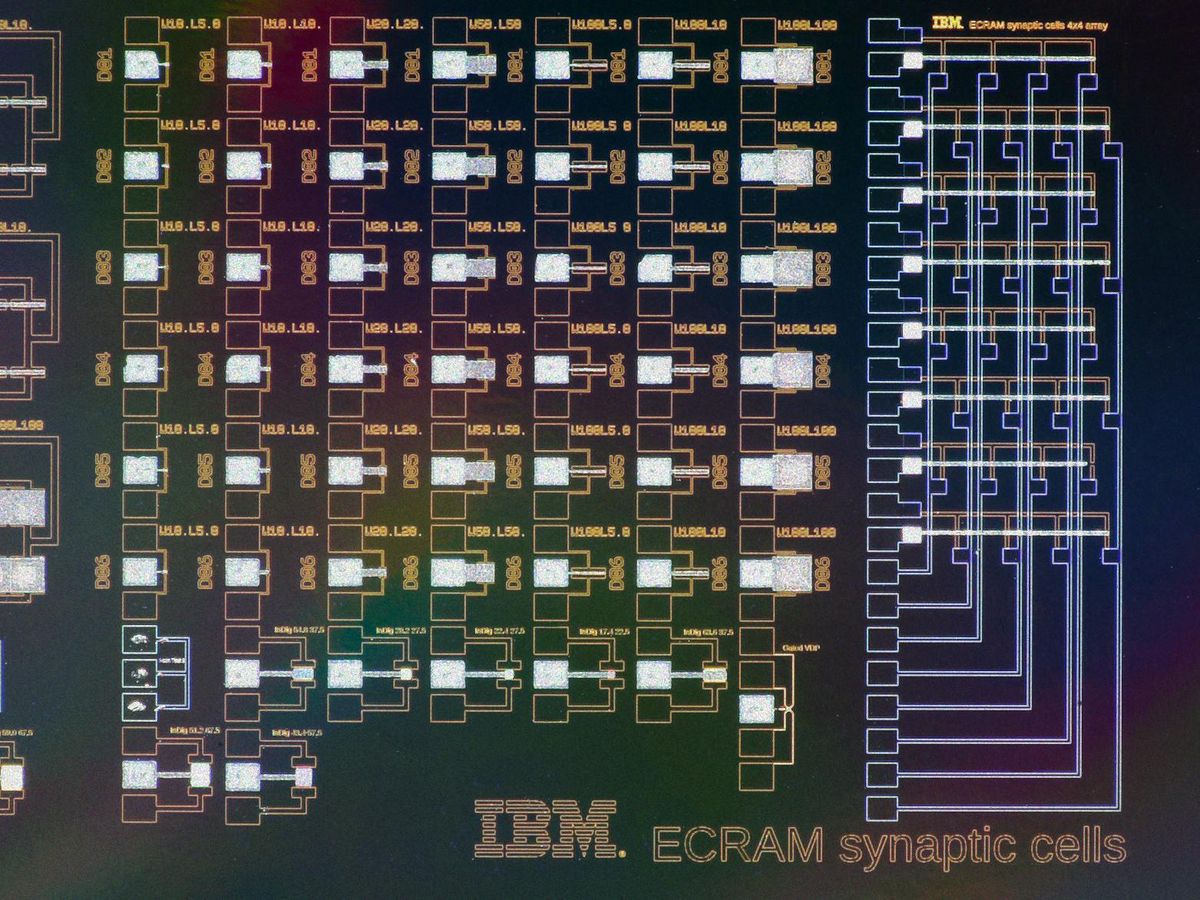

Even with all the above advances, a commercial ECRAM chip that accelerates AI training is still some distance away. The devices can now be made of foundry-friendly materials, but that’s only part of the story, says John Rozen, program director at the IBM Research AI Hardware Center. “A critical focus of the community should be to address integration issues to enable ECRAM devices to be coupled with front-end transistor logic monolithically on the same wafer, so that we can build demonstrators at scale and establish if it is indeed a viable technology.”

Rozen’s team at IBM is working toward this manufacturability. In the meantime, they’ve created a software tool that lets users experiment with different emulated analog AI devices, including ECRAM, to train neural networks and evaluate their performance.

This article appears in the February 2023 print issue as “Better AI Through Chemistry.”

Dina Genkina is an associate editor at IEEE Spectrum focused on computing and hardware. She holds a PhD in atomic physics and lives in Brooklyn.